Fix simple mistakes in ARC-AGI, etc.

post by Oleg Trott (oleg-trott) · 2024-07-09T17:46:50.364Z · LW · GW · 9 commentsContents

Generalizations: None 9 comments

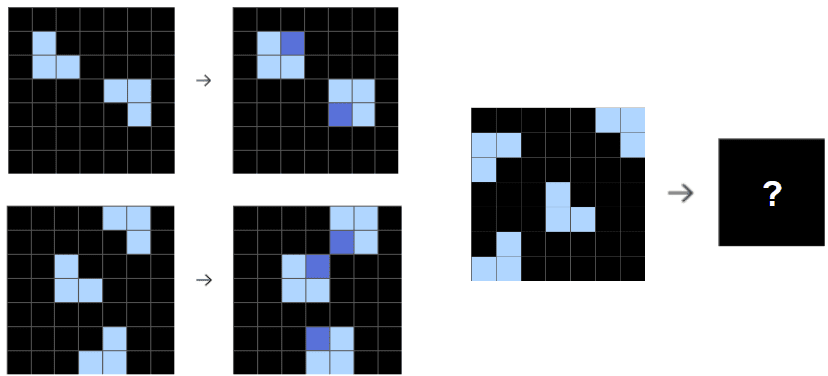

ARC-AGI is a diverse artificial dataset that aims to test general intelligence. It's sort of like an IQ test that's played out on rectangular grids.

Last month, @ryan_greenblatt [LW · GW] proposed an approach [LW · GW] that used GPT-4o to generate about 8000 Python programs per task. It then selected the programs that worked on the "training" examples given, and ran them to actually solve the "test" query.

His approach achieved 72% accuracy on the part of the benchmark that humans have been measured to get 85% accuracy on.

I have an idea for an improvement, on top of this approach. It should be relatively cheap. I don't have time to work on this myself, but I hope someone else runs with it, hence this post.

The motivation for this idea is Ryan's note

[GPT-4o] makes simple mistakes like off-by-one errors extremely often

My idea is to try to fix them automatically. I call it Program Dithering. You go through the generated Python programs, and try to perturb all integer constants in it, one at a time, and maybe several at a time.

Thus, if you try two perturbations at a time, a program that looks like this

x = 7

...

y = x + 3can become

x = 8

...

y = x + 2etc., generating a potentially large number of candidate programs without any extra GPT-4o calls. One could also consider perturbing array indexing locations in a similar way.

If off-by-one errors are extremely common, Program Dithering could fix some or many of them, and improve the overall accuracy.

Off-by-one errors seem like a general flaw, so fixing them should not be "overfitting" the benchmark.

Generalizations:

If there are other simple mistakes that GPT-4o tends to make, e.g. swapping array indexes, one can extend the approach to try to fix them also.

Other tasks, like what AlphaCode does, might find this useful too.

9 comments

Comments sorted by top scores.

comment by ryan_greenblatt · 2024-07-09T20:55:52.484Z · LW(p) · GW(p)

Seems like a reasonable idea. To implement this, I'd have to look more carefully at exactly what types of mistakes GPT-4o makes to calibrate what should/shouldn't be dithered. (Additional programs are cheap, but you can easily get a combinatorial explosion with this sort of thing.)

(I'm not currently working on ARC-AGI methods and I might not ever return to this, so don't count on me trying this!)

Replies from: privard, oleg-trott↑ comment by privard · 2024-07-12T21:34:43.500Z · LW(p) · GW(p)

Can I ask why you "might not ever return to this"? I've just recently discovered the ARC challenge and just went through Chollet's "On the Measure of Intelligence" and I'm tempted to go deeper into the rabbit hole. Just curious to know if your motivations for not returning are strictly personal or if you think this is a wild goose chase.

↑ comment by Oleg Trott (oleg-trott) · 2024-07-10T01:07:55.892Z · LW(p) · GW(p)

If you have locations that you want to perturb, then if you try a single off-by-one perturbation at a time, this adds programs. With two at a time, this adds programs.

There's a possible optimization, where you only try this on tasks where no unperturbed program was found (<28%)

EDIT: Ironically, I made an off-by-one error, which Program Dithering would have fixed: This should be

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-07-09T22:48:35.274Z · LW(p) · GW(p)

I think I'd try having the 'dithering' done by an LLM also... giving the prompt of 'this code might have an off-by-one error. Can you suggest possibilities for values that might need correcting?

Replies from: oleg-trott↑ comment by Oleg Trott (oleg-trott) · 2024-07-10T21:04:38.573Z · LW(p) · GW(p)

@ryan_greenblatt [LW · GW]'s approach also asks GPT-4o to improve its previous guesses.

These calls are expensive though.

The idea of Program Dithering is to generate many candidate programs cheaply.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-07-10T21:54:39.103Z · LW(p) · GW(p)

Agree overall, but you might be able to use a notably cheaper model (e.g. GPT-3.5) to dither.

Replies from: oleg-trott↑ comment by Oleg Trott (oleg-trott) · 2024-07-11T02:08:44.932Z · LW(p) · GW(p)

If GPT-4o made the off-by-one error, is it reasonable to expect GPT-3.5 to spot it?

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-07-11T02:51:58.907Z · LW(p) · GW(p)

No, but it doesn't need to spot errors, just note places which could plausibly be bugs.

comment by Oleg Trott (oleg-trott) · 2024-07-11T13:33:18.896Z · LW(p) · GW(p)

A variation on this:

Any expression should be considered for replacement by a slightly bigger or smaller one. For example

z = f(x**2 * y)

should be replaceable by

z = f((x**2 - 1) * y)

The generated programs are quite short. So I would guess that this multiplies their number by 100-1000, if you consider one perturbation at a time.