Emergent Abilities of Large Language Models [Linkpost]

post by aog (Aidan O'Gara) · 2022-08-10T18:02:25.360Z · 2 comments

This is a link post for https://arxiv.org/pdf/2206.07682.pdf



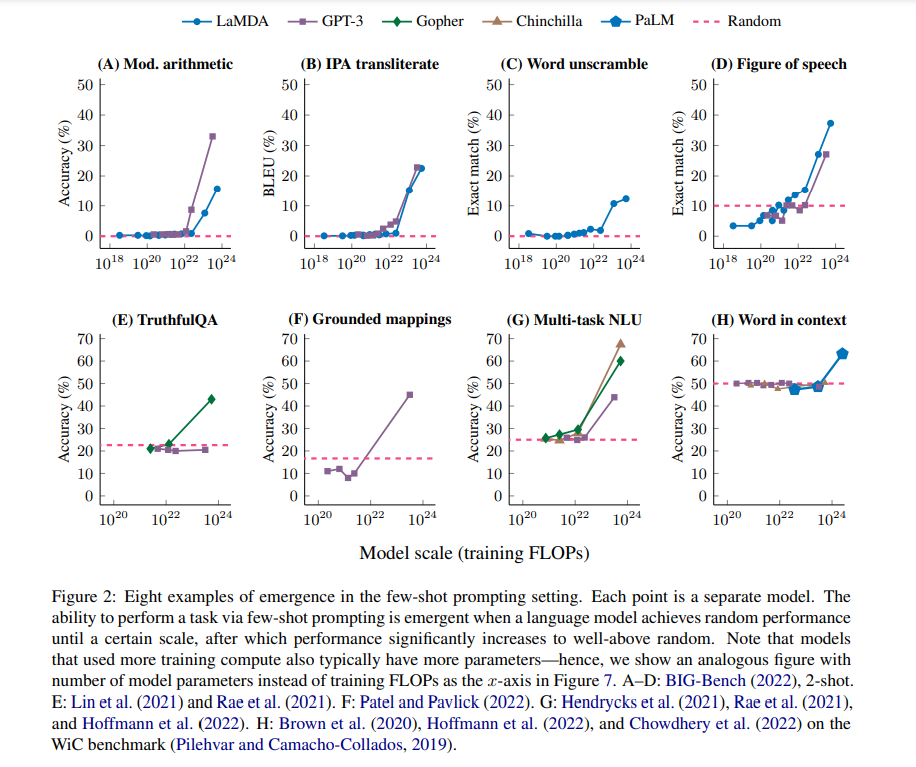

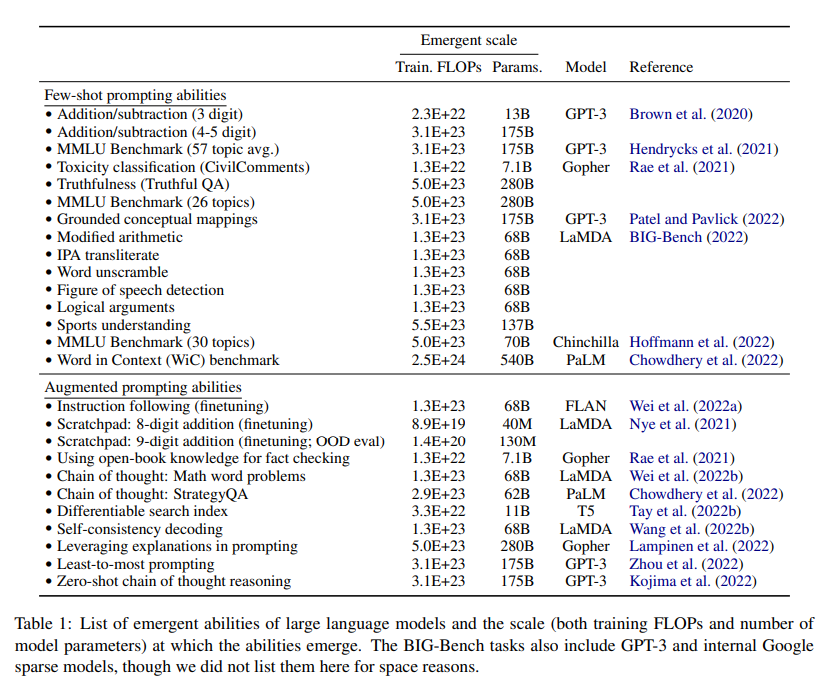

I've argued before against the view that intelligence is a single coherent concept, and that AI will someday suddenly cross the threshold of general intelligence, resulting in a hard takeoff. This paper doesn't fully resolve that debate, but it provides strong evidence that language models often show surprising jumps in capability as they are scaled up.

From the abstract:

> Scaling up language models has been shown to predictably improve performance and sample efficiency on a wide range of downstream tasks. This paper instead discusses an unpredictable phenomenon that we refer to as emergent abilities of large language models. We consider an ability to be emergent if it is not present in smaller models but is present in larger models. Thus, emergent abilities cannot be predicted simply by extrapolating the performance of smaller models. The existence of such emergence implies that additional scaling could further expand the range of capabilities of language models.

Key Figures:

Related: More is Different for AI, Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets, Yudkowsky and Christiano on Takeoff Speeds

2 comments


comment by Evan R. Murphy · 2022-08-16T19:26:29.111Z

Those are fascinating emergent behaviors, and thanks for sharing your updated view.