Is this a good way to bet on short timelines?

Question post by Daniel Kokotajlo · 2020-11-28

Contents

Answers: Dagon, adamShimi, niplav

I was mildly disappointed in the responses to my last question, so I did some thinking and came up with some answers myself. I'm not super happy with them either, and would love feedback on them, variations on them, new suggestions, etc. The ideas are:

1. I approach someone who has longer timelines than me, and I say: I'll give you $X now if you promise that, should you later update significantly in my direction, you will publicly admit I was right and give me a platform.

2. I approach someone who has longer timelines than me, and I say: I'll give you $X now if you agree to talk with me about timelines for 2 hours. I'd like to hear your reasons, and to hear your responses to mine. The talk will be recorded. Then I get one coupon which I can redeem for another 2-hour conversation with you.

3. I approach someone who has longer timelines than me, and I say: For the next 5 years, you agree to put x% of your work-hours into projects of my choosing (perhaps with some constraints, like personal fit). Then, for the rest of my life, I'll put x% of my work-hours into projects of your choosing (with similar constraints).

The problem with no. 3 is that it's probably too big an ask. Maybe it would work with someone I already get along well with and can collaborate with on projects: someone whose work I respect and who respects my work.

The point of no. 2 is to get them to update towards my position faster than they otherwise would have. This might even happen in the first two-hour conversation. (They get the same benefits from the conversation that I do, plus cash, so it should be pretty appealing for a sufficiently large X. And I also benefit from the extra information, which might help me update towards longer timelines after our talk!) The problem is that forced 2-hour conversations may not actually achieve that goal, depending on the psychology and personality of the people involved.

A variant of no. 2 would simply be to challenge people to a public debate on the topic. Then the goal would be to get the audience to update.

The point of no. 1 is to get them to give me some of their status/credibility/platform in the event that I turn out to be probably right. The problem, of course, is that it's up to them to decide whether I'm probably right, which gives them an incentive to decide that I'm not!

Answers

Answer by Dagon

A lot of your ideas suffer from problems of trust and of the value of the trade. For all of them, it's hard to do the math to calculate the conditional expected value. A lot also depends on the specific counterparty and their distribution of beliefs about timelines and impact.

Can you describe what specific timeline you want to bet on, and what even-money-equivalent longer timeline you're seeking to bet against? What timeline should make someone indifferent to your wager (so that anyone with a longer timeline, or a longer weighted-mean timeline estimate, would find it attractive to bet against you)?
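Dagon's indifference question can be made concrete with standard expected-value arithmetic for a binary bet on an event such as "milestone M happens by year Y" (M and Y are hypothetical placeholders). The Python sketch below is illustrative only: the function names and stakes are hypothetical, it ignores trust and counterparty risk, and it assumes both stakes keep their value when the bet resolves.

```python
def indifference_probability(win: float, loss: float) -> float:
    """Credence in the event at which a bettor who gains `win` if the event
    happens and pays `loss` if it doesn't has zero expected value."""
    if win <= 0 or loss <= 0:
        raise ValueError("stakes must be positive")
    return loss / (win + loss)


def expected_value(p: float, win: float, loss: float) -> float:
    """Expected gain for the side betting that the event happens,
    given credence p that it does."""
    return p * win - (1 - p) * loss


def bet_is_mutually_attractive(p_short: float, p_long: float,
                               win: float, loss: float) -> bool:
    """True if both sides expect to gain from the same stakes.

    p_short -- short-timelines bettor's credence that the event happens by Y
               (they gain `win` if it does, pay `loss` if it doesn't)
    p_long  -- long-timelines counterparty's credence in the same event
               (they take the opposite side of the same stakes)
    """
    threshold = indifference_probability(win, loss)
    # The short side profits in expectation above the threshold and the
    # long side below it, so a mutually attractive bet needs both.
    return p_long < threshold < p_short


if __name__ == "__main__":
    print(indifference_probability(100, 100))
    print(bet_is_mutually_attractive(0.7, 0.3, 100, 100))
```

At even money (`win == loss`) the indifference probability is 0.5, so a bettor is indifferent to a bet on "by year Y" exactly when Y is their median arrival year; anyone whose median is later than Y should be willing to take the other side. Unequal stakes move the threshold: risking 2 to win 1 requires a credence above 2/3.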

Reply by Daniel Kokotajlo · 2020-11-28

Yeah. I'm hopeful that versions of them can be found which are appealing to both me and my bet-partners, even if we have to judge using our intuitions rather than math.

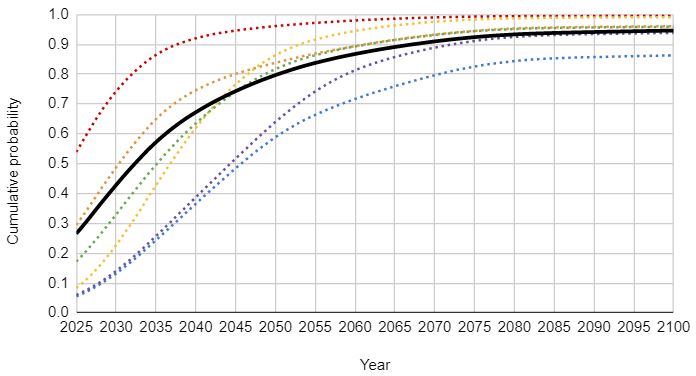

I'm working on a big post (or sequence) outlining my views on timelines, but quantitatively they look like this black line here:

My understanding is that most people have timelines that are more like the blue/bottom line on this graph.

Answer by adamShimi

I'm not sure exactly what profile of people you want to convince of short timelines, but I'll assume it's close to mine: an AI safety researcher with funding or a job (the latter being better for launching a sustainable project) who updated towards short timelines after some recent developments, but not as much as you apparently did.

Here is how I feel personally about your proposals:

- Option 1 seems nice, because I don't have a platform, so I get free money for basically no cost. But a less selfish action would be to tell you to keep your money.

- Option 2 seems nice too, because I would be interested in a timeline discussion anyway, and I get free money. So the same caveat about using your money optimally applies. (I'm also under the impression that people who aren't interested in, or don't have time for, such discussions would probably ask for a decent amount of money, and I'm not sure that's worth it from your point of view.)

- Option 3 is interesting. But I don't want to work on something that doesn't seem important or doesn't interest me, so I'm slightly afraid of committing myself for 5 years. Also, I'm not sure how interested you would be in the stuff I care about if timelines turn out to be long. So I would be way more excited about this deal if I knew enough about both our research interests to be confident that I'd be able to work on interesting things you care about, and vice versa.

Reply by Daniel Kokotajlo · 2020-11-29

Your assumptions are mostly correct. Thanks for this feedback; it encourages me to propose options 1 and 2 to various people. I agree with your criticism of option 3.

Answer by niplav

Under the condition that influence is still useful after a singularity-ish scenario, betting on percentages of one's net worth at resolution time (in influence, spacetime regions, matter-energy, plain old money, etc.) seems to account for some of the change in situation (e.g. "If X, you give me 1% of your net worth; otherwise I give you 2% of mine."). Scenarios in which none of those things matter at all after such important events seem pretty unlikely to me.

(Posting here because I expect more attention; tell me if I should move this to the previous question or elsewhere.)
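To see what niplav's example stakes imply, here is a minimal Python sketch of the expected-value arithmetic, with each side's gain measured as a percentage of their own net worth. The function names are hypothetical, and the sketch assumes the ratio of the two net worths at resolution is known and is the same whether or not X happens, an assumption that may well fail in a singularity-ish scenario. Under those assumptions, with equal net worths the "1% versus 2%" bet breaks even at a credence of 2/3 in X: the proposer should want it only if they put P(X) above 2/3, and the counterparty only if they put it below.

```python
def break_even_credence(pct_if_x: float, pct_if_not_x: float,
                        worth_ratio: float = 1.0) -> float:
    """Credence in X at which both sides of the bet expect zero gain.

    pct_if_x     -- % of the counterparty's net worth paid to the proposer if X
    pct_if_not_x -- % of the proposer's net worth paid to the counterparty if not X
    worth_ratio  -- counterparty's net worth / proposer's net worth at resolution
                    (hypothetical: assumed known and the same whether or not X)
    """
    if pct_if_x <= 0 or pct_if_not_x <= 0 or worth_ratio <= 0:
        raise ValueError("percentages and worth ratio must be positive")
    return pct_if_not_x / (pct_if_x * worth_ratio + pct_if_not_x)


def proposer_expected_gain_pct(p_x: float, pct_if_x: float, pct_if_not_x: float,
                               worth_ratio: float = 1.0) -> float:
    """Proposer's expected gain as a % of the proposer's own net worth."""
    # If X, receiving pct_if_x % of the counterparty's worth equals
    # (pct_if_x * worth_ratio) % of the proposer's own worth.
    return p_x * pct_if_x * worth_ratio - (1 - p_x) * pct_if_not_x


def counterparty_expected_gain_pct(q_x: float, pct_if_x: float, pct_if_not_x: float,
                                   worth_ratio: float = 1.0) -> float:
    """Counterparty's expected gain as a % of the counterparty's own net worth."""
    # If not X, receiving pct_if_not_x % of the proposer's worth equals
    # (pct_if_not_x / worth_ratio) % of the counterparty's own worth.
    return (1 - q_x) * pct_if_not_x / worth_ratio - q_x * pct_if_x


if __name__ == "__main__":
    # niplav's example stakes: 1% if X, 2% if not X, equal net worths.
    print(break_even_credence(1, 2))              # about 0.667
    print(proposer_expected_gain_pct(0.8, 1, 2))  # about 0.4 (% of own worth)
```

Measuring each side's gain as a share of their own net worth is what lets the stakes track whatever resources matter at resolution; letting the worth ratio differ between the X and not-X outcomes would be the natural next refinement.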

Reply by Daniel Kokotajlo · 2020-11-28

Thanks. I don't think that condition holds, alas. I'm trying to optimize for making the singularity go well, and don't care much (relatively speaking) about my level of influence afterwards. If you would like to give me some of your influence now, in return for me giving you some of my influence afterwards, perhaps we can strike a deal!
