My career exploration: Tools for building confidence

post by lynettebye · 2024-09-13T11:37:55.843Z · LW · GW · 0 comments

Contents

Background

Deciding Which Problem to Work On

Example

Tips for you to apply

Big Brainstorm of Ideas

Example

Tips for you to apply

Quick Ranking

Tips for you to apply

Note on using spreadsheets

Project Briefs

Example

Scale up ARENA with BlueDot

Outline

Benefit

Motivation and enjoyment

What hypotheses am I trying to test?

What are possible one-month tests of my hypotheses?

How long will this project take?

Counterfactual impact

Metrics to measure

How much would an EA funder be willing to pay me to do this project?

Personal blogging

Outline

Benefit

Motivation and enjoyment

What hypotheses am I trying to test?

What are possible one-month tests of my hypotheses?

How long will this project take?

Counterfactual impact

Metrics to measure

How much would an EA funder be willing to pay me to do this project?

Guesstimate model

Tips for you to apply

Planning Tests

Example

Tips for you to apply

Learning from Tests

Example

Tips for you to apply

Tracking Probabilities

Example

Tips for you to apply

Reevaluation Points

Tips

Credits


Crossposting from my blog

I did a major career review during 2023. I’m sharing it now because:

- I think it’s a good case study for iterated depth decision-making in general and reevaluating your career in particular, and

- I want to let you know about my exciting plans! I’m doing the Tarbell Fellowship for early-career journalists for the next nine months. I’m excited to dive in and see if AI journalism is a good path for me long-term. I’ll still be doing coaching, but my availability will be more limited.

Background

I love being a productivity coach. It’s awesome watching my clients grow and accomplish their goals.

But the inherent lack of scalability in 1:1 work frustrated me. There was a nagging voice in the back of my head that kept asking “Is this really the most important thing I can be doing?” This voice grew more pressing as it became increasingly clear artificial intelligence was going to make a big impact on the world, for good or bad.

I tried out a string of couple-month projects. While good, none of them grew into something bigger. I had some ideas but they weren’t things that would easily grow without deliberate effort. (Needing the space to explore these ideas prompted me to try CBT for perfectionism.)

I always had this vague impostery feeling around my ideas, like they would just come crashing down at some point if I continued. I wasn’t confident in my decision-making process, so I wasn’t confident in the plans it generated.

So at the beginning of last year, I set out to do a systematic career review. I would sit down, carefully consider my options, seek feedback, and find one I was confident in.

This is the process I used, including the specific tools I used to tackle each of my sticking points.

Deciding Which Problem to Work On

I’m a big proponent of theories of change, and think that the cause I pick to work on heavily influences how much impact I can make. I also need to match my personal fit to specific career opportunities, so it’s harder than “just” determining what the most important cause is and choosing a job working on that.

My solution was to write about what I thought were the most pressing problems in the world. I kept that info in the back of my mind later when brainstorming options and considering theories of change for specific options.

Example

My short answer from Jan 2023:

AI seems like the clear #1 cause area, while most of the other main EA cause areas seem like good second options. The outputs from ChatGPT, Stable Diffusion, Midjourney, etc. make me feel like we’re on the cusp of world-transforming AI. AI might not replace humans en masse for years, but it’s already sparking backlash from threatened artists. My guess is that AI seems like one of the top areas to focus on even if I set aside AI x-risk, because AI has so much potential to improve the world if it’s used well (which doesn’t seem likely without a lot of people working together to make it go well). However, alignment still seems like the most important area, because we need alignment both for x-risk and for maximizing the potential for AI.

I don’t have an especially detailed view of what the bottlenecks are in AI safety. (Based on poor past performance trying out coding, I’m not a good candidate for direct technical work.) Generically, [improving the productivity of people working on AI safety] and [helping promising candidates skill up to start working in the field] both seem promising.

Writing for larger audiences on productivity, epistemic/rationality, and EA also seems promising (for AI and other issues), but I feel like my theory of change via outreach is underdeveloped. My likely avenues of impact are increasing productivity for people working in impactful roles, instilling better reasoning skills in readers, and/or spreading EA ideas.

Tips for you to apply

Tool: Write a couple paragraphs about which causes you think are most important and why.

Tip: Fortunately, there are some good resources to help locate promising cause areas, like 80,000 Hours’ Problem Profiles, so you can focus most of your energy on finding the best roles for you within one of them.

Don’t get bogged down here. There's no single 'best' cause area for everyone. Your goal is to identify promising causes where you can find suitable roles. If it helps, I expect you will have more impact in a great role in a good cause area than you would in a poor role in a great cause area.

I call this the “all else not equal” clause. You can’t just make the theoretically best decision as if all else is equal between your options - practical considerations in the real world usually dominate the decision. For example, (for most people) finding out which jobs you can get is more important than theoretically deciding which of your top options would be most impactful if you could get it.

Big Brainstorm of Ideas

I’d been feeling uncertain partly because I felt I hadn’t considered all of the options systematically. At the same time, I couldn’t realistically explore a bunch of ideas in depth, so I wanted to balance being systematic with being practical.

Over the course of a few weeks, I brainstormed around 30 roles and independent project ideas I could explore. (I’ve seen other people make lists with 10-100 options.) I wrote down options I’d already considered and brainstormed more by looking through the 80,000 Hours job board, talking to some close friends, and thinking about potential bottlenecks in the causes I’d listed in my theory of change brainstorm.

Example

Big brainstorm of work ideas, very roughly grouped by category

- More measured/outcome-based coaching, working toward specific goals

- Do another impact evaluation

- CBT provider course

- Roleplay training sessions with other peers

- Record calls and analyze them for ways to improve

- Blogging about psych/productivity/ea

- Do a deep dive into expertise

- AI Impacts style research

- Deliberate practice writing skills (e.g. Gladwell exercises, reasoning transparency, rationalist discourse norms)

- Trying to get a job writing for a news source

- Engage a lot with short posts on fb, write a handful of longer posts based on what feels most important/gets traction after doing so

- Write fiction

- Spinning up skilling up courses

- Work with BlueDot

- Help run ARENA

- Intro to bio course

- Research exploration/training course/cohort

- Map the EA landscape talent gaps

- Operations training workshops

- Charity Entrepreneurship

- Take over the MHN

- Do operations for some EA org

- Project manager, e.g. for some AI safety org

- Grant making, e.g. Longview or Open Phil or Founders Pledge

- Do lots of broader social organizing for the London community

- Do retreats/weekends away for community building

- Host workshops or teach at workshops, like ESPER and Spark

- Do productivity workshops

- Host retreats for coaches

- Host retreats for people working on mental health

- Host retreats for people working on rationality

Tips for you to apply

Tool: Make a list of 10-100 possible career options.

Tip: Don’t evaluate your ideas while brainstorming. You will get stalled and think of fewer ideas if you’re thinking about whether they are good or bad as you go. Instead just try to think of as many ideas as possible. Many of these ideas will be bad – that’s fine. You’re just trying to find numerous and novel ideas, so that the top 3-10 are probabilistically more likely to include some really good ideas.

Here are some ways to broaden your options:

- Write down any opportunity you already know about which might be very good (e.g. using your capacity for earning to give as a benchmark to try to beat).

- 80,000 Hours job board

  - You can filter jobs by problem area, role type and location.

  - Also use the job board to discover promising orgs even if they are not currently listing a job you can do right away. See the listed jobs and the ‘Organizations we recommend’ section.

- Ask people who share or understand your values what the most valuable opportunities they are aware of are.

- List big problems you have insight into along with possible solutions.

- Look through 80,000 Hours’ list of promising career paths.

- Look through Open Phil's grantees, which can be filtered by focus area.

- Don’t forget career capital. You want to be doing high-impact work within the next few years. To do that you might focus on building skills or other resources in the near term. Everything here applies to this as much as for jumping into a directly valuable role.

For those interested, you can read Kit Harris’s list of 50 ideas from a similar brainstorm he did years ago. They span operations, generalist research, technical and strategic AI work, grantmaking, community building, earning to give and cause prioritization research.

Quick Ranking

In the past, I’ve found a long list of career ideas overwhelming. This time (based on Kit’s suggestion), I roughly sorted the ideas by how promising they seemed. “Promising” roughly meant [expected impact x how excited I felt about the idea]. Then I selected my top ideas to explore further. This made exploration much more approachable.

I did a quick sanity check by asking my partner if he would have ranked any of the excluded ideas above the ones I prioritized for deeper dives.

Tips for you to apply

Tool: Roughly rank your possible career options by how promising they seem.

If you have a default option that you can definitely pursue (such as continuing in a job you already have), that’s a good threshold. You can immediately rule out any options that seem less promising than your default.
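For readers who like to see the arithmetic, here is a minimal sketch of the quick ranking, using the rough [expected impact x excitement] score and the default option as a cutoff. The option names and the 1-10 scores are hypothetical placeholders, not my actual numbers.

```python
# Hypothetical options and 1-10 scores, for illustration only.
options = {
    "Continue coaching (default)": {"impact": 5, "excitement": 7},
    "Personal blogging": {"impact": 6, "excitement": 8},
    "Scale up a course": {"impact": 7, "excitement": 6},
    "Operations role": {"impact": 5, "excitement": 3},
}
DEFAULT = "Continue coaching (default)"


def promise(scores):
    """Rough 'promising' score: expected impact times excitement."""
    return scores["impact"] * scores["excitement"]


# Sort every option from most to least promising.
ranked = sorted(options, key=lambda name: promise(options[name]), reverse=True)

# Keep only the options that beat the default you could definitely pursue.
threshold = promise(options[DEFAULT])
shortlist = [
    name for name in ranked
    if name != DEFAULT and promise(options[name]) > threshold
]

for name in ranked:
    print(f"{promise(options[name]):>3}  {name}")
print("Worth exploring further:", shortlist)
```

The scores are gut feelings, so treat the output as a prompt for a sanity check rather than an answer.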

Note on using spreadsheets

I wanted to balance prioritizing quickly and considering ideas I might not have thought about before. After all, I just did a big brainstorm to get new ideas. One way to do this is to put all of the ideas in a spreadsheet, spend a few minutes roughly rating each one on a few criteria, then see how the averages compare to each other. I quickly did this with a 1-5 scale for personal fit, impact, and skill-building potential.

I didn’t personally find this exercise that useful, but I’m still optimistic that spreadsheets can sometimes be helpful for decision making.
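If you would rather script this than use a spreadsheet, the exercise might look something like the sketch below. The three criteria are the ones I used; the options and ratings are made up for illustration, and equal weighting of the criteria is an assumption.

```python
from statistics import mean

# Hypothetical 1-5 ratings; replace with your own options and gut scores.
ratings = {
    "Personal blogging": {"personal_fit": 4, "impact": 3, "skill_building": 4},
    "Scale up a course": {"personal_fit": 3, "impact": 4, "skill_building": 3},
    "Grantmaking role": {"personal_fit": 2, "impact": 4, "skill_building": 3},
}

# Average the criteria for each option (equal weights assumed).
averages = {
    name: round(mean(list(scores.values())), 2)
    for name, scores in ratings.items()
}

# Print options from highest to lowest average.
for name, avg in sorted(averages.items(), key=lambda item: item[1], reverse=True):
    print(f"{avg:.2f}  {name}")
```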

Project Briefs

For each of my top options, I spent thirty minutes to two hours writing a project brief. (Briefs for outside roles were shorter, while those for independent projects were longer.) This consolidated my understanding of the role/project and helped me identify key uncertainties to test.

The questions from my project briefs were drawn from this post. Specifically I answered:

- One-paragraph description of the role/plan

- What is the expected impact?

- How much do I expect to enjoy this? How motivated do I feel?

- What hypotheses am I trying to test? What are possible one-month tests of my hypotheses?

- What metrics could I track to address the most likely failure modes with this plan?

The project briefs were mostly to identify my key uncertainties. Fleshing out the above questions helped me identify the cruxes that would make me choose or abandon each option, so I could start investigating.

Example

Scale up ARENA with BlueDot

Outline

Work with BlueDot to set up a refined course on their platform and set up training for facilitators to scale the course for potential ML research engineers (using my ideas for a full-time course with dedicated technical facilitators). Ideally, I could then hand the course over to them to run going forward.

Benefit

This course could be used for an accelerated study program where people learn to do ML engineering well enough to get jobs at AI safety orgs. By targeting people who specifically want to work on AI safety, this would help with the bottleneck to AI safety research. (Rohin and other people think this is a bottleneck to safety research.)

Motivation and enjoyment

6/10, need some force to pay attention to all of the details. (I’m excited about the program, but not super excited about running it myself.)

What hypotheses am I trying to test?

That ARENA will accelerate the skilling up process to work at AI safety orgs.

That BlueDot will be able to effectively implement and run this program.

Whether these kinds of programs in general are worth creating and running.

Whether BlueDot in particular is good to work with and whether they are doing high impact work.

What are possible one-month tests of my hypotheses?

Discuss whether Matt and Callum and Rohin think ARENA is promising for skilling up research engineers.

Discuss with Callum and BlueDot whether we should scale up the course.

Try implementing the course on BlueDot’s platform, and run a trial program with their facilitators.

How long will this project take?

Probably 3-10 hours spread out over a couple weeks to figure out if we should try implementing.

Highly uncertain about implementation, maybe range of 20-200 hours? So a couple weeks to 5 months. Worst case scenario is even longer, because it’s all the hours of work plus waiting on other people a bunch.

If we move forward with this project, we definitely need intermediate milestones and reevaluation points. Maybe the first point at whether we attempt it at all. Second, one month into implementing – how close are we to opening this to a trial group? Third, three months in – is this promising enough that it’s worth going further, if it requires more time than this to implement? Monthly reevaluations after that until running with a trial cohort. Fourth, 4 weeks into the first trial cohort – is this going well, should we majorly shift course? At the end of the first trial cohort, how did this go? Is it worth running regularly?

Counterfactual impact

Probably the online course will quietly die unless I push it forward, since Matt isn’t continuing.

Metrics to measure

We don’t know whether the course helps people skill up.

Success rate of participants at getting jobs: how many apply for EA jobs, how many get jobs, how many need to do more study before they’re ready for jobs, and how many do something else. We could measure a lead metric (how many want EA jobs) and a lag metric (how many get them).

How many graduate and how highly they rate the course are also good metrics.

How much would an EA funder be willing to pay me to do this project?

Matt’s program was potentially costing millions and people seemed willing (admittedly that was FTX). Open Phil funded the stipends after FTX, so they thought at least that much met their bar.

Personal blogging

Outline

I would continue blogging about psych/productivity/ea/rationality in an attempt to sharpen my own thinking and spread better ideas through the community. I could do some deliberate practice on writing skills (e.g. Gladwell exercises, reasoning transparency, rationalist discourse norms), plus try out my old idea of doing lots of short posts on FB or twitter to refine my understanding of which ideas are most promising for longer posts. Hopefully, those two things would make my writing more useful and compelling.

Benefit

There’s probably something useful in spreading good ideas, and written form is easily spread and scaled. I could reach many times more people than I can in 1:1 coaching, probably including high-impact people.

Motivation and enjoyment

8/10, need some force to follow through on all the exercises and finish posts, but I’m mostly excited about the writing process.

What hypotheses am I trying to test?

Can I write well enough/fast enough that I'm regularly putting out actionable, engaging posts that thousands of people will read?

That my writing will reach a large and/or high impact audience, probably measured by email subscriptions, engagement, and feedback.

That my writing will help the people it reaches, probably measured via self-reports of impact and via engagement.

Whether my FB idea to test engagement works.

Whether I should pursue writing as my main impactful work, including getting a job somewhere with mentorship.

That I can practice and improve the speed/quality of my writing.

What are possible one-month tests of my hypotheses?

Try the FB idea with daily posts for a month, plus at least one blog post.

Read Holden’s post and other materials about the value of journalism (is there an ea forum post about vox FP? 80k post on journalism?) Use these to think about what kinds of messages and audience I want to target.

Ask around for some advice on growing my audience, and implement to see if I can reach a bigger audience (e.g. reposting to hacker news). Measure a bunch of things and see what seems to resonate.

Ask Nuño or someone like Rohin/Kit for estimates of value from posts?

Try out ChatGPT to aid in writing, see if this speeds me up.

How long will this project take?

As much or as little time as I want to give to it. Probably 20+ hours a month if I want to write and read a bunch and publish at least one high quality post a month.

I should have reevaluation points and metrics if I pursue this. Maybe ask for a re-evaluation of donor funding worth after 3 months or 1 year, based on what I produced in that time and how much my audience has grown? Maybe have some metric for how much I should expect my audience to grow? Monthly reevaluations for what I should be doing differently in writing and what to focus on.

Counterfactual impact

There are lots of other writers. None of them are doing exactly what I would be, but maybe I’m not adding that much novel and new?

Metrics to measure

I can imagine I get to the end of a year of blogging, without clearly understanding if this worked. Having specific targets in mind (and ways to measure those targets) for audience growth, engagement, posts published, and donor-evaluated value (or audience? Patron?) could help here.

12 posts published, stretch 24

Audience doubled, to 1000 subscribers (can I measure rss feeds?)

Engagement – I get at least one comment on the majority of my posts? Maybe I want substantial comments or people telling me the post was useful for the majority of posts?

Donor-evaluated value – what value would be worth trying this experiment? $200 x number of hours to create a post is a good threshold. Maybe anything that’s higher than the arena course donor value? What value would be worth continuing at the end?

How much would an EA funder be willing to pay me to do this project?

Logan got 80,000 for high variance work on naturalism, with Habryka expressing expectation that it likely wouldn’t work – this seems relevant but different from what I’m thinking about. Personal blogging is probably below this bar.

Guesstimate model

I also put together a Guesstimate model to see if any option was clearly higher impact. While the exercise helped clarify some uncertainties, it didn’t cause me to update much. Mostly I learned that my confidence intervals were wide and overlapping, so no option was clearly best.

Tips for you to apply

Tool: Write a short project brief for each of your top options to identify your key uncertainties.

This post has many good questions you can consider. I recommend using a reasoning transparency-inspired style if you’re struggling, laying out your reasons and the evidence for those conclusions.

Planning Tests

In my case, writing the project briefs turned up numerous questions I could investigate. For example, I identified two key uncertainties that would easily change my plans.

- Can I write well enough/fast enough for professional journalism or blogging?

- Do I and the other stakeholders want to scale up the ARENA pilot?

Additionally, I think there’s often value from just doing as close to the role I want as possible. Do I like it? Am I good at it? Does anything surprise me?

With these in mind, I developed quick tests meant to give me more information about my top options.

My brief explorations included:

- Talking to people doing the job

- Reading about the career path (e.g. journalism)

- Talking with potential collaborators

- Applying to a program

- Getting feedback on my plans from people who know me

- Talking with people in the field about how promising a project was

My deeper explorations included:

- Spending a day at an org I was interested in

- Co-running a ten-week trial program of an AI skilling-up course

- Spending three months blogging regularly

- Taking a relevant course

- Pitching posts to publications

- Applying to the Tarbell Fellowship for journalism

Example

A couple ideas got dropped because other people took up the mantle. A couple more were directions I could take coaching in, so I had fewer uncertainties. I could learn more from focusing my tests on options where I had less preexisting information.

In the end, I ended up doing the most tests with journalism, which you can read about in this excerpt from my Making Hard Decisions post:

I knew basically nothing about what journalism actually involved day-to-day and I had only a vague theory of change. So my key uncertainties were: What even is journalism? Would journalism be high impact/was there a good theory of change? Would I be a good fit for journalism?

Cheapest experiments:

So I started by doing the quickest, cheapest test I could possibly do: I read 80,000 Hours’ profile on journalism and a few other blog posts about journalism jobs. This was enough to convince me that journalism had a reasonable chance of being impactful.

Meanwhile, EA Global rolled around and I did the second cheapest quick test I could do: I talked to people. I looked up everyone on Swapcard (the profile app EAG uses) who worked in journalism or writing jobs and asked to chat. Here my key uncertainties were: What was the day-to-day life of a journalist like? Would I enjoy it?

I quickly learned about day-to-day life. For example, the differences between staff and freelance journalism jobs, or how writing is only one part of journalism – the ability to interview people and get stories is also important. I also received advice to test personal fit by sending out freelance pitches.

Deeper experiment 1:

On the personal fit side, one key skill the 80,000 Hours’ profile emphasized was the ability to write quickly. So a new, narrowed key uncertainty was: Can I write fast enough to be a journalist?

So I tried a one-week sprint to draft a blog post each day (I couldn’t), and then a few rounds of deliberate practice exercises to improve my writing speed. I learned a bunch about scoping writing projects. (Such as: apparently, I draft short posts faster than I do six-thousand-word research posts. Shocking, I know.)

It was, however, an inconclusive test for journalism fit. I think the differences between blogging and journalism meant I didn’t learn much about personal fit for journalism. In hindsight, if I was optimizing for “going where the data is richest”, I would have planned a test more directly relevant to journalism. For example, picking the headline of a shorter Vox article, trying to draft a post on that topic in a day, and then comparing with the original article.

Deeper experiment 2:

At this point, I had a better picture of what journalism looked like. My questions had sharpened from “What even is this job?” to “Will I enjoy writing pitches? Will I get positive feedback? Will raising awareness of AI risks still seem impactful after I learn more?”

So I proceeded with a more expensive test: I read up on how to submit freelance pitches and sent some out. In other words, I just tried doing journalism directly. The people I’d spoken with had suggested some resources on submitting pitches, so I read those, brainstormed topics, and drafted up a few pitches. One incredibly kind journalist gave me feedback on them, and I sent the pitches off to the black void of news outlets. Unsurprisingly, I heard nothing back afterwards. Since the response rate for established freelance writers is only around 20%, dead silence wasn’t much feedback.

Instead, I learned that I enjoyed the process and got some good feedback. I also learned that all of my pitch ideas had been written before. Someone, somewhere had a take on my idea already published. The abundance of AI writing undermined my “just raise awareness” theory of change.

Deeper experiment 3:

Since I was now optimistic I would enjoy some jobs in journalism, my new key uncertainties were: Could I come up with a better, more nuanced theory of change? Could I get pieces published or get a job in journalism?

I applied to the Tarbell Fellowship. This included work tests (i.e. extra personal fit tests), an external evaluation, and a good talk about theories of change, which left me with a few promising routes to impact. (Yes, applying to roles is scary and time consuming! It’s also often a very efficient way to test whether a career path is promising.)

Future tests:

Now my key uncertainties are about how I’ll do on the job: Will I find it stressful? Will I be able to write and publish pieces I’m excited about? Will I still have a plausible theory of change after deepening my models of AI journalism?

It still feels like I’m plunging into things I’m not fully prepared for. I could spend years practicing writing and avoiding doing anything so scary as scaling down coaching to work at a journalism org – at the cost of dramatically slowing down the rate at which I learn.

Tips for you to apply

Tool: Do tests to more deeply investigate your top options, especially your key uncertainties.

Start with the cheapest, easiest tests, and work up to deeper tests. I also recommend doing cheap tests of a few things before going deeper with one, especially if you’re early in your career. This post has advice and tons of case studies on making career decisions.

You want to address the key uncertainties you identified. Key uncertainties are the questions that make you change your plans. You’re not trying to know everything - you’re trying to make better decisions. This is very important. There are a thousand and one things you could learn, and most of them are irrelevant. You need to focus your attention on the questions that might change your decision.

Spread out asking for advice, roughly from easy to hard to access. So, if you can casually chat with your housemate about a decision, start there! Later when you need feedback on more developed ideas, reach out to the harder to access people, e.g. experts on the topic or more senior people who you don’t want to bother with lots of questions. Some possible questions: Does this seem reasonable to you given what you know? What is most likely to fail? What would you do differently? Here is additional good advice on asking for feedback from Michelle Hutchinson.

Learning from Tests

While planning my tests, I realized I needed a system of tracking them. I’d miss important lessons if I didn’t have a reliable method for checking the result afterwards, updating on the new information, and planning the next set of experiments.

So I started using what I called hypothesis-driven loops.

Each week during my weekly planning, I would also plan what data I was collecting to help with career exploration. I wrote down my questions, my plan for collecting data, and what I currently predicted I would find.

All of this made it easier to notice when I was surprised. I was collecting data from recent experiences when it was fresh in my mind. Because I was writing in advance what I guessed I’d find, it was easy to notice when I actually found something quite different.

It allowed me to plan what I wanted to learn from my goals each week, have that in the back of my mind, and reliably circle around the next week to write down any info. This left a written trail documenting the evolution of my plans.

Example

Do a 1-week experiment where I draft a new post every day. Hypothesis: ADHD meds will make it easier for me to write quickly and reliably. Drafting a post a day will still be difficult but might be achievable. (80% I'll be able to work on a new draft each day. 70% I'll finish at least 2 drafts. 30% I'll finish all 5.)

- I was able to do 4/5 drafts, but got a headache the last day. I finished two of them basically, and have 2 more in progress. Results consistent with ADHD meds making it a lot easier for me to write, though I want to evaluate the posts, test revisions, and check whether this pace is sustainable next week.

Talk with Matt and Callum about BlueDot. Hypothesis: It will pass this first test for scalability (65%).

- It passed the scalability test, but Callum is going to try exploring himself. I think he's a better fit than I am, so happy to let him try it out. I'll reevaluate later if he doesn't continue or I get new information.

Reach out to people/schedule interviews/have interviews/draft post in 1 week: ambitious goal, but seems good to try for my goal of writing faster. 30% I can have a draft by the end of the week. 70% I can do at least 2 interviews.

- No draft, but I did six interviews.
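If you prefer a script to a to-do list, a hypothesis-driven loop can be sketched as a small log of predictions and outcomes. The entries below restate the examples above, with each outcome encoded as a simple yes/no (my reading of the notes). The `Prediction` class and the 0.5 surprise margin are illustrative assumptions, not a tool I actually used.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    question: str
    probability: float  # chance assigned in advance that the answer is "yes"
    happened: bool      # what was actually observed the following week

    def surprising(self, margin: float = 0.5) -> bool:
        """Flag results that landed far from the forecast.

        The margin is an arbitrary cutoff chosen for illustration.
        """
        return abs(float(self.happened) - self.probability) > margin


log = [
    Prediction("Finish at least 2 drafts this week", 0.70, True),
    Prediction("Finish all 5 drafts this week", 0.30, False),
    Prediction("BlueDot idea passes the first scalability test", 0.65, True),
    Prediction("Have an interview-based draft by end of week", 0.30, False),
    Prediction("Do at least 2 interviews", 0.70, True),
]

# Closing the loop: review which results contradicted the forecast.
surprises = [p.question for p in log if p.surprising()]
print("Surprises to dig into:", surprises)
```

With these entries nothing crosses the margin, which matches my sense that those weeks mostly confirmed what I expected. The value of writing the numbers down is that a real surprise becomes hard to miss.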

Tips for you to apply

Set it up somewhere you’ll reliably come back to. The entire point is coming back to update from what you learned, so this exercise is basically useless if you don’t close the loop. I keep a to-do item where I write down the experiments during weekly planning on Saturday, and I set its due date to the following Saturday so I’m reminded each week.

State what your plan is for getting information. I tie this into my goals for the week. Usually it looks something like glancing over my goals for the week and seeing if any of my goals are at least partially there to learn from. Then I make hypotheses based on those. This ties together the learning goal with the plan for getting the information.

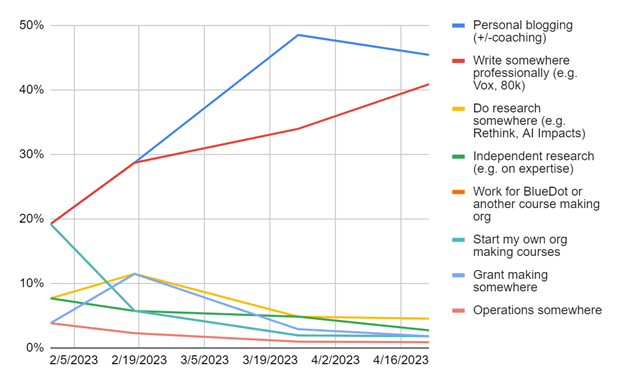

Tracking Probabilities

While I was doing tests, I tracked the probability I assigned to different options, as well as noting what information caused big swings in my probabilities. This let me notice and track changes to my expectations around which option was best.

I updated my spreadsheet weekly for the first couple months while I was exploring and learning about options (roughly corresponding to the period when I was brainstorming, writing project briefs, and doing tests taking <1 week). Once I started longer tests, I switched to monthly, until I committed to Tarbell.

Example

Tips for you to apply

Here’s an excerpt from my post on How Much Career Exploration Is Enough?

Track when you stop changing your mind about which option is best. Even with an important decision, you only want to spend more time exploring as long as that exploration is changing your mind about which job is most valuable.

To measure how much your mind is changing, you can set up a spreadsheet with probabilities for how likely it is you’ll do each of the options you’re considering.

Try to write down numbers that feel reasonable and add up to 100%. (To avoid having to manually make the numbers equal 100, you can add numbers on a 1-10 scale for how likely each option feels, and then divide each number by the total.)

Each week while you’re exploring, put in your new numbers. As you get more information and your decision is more solid, the numbers will slowly stop changing (and one option will probably be increasingly closer to 100%).

When your probabilities are changing wildly, you’re still gaining more information. Once they taper off, you’ve neared the end of productive returns to your current exploration.
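The normalization trick and the "are my numbers still moving?" check can be sketched in a few lines. The options and 1-10 scores below are hypothetical, and `biggest_shift` is just one simple way to summarize week-to-week change.

```python
def normalize(scores):
    """Turn 1-10 'how likely does this feel' scores into probabilities that sum to 1."""
    total = sum(scores.values())
    return {option: value / total for option, value in scores.items()}


def biggest_shift(previous, current):
    """Largest change in any single option's probability between two check-ins."""
    return max(abs(current[option] - previous[option]) for option in current)


# Hypothetical weekly gut scores on a 1-10 scale.
week_1 = normalize({"Journalism": 4, "Blogging": 4, "Coaching only": 2})
week_2 = normalize({"Journalism": 6, "Blogging": 3, "Coaching only": 1})

print({option: f"{p:.0%}" for option, p in week_2.items()})
print(f"Biggest shift since last week: {biggest_shift(week_1, week_2):.0%}")
```

When the biggest shift stays small for several check-ins in a row, that is a sign your current exploration has stopped changing your mind.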

Reevaluation Points

The tests for productivity blogging and AI journalism were both promising. I would be happy to continue productivity blogging if AI journalism turns out to be a poor fit.

In the end though, AI just seems too important to ignore. So I committed to the Tarbell Fellowship. I spent the spring doing an AI journalism spinning up program, and this month I’m starting the nine-month main portion of the fellowship.

After those nine months, I’ll reevaluate. I’ve got a set of key questions I’m testing, including about theory of change and personal fit. I also expect I’ll learn tons of things that I don’t know enough now to ask about.

I’ve already learned so much about journalism – including that several of my assumptions during my early tests were wrong. For example, I focused so much on writing speed, whereas now I would focus on reporting.

Despite all the flaws in my understanding at the time, I still think the tests were a reasonable approach to making better informed decisions. The iterative information gathering allowed me to make measured investments until I was ready to make a big commitment.

Now I’ll dive into journalism. At the end of the fellowship, I’ll decide what comes next. Right now, I hope to continue. I’m confident this is worth trying, even though I’m not confident what the end result will be. But I’m doing my best to set myself up for success along the way, including telling all of you that I’m doing this (if you have stories that should be told, please let me know!) You might already have noticed the changes to my website.

In the meantime, I’ll be writing less on this blog. Come check out my substack for AI writing instead!

Tips

After you commit to one career path, reevaluate periodically to check that your work is a good fit and as impactful as you hoped. Your work may have natural evaluation points (such as a nine-month fellowship). Otherwise, about once a year often works well. At each reevaluation point, ask yourself: Did you learn anything that might make you want to change plans? Are there new options that might be much better than what you’re currently doing? If so, do another deep dive. If not, keep doing the top option.

Credits

Thanks to everyone who gave me advice and feedback on this long journey. Special thanks to Kit Harris, Anna Gordon, Garrison Lovely, Miranda Dixon-Luinenburg, Joel Becker, Cillian Crosson, Shakeel Hashim, and Rohin Shah.
