NeuroAI for AI safety: A Differential Path

Post by nz, Patrick Mineault (patrick-mineault) · 2024-12-16 · This is a link post for https://arxiv.org/abs/2411.18526


Disclaimer: This post is a summary of a technical roadmap (HTML version, arXiv pre-print, twitter thread) evaluating how neuroscience could contribute to AI safety. The roadmap was recently released by the Amaranth Foundation, a philanthropic organization dedicated to funding ambitious research in longevity and neuroscience. The roadmap was developed jointly by Patrick Mineault*, Niccolò Zanichelli*, Joanne Peng*, Anton Arkhipov, Eli Bingham, Julian Jara-Ettinger, Emily Mackevicius, Adam Marblestone, Marcelo Mattar, Andrew Payne, Sophia Sanborn, Karen Schroeder, Zenna Tavares and Andreas Tolias.

TLDR: We wrote a comprehensive technical roadmap exploring potential ways neuroscience might contribute to AI safety. We adopted DeepMind's 2018 framework for AI safety, and evaluated seven different proposals, identifying key bottlenecks that need to be addressed. These proposals range from taking high-level inspiration from the brain to distill loss functions and cognitive architectures, to detailed biophysical modeling of the brain at the sub-neuron level. Importantly, these approaches are not independent – progress in one area accelerates progress in others. Given that transformative AI systems may not be far away, we argue that these directions should be pursued in parallel through a coordinated effort. This means investing in neurotechnology development, scaling up neural recording capabilities, and building neural models at scale across abstraction levels.

Introduction

The human brain might seem like a counterintuitive model for developing safe AI systems: we engage in war, exhibit systematic biases, often fail to cooperate across social boundaries, and display preferences for those closer to us. Does drawing inspiration from the human brain risk embedding these flaws into AI systems? Naively replicating the human neural architecture would indeed reproduce both our strengths and our weaknesses. Furthermore, pure replication approaches can display unintended behavior if they capture physical details incorrectly or are exposed to different inputs; as twin studies remind us, even genetically identical individuals can have very different life trajectories. Even selecting an exemplary human mind (say, Gandhi) as a template raises questions, and as history shows, exceptional intelligence does not guarantee ethical behavior.

We propose instead a selective approach to studying the brain as a blueprint for safe AI systems. This involves identifying and replicating specific beneficial properties while carefully avoiding known pitfalls. Key features worth emulating include the robustness of our perceptual system and our capacity for cooperation and theory of mind. Not all aspects of human cognition contribute to safety, and some approaches to studying and replicating neural systems could potentially increase rather than decrease risks. Success requires carefully selecting which aspects of human cognition to emulate, guided by well-defined safety objectives and empirical evidence.

Unfortunately, a common and justified criticism is that traditional neuroscience has historically moved far too slowly to impact AI development on relevant timelines. The pace of capability advancements far outstrips our ability to study and understand biological intelligence. If neuroscience is to meaningfully contribute to AI safety, we need to dramatically accelerate our ability to record, analyze, simulate, and understand neural systems. However, the landscape of neuroscience is changing rapidly.

The good news is that the catalysts for large-scale, accelerated neuroscience are already here, thanks in part to massive investments made by the BRAIN Initiative in the past decade. New recording technologies can capture the activity of thousands of neurons simultaneously. Advanced microscopy techniques let us map neural circuits with unprecedented precision. High-throughput behavioral systems allow us to study complex cognitive behaviors at scale. Virtual neuroscience is far more feasible than in the past, thanks to dramatically lower compute costs and advances in machine learning.

Current AI systems are already powerful enough to raise serious safety concerns, though they are still not human-level in at least some domains. For neuroscience to have a chance to positively impact AI safety, it's important to understand and attempt to implement neuroscience-inspired safety mechanisms before more advanced AI systems are developed. This effort could benefit more than AI safety: it is also likely to help us understand the brain. It could shorten translational timelines for new neurotechnologies, lead to breakthroughs in treating neurological conditions, and allow neuroscientists to run experiments more cheaply and quickly. This creates a "default good" scenario where, even if the direct impact on AI safety is significantly smaller than hoped, other fields will benefit greatly.

Proposals

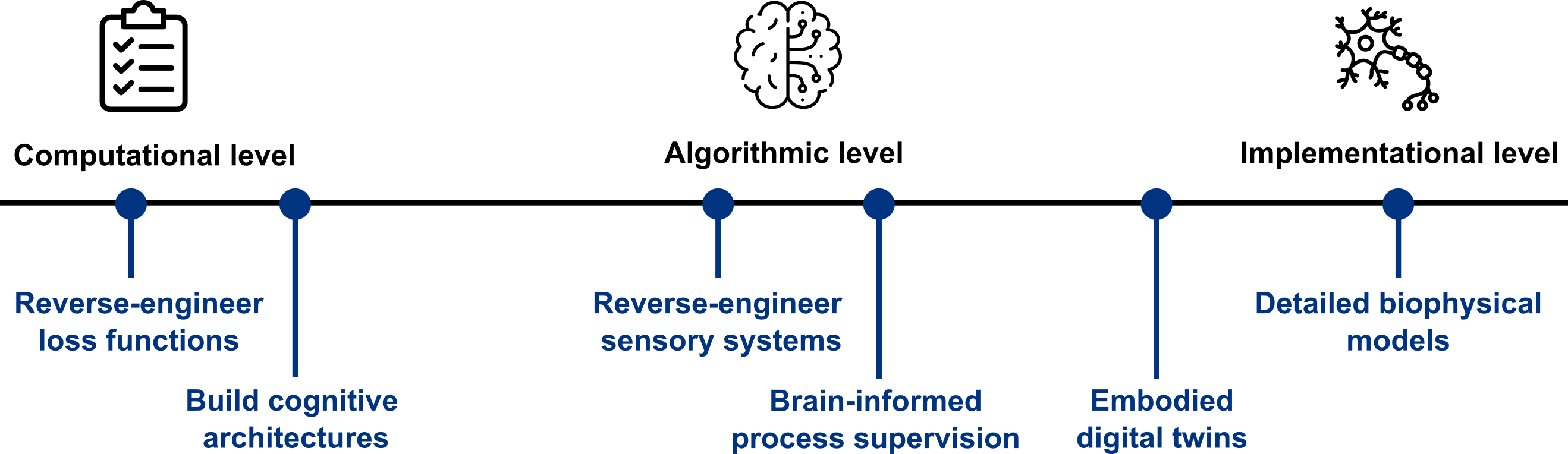

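The proposals we evaluate make very different bets on which level of granularity should be the primary focus of study. Marr’s levels codify different levels of granularity in the study of the brain: the computational level (what problem the system solves and why), the algorithmic level (which representations and procedures it uses to solve it), and the implementation level (how those procedures are physically realized in neural tissue).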

For the purpose of AI safety, any one level is unlikely to be sufficient to fully solve the problem. For example, solving everything at the implementation level using biophysically detailed simulations is likely to be many years out, and computationally highly inefficient. On the other hand, it is very difficult to forecast which properties of the brain are truly critical in enhancing AI safety, and a strong bet on only the computational or algorithmic level may miss crucial details that drive robustness and other desirable properties. Thus, we advocate for a holistic strategy that bridges all of the relevant levels. Importantly, we focus on scalable approaches anchored in data. All of these levels add constraints to the relevant problem, ultimately forming a safer system.

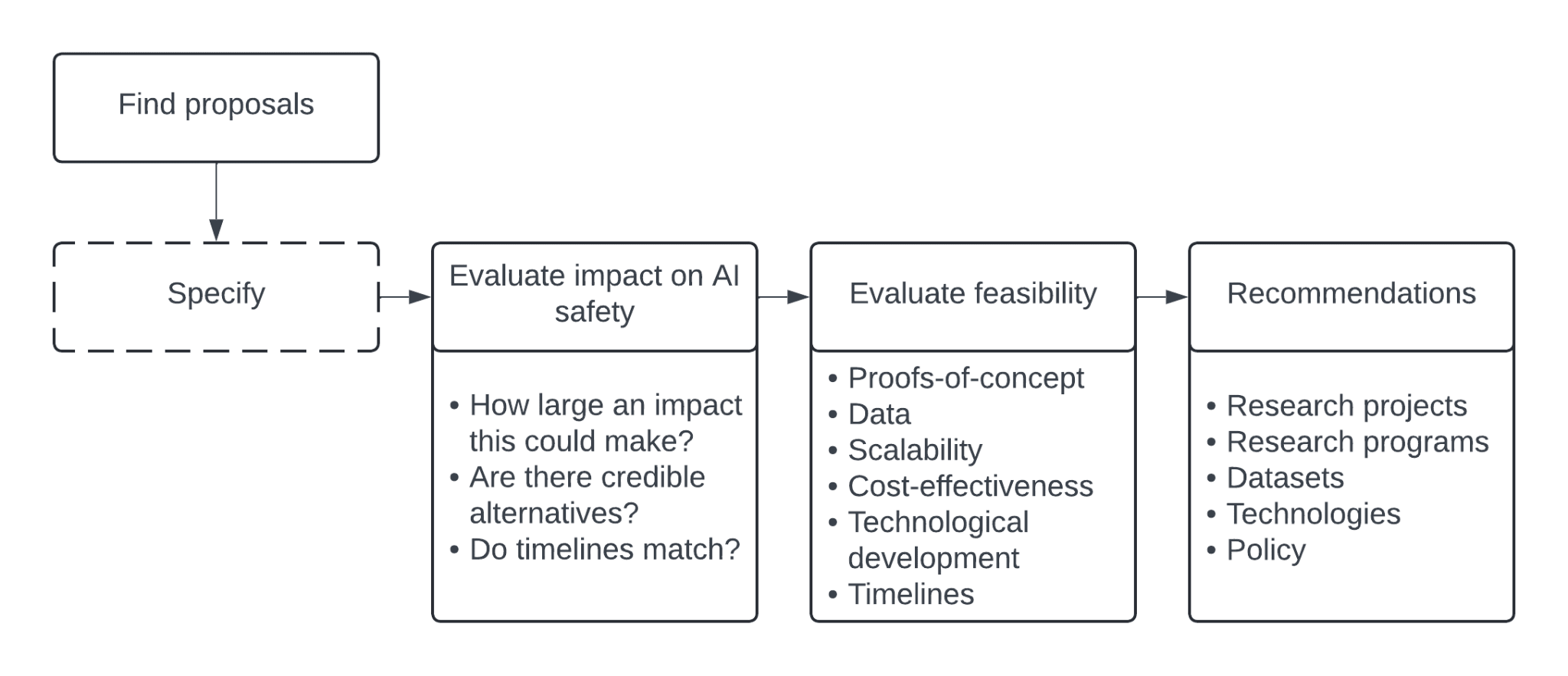

Many of these proposals are in embryonic form, often in grey literature such as whitepapers, blog posts, and short presentations. Our goal here is to catalog these proposals and flesh them out, putting the field on more solid ground. Our process is as follows:

- Give a high-level overview of the approach. Highlight important background information to understand the approach. Where proposals are more at the conceptual level, further specify the proposal at a more granular level to facilitate evaluation.

- Define technical criteria to make this proposal actionable and operationalizable, including defining tasks, recording capabilities, brain areas, animal models, and data scale necessary to make the proposal work. Evaluate their feasibility.

- Define the whitespace within that approach, where more research, conceptual frameworks, and tooling are needed to make the proposal actionable. Make recommendations accordingly.

We use a broad definition of neuroscience, which includes high-resolution neurophysiology in animals, cognitive approaches leveraging non-invasive human measurements from EEG to fMRI, and purely behavioral cognitive science.

Our document is exhaustive, and thus quite long; sections are written to stand alone and can be read out of order depending on one’s interests. We have ordered the sections starting with the most concrete and engineering-driven proposals, supported by extensive technical analysis, and proceeding to more conceptual proposals in later sections. We conclude with broad directions for the field, including priorities for funders and scientists.

The seven proposals covered by our roadmap (and only linked to below for brevity) are:

- Reverse-engineer representations of sensory systems. Build models of sensory systems (“sensory digital twins”) which display robustness, reverse engineer them through mechanistic interpretability, and implement these systems in AI

- Build embodied digital twins. Build simulations of brains and bodies by training auto-regressive models on brain activity measurements and behavior, and embody them in virtual environments

- Build biophysically detailed models. Build detailed simulations of brains via measurements of connectomes (structure) and neural activity (function)

- Develop better cognitive architectures. Build better cognitive architectures by scaling up existing Bayesian models of cognition through advances in probabilistic programming and foundation models

- Use brain data to finetune AI systems. Finetune AI systems through brain data; align the representational spaces of humans and machines to enable few-shot learning and better out-of-distribution generalization

- Infer the loss functions of the brain. Learn the brain’s loss and reward functions through a combination of techniques including task-driven neural networks, inverse reinforcement learning, and phylogenetic approaches

- Leverage neuroscience-inspired methods for mechanistic interpretability. Leverage methods from neuroscience to open black-box AI systems; bring methods from mechanistic interpretability back to neuroscience to enable a virtuous cycle
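The fifth proposal talks about aligning the representational spaces of humans and machines. One widely used way to *measure* that kind of alignment (not necessarily the method the roadmap itself recommends) is representational similarity analysis (RSA). You build a dissimilarity matrix over stimuli for each system, then correlate the two matrices. Below is a minimal pure-Python sketch. It assumes responses arrive as stimulus-by-unit lists, and it uses 1 minus the Pearson correlation as the dissimilarity and Spearman correlation to compare matrices. The function names (`rdm`, `rsa_score`) and the toy data are hypothetical illustrations, not real recordings.

```python
import math
from itertools import combinations
from typing import List, Sequence

# patterns[s][u]: response of unit u (neuron or model unit) to stimulus s
Patterns = List[List[float]]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; both inputs must be non-constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def rdm(patterns: Patterns) -> List[float]:
    """Upper triangle of the representational dissimilarity matrix,
    using 1 - Pearson correlation between each pair of stimulus patterns."""
    return [1.0 - pearson(patterns[i], patterns[j])
            for i, j in combinations(range(len(patterns)), 2)]


def rsa_score(brain: Patterns, model: Patterns) -> float:
    """Spearman correlation between the brain and model RDMs.
    Both must cover the same stimuli in the same order; unit counts may differ."""
    return pearson(ranks(rdm(brain)), ranks(rdm(model)))


if __name__ == "__main__":
    # Made-up toy data: 4 stimuli x 3 recorded units.
    brain = [[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [3.0, 1.0, 2.0], [2.0, 2.5, 0.0]]
    # Affine rescaling of each pattern leaves the representational geometry unchanged.
    rescaled = [[2 * v + 1 for v in row] for row in brain]
    # Same patterns assigned to the wrong stimuli: geometry only partly preserved.
    shuffled = [brain[2], brain[3], brain[0], brain[1]]
    print(round(rsa_score(brain, rescaled), 3))  # 1.0
    print(round(rsa_score(brain, shuffled), 3))  # 0.714
```

Because each system's matrix is computed within that system, RSA compares a brain area and a model layer of different sizes without fitting a mapping between units. This is one reason it is a common first check before any finetuning on brain data.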

Next steps

Several key themes have emerged from our analysis:

- Focus on safety over capabilities. Much of NeuroAI has historically been focused on increasing capabilities: creating systems that leverage reasoning, agency, embodiment, compositional representations, etc., that display adaptive behavior over a broader range of circumstances than conventional AI. We highlighted several ways in which NeuroAI has at least a chance to enhance safety without dramatically increasing capabilities. This is a promising and potentially impactful niche for NeuroAI as AI systems develop more autonomous capabilities.

- Data and tooling bottlenecks. Some of the most impactful ways in which neuroscience could affect AI safety are infeasible today because of a lack of tooling and data. Neuroscience is more data-rich than at any time in the past, but it remains fundamentally data-poor. Recording technologies are advancing exponentially, doubling every 5.2 years for electrophysiology and every 1.6 years for imaging, but this is dwarfed by the pace of progress in AI: by comparison, AI compute is estimated to double every 6 to 10 months. Making the most of neuroscience for AI safety requires large-scale investments in data and tooling to record neural data in animals and humans under high-entropy natural tasks, measure structure and its mapping to function, and access frontier-model-scale compute.

- Need for theoretical frameworks. While we have identified promising empirical approaches, stronger theoretical frameworks are needed to understand when and why brain-inspired approaches enhance safety. This includes better understanding when robustness can be transferred from structural and functional data to AI models; the range of validity of simulations of neural systems and their ability to self-correct; and improved evaluation frameworks for robustness and simulations.

- Breaking down research silos. When we originally set out to write a technical overview of neuroscience for AI safety, we did not foresee that our work would balloon into a 100-page manuscript. We found that much of the relevant research lived in different silos: AI safety research has a different culture than AI research as a whole; neuroscience has only recently started to engage with scaling law research; structure-focused and representation-focused work rarely overlap, with the recent exception of structure-to-function work enabled by connectomics; and insights from mechanistic interpretability have yet to shape much research in neuroscience. We hope to catalyze a positive exchange between these fields by building a strong common base of knowledge from which AI safety and neuroscience researchers can have productive interactions.
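To make the gap in the data and tooling bullet concrete, we can convert each doubling time T into a growth multiple over a horizon t using 2^(t/T). The sketch below is minimal and rests on two assumptions. First, the quoted doubling times are held constant. Second, it uses a 10-year horizon, which is an illustrative choice and not a forecast from the roadmap. The helper names are hypothetical.

```python
from typing import Dict

# Doubling times in years, taken from the figures quoted above.
# Holding them constant over the horizon is an illustrative assumption.
DOUBLING_TIMES_YEARS: Dict[str, float] = {
    "electrophysiology": 5.2,
    "imaging": 1.6,
    "AI compute (10-month doubling)": 10 / 12,
    "AI compute (6-month doubling)": 0.5,
}


def growth_factor(horizon_years: float, doubling_time_years: float) -> float:
    """Growth multiple after horizon_years at a constant doubling time."""
    return 2.0 ** (horizon_years / doubling_time_years)


def compare(horizon_years: float = 10.0) -> Dict[str, float]:
    """Growth multiple of every trend over the same horizon."""
    return {name: growth_factor(horizon_years, t)
            for name, t in DOUBLING_TIMES_YEARS.items()}


if __name__ == "__main__":
    for name, factor in compare(10.0).items():
        print(f"{name:32s} x{factor:>14,.1f}")
```

Under these assumptions, ten years yields roughly a 3.8x gain for electrophysiology and 76x for imaging. AI compute, by contrast, grows by 2^12 = 4,096x to 2^20 = 1,048,576x over the same decade.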

We’ve identified several distinct neuroscientific approaches which could positively impact AI safety. Some of the approaches, which are focused on building tools and data, would benefit from coordinated execution within a national lab, a focused research organization, a research non-profit or a moonshot startup. Well-targeted tools and data serve a dual purpose: a direct shot-on-goal of improving AI safety, and an indirect benefit of accelerating neuroscience research and neurotechnology translation. Other approaches are focused on building knowledge and insight, and could be addressed through conventional and distributed academic research.

Nothing about safer AI is inevitable: progress requires sustained investment and focused research effort. By thoughtfully combining insights from neuroscience with advances in AI, we can work toward systems that are more robust, interpretable, and aligned with human values. However, this field is still in its early stages. Many of the approaches we’ve evaluated remain speculative and will require significant advances in both neuroscience and AI to realize their potential. Success will require close collaboration between neuroscientists, AI researchers, and the broader scientific community.

Our review suggests that neuroscience has unique and valuable contributions to make to AI safety, and these aren't just incremental, independent steps: together, they could form the foundation of a differential path forward. Better recording technologies could enable more detailed digital twin models, which in turn could inform better cognitive architectures. Improved interpretability methods could help us validate our understanding of neural circuits, potentially leading to more effective training objectives. We need to build new tools, scale data collection with existing ones, and develop new theoretical frameworks. Most importantly, we need to move quickly yet thoughtfully; the window of opportunity to impact AI development is unlikely to stay open for long.

The path outlined above represents a unique opportunity: one that not only has the potential to lead to safer AI systems, but also helps us understand the brain, advances neurotechnology, and accelerates treatments for neurological disease. If you are a researcher, engineer, policymaker or funder interested in helping push this path forward, we encourage you to reach out.
