Book Review: Safe Enough? A History of Nuclear Power and Accident Risk

post by ErickBall · 2024-07-09T01:12:28.730Z

Epistemic status: This book covers a lot of topics related to nuclear energy; I have experience with some of them but not all, and some are inherently murky. I will try to make it clear what's fact and what's my opinion.

“If you’re not [pursuing safety] cost-effectively, you’re killing people.”

—David Okrent, former member of the Advisory Committee on Reactor Safeguards

1. Enlightenment Faith

Safe Enough? is the heartbreaking story of an industry that tried to move fast and break things, and in the process ended up completely broken. But it is also a cautiously hopeful tale of repeated attempts to resuscitate nuclear power through the arduous, and often controversial, process of Probabilistic Risk Assessment (PRA). While it doesn’t fully explain what went wrong with nuclear power, or whether it can be fixed, this book fills in some of the gaps, and avoids the ideological blinders that often define the popular debate.

Author Tom Wellock is the official historian of the U.S. Nuclear Regulatory Commission. His exhaustively researched history (the citations and bibliography run to over a hundred pages) sometimes feels like no more than a compendium of quotes from primary sources. But, if you read between the lines, it's a book with a message: The history of nuclear safety and its regulation is riddled with mistakes, but it has gradually improved, largely through better quantification of risk.

Safe Enough? shows how early nuclear regulators, and the industry itself, misunderstood the technology and its risks. The resulting screwups exacerbated public mistrust and political opposition, leading to excessive and chaotic regulation. Gradual improvements to PRA, it argues, have slowly made nuclear plants both safer and more efficient to operate.

This argument plays directly to my biases: PRA is sometimes called “the rationalist approach to regulatory development” [ref]. Still, I’m not sure how much I believe it. Can regulation really be both nuclear power’s albatross and its savior? Or is attributing so much power to a bunch of shaky numbers a case of high modernist hubris, a misplaced faith in logic and legibility? Improvements in efficient reactor operation over time followed accumulated experience and maturing technology, not just regulatory changes. And though the NRC’s oversight of existing reactors now leans heavily on risk information, licensing of new reactors still has some of the same issues that dogged the expanding fleet of the 1970s.

To understand the problems nuclear energy faced in its early years, we need a bit of context: the terrific speed of its arrival and the excitement that surrounded it. Energy industry insiders never really believed nuclear would make electricity “too cheap to meter” (they expected capital costs to be higher than a coal plant’s, due to the more complex equipment and the need to protect the workers from radiation), but they did see it as a manifestation of science and of humanity's bright future, and the obvious solution to rapidly rising demand for electricity. Its promise was threefold: compared to coal or oil, it would have low fuel costs, run on practically inexhaustible reserves of uranium, and create no air pollution. Most experts thought of major nuclear accidents as a remote possibility that barely merited concern. The sooner the transition to energy abundance could happen, the better.

2. Crash Course

Twenty-five years after the Wright brothers flew the first airplane, the state of the art in commercial air travel was the Boeing 40B, an open-cockpit biplane that could hold up to four passengers. It mainly carried mail, a program the government introduced to encourage the industry because passenger travel was so unprofitable.

Twenty-five years after the first nuclear chain reaction in 1942, there were about eight US commercial nuclear plants already in operation and 23 more under construction, 16 of which are still in operation more than 50 years later.

Airplanes and nuclear reactors both benefited from big military investments before making the transition to large-scale civilian applications, but nuclear energy was treated as a futuristic drop-in replacement for fossil-fueled power plants. It was as if, after World War I, airplane manufacturers had jumped directly to building 100-seat airliners, with the intention of rapidly capturing the market for long-distance travel and keeping those same planes in service into the 1970s.

A nuclear plant is different from a fossil plant, and not just in the obvious sense that it “burns” a few tons of uranium instead of a mountain of coal. The core produces massive amounts of neutron radiation during normal operation, as well as a large amount of radioactive material that remains after it's turned off. Designers knew it would require more care and more planning to accommodate those factors. When the reactor is running, no one can get near it. When you shut it down for maintenance, you have to provide continuous cooling for the irradiated fuel because it still produces dangerous amounts of heat. You have to monitor radiation doses for the workers and quickly seal up any leaks from the coolant system. And, of course, you have to prevent an accidental release of radioactive material into the atmosphere.

In the early years of the Cold War, Congress wanted to ensure American primacy in civilian nuclear power. In 1954, it amended the Atomic Energy Act and tasked the Atomic Energy Commission (AEC), which it had created in 1946, with a “dual mandate.” First, it would support the budding industry with money and basic research. Second, it would invent regulations to provide “adequate protection” of public health and safety from nuclear material. But here they faced a problem: with no history of nuclear accidents to learn from, scientists didn’t know much about the dangers of radiation. Most safety regulations take an empirical approach; the AEC could not. Instead, they decided to brainstorm the worst thing that might realistically happen to a reactor, and then ask designers to prove that even in this disaster scenario their reactors wouldn't harm the public. They called this the Design Basis Accident.

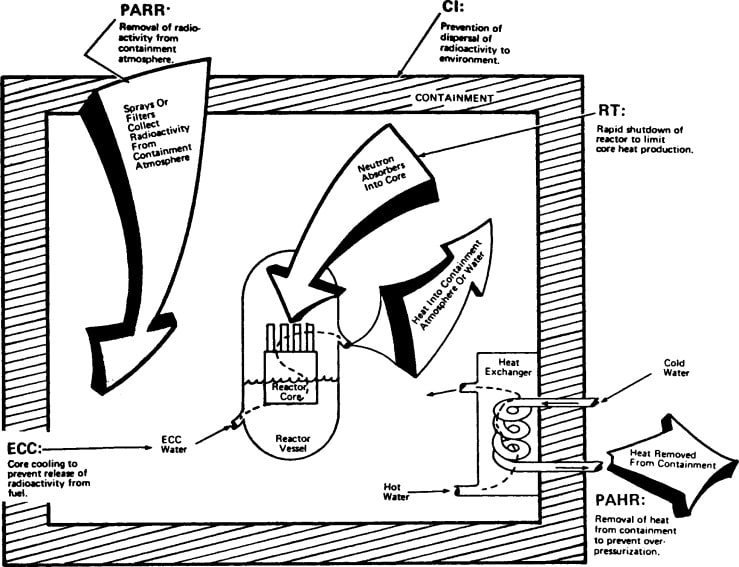

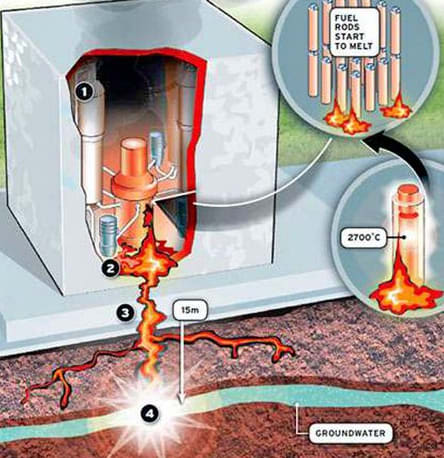

After a sudden shutdown (a “scram”), the key to safety is to make sure the reactor core is covered with water. As long as it's covered, it stays cool enough to keep most of the radioactive fission products inside the fuel rods where they belong. But if you let that water drain off or boil away, then you're in trouble: even though you’ve stopped the neutron chain reaction, the decay heat of the fission products will still heat the fuel past its melting point of roughly 5,000 °F. So a natural starting point for the AEC's disaster scenario was a leak, a Loss of Coolant Accident (LOCA). To make it the worst case, they imagined the largest pipe in the coolant system suddenly breaking in half. All the escaping water would have to be replaced somehow: generally an Emergency Core Cooling System (ECCS) would rapidly pump in more water from a giant tank nearby. And since things don't usually work perfectly during an emergency, they also assumed there would be a power outage and a “single active failure” of whatever active component (pump, generator, etc.) was most critical. This meant, essentially, that the designers would need to build in a backup for everything. The containment structure, meanwhile, would have to withstand a huge pressure spike as the superheated water flashed into steam, and that meant a voluminous, thick steel structure backed by even thicker concrete. Eventually there would be over a dozen different Design Basis Accidents to account for, such as a power surge or sudden loss of feedwater, but the Large LOCA remained the most challenging and expensive to address.

How likely this kind of accident was didn’t get as much attention, because there was no good way to estimate it. The state of the art “expert judgment” approach helpfully suggested that it was somewhere between unlikely and practically impossible. Consequences, though, were amenable to research. A study called WASH-740, published in 1957, examined the worst-case outcomes of a severe accident in which half the reactor core would vaporize directly into the atmosphere, with no containment, and with the wind directing it towards a major population center. It found there could be 3,400 deaths, 43,000 injuries, and property damage of $7 billion ($80 billion in 2024 dollars). It was “like evaluating airline travel by postulating a plane crash into Yankee Stadium during the seventh game of the World Series. Such scenarios only confused and frightened the public.” Some in the AEC thought that to improve nuclear power’s image they needed better risk estimates—a desire born of “an Enlightenment faith that the public was composed of rational actors who dispassionately choose their risks according to data.” When they updated WASH-740 in 1965 to model the larger reactors then under construction, the danger looked even worse: 45,000 deaths. Still unable to calculate a justifiable probability to reassure the public, they kept the update to themselves.

Recognizing that the Design Basis Accidents did not account for every possibility, the AEC also wanted nuclear plants to follow the principle of “Defense in Depth” by having additional layers of safeguards, like putting the reactor at a remote site and surrounding it with a containment structure. The commissioners believed these passive factors were “more reliable” than active measures like the ECCS.

It was a crude approach. The AEC didn't really know whether these protections would be “adequate,” per their regulatory directive, nor could they know if some of their requirements were excessive. The plan, it seems, was to figure things out as they went along.

3. Bandwagon Market and Turnkey Power Plants

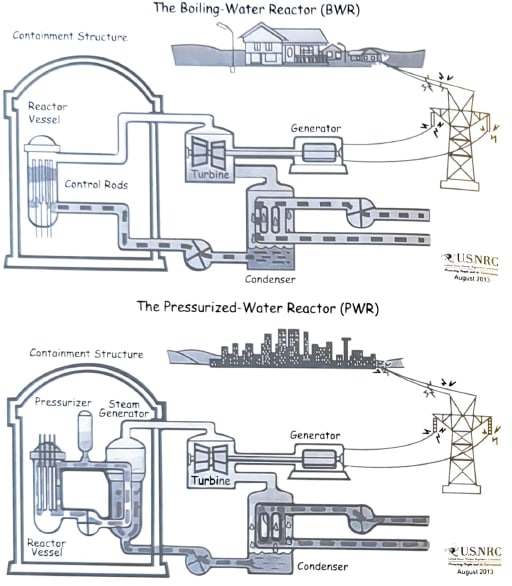

As it turned out, that plan would be severely challenged by the explosive growth of the nuclear industry. After a handful of small demonstration plants, the 1960s saw a meteoric rise in both the number and the size of nuclear plants applying for licenses. The rapid build-out was not organic; it was by design. The two main nuclear vendors, General Electric with its Boiling Water Reactors and Westinghouse with its Pressurized Water Reactors (pictured below), began offering what were called “turnkey” plants. These had a fixed up-front price to the utility that ordered them, with all the investment risk assumed by the manufacturer. The first one, Oyster Creek, sold for just $66 million. Like many others, it was a loss leader, sold below cost in a successful effort to create “a bandwagon effect, with many utilities rushing ahead...on the basis of only nebulous analysis.” Nuclear was the energy of tomorrow, and GE and Westinghouse wanted to bring it to fruition as quickly as possible. Utilities, initially skeptical of untested tech, came to think of a nuclear plant as a feather in their cap that proved they were tech-savvy, bold, and at the forefront of progress.

The bandwagon approach had downsides, though. Some of the earlier plants took only about 4 years to build, but as reactors got larger (upwards of 800 MW electric capacity by the mid-1970s) they needed more specialized manufacturing. Build times doubled. Utilities started building new designs before the prior generation was finished. Even plants of the same nominal design were customized by the builders, making every one unique. And bigger, as it turned out, was not always better: larger plants were more complex to operate and maintain. In modern terms, the scale-up was creating more and more technical debt.

4. Regulatory Ratchet

Safety reviewers couldn’t keep up. What would later become the NRC started as a small division within the AEC (1% of the budget), with just a handful of staff acting as “design reviewers, inspectors, hearings examiners, and enforcers.” It lacked the deep technical knowledge of the research and development labs, meaning that regulatory staff often had to consult with colleagues in charge of promoting nuclear energy. The conflict of interest became clear immediately, and the commission made efforts to keep the regulators independent. The Atomic Energy Act allowed quite a bit of flexibility in the AEC’s approach to safety requirements, but early commissioners (on the advice of Edward Teller) wanted nuclear power to have a higher standard of safety than other industries—partly from fear that an accident would turn the public against the technology.

While the AEC staff diligently tried to apply its conservative Design Basis Accident approach to the flood of new reactors, the nuclear industry lobby objected again and again. The “ductility of the reactor piping” would preclude a sudden pipe break. Research on core meltdowns was unnecessary because “a major meltdown would not be permitted to occur.” Even if it did, most believed a melting core “could not escape the reactor’s massive pressure vessel, a big steel pot with walls six to eight inches thick.” There was no risk of a major release to the environment, they claimed. Many in the AEC agreed.

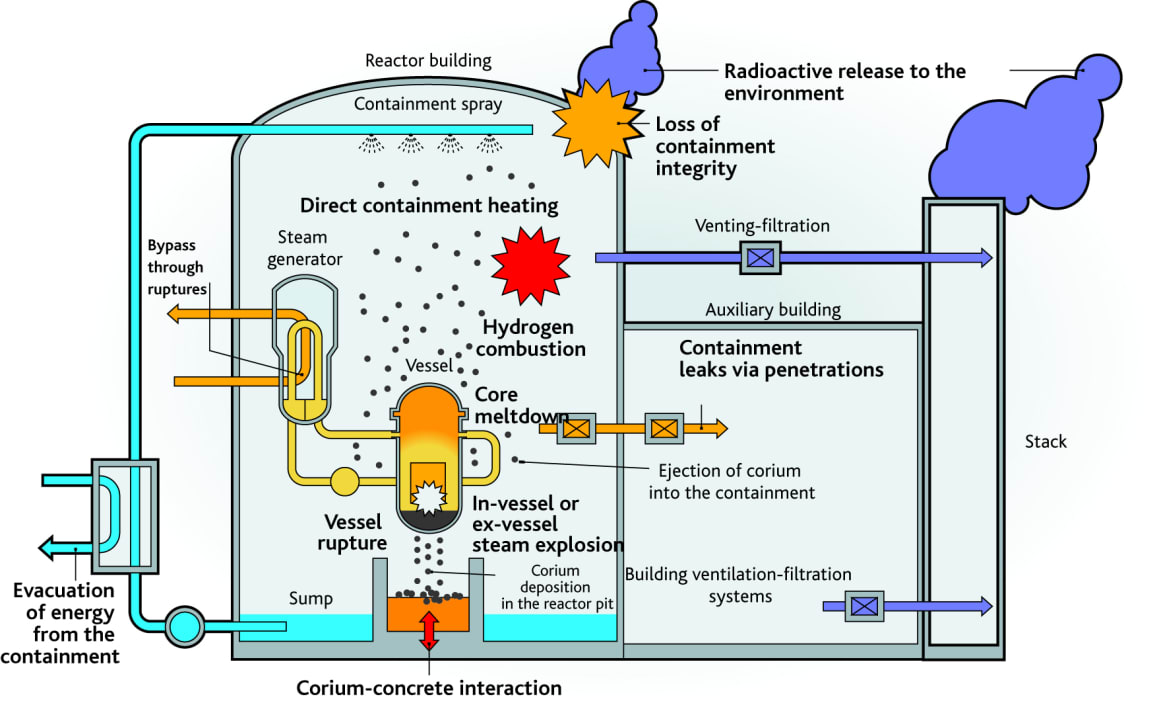

Between 1958 and 1964, however, [AEC] research indicated that the fuel could melt, slump to the vessel bottom, and melt through that, too, landing on the containment building floor. It would then attack the concrete until it broke through the bottom. Once outside the containment building, the fission products might enter the water table or escape into the atmosphere. A joke about the glowing hot blob melting all the way to China led to the phenomena being dubbed “The China Syndrome.”

The industry fell back on the argument that Emergency Core Cooling Systems were so reliable that a core meltdown was simply inconceivable. The ECCS was “little more than a plumbing problem.” Industry advocates in government pulled AEC research away from severe accidents and toward a new research program to prove ECCS effectiveness. Without a fully functional containment, the AEC could no longer argue that even the worst accidents had zero consequences. Instead, it had to make the case that severe accidents were so improbable that reactors should still be considered safe.

In an early warning of problems to come, licensing times began to increase. “Between 1965 and 1970, the size of the regulatory staff increased by about 50 percent, but its licensing and inspection caseload increased by about 600 percent. The average time required to process a construction permit application stretched from about 1 year in 1965 to over 18 months by 1970.” [ref 1]

Oyster Creek, started in 1964, featured an innovative Mark I pressure-suppression containment—the same type that failed to protect the Fukushima reactors a half century later. It shrank down the massive steel structure until it fit tightly around the reactor vessel. To deal with the steam burst from a LOCA, it would direct it down an array of pipes into a pool of water where, they hoped, most of it would condense before creating enough pressure to burst the walls. Regulators, uncertain of its safety, granted only a conditional license. And that sort of decision became a trend: “From one application to the next, the AEC demanded new, expensive, redundant safety systems.” According to the AEC, these regulatory “surprises” came about because each plant had a new and unique design. The industry called it excess conservatism and “regulatory uncertainty.” How could builders hope to meet safety requirements that were a moving target?

Throughout the 1970s, the AEC “often forced redesign and backfits on plants already under construction,” with no way of knowing how much they would improve safety. The list is long: New rules required seismic restraints, fireproof construction and ventilation, and “greater physical separation of redundant safety-related equipment such as electrical cables.” Everything had to be resistant to heat, humidity, and radiation [ref 4]. For one reactor, the AEC had required 64 different upgrades (backfits) by 1976.

In addition to safety issues, environmental concerns about nuclear power arose. Due to their greater size and lower thermal efficiency, nuclear plants added more excess heat to lakes and rivers than their fossil counterparts. To mitigate it, utilities turned to the huge, and expensive, natural-draft cooling towers that are now such an iconic image of nuclear power (though some other power plants use them too).

Nuclear quality assurance rules became one of the biggest cost drivers, because they required extensive documentation and testing of even simple components. QA practices for pressure vessels prompted a saying that, once the weight of the paperwork matched the weight of the reactor vessel, it was ready to ship. The AEC first established the rules in 1970, after dozens of reactors were already under construction. For several more years there was no general agreement on how to implement them, or how strictly, but some construction sites hired dozens of QA staff in an attempt to comply [ref]. The rules applied to any equipment that was “Safety Related,” meaning the utility’s analysis of the Design Basis Accident made use of it. Sometimes these components were critical to the safe operation of the plant; other times, not so much [ref]. Plants originally “over-coded” (coded everything in huge systems as Safety Related), at huge cost, because of uncertainty about what was necessary. Changing it later on was possible, but time-consuming, and required detailed knowledge of the design that often was no longer readily available.

Certain writers have also targeted the principle of keeping radiation doses As Low As Reasonably Achievable (ALARA), calling it a key piece of the regulatory ratchet. In my view this concern is misdirected: in spirit, ALARA just consists of applying approximate cost-benefit analysis to radiation doses. See here for a good discussion.

Eventually, the ECCS reliability tests that the industry demanded came back to haunt them. Tests on a scaled-down core suggested that immediately after a LOCA, water injected into the system would flow right back out through the broken pipe. By the time the pressure got low enough for the water to reach the core, the fuel rods might be hot enough to collapse and block the flow, or to shatter on contact with the cold water. The AEC at first concealed the results, thinking that more research could reverse the conclusions and avoid bad press.

Even after research revealed uncertainty with ECCS performance, AEC staff believed that a meltdown was not a credible accident. In late 1971, it estimated that the odds of a major core damage accident were 10⁻⁸ per reactor year (one in one hundred million). As a later NRC report noted, this “was a highly optimistic estimate [by several orders of magnitude], but it typifies the degree to which meltdown accidents were considered ‘not credible.’”

Antinuclear activists known as “intervenors,” with the support of a few of the AEC staff, latched onto the uncertainties and sued to delay dozens of license applications until the ECCS designs could be proven effective. The AEC struggled to head off the lawsuits by creating new “interim” criteria for acceptance, and its rulemaking hearings in 1972 became a major press event that solidified public opinion against nuclear power and the AEC. Though it eventually pushed the rules through, the AEC’s position was weakened. And existing plants had to do expensive upgrades to satisfy the new criteria. One older unit (Indian Point 1, near New York) had to close down entirely due to the near-impossibility of the modifications.

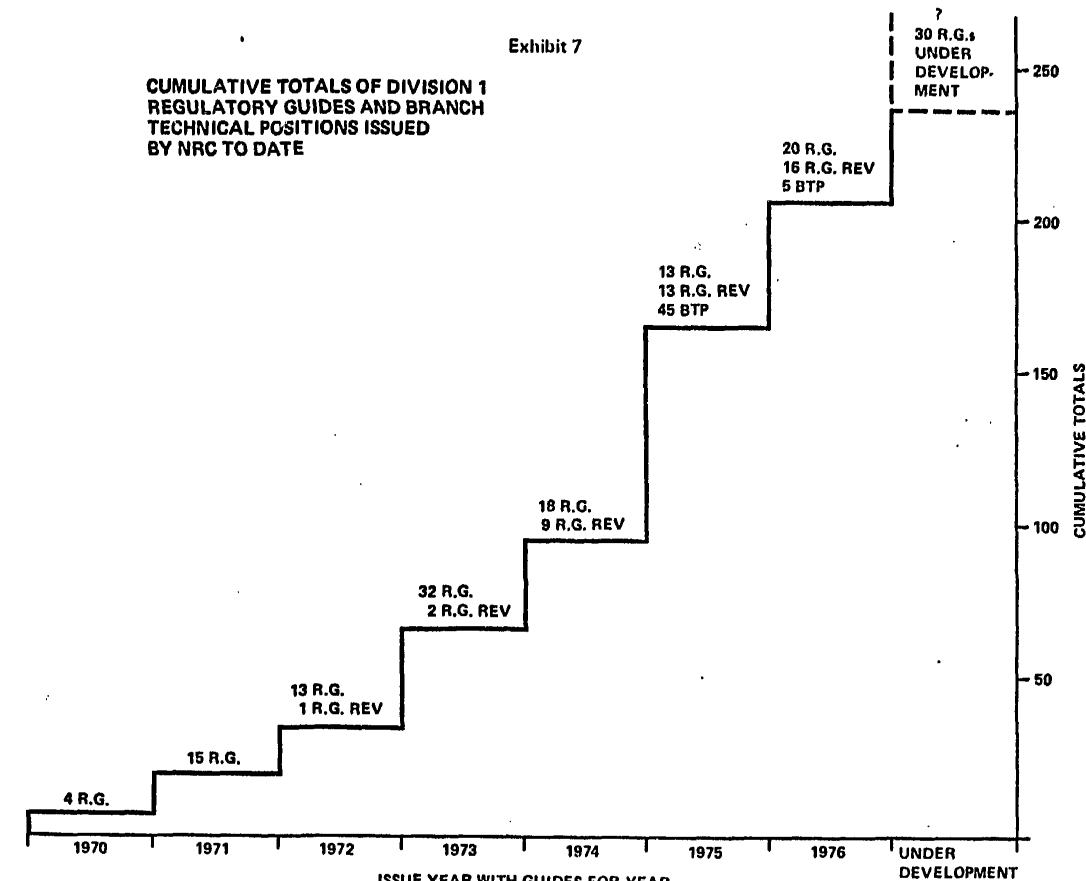

The rules were piling up. In his excellent article Why Does Nuclear Power Plant Construction Cost So Much?, Brian Potter shows this graph as an indicator of increases in regulatory requirements and thoroughness of NRC review throughout the 1970s:

It's worth nitpicking that the "regulatory guides" and "branch technical positions" shown here were not new regulations being created—they were examples the AEC/NRC gave of methods for showing that a plant meets the requirements. But my understanding is that the people building reactors mostly tried to follow them exactly (to reduce the risk of a denied application), so they are a decent proxy for how detailed and rigorous a license application would need to be. Demonstrating that a design followed all those rules and guidelines took up thousands of pages, and increasingly meant debating back and forth with the reviewers as well.

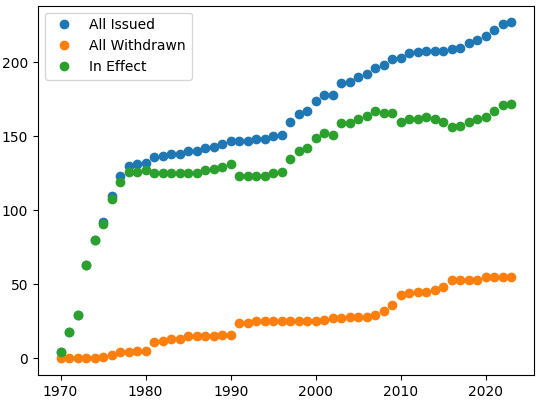

But something shifted. If we extend the plot up to the present, it looks like most of the regulatory guides were issued between 1970 and 1978, and then the number stayed flat for the next 20 years before slowly rising again. It may not be a coincidence that 1978 was also the last year any plant started construction until 2013. The regulatory ratchet did not grind to a halt—after the Three Mile Island accident in 1979, for example, the NRC created new requirements for evacuation planning and operator training. But with no new reactor designs to review, practices for applying the existing rules had a chance to stabilize. Reactor designs finally started to become more standardized around 1975, so there had been time to understand and react to any new issues those designs raised. Regulators were starting to catch up with the pace of change.

5. Quantify Risk?

In the early 1970s, the tide of public opinion had started to turn against nuclear power and against the AEC in particular. The AEC represented the military-industrial complex, and the rising environmental movement did not trust its claims about how safe reactors were. The hidden update to the WASH-740 study, with its 45,000 potential deaths, had “sat like a tumor in remission in AEC filing cabinets, waiting to metastasize.” When anti-nuclear crusaders learned of its existence, they considered it proof that the AEC was lying, and demanded its full release. Politically, the AEC needed to be able to prove that the WASH-740 scenario was vanishingly unlikely. But more than that, they needed to show that all accidents with significant consequences were unlikely. If they could calculate probabilities and consequences for every type of reactor accident that might happen, it would allow fair comparisons between the risks of nuclear power and other common dangers, including those of fossil fuels. They needed to “see all the paths to disaster.”

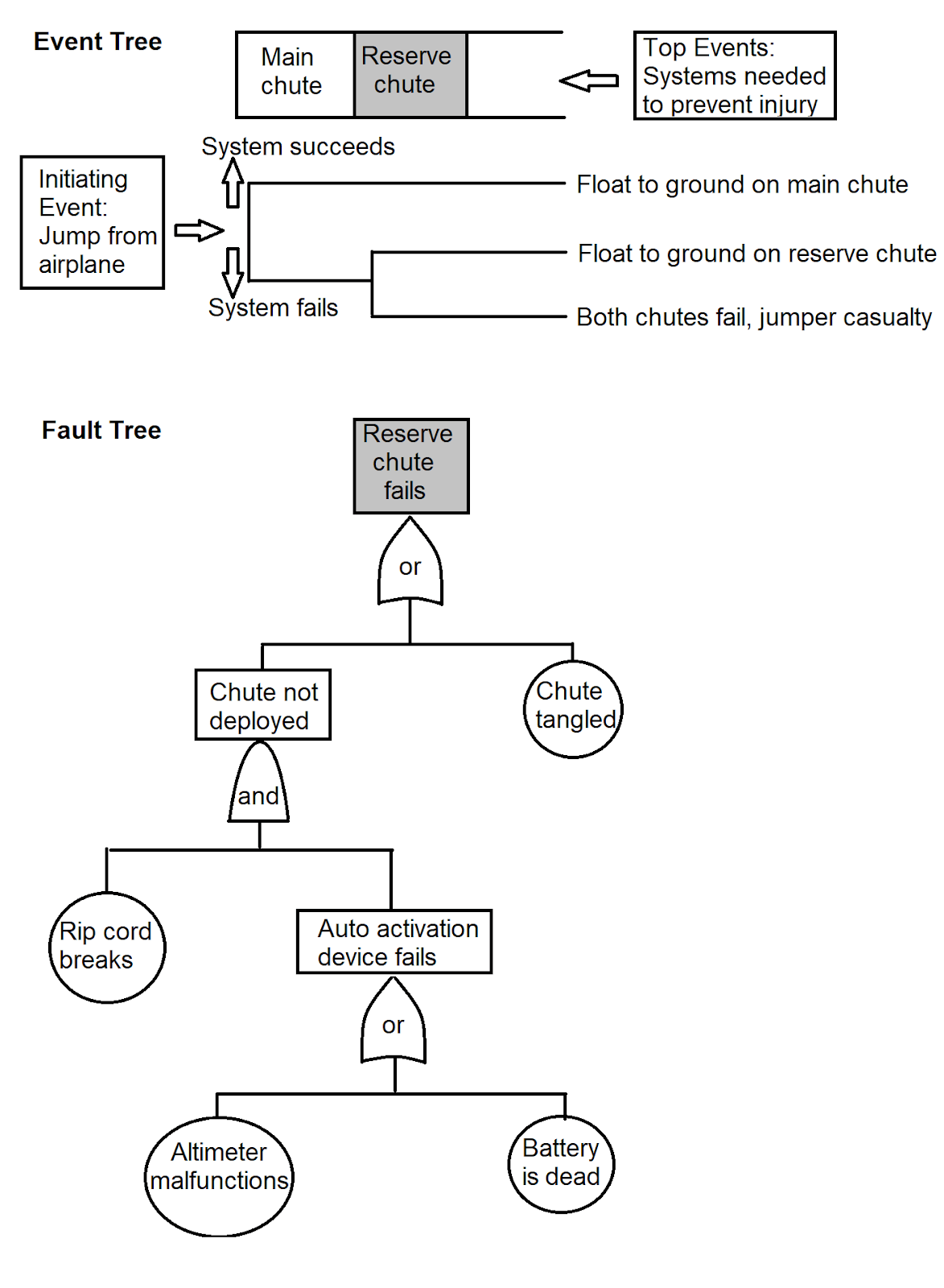

The solution proposed by Rasmussen was to calculate the probabilities for chains of safety-component failures and other factors necessary to produce a disaster. The task was mind-boggling. A nuclear power plant's approximately twenty thousand safety components have a Rube Goldberg quality. Like dominoes, numerous pumps, valves, and switches must operate in the required sequence to simply pump cooling water or shut down the plant. There were innumerable unlikely combinations of failures that could cause an accident. Calculating each failure chain and aggregating their probabilities into one number required thousands of hours of labor. On the bright side, advancements in computing power, better data, and “fault-tree” analytical methodology had made the task feasible.

WASH-1400, also called the Rasmussen Report after its lead scientist, was to be the first attempt at Probabilistic Risk Assessment. Other industries had already developed a technique called a fault tree, in which the failure probabilities of individual components could be combined, using Boolean logic (“and” gates and “or” gates), to give the failure probability of a multi-component system. The new study aggregated a number of fault trees into an “event tree,” a sort of flow chart mapping the system failures or successes during an incident to its eventual outcome. And in turn, there would be many different event trees derived from various possible ways for an accident to begin.
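To make the fault tree and event tree idea concrete, here is a minimal sketch in Python. Every number, component name, and the layout of the tree are hypothetical, chosen only for illustration; none of them come from WASH-1400. The gates assume that component failures are independent, which real plants do not always satisfy.

```python
from math import prod


def and_gate(*probs: float) -> float:
    """Output fails only if every input fails (inputs assumed independent)."""
    return prod(probs)


def or_gate(*probs: float) -> float:
    """Output fails if at least one input fails (inputs assumed independent)."""
    return 1.0 - prod(1.0 - p for p in probs)


# Hypothetical per-demand failure probabilities, for illustration only.
P_PUMP = 1e-2
P_VALVE = 1e-3
P_SHARED_POWER = 1e-4

# Fault tree: a train fails if its pump OR its valve fails; the cooling
# system fails if BOTH trains fail, OR if the shared power supply fails.
p_train = or_gate(P_PUMP, P_VALVE)
p_cooling_fails = or_gate(and_gate(p_train, p_train), P_SHARED_POWER)

# Event tree: start from an initiating event and branch on each system.
F_INITIATOR = 1e-3    # hypothetical small leaks per reactor-year
P_SCRAM_FAILS = 1e-5  # hypothetical

sequences = {
    "scram fails": F_INITIATOR * P_SCRAM_FAILS,
    "scram works, cooling fails":
        F_INITIATOR * (1 - P_SCRAM_FAILS) * p_cooling_fails,
    "scram works, cooling works":
        F_INITIATOR * (1 - P_SCRAM_FAILS) * (1 - p_cooling_fails),
}
core_damage_frequency = (
    sequences["scram fails"] + sequences["scram works, cooling fails"]
)

for name, freq in sequences.items():
    print(f"{name}: {freq:.3e} per reactor-year")
print(f"core damage frequency: {core_damage_frequency:.3e} per reactor-year")
```

Even in this toy version, the shared power supply contributes about as much to cooling failure as both redundant trains failing together, which hints at why the structure of the tree matters as much as the individual numbers.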

Confidence in the outputs followed confidence in the inputs. To make the best use of limited component failure data, they used Bayes’ Theorem to calculate uncertainty distributions for each component—a technique sometimes criticized for its “subjective probabilities.” It was common for the uncertainty band to stretch higher or lower than the point estimate by a factor of 3 to 10. The numbers, often fragile to begin with, were woven into a gossamer fabric of calculations that might collapse under the slightest pressure. And, of course, the model could only include failure modes the modelers knew about. Rasmussen, an MIT professor, warned the AEC that his study would suffer from “a significant lack of precision.” His critics argued that “[t]he multiple judgments required in developing a fault tree made ‘the absolute value of the number totally meaningless.’ Fault trees could be important safety design tools, but not to quantify absolute risk.” If the team performing the PRA was biased, even unintentionally, it would be easy for them to subtly manipulate the output.
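A rough illustration of how Bayes’ Theorem can stretch limited failure data: the sketch below uses a Beta prior on a component's failure-on-demand probability and updates it with observed demands. The conjugate Beta-binomial model, the prior, and the test counts are all my assumptions for illustration; the text above does not say which distributions the study actually used. The prior is where the “subjective probabilities” criticism bites, since it encodes judgment rather than data. The second helper just spells out what an uncertainty band of "a factor of 3 to 10" means around a point estimate.

```python
def beta_update(prior_a: float, prior_b: float,
                failures: int, demands: int) -> tuple[float, float]:
    """Posterior Beta parameters after observing failures out of demands."""
    if not 0 <= failures <= demands:
        raise ValueError("failures must be between 0 and demands")
    return prior_a + failures, prior_b + (demands - failures)


def beta_mean(a: float, b: float) -> float:
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


def factor_band(point_estimate: float, factor: float) -> tuple[float, float]:
    """Band stretching a given factor below and above a point estimate."""
    return point_estimate / factor, point_estimate * factor


# Hypothetical prior: expert judgment worth about "1 failure in 100 demands".
prior = (1.0, 99.0)

# Hypothetical evidence: 400 test demands with no failures.
posterior = beta_update(*prior, failures=0, demands=400)

print(f"prior mean:     {beta_mean(*prior):.4f}")
print(f"posterior mean: {beta_mean(*posterior):.4f}")

low, high = factor_band(beta_mean(*posterior), factor=10)
print(f"factor-of-10 band: {low:.1e} to {high:.1e}")
```

With so little data, the prior still carries a fifth of the posterior's weight, so two analysts with different priors would report noticeably different failure rates from the same test record.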

Even with its uncertainties, WASH-1400 appeared to be a win for advocates of nuclear power: it calculated a worst-case scenario of only a few thousand deaths, with a probability less than one in ten billion per year of reactor operation. Meltdowns in general had a frequency of less than one in a million reactor-years. (Less severe core damage accidents were calculated to be relatively likely at 1 in 20,000 reactor-years, but with minimal health consequences.) When it was published in 1975, the executive summary illustrated nuclear risk as 100 times lower than air travel and similar to being hit by a meteor.
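Per-reactor-year frequencies are hard to picture, so it can help to convert them into the chance of seeing at least one event across a whole fleet. The sketch below does that under a simple Poisson assumption (events are independent and the rate is constant). The fleet size and operating period are hypothetical round numbers, not figures from the report.

```python
from math import expm1


def prob_at_least_one(frequency_per_reactor_year: float,
                      reactor_years: float) -> float:
    """Poisson probability of one or more events over the given exposure."""
    return -expm1(-frequency_per_reactor_year * reactor_years)


REACTORS = 100   # hypothetical fleet size
YEARS = 40       # hypothetical operating period
exposure = REACTORS * YEARS  # total reactor-years

for label, freq in [("1 in 20,000", 1 / 20_000),
                    ("1 in a million", 1e-6)]:
    expected = freq * exposure
    chance = prob_at_least_one(freq, exposure)
    print(f"{label} per reactor-year: expect {expected:.3f} events, "
          f"{chance:.1%} chance of at least one")
```

Under these assumptions, a 1-in-20,000 frequency gives a fleet of this size roughly an 18 percent chance of at least one such event over four decades, which is far from negligible even though the per-reactor number sounds tiny.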

Debate ensued over whether these absolute frequencies had any value. The NRC (created in 1975 by splitting off the AEC’s regulatory staff) retracted the executive summary a few years later over concerns that the comparisons were misleading given the uncertainty, and more modern risk assessments do not support such extreme claims of safety. In the end, the report did little to assuage public fears.

More helpfully, WASH-1400 provided new insights about what types of accident were most probable: large pipe breaks would damage the core far less often than unlucky combinations of more mundane failures like small leaks, operator errors, maintenance problems, and station blackouts. Common-mode failures, where a shared flaw or some external cause compromised redundant systems, also made a big contribution to expected risk. Events proved these observations accurate, though incomplete, several times over the next half decade.
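One way to see why common-mode failures matter so much is the beta-factor model, a simplification widely used in later PRA practice (I am not claiming WASH-1400 used it). It assumes a fraction beta of each component's failure probability comes from a cause shared with its redundant twin. The values of p and beta below are hypothetical.

```python
def redundant_pair_failure(p: float, beta: float) -> float:
    """Failure probability of a two-train system under a beta-factor split.

    p    -- total failure probability of a single train
    beta -- fraction of p attributed to a cause shared by both trains
    """
    if not (0.0 <= p <= 1.0 and 0.0 <= beta <= 1.0):
        raise ValueError("p and beta must be between 0 and 1")
    p_common = beta * p               # one shared cause takes out both trains
    p_independent = (1.0 - beta) * p  # each train failing on its own
    # System fails if the shared cause occurs OR both trains fail separately.
    return 1.0 - (1.0 - p_common) * (1.0 - p_independent ** 2)


P_TRAIN = 1e-3  # hypothetical single-train failure probability
BETA = 0.1      # hypothetical common-cause fraction

naive = redundant_pair_failure(P_TRAIN, 0.0)
with_common = redundant_pair_failure(P_TRAIN, BETA)

print(f"fully independent trains: {naive:.2e}")
print(f"with 10% common cause:    {with_common:.2e}")
print(f"ratio: {with_common / naive:.0f}x")
```

In this toy case, attributing just a tenth of failures to a shared cause makes the redundant pair about a hundred times less reliable than the naive independent calculation suggests, so the backup buys far less than it appears to on paper.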

Things Went Wrong

In 1975, a fire started at the Browns Ferry plant in Alabama when a worker used a candle to check for air leaks in a chamber full of wiring underneath the control room (a standard practice at the time, somehow). The insulation of the control cables burned for seven hours. Like a malicious ghost in a horror movie, it caused equipment all over the plant to switch on and off at random. The operators barely managed to keep the core submerged.

A similar accident happened in 1978 at Rancho Seco in California, when someone dropped a light bulb on an instrument panel and caused a short. The shorted-out non-nuclear instrumentation (not classified as safety related) halted the feedwater flow, scramming the reactor. Incorrect indicators kept auxiliary feedwater from flowing, and the steam generators dried out, leaving nothing to dissipate heat from the reactor coolant system. But before the core could overheat, the feedwater started again on its own.

The next year, at Three Mile Island, the operators were not so lucky.

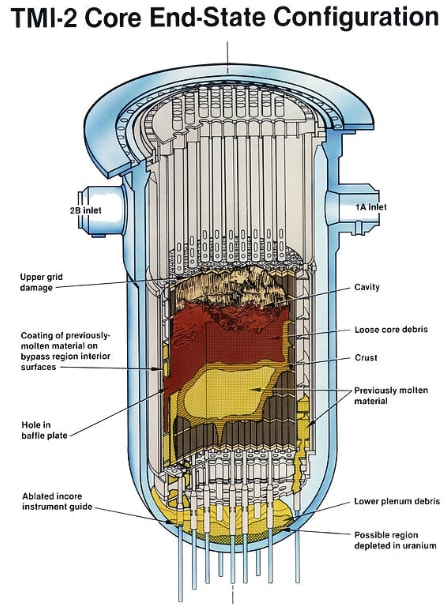

The March 1979 accident at the Three Mile Island facility destroyed a reactor, but it saved PRA. It was not the dramatic design basis accident the AEC and NRC anticipated. The Unit 2 reactor near Middletown, Pennsylvania had no large cooling pipe rupture, no catastrophic seismic event. The cause was more prosaic: maintenance. A pressurized water reactor has a "primary" piping loop that circulates cooling water through the reactor core to remove its heat (fig. 8). The primary loop transfers its heat to a non-radioactive secondary loop as the two loops interface in a "steam generator." The secondary water boils, and the steam drives the plant's turbine generator to make electricity. The steam is then condensed back to water, and feedwater pumps send it back to the steam generator to be boiled again. At Three Mile Island, this flow in the secondary loop stopped when maintenance workers caused an inadvertent shutdown of the feedwater pumps. This event was a relatively routine mishap, and the reactor scrammed as designed.

After that, however, nothing went as designed. A relief valve in the primary loop stuck open, and radioactive water leaked out into the containment and an auxiliary building. No pipe broke, but the stuck valve was equivalent to a small-break loss-of-coolant accident. One control panel indicator of the relief valve's position misled the operators into thinking the valve was closed, and they did not realize they had a leak. This malfunction had happened at other plants, but the operators at Three Mile Island were never alerted to the problem. Unaware of the stuck-open valve, the operators were confused, as primary coolant pressure fell no matter what they did. A temperature indicator that could have helped the distracted operators diagnose the problem was practically hidden behind a tall set of panels. As a result, operators misdiagnosed the problem and limited the supply of cooling water to the overheating reactor core, leading to a partial fuel meltdown. For several days, the licensee and the NRC struggled to understand and control the accident while the nation anxiously looked on. The accident had negligible health effects, but the damage to the NRC and the nuclear industry was substantial. Many of the residents who evacuated the area never again trusted nuclear experts.

[...]

[Radiation] readings taken after the accident were just a third of levels measured at the site during the 1986 Chernobyl disaster some five thousand miles away. Defense in depth had worked.

Defense in depth had “proven its worth in protecting the public, but not in protecting a billion-dollar investment. TMI-2 was a financial disaster because there had been too much focus on unlikely design basis accidents and not enough on the banality of small mishaps.” WASH-1400 had modeled only a particular Westinghouse PWR design. A similar PRA for Three Mile Island, had it existed, would likely have identified the accident sequence as a major risk.

As the PRA techniques developed in WASH-1400 proved themselves useful, they began to spread beyond just nuclear accidents. PRA “made knowable the unknown risks of rare catastrophic events” not only to nuclear plants but space missions, chemical plants, and other complex engineered systems.

6. Canceled

By the mid-1970s, orders for new reactors had collapsed. In the 70s and early 80s, utilities canceled over a hundred reactor orders, 40 percent of them before the accident at Three Mile Island. The reasons were numerous: unexpectedly flat electricity demand, skyrocketing interest rates that made large capital investments unattractive, increasing costs and delays in reactor construction, and public opposition. Some utilities lacked the technical expertise and quality control systems needed for a nuclear plant. “Our major management shortcoming,” the CEO of Boston Edison said, “was the failure to recognize fully that the operational and managerial demands placed on a nuclear power plant are very different from those of a conventional fossil-fired power plant.” Even some nearly complete plants were abandoned or converted to fossil fuels.

Privatization of electricity markets may have been another major obstacle:

Nuclear-plant construction in this country came to a halt because a law passed in 1978 [PURPA] created competitive markets for power. These markets required investors rather than utility customers to assume the risk of cost overruns, plant cancellations, and poor operation. Today, private investors still shun the risks of building new reactors in all nations that employ power markets. [ref]

The first big roadblock appeared in 1971: the Calvert Cliffs court decision, in which citizens sued the AEC over its rather lax implementation of new environmental review requirements in the National Environmental Policy Act (NEPA). The court sided with the environmentalists, ruling that the AEC had to write expansive and detailed environmental impact statements, even if it would delay plant licensing. The AEC chose not to appeal, largely due to public pressure and general lack of trust in the regulator. Instead, it paused all nuclear power plant licensing for 18 months while it revised its NEPA processes.

Meanwhile, critics said the AEC's dual responsibility of regulating and promoting nuclear energy was “like letting the fox guard the henhouse.” The idea of creating separate agencies gained support as both industry concerns and antinuclear sentiment grew, and it took on greater urgency after the Arab oil embargo and the energy crisis of 1973-1974. One of President Nixon's responses to the energy crisis was to ask Congress to create a new agency that could focus on, and presumably speed up, the licensing of nuclear plants. [Ref 1]

Congress obliged, splitting the AEC into the NRC and the Energy Research and Development Administration (later changed to the Department of Energy). But if the intent was to speed up licensing, this move was somewhere between inadequate and counterproductive. The new NRC, with the same staff and subject to the same lawsuits from the intervenors, continued to add safety requirements and demand backfits to existing plants with no cost-benefit analysis. Nascent plans for risk assessments to help limit backfits to the most dangerous issues would not pay off for years.

In a Department of Energy survey, utility executives named regulatory burden as a contributing factor in 38 of 100 decisions to cancel. But whoever was to blame, the party was over.

7. How Safe is Safe Enough?

The NRC commissioners answered this question in 1986, in their Safety Goal Policy Statement. Living within a mile of a nuclear power plant should not increase a person's risk of accidental death by more than 0.1%, and living within 10 miles should not increase someone's risk of cancer death by more than 0.1%. ("Accidental death" here means a fatality from acute radiation poisoning, which could take weeks or months.) These criteria have no legal force—they are guidelines to be considered when writing and interpreting regulations. They were carefully chosen to sound reasonable and reassuring, and yet still be achievable. Their purpose, in part, was to end the arbitrary regulatory ratchet and replace it with careful calculations of cost and benefit. A safe enough plant—one that met the goals by a healthy margin—could be exempt from backfit requirements entirely.

From what I can tell, the 0.1% cancer increase is more restrictive than the accidental death rate, partly because the baseline accident rate in the US is higher than you might expect (about one third as many accident deaths as cancer deaths). And the much-maligned Linear No Threshold (LNT) assumption exaggerates the number of cancer deaths from low-level radiation exposure. To avoid focusing too much on the highly uncertain number of cancer fatalities, the NRC also created a subsidiary goal that a significant release of radiation from any given plant should be no more likely than one in a million per year.

After the safety goals were established, licensees had to perform at least a rudimentary PRA for each power plant. The NRC placed a lot of trust in the utilities: industry associations peer reviewed their PRAs, but regulators saw only the results. In practice, rather than calculating health effects for each plant, the NRC considered the plant likely to meet the safety goals if it showed a core damage frequency less than 1 in 10,000 years. Most did, at least when considering internal (random) events; adding in earthquakes, tsunamis, tornadoes, etc. made it less clear. Later NRC studies on accident consequences showed fewer deaths than the early PRAs (but more property damage) because releases of radiation could be delayed long enough for thorough evacuations. The possibility of another meltdown began to look less like an intangible looming catastrophe and more like a manageable economic risk.
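To get a feel for what a core damage frequency of 1 in 10,000 years means across a whole fleet, here is a rough back-of-the-envelope sketch. It assumes a round fleet of 100 reactors, every plant sitting exactly at the screening value, and independent, Poisson-distributed events. These are my simplifications for illustration, not how the NRC or the book does the calculation.

```python
import math

def prob_at_least_one(cdf_per_reactor_year: float, reactors: int, years: float) -> float:
    """Chance of one or more core damage events, assuming independent Poisson events."""
    expected_events = cdf_per_reactor_year * reactors * years
    return 1.0 - math.exp(-expected_events)

CDF = 1e-4   # screening value from the text: 1 in 10,000 per reactor-year
FLEET = 100  # assumption: a round number for the size of the US fleet

expected_per_year = CDF * FLEET                 # expected events per year, fleet-wide
p_40_years = prob_at_least_one(CDF, FLEET, 40)  # chance of at least one in 40 years

print(f"Expected core damage events per year across the fleet: {expected_per_year:.3f}")
print(f"Chance of at least one event in 40 years: {p_40_years:.0%}")
```

Under those assumptions the fleet would expect about one core damage event per century, or roughly a one-in-three chance of at least one over 40 years. Plants that beat the screening value by a wide margin would bring that number down accordingly.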

8. The Rise and Strangely Recent Fall of Nuclear Energy

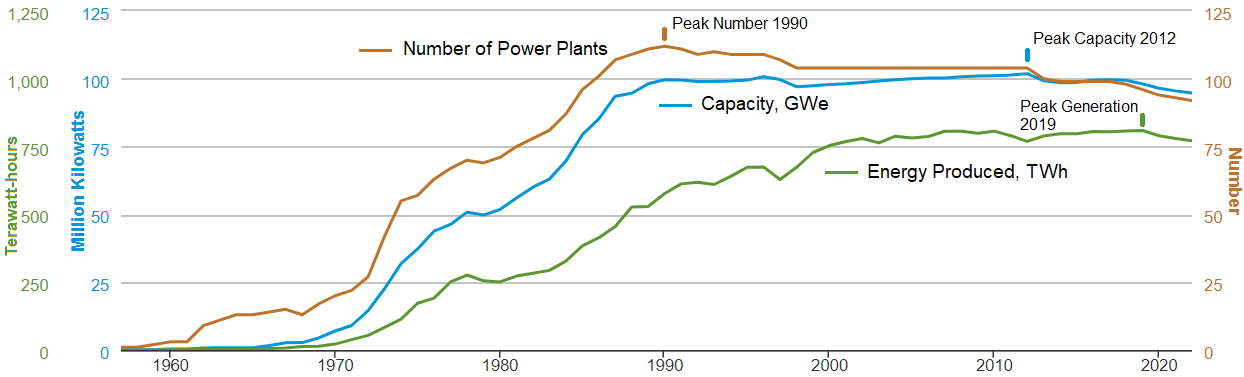

Why should we still care about nuclear power or how it’s regulated? The economic, political, and technical problems that arose in the 70s killed the dream of nuclear abundance, and left a zombie industry running on inertia. Of the plants that would eventually be completed, none started construction after 1978. Yet miraculously, 40+ years later, it still produced 20% of US electricity. Even today it is our largest source of low-carbon energy. The long series of missteps reversed itself: robbed of growth, the nuclear industry turned inward and found ways to scrape up cost savings and efficiency improvements for the plants it already had.

As construction projects of the 70s, delayed by TMI or the intervenors, slowly came online, the number of reactors peaked at 112 in 1990. Then it dropped as older, smaller plants began to retire. Those that remained fought to make up for the decline. Drawing on the conservatism built into their original license applications, they applied new safety analysis methods and advancements in computer simulation to prove that an existing plant (sometimes with small changes) could safely run at a higher power than it had been designed for. Most got license amendments that allowed them to increase their output by up to 20%.

These power uprates held the total licensed capacity fairly steady until the next round of closures began in 2013. The actual energy being sold to the grid continued to increase into the early 2000s, then stayed flat all the way up to the pandemic dip of 2020. Its time is finally up, though. Despite the recent addition of two units at Plant Vogtle in Georgia, the current downward trend will continue indefinitely unless a new wave of construction succeeds.

The trick to producing more energy with fewer and fewer power plants is to increase their capacity factor—the average power level the plant runs at, as a percentage of its maximum capacity. Gas and coal plants need expensive fuel, so when there's low demand for electricity, they save fuel by running at less than their maximum power or shutting off entirely. Intermittent sources (wind and solar) are at the whim of the weather and often have capacity factors as low as 20-25%. But a nuclear plant, with its relatively cheap fuel, can hardly save any money by shutting off when electricity prices dip—plus it takes many hours to start up again. To maximize profit, it needs to be running basically all the time; every interruption is a financial disaster. The average capacity factor in the early 1980s was an abysmal 55%.
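As a quick illustration of the capacity factor definition, the sketch below computes annual output for a hypothetical 1,000 MW plant at the 55% figure from the early 1980s and at 90%, roughly the level the fleet reached later. The plant size and function names are made up for the example.

```python
HOURS_PER_YEAR = 8760  # non-leap year

def capacity_factor(energy_mwh: float, capacity_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Fraction of the maximum possible energy that was actually generated."""
    return energy_mwh / (capacity_mw * hours)

def annual_energy_mwh(capacity_mw: float, cf: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy produced in a year by a plant running at the given capacity factor."""
    return capacity_mw * cf * hours

PLANT_MW = 1000.0  # hypothetical plant size, for illustration only

energy_1980s = annual_energy_mwh(PLANT_MW, 0.55)
energy_later = annual_energy_mwh(PLANT_MW, 0.90)
gain = energy_later / energy_1980s - 1.0  # fractional increase in output

print(f"Annual output at 55%: {energy_1980s:,.0f} MWh")
print(f"Annual output at 90%: {energy_later:,.0f} MWh")
print(f"Extra energy from the same plant: {gain:.0%}")
```

Going from 55% to 90% gets about 64% more energy out of the same hardware, which is how a shrinking fleet can keep its total output flat.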

As construction projects wound down and utilities stopped applying for new licenses, the NRC transformed from a licensing body to an oversight body. Its remaining job was to ensure the safety of the existing fleet of a hundred or so reactors. NRC staff feared that utilities had a “fossil-fuel mentality” of shaving expenses for short-term profits and neglecting investments in reliability. They pushed the industry to improve its maintenance practices, using PRA to focus on components “commensurate with their safety significance.” The utilities resisted any NRC intrusion on their management practices, but, hoping to head off costly new maintenance regulations, they launched their own initiatives for “excellence.” When the NRC did pass a rule in 1990 to measure the results of the industry’s maintenance programs, utilities responded with outrage—briefly. Implementing the Maintenance Rule using PRA insights turned out to bring cost savings to the plants through improved reliability and reduced downtime.

In a campaign to “risk-inform” its regulatory processes following the success of the Maintenance Rule, the NRC began applying PRA wherever it could figure out how. A licensee’s ability to renew its license out to 60 or 80 years, make changes to the plant, get exemptions to rules, or repair safety equipment without shutting down depended partly on risk models demonstrating acceptable safety. Over time, fewer and fewer problems emerged. The number of reactor scrams, previously expected a few times a year at each plant, dropped by 75%. Risk-informed oversight seemed to be working. And with it, capacity factors rose to nearly 90%, helping plants stay economically competitive.

One thing that could not be reformed was treatment of low-level radioactivity. Public opposition killed a proposed rule for levels Below Regulatory Concern that would have allowed the NRC to ignore exposures less than 1 millirem per year, about 1/300 of normal background radiation.

Near Miss

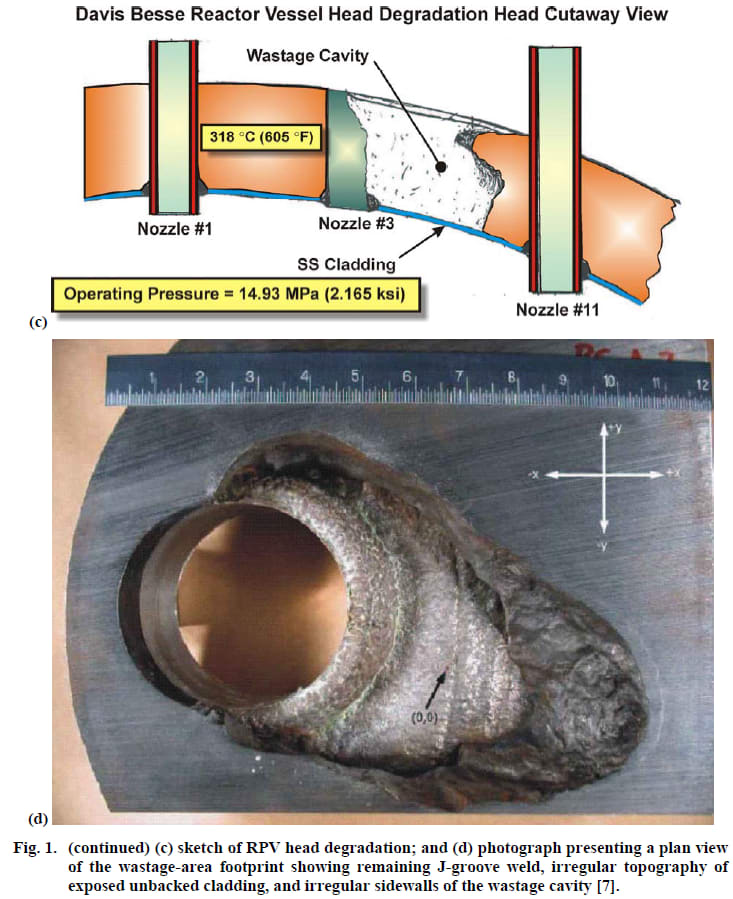

In 2002, a shockingly close call threatened to undermine all the industry’s progress on safety and public trust. A few plants had recently noticed unexpected cracks in control rod nozzles that penetrated the reactor vessel head. Regulators, concerned that a large enough crack might sever a nozzle, warned other PWRs to check for the same problem. The Davis-Besse plant in Ohio, on NRC orders and after quite a bit of stalling, shut down to inspect its control rod nozzles for cracks. Instead, workers found a hole in the vessel head the size of a pineapple. Corrosive water leaking from a crack had eaten all the way through the five-inch-thick steel, down to a stainless steel liner just ⅜ of an inch thick, holding back the 150-atmosphere operating pressure of the coolant. It was the closest any reactor had ever come to a large, or at least a medium-sized, LOCA. If the cavity had been allowed to grow, the liner would eventually have burst, leaving an opening of unknown size for coolant to escape into containment.

Davis-Besse had invoked its PRA to justify the delayed inspection. The risk, the operators claimed, was low enough to rule out the need for an immediate shutdown. The NRC agreed (though not unanimously) that they could compensate with extra monitoring and preventive maintenance on other components that would reduce risk of core damage.

The dramatic damage resulted in a record-setting fine for the owner and criminal prosecutions for negligent plant staff. But was it also an indictment of PRA as a regulatory technique? The Union of Concerned Scientists, always quick to criticize nuclear safety, argued not that use of PRA was inappropriate but that the NRC had “no spine” in the way it applied risk information, and that the industry should have invested in better PRA models.

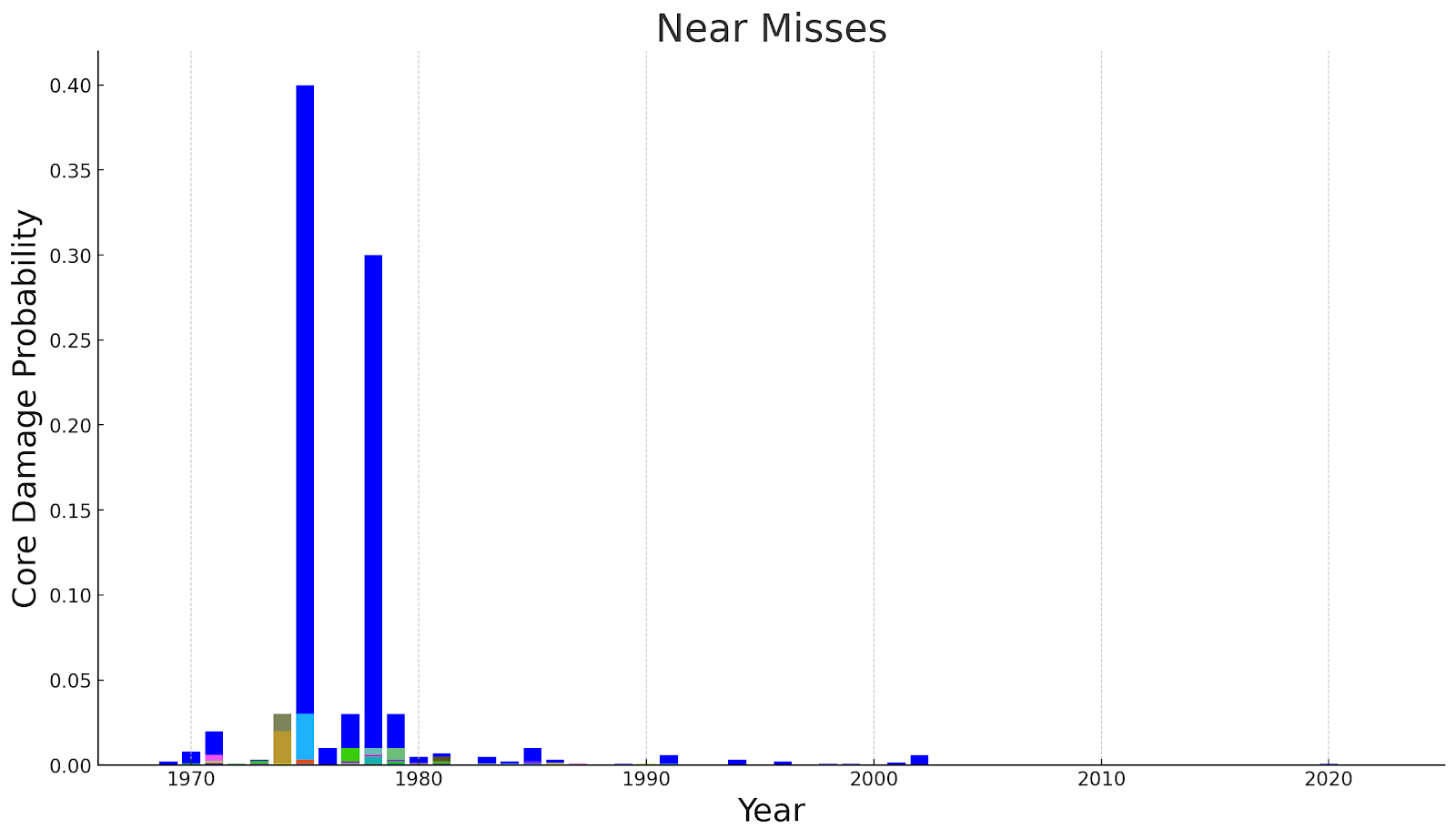

The NRC admitted to some mistakes in handling the case, and launched new efforts to improve safety culture among operators, but doubled down on using PRA for similar decisions in the future. From what they knew at the time, “[t]he increase in the probability of core damage of 5×10⁻⁶ (one in two hundred thousand) reactor years was acceptable under NRC guidelines.” Afterward, the NRC added the incident to its Accident Sequence Precursor (ASP) program, a PRA-based retrospective analysis of all dangerous events at US plants. ASP calculated in hindsight a much higher core damage probability of 6×10⁻³ for the time the hole existed. But older events looked worse.

According to the ASP analysis, the hole in the head is by far the worst US nuclear incident of the last 30 years. In the 1970s, it would have been a Tuesday.
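The two core damage probabilities quoted for the vessel head incident are easier to compare as "one in N" odds. This small sketch just does the conversion and takes the ratio. The variable names are mine, and it treats the two figures as directly comparable, which is a simplification.

```python
def one_in_n(probability: float) -> int:
    """Express a probability as 'one in N', rounded to the nearest whole N."""
    return round(1.0 / probability)

P_BEFORE = 5e-6     # increase in core damage probability estimated at the time
P_HINDSIGHT = 6e-3  # ASP estimate in hindsight, for the period the hole existed

ratio = P_HINDSIGHT / P_BEFORE  # how much worse the hindsight estimate is

print(f"Estimate at the time: one in {one_in_n(P_BEFORE):,}")
print(f"Hindsight estimate:   one in {one_in_n(P_HINDSIGHT):,}")
print(f"Hindsight estimate is about {ratio:,.0f} times higher")
```

In other words, the hindsight estimate is on the order of a thousand times higher than what the decision was based on, about one in 167 rather than one in 200,000.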

Around the same time, the Nuclear Energy Institute (a trade association) petitioned the NRC to reform a swath of old deterministic rules, foremost among them the large LOCA requirements. Three-foot-diameter pipes with four-inch-thick steel walls, it turns out, do not suddenly break in half. In fact, in 2,500 reactor-years of operation, the few small LOCAs that had occurred were either from stuck-open valves (as at Three Mile Island) or from leaking pump seals. A risk-informed approach would allow plants to use a smaller pipe break in their safety analysis and save loads of money. But the rulemaking process was repeatedly delayed and diluted, first by concerns about defense in depth, then because of the 2011 accident at Fukushima. Once the rule had been weakened to a form that only reduced the break size to 20 inches, some utilities deemed a cost-benefit analysis not worth the effort. The NRC gave up a few years later due to budget cuts. The industry continued its gradual adoption of other risk-informed changes to things like quality assurance and fire protection, but nuclear energy still lives with the specter of the fictional large LOCA that has haunted it since the beginning.

9. A Future for Nuclear?

Safe Enough? is a history, and it ends with the present. It concludes on a note of mixed optimism: opponents of PRA “won the battle and lost the war,” but are “still fighting.” The ground they’re fighting on, though, is moving. We can look ahead, at least a little bit, to see where PRA might take us next.

In the last 20 years, only two new reactors have been built in the US. For that trend to change, many pieces have to fall into place—and one of those is the regulatory environment for new reactor licensing. New designs tend to fall into two categories: passively cooled light water reactors, and advanced non-light water reactors. The passively cooled reactors, whether they are small modular reactors or large ones, are fundamentally similar to the existing US fleet, except that instead of needing pumps and generators to handle an emergency, they are expected to keep water flowing to the core through gravity alone. In PRA results, they tend to look much safer than the old designs, because they require fewer components that might fail. The advanced reactors are a more eclectic bunch, to be cooled variously by helium, supercritical CO2, or molten sodium, lead, or salt, among others. Their safety philosophies are more speculative, but in general they claim even lower risk. In some cases, existing PRA methods don’t really apply because no known combination of failures creates a plausible path to releasing large amounts of radionuclides beyond the site boundary.

Right now, licensing any of these designs requires exemptions for many old, inapplicable rules. Going forward, there are two competing ideas for how licensing should work. One, favored by the NRC and some in the nuclear industry, would base the safety case mainly on a high-quality PRA showing a very low probability of a harmful radiation release. Critics say these risk calculations are too expensive and time-consuming, even for simple designs. The other approach returns to the deterministic rules used for the older reactors, but rewrites them to apply to a wider range of technologies; PRA would provide some supporting insights. In theory, it should work better with simpler designs, bigger safety margins, and more standardization. If not done carefully, this path risks repeating the mistakes of the past: multiple unfamiliar designs, newly created rules, and no clear bar for what level of safety is adequate.

Nuclear power stalled because it needed to be both provably safe and economical, and it couldn’t be both. Its promoters repeatedly overstated the case for safety and misled the public, while its detractors blew dangers out of proportion and used the legal system to put up roadblocks. Overwhelmed regulators reacted with erratic changes and delays that raised costs, and still failed to reassure people. The weakened industry could not navigate worsening economic conditions. Today, the world has more money, more knowledge, more public support, and more demand for electrification. It also has less tolerance for pollution from coal. A nuclear renaissance has a chance at success, if it can slash construction and operating costs and still make a clear, open and convincing case that reactors are safe enough.

Obligatory disclaimer: This review contains my own thoughts on the book and surrounding context, and does not represent the views of any present or past employer.

Further Reading and References

- “A Short History of Nuclear Regulation” (NUREG/BR-0175), co-written by Thomas Wellock, is a shorter and more readable starting point than Safe Enough, while covering a lot of the same history with less attention to PRA.

- Shorting the Grid, by Meredith Angwin, is not mainly about nuclear energy, but is a good explanation of the weaknesses of energy "markets" and the problems that can arise from infrastructure privatization, a key factor in the tenuous economic viability of nuclear. https://www.goodreads.com/en/book/show/55716079

- “Ten blows that stopped nuclear power: Reflections on the US nuclear industry’s 25 lean years,” by energy industry analyst Charles Komanoff, is a blog post from the 1990s that brings a valuable perspective on the story of nuclear energy’s demise. https://www.komanoff.net/nuclear_power/10_blows.php

- Power Plant Cost Escalation: Nuclear and Coal Capital Costs, Regulation, and Economics, also by Komanoff, is a book written in 1981 and captures a great deal of detail that later accounts tend to gloss over. https://www.komanoff.net/nuclear_power/Power_Plant_Cost_Escalation.pdf

Quotes in this review with no citation are from Safe Enough.

This review was originally an entry in the 2024 ACX book review contest. Safe Enough was also reviewed in the 2023 contest, from a rather different perspective.
