Paper Summary: The Koha Code - A Biological Theory of Memory

post by jakej (jake-jenks) · 2023-12-30T22:37:13.865Z · LW · GW · 2 comments

paper link

author’s presentation

This paper introduces the Koha model, which aims to explain how information is stored within the brain and how neurons learn to become pattern detectors. It proposes that dendritic spines serve as computational units that scan for particular temporal codes, or “spike trains”, which are used to dampen or excite signals. A mechanism for learning is proposed, wherein each neuron listens for an “echo” signal that is used to reinforce the physical structure of dendritic spines that contributed to the original signal. Competitive learning then drives neurons to self-organize into emergent algorithms.

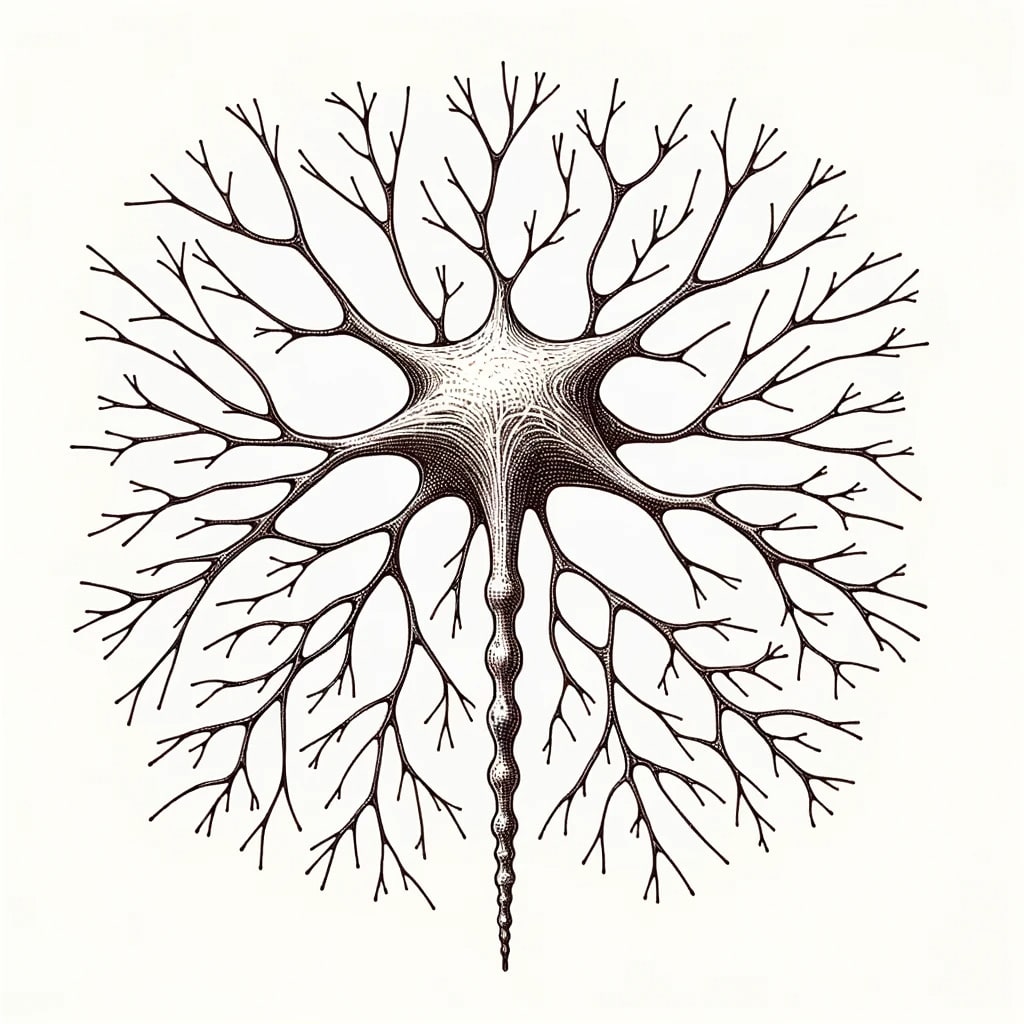

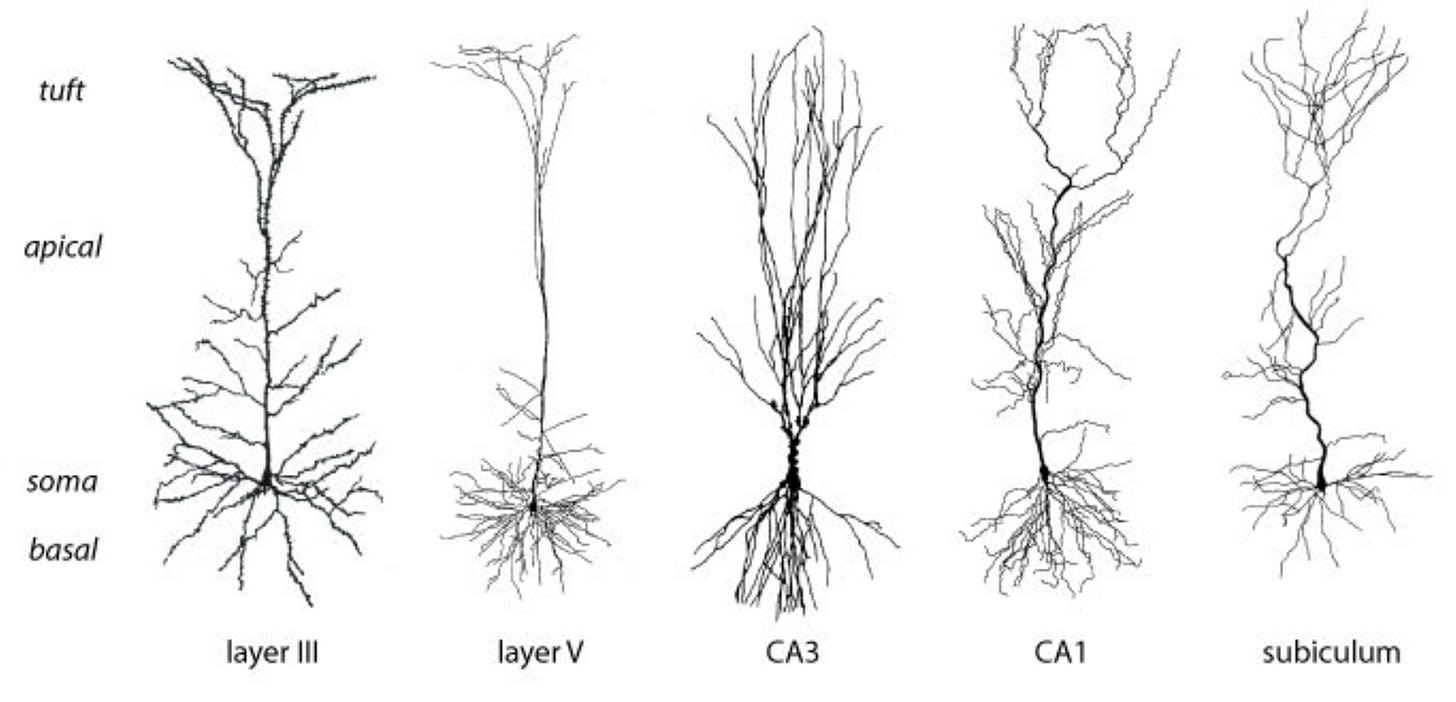

There are many different types of neurons, but they can be generally categorized as inhibitory or excitatory. One of the most common kinds of excitatory neurons is a pyramidal neuron. All mammals, birds, fish, and reptiles have pyramidal neurons.

Excitatory and inhibitory neurons can organize to form neural circuits. These circuits can use a mechanism called “lateral inhibition” to suppress the activity of other neurons. Circuits wired this way (“competitive circuits”) set up a winner-takes-all competition in which whichever competitor fires first suppresses all the others.

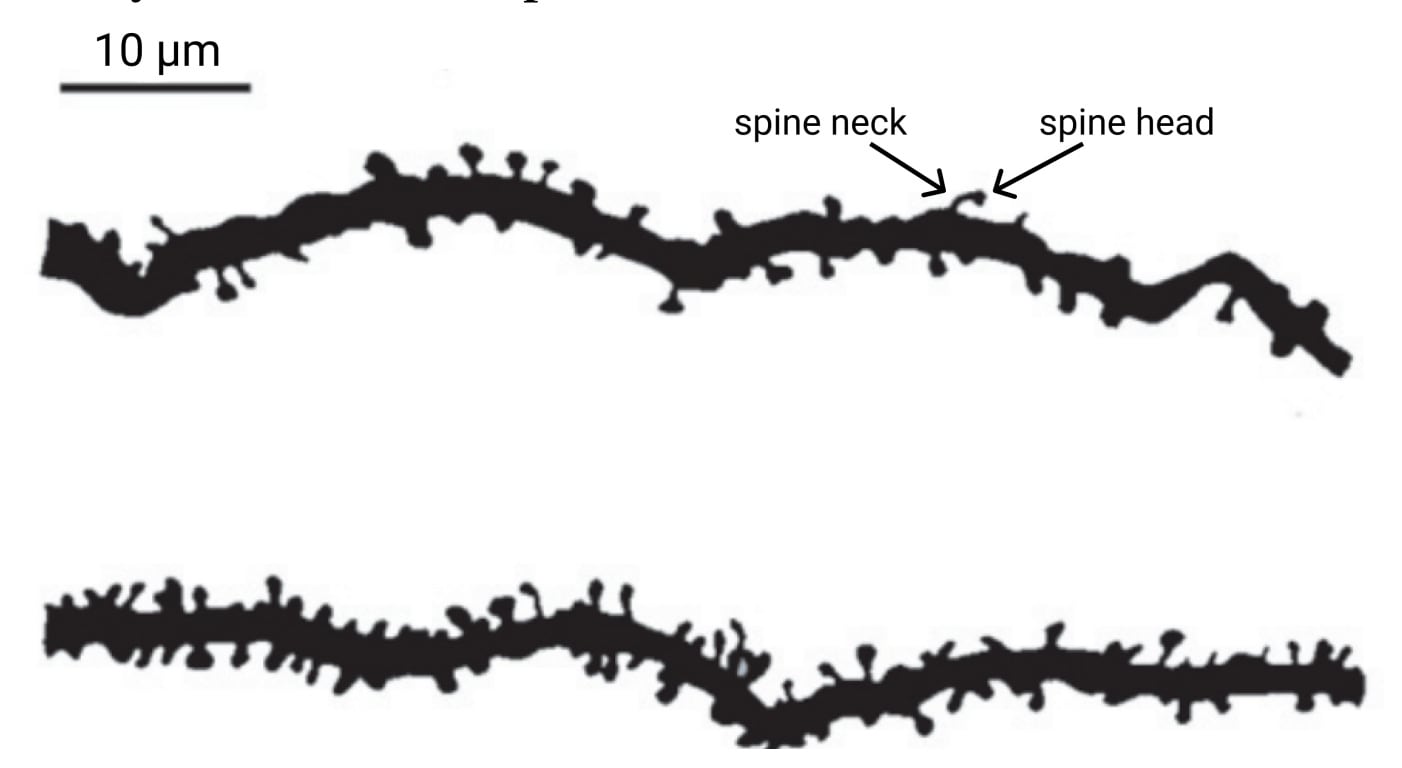

Pyramidal neurons differ from inhibitory neurons in several interesting ways. The dendrites (reminder: the inputs) of pyramidal neurons are noteworthy for being absolutely riddled with small mushroom-like protrusions called “spines”. Inhibitory neurons have virtually no such spines, and it is believed these spines allow pyramidal neurons to record information.

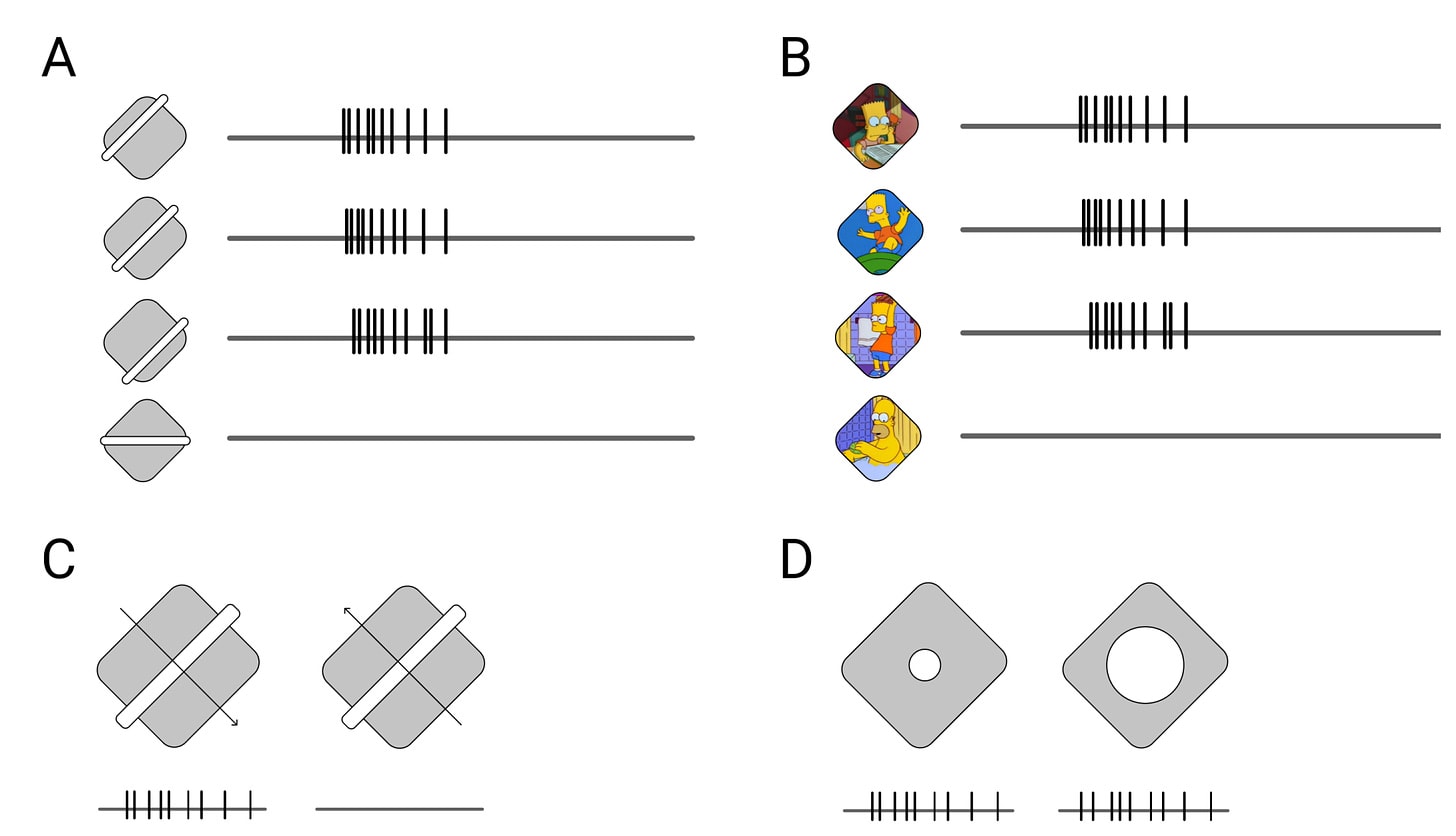

Dendritic spines also come in several different phases of development: stubby, thin, and mushroom. Human infants have almost all stubby spines, and adults have almost all mushroom spines. It is thought that mushroom spines, being the largest and most stable, serve as long-term memory and that the more fragile “thin spines” play a role in learning, with their ability to rapidly form and break their connection. Thin spines can come and go, but mushroom spines tend to last a lifetime. Furthermore, these dendritic spines can change shape in just seconds when exposed to an appropriate stimulus.

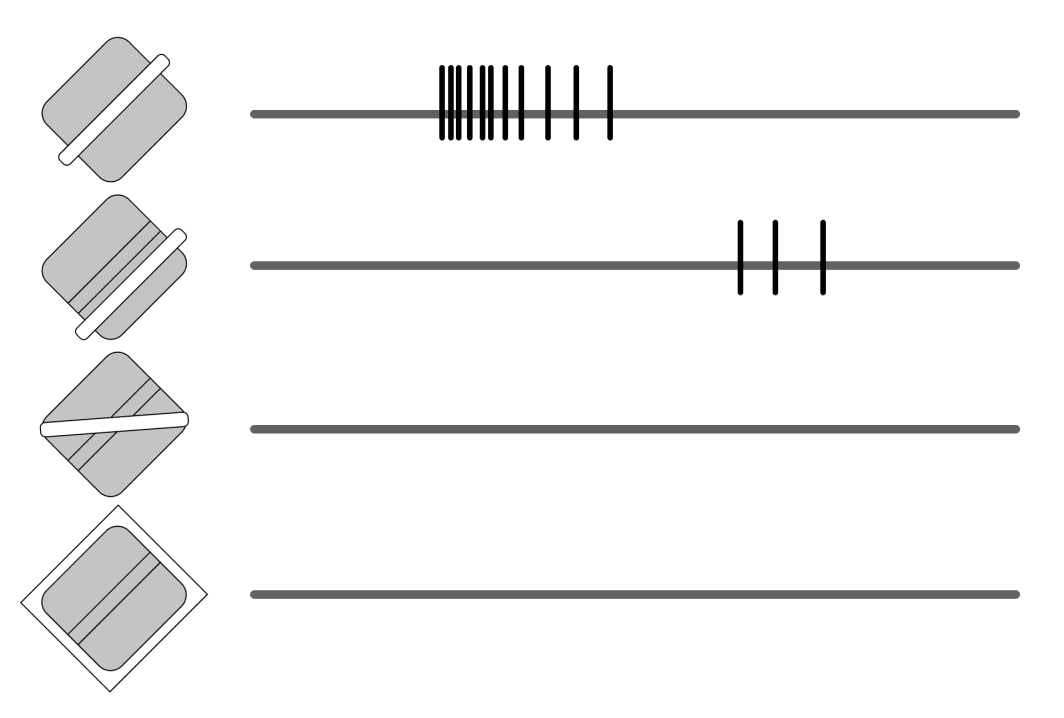

Visual neurons can be categorized as being either simple or complex cells. Simple cells fire continuously when they receive their input pattern.

Complex cells are special. They find invariances in their inputs, which lets them fire even if the input is rotated or rescaled. Some respond only to particular timing or a particular direction of motion.

Alright, background info out of the way. The Koha model proposes that dendritic spines (the mushroom things) change their shape to amplify or dampen incoming signals. The author argues that dendritic spines also have the right molecular machinery to encode a temporal pattern in the form of a sequence of “on” and “off” states. These spines then compute a distance metric between their inputs and their stored patterns to determine whether they should fire or not.
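To make the matching step concrete, here is a minimal sketch of a spine comparing an incoming spike train against its stored code. It assumes binary codes of equal length, Hamming distance as the distance metric, and a fixed tolerance; the summary above does not say which metric the paper uses, so `hamming_distance`, `spine_passes`, and `max_mismatches` are illustrative names rather than anything from the paper.

```python
def hamming_distance(stored_code, spike_train):
    """Count positions where the stored code and the input disagree."""
    if len(stored_code) != len(spike_train):
        raise ValueError("code and spike train must have the same length")
    return sum(a != b for a, b in zip(stored_code, spike_train))


def spine_passes(stored_code, spike_train, max_mismatches=1):
    """Assumed rule: the spine lets the signal through if the input is close enough."""
    return hamming_distance(stored_code, spike_train) <= max_mismatches


# 1 = "on", 0 = "off" at each time step (hypothetical example data).
stored = [1, 0, 1, 1, 0]
close_input = [1, 0, 1, 0, 0]  # differs from the stored code in one position
far_input = [0, 1, 0, 0, 1]    # differs in every position

print(spine_passes(stored, close_input))  # True
print(spine_passes(stored, far_input))    # False
```

A hard threshold is the simplest choice here; a graded response that scales with the distance would fit the "amplify or dampen" description just as well.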

Neurons are essentially in competition with other neurons receiving the same inputs because, remember, this is a winner-takes-all race. Neurons whose internal codes closely match an input let the signal pass through freely, while neurons whose codes are less similar delay it by altering the shape of their spines. This means that the best-matching neuron for a given input will fire the fastest, thereby winning the lateral inhibition race.
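The race can be sketched as a toy simulation. The assumptions here are mine, not the paper's: each neuron is a list of binary spine codes, every mismatched bit adds a fixed delay, and the neuron with the smallest total delay fires first and suppresses the rest. The names `firing_delay`, `winner_takes_all`, `base_delay`, and `delay_per_mismatch` are hypothetical.

```python
def mismatch(code, spike_train):
    """Number of time steps where a spine's code disagrees with the input."""
    return sum(a != b for a, b in zip(code, spike_train))


def firing_delay(spine_codes, spike_train, base_delay=1.0, delay_per_mismatch=0.5):
    """Assumed model: each mismatched bit across a neuron's spines adds a fixed delay."""
    total = sum(mismatch(code, spike_train) for code in spine_codes)
    return base_delay + delay_per_mismatch * total


def winner_takes_all(neurons, spike_train):
    """Return the neuron that fires first; lateral inhibition silences the others."""
    delays = {name: firing_delay(codes, spike_train) for name, codes in neurons.items()}
    winner = min(delays, key=delays.get)
    return winner, delays


# Two hypothetical neurons, each with two spines, receiving the same input.
neurons = {
    "A": [[1, 0, 1, 1], [1, 0, 1, 0]],
    "B": [[0, 1, 0, 0], [0, 1, 1, 0]],
}
winner, delays = winner_takes_all(neurons, [1, 0, 1, 1])
print(winner)  # A
print(delays)  # {'A': 1.5, 'B': 4.5}
```

Neuron A wins because its spines disagree with the input in only one position, while neuron B's disagree in seven.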

When a neuron fires, it subsequently receives a “neural backpropagation” signal. During this process, the dendritic spines that participated in activating the neuron see a surge in calcium levels not seen in nearby spines that did not participate. The author proposes that this is the signal that indicates the spine contributed to a best-matching neuron, and that it should do more of whatever it’s doing.
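A rough sketch of this reinforcement step follows. It assumes the "do more of whatever it's doing" rule can be modeled as a per-spine stability score that the echo increases only for spines that matched the input. The `Spine` class, the `stability` field, the `boost` size, and the matching tolerance are all illustrative assumptions, not details from the paper.

```python
from dataclasses import dataclass


@dataclass
class Spine:
    code: list              # stored temporal code, 1 = "on", 0 = "off"
    stability: float = 0.0  # assumed stand-in for structural reinforcement


def participated(spine, spike_train, max_mismatches=1):
    """Assumed criterion: a spine contributed if its code was close to the input."""
    mismatches = sum(a != b for a, b in zip(spine.code, spike_train))
    return mismatches <= max_mismatches


def apply_echo(winning_spines, spike_train, boost=1.0):
    """Reinforce only the spines of the winning neuron that helped it fire."""
    reinforced = []
    for index, spine in enumerate(winning_spines):
        if participated(spine, spike_train):
            spine.stability += boost
            reinforced.append(index)
    return reinforced


# Hypothetical winning neuron with three spines.
spines = [Spine([1, 0, 1, 1]), Spine([0, 1, 0, 0]), Spine([1, 0, 1, 0])]
reinforced = apply_echo(spines, [1, 0, 1, 1])
print(reinforced)                     # [0, 2]
print([s.stability for s in spines])  # [1.0, 0.0, 1.0]
```

Only the winning neuron's spines are updated, which is what ties this step to the competition: losing neurons were suppressed before firing, so they never receive the echo.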

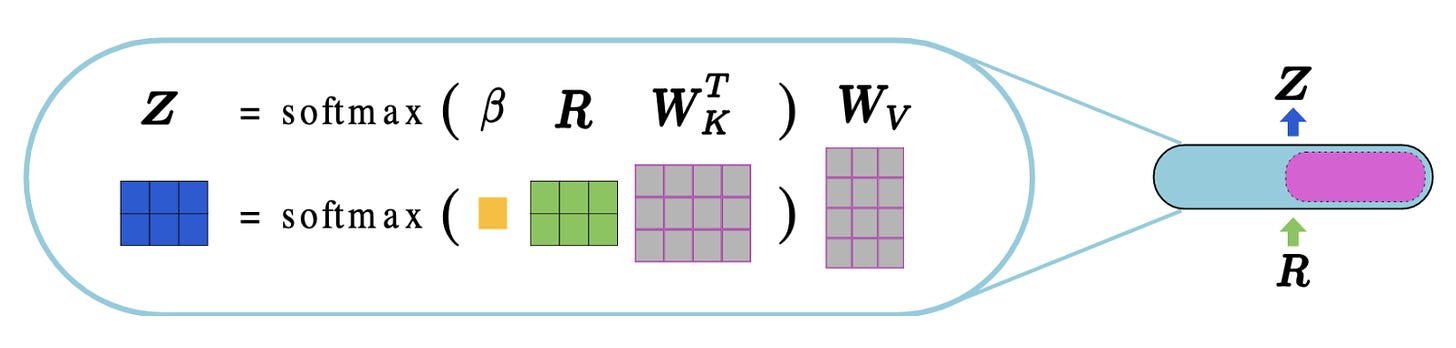

The Koha model can also be viewed as an associative memory model, like a Hopfield Network or a Transformer, but I’ll leave those details in the paper.

That’s pretty much all there is to it. The conclusion is nice and concise so I’ll just quote it here.

This work introduces two novel ideas with widespread implications for the field of neuroscience:

On a micro level, it argues for the existence of a temporal code within every dendritic spine. Just as genes are the molecular unit of heredity, the Koha code is argued to be the unit of memory. It shows how dendritic spines use these temporal codes to scan for precise spike patterns in their synaptic inputs. It describes a biologically plausible process for how signal filtration occurs within spine necks, as well as provides compelling evidence for the existence of such a mechanism within dendritic spines.

On a macro level, it explains how neurons within competitive circuits can learn to become pattern detectors, through competitive learning. In this model, the chance of a neuron to become the "winning neuron" within a competitive circuit, directly depends on the temporal codes within a neuron’s dendritic spines.

2 comments

comment by Adam Kaufman (Eccentricity) · 2023-12-31T17:28:42.172Z · LW(p) · GW(p)

Can someone with more knowledge give me a sense of how new this idea is, and guess at the probability that it is onto something?

Replies from: Ilio

comment by Ilio · 2023-12-31T18:19:17.600Z · LW(p) · GW(p)

(Epistemic status: first thoughts after first reading)

Most of it is very standard cognitive neuroscience, although with more emphasis on some things (the subdivision of synaptic boutons into silent/modifiable/stable, the notion of complex and simple cells in the visual system) than others (the critical periods, brain rhythms, iso/allo cortices, brain symmetry and circuits, etc). There are one or two bits that are wrong, but those are nitpicks or my mistake.

The idea of synapses detecting a frequency code is not exactly novel (it is the usual working hypothesis for some synapses in the cerebellum, although the exact code is not known, I think), but the idea that it’s a general principle that works because the synapse recognizes its own noise is either novel or not well known even within cognitive science (it might be a common idea among specialists of synaptic transmission, or original). I find it promising, like when Hebb had the idea for his law.