Which Model Properties are Necessary for Evaluating an Argument?

post by VojtaKovarik, Ida Mattsson (idakmattsson) · 2024-02-21T17:52:58.083Z · LW · GW · 2 comments

This post overlaps with our recent paper Extinction Risk from AI: Invisible to Science?.

Summary: If you want to use a mathematical model to evaluate an argument, that model needs to allow for dynamics that are crucial to the argument. For example, if you want to evaluate the argument that "a rocket will miss the Moon because the Moon moves", you, arguably, can't use a model where the Moon is stationary.

This seems, and is, kind of obvious. However, I think this principle has some less obvious implications, including as a methodology for determining what does and doesn't constitute evidence for AI risk. Additionally, I think some of what I write is quite debatable --- if not the ideas, then definitely the formulations. So I am making this a separate post, to decouple the critiques of these ideas/formulations from the discussion of other ideas that build on top of it.

Epistemic status: I am confident that the ideas the text is trying to point at are valid and important. And I think they are not appreciated widely enough. At the same time, I don't think that I have found the right way to phrase them. So objections are welcome, and if you can figure out how to phrase some of this in a clearer way, that would be even better.

Motivating Story

Consider the following fictitious scenario where Alice and Bob disagree on whether Alice's rocket will succeed at landing on the Moon[1]. (Unsurprisingly, we can view this as a metaphor for disagreements about the success of plans to align AGI. However, the point I am trying to make is supposed to be applicable more generally.)

Alice: Look! I have built a rocket. I am sure that if I launch it, it will land on the Moon.

Bob: I don't think it will work.

Alice: Uhm, why? I don't see a reason why it shouldn't work.

Bob: Actually, I don't think the burden of proof is on me here. And honestly, I don't know what exactly your rocket will do. But I see many arguments I could give you, for why the rocket is unlikely to land on the Moon. So let me try to give you a simple one.

Alice: Sure, go ahead.

Bob: Do I understand it correctly that the rocket is currently pointing at the Moon, and it has no way to steer after launch?

Alice: That's right.

Bob: Ok, so my argument is this: the rocket will miss because the Moon moves.[2] That is, let's say the rocket is pointed at where the Moon is at the time of the launch. Then by the time the rocket reaches the Moon's original position, the Moon will already be somewhere else.

Alice: Ok, fine. Let's say I agree that this argument makes sense. But that still doesn't mean that the rocket will miss!

Bob: That's true. Based on what I said, the rocket could still fly so fast that the Moon wouldn't have time to move away.

Alice: Alright. So let's try to do a straightforward evaluation[3] of your argument. I suppose that would mean building some mathematical model, finding the correct data to plug into it (for example, the distance to the Moon), and calculating whether the model-rocket will model-hit the model-Moon.

Bob: Exactly. Now to figure out which model we should use for this...

Evaluating Arguments, rather than Propositions

An important aspect of the scenario above is that Alice and Bob decided not to focus directly on the proposition "The rocket will hit the Moon", but instead on a particular argument against that proposition.

This approach has the important advantage that, if the argument is simple, evaluating it can be much easier than evaluating the proposition. For example, suppose the rocket will in fact miss "because" of some other reason, such as exploding soon after launch or colliding with a satellite. Then this might be beyond Alice and Bob's ability to figure out. But if the rocket is designed sufficiently poorly, the conclusion that the rocket will fail to hit the Moon might be so over-determined that it will allow for many different arguments. Given that, Alice and Bob might as well try to find an argument that is as easy to evaluate as possible (and doesn't require dealing with chemistry or satellites).

However, the disadvantage of this approach is that it cannot rule out that the proposition holds for reasons unrelated to the argument. (For example, perhaps Alice was so (extremely) lucky that, in this particular case, pointing the rocket at the Moon is enough to hit the Moon. Or maybe the aliens have decided to teleport the rocket to the Moon, making the rocket's design irrelevant. Or maybe Alice and Bob live in a universe where the Moon is actually a very powerful magnet for rockets...) Because of this disadvantage, this approach is unlikely to allow us to reach an agreement on difficult topics where people have different priors. Still, evaluating arguments seems better than nothing.

Which Models are Sufficient for Evaluating an Argument?

Let's make a few observations regarding which models might be good enough for evaluating an argument:

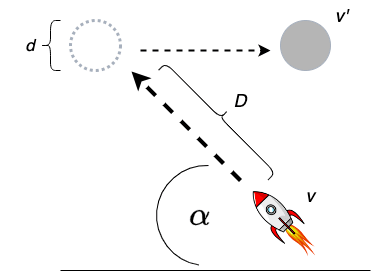

The model does not need to be fully accurate. Indeed, an example of a model that Alice and Bob are looking for is this one.

Clearly, this is not an accurate model of the real situation[4]: the universe is not two-dimensional, the Earth is not flat, the Moon does not travel parallel to it, the rocket will be subject to gravity, and so on. But it seems informative enough to evaluate Bob's argument that "the rocket cannot hit the Moon since by the time the rocket could possibly reach the Moon's original position, the Moon will have moved away".
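To make the "plug in the data and see what comes out" step concrete, here is a minimal Python sketch of a flat, two-dimensional model of this kind. It is only an illustration under stated assumptions: the names `ToyModel`, `moon_offset_at_arrival`, and `rocket_hits` are hypothetical, the rocket is assumed to fly straight up at constant speed with no gravity, and the inputs are rounded real-world values (Earth-Moon distance about 384,400 km, Moon's orbital speed about 1.02 km/s, Moon's radius about 1,737 km). The rocket speed of 11 km/s is an arbitrary illustrative choice, roughly Earth's escape velocity.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ToyModel:
    """Flat 2D world: the rocket flies straight up, the Moon drifts sideways."""

    moon_height_km: float     # distance from the launch site up to the Moon's path
    moon_speed_km_s: float    # sideways speed of the Moon
    moon_radius_km: float     # half-width of the Moon
    rocket_speed_km_s: float  # constant upward speed of the rocket (no gravity)


def moon_offset_at_arrival(model: ToyModel) -> float:
    """Sideways distance the Moon has covered when the rocket reaches its height."""
    flight_time_s = model.moon_height_km / model.rocket_speed_km_s
    return model.moon_speed_km_s * flight_time_s


def rocket_hits(model: ToyModel) -> bool:
    """The rocket hits only if the Moon's centre is still within one radius."""
    return moon_offset_at_arrival(model) <= model.moon_radius_km


# Rounded real-world inputs; the rocket speed is an illustrative assumption.
baseline = ToyModel(
    moon_height_km=384_400,
    moon_speed_km_s=1.02,
    moon_radius_km=1_737,
    rocket_speed_km_s=11,
)

print(rocket_hits(baseline))                               # slow rocket
print(rocket_hits(replace(baseline, rocket_speed_km_s=300)))  # very fast rocket
print(rocket_hits(replace(baseline, moon_speed_km_s=0)))      # stationary Moon
```

With these inputs, the 11 km/s rocket misses, while the (unrealistically fast) 300 km/s rocket hits, matching Bob's concession that a fast enough rocket could still succeed. Setting the Moon's speed to zero makes every rocket hit, which is why a stationary-Moon model cannot tell us anything about Bob's argument.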

There can be multiple useful models: While the model above can be used for evaluating the argument, a 3D simulation of the solar system with Newtonian physics would be useful as well, as would a number of other models.[5]

The general problem of finding models sufficient for evaluating arguments is beyond the scope of this post. Ideally, we would have a general procedure for finding a mathematical model that is useful for evaluating a given argument. But I don't know what such a procedure would look like; my best guess is "something something abstractions". However, for the purpose of this post, we are only interested in finding properties that are necessary for a model to be informative. In other words, we are interested in how to rule out uninformative models.

Which Model Properties are Necessary for Evaluating an Argument?

Finally, we explain what we mean by properties that are necessary for a model to be informative for "straightforwardly evaluating" an argument. The idea is that a larger argument can be made of smaller parts, and each part might correspond to one or multiple dynamics that it critically relies on. (As an obvious example, saying that "the rocket will miss because the Moon moves" critically relies on the Moon being able to move.) By necessary properties (for the model to be informative towards "straightforwardly evaluating" an argument), we will understand conditions such as "the model allows for this dynamic D", where D seems critical for some part of the argument. However, as we warned earlier, we will not necessarily focus on giving an exhaustive list of all such dynamics and corresponding properties.

The notion of necessary properties should come with two important disclaimers. The first disclaimer is that the list of properties is very sensitive to our interpretation of "straightforward evaluation". We take "straightforward evaluation of an argument" to mean something like "don't try to do anything fancy or smart; just construct a model of the dynamics described by the argument, plug in the relevant data, and see what result you get". However, there are many other ways of evaluating an argument. For example, any argument whose conclusion is false can be evaluated indirectly, by noticing (possibly through means unrelated to the argument) that the conclusion is false, which means that something about the argument must be wrong. This approach is valid, but not what we mean by "straightforward evaluation". (If anybody comes up with a better phrase that conveys the above idea more clearly than "straightforward evaluation of an argument", I would be delighted.)

The second disclaimer is that the necessary properties are very sensitive to the choice and phrasing of the argument. As an illustration, suppose that Bob's argument is phrased as (A) "the rocket will miss because the Moon moves" (which is too vaguely phrased to be useful, but that is beside the point). Then one necessary property would be that (N) "the model must contain something representing the Moon, and must allow us to talk about that thing moving". But suppose that Bob's argument was instead phrased as

- (A1) the rocket is pointing at the Moon at the moment of launch, but

- (A2) the Moon moves, and

- (A3) by the time the rocket could get to where the Moon was originally, the Moon will be gone.

Then some necessary properties would be

- (N1) the ability to talk about the direction of the rocket (for A1),

- (N2) containing something that represents the Moon, and allowing us to talk about that thing moving (for A2), and

- (N3) some way of tracking how long it takes the rocket to get to the Moon's original position, and how long it takes the Moon to move away from it (for A3).
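The pairing of argument parts with necessary properties can be written down as a simple checklist. The following Python sketch is one hypothetical way to do so: the string labels, the `REQUIRED_DYNAMICS` table, and the helper names are all made up for illustration, and describing a model as a set of "dynamics it can express" is itself a simplifying assumption.

```python
# Hypothetical labels for dynamics that a model may or may not be able to express.
REQUIRED_DYNAMICS = {
    "A1": "rocket_direction",  # N1: can talk about where the rocket points
    "A2": "moon_motion",       # N2: has a Moon, and that Moon can move
    "A3": "travel_times",      # N3: can compare rocket and Moon timings
}


def missing_properties(expressible: set[str]) -> dict[str, str]:
    """Map each argument part to the required dynamic the model cannot express."""
    return {
        part: dynamic
        for part, dynamic in REQUIRED_DYNAMICS.items()
        if dynamic not in expressible
    }


def is_ruled_out(expressible: set[str]) -> bool:
    """A model is ruled out as soon as one necessary property is missing."""
    return bool(missing_properties(expressible))


# Two hypothetical models, described only by what they can express.
direction_only_model = {"rocket_direction"}
richer_model = {"rocket_direction", "moon_motion", "travel_times"}

print(missing_properties(direction_only_model))
print(is_ruled_out(richer_model))
```

Note that the check is one-directional. A model with a missing property is ruled out, but a model that passes is merely not ruled out yet; it may still be uninformative for other reasons.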

Application: Ruling out Uninformative Models

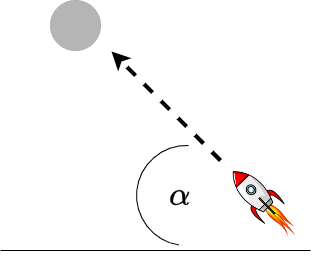

Once we have identified the necessary conditions above, we can use them to quickly rule out models that are clearly unsuitable for evaluating an argument. For example, suppose that Alice and Bob considered the following model for evaluating Bob's argument:

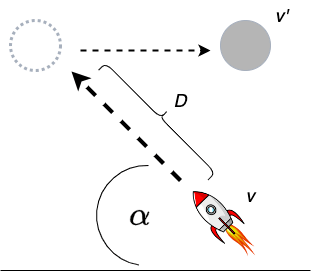

This model (arguably) allows for talking about (N1) the rocket being aimed at the Moon or not. However, it does not allow for talking about the Moon's movement, so it fails the condition (N2) above. In contrast, the following model satisfies all three conditions (N1-3):

As a result, this model does not immediately get ruled out as uninformative. Note that this is despite the model actually being uninformative (since, even if things actually worked as in the picture above, whether the rocket hits would also depend on the size of the Moon).

Conclusion

I talked about "necessary properties" for "straightforward evaluation" of arguments. The examples I gave were rather silly, and obvious. However, I think that in practice, it is easy for this issue (i.e., models being uninformative) to arise when the arguments are made less explicitly than above. Or when a model that was made for one purpose is used to make a broader argument.

One area where this often comes up for me is paper reviews, and giving early feedback on research projects. As an example, I remember quite a few cases where my high-level feedback was basically: "You aim to study X, and you seem to draw strong conclusions about it. But I think that X crucially depends on the dynamic D, which is impossible to express in your model." (I admit that it would be nice to include concrete examples. But in the interest of not postponing the post even further, I will skip these for now.)

Another important area where this comes up is evidence for AI risk. More specifically, it seems to me that basically all formal models that we have historically considered in the field of AI are uninformative for the purpose of evaluating AI risk. (Or at least for straightforward evaluation of the arguments that I am aware of.) This is because arguments for AI risk rely on dynamics such as "formulating our preferences is hard", "the AI could come up with strategies that wouldn't even occur to us", or "by default, any large disruption to the environment is very bad for us", and these dynamics are not present in the formal models that I am aware of. I will talk more about this in the next post.

Acknowledgments: Most importantly, many thanks to Chris van Merwijk, who contributed to many of the ideas here, but wasn't able to approve the text. I would also like to thank Vince Conitzer, TJ, Caspar Oesterheld, and Cara Selvarajah (and likely others I am forgetting), for discussions at various stages of this work.

[1] While this example is inspired by The Rocket Alignment Problem, the point I am trying to make is different.

[2] Here, the "because" in "the rocket will miss because the Moon moves" is meant to indicate that this is one of the reasons the rocket will miss, not that it is the key underlying cause of the rocket missing. (Presumably, that has more to do with Alice not having a clue how to build a rocket.) Suggestions for a better phrasing are welcome.

[3] I expect that most disagreement regarding this post is likely to come from different ways of interpreting the phrase "straightforward evaluation of an argument". The thing I am trying to point at is something like "don't try to do anything fancy or smart; just construct a model of the dynamics described by the argument, plug in the relevant data, and see what result you get".

I admit that it will often be possible to do "something smart", such as decomposing the argument into two independent sub-arguments and evaluating each of them separately.

As a result, any statement such as "any model that is useful for straightforwardly evaluating argument A must necessarily have properties X, Y, Z" should be understood as coming with the disclaimer "but perhaps you can do better if you modify the argument first".

[4] Note that, at least according to my random googling, actual rocket engineers mostly don't use models which include relativistic effects. That is, we do not need to use the most accurate model available; we just need one that is sufficiently accurate to get the job done.

[5] I suppose that the number of minimally-complex useful models might be limited (up to some isomorphism). But discussing that would be outside of the scope of this post.

2 comments


comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-02-22T18:55:23.786Z

I agree with the point being made here, and I think it's an important one to make. I, also, am unsure about how well this description of the problem will convince people who are not already convinced that this is an issue. I feel like often I see people putting too much faith in simplified models, and forgetting the fragility of the assumptions necessary for their model to be applicable. There are better ways to solve such a problem than just falling back to a vague outside-view approximation.

I would love it if more discussions of the future had the shape of: If A and B, then model X applies. If A and not B, then model Y applies. If not A and not B, then model Z applies. If B and not A, then we don't yet have a model. Let's think of one.

And then talking about the probability of the various states of A and B.

But instead I feel like someone has model X, and when they are told that it holds only if A and B come true, they double down on insisting that (A and B) is the most likely outcome and on refining the details of X.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-02-22T18:45:07.110Z

> for example, the distance to the Moon)

and speed of the Moon's motion