Three Levels for Large Language Model Cognition

post by Eleni Angelou (ea-1) · 2025-02-25T23:14:00.306Z · LW · GW · 0 commentsContents

1. Background 2. What are the three levels? 3. What are some examples of explanations? 4. Why a leaky abstraction? 5. Concluding thoughts None No comments

This is the abridged version of my second dissertation chapter. Read the first here [LW · GW].

Thanks to everyone I've discussed this with, and especially, M.A. Khalidi, Lewis Smith, and Aysja Johnson.

TL;DR: Applying Marr's three levels to LLMs seems useful, but quickly proves itself to be a leaky abstraction. Despite the porousness, can we agree on what kinds of explanations we'd find at each level?

1. Background

When I think about the alignment problem, I typically ask the following question: what kinds of explanations would we need to say that we understand a system sufficiently well to control it? Because I don't know the answer to that question (and the philosophy of science vortex is trying to devour me), I expect to make some progress if I look at the explanations we have available, taxonomize them, and maybe even find what they're missing. This is where David Marr's framework comes in.

2. What are the three levels?

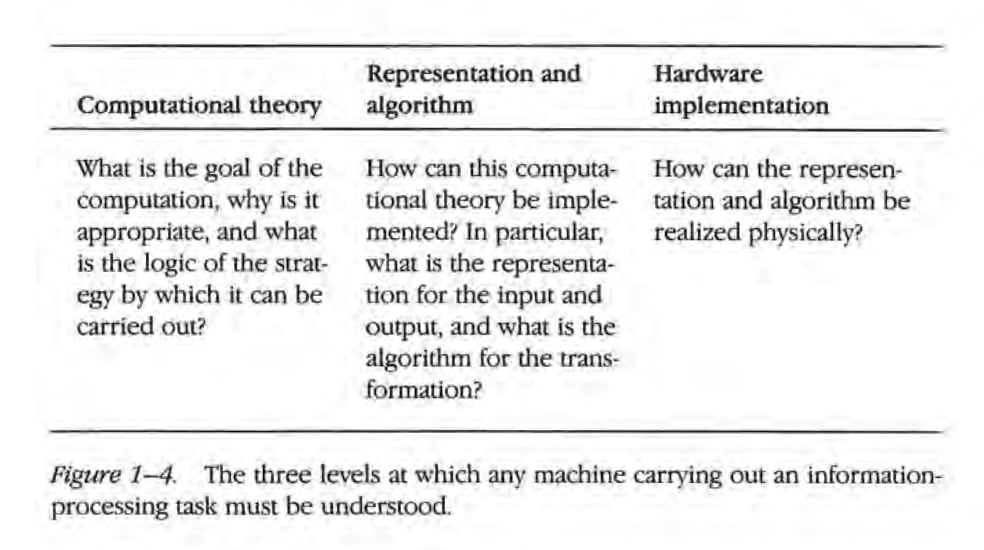

According to Marr (1982), we can understand a cognitive system through an analysis of three levels: (1) computational theory, (2) representation and algorithm, and (3) hardware implementation.

- (1) corresponds to the goal of the computation, what the exact task is.

- (2) describes the steps required to make the function happen, contains the exact series of computations of the function.

- (3) concerns the physical realization of the task in a given hardware.

Marr's problem at the time was that mere descriptions of phenomena or pointing to parts of the network could not sufficiently explain cognitive functions such as vision. Surely, finding something like the "grandma neuron" in the network was a breakthrough in its own right. But it wasn't an explanation; it didn't say anything about the how or the why behind the phenomenon. Some attempts in LLM interpretability have a similar flavor. For example, one answer to the question "where do concepts live" is to point to vectors and their directions in the neural network of the LLM.

The three levels for LLMs look as follows:

Computational theory level: what is the functional role or goal of the LLM? Commonly discussed goals include:

- Modeling semantic relations in text

- Generating text that reliably follows prompt instructions or models user preferences

- Performing the specific task requested (e.g., writing code for a website)

Algorithmic level: at least two kinds of algorithms exist in LLMs:

- Training algorithms: further divided into pre-training (learning from large-scale, unsupervised data) and fine-tuning (improving performance at specific tasks with supervised data).

- The trained model itself: a new algorithm that encapsulates the distilled learning of regularities and statistical relationships from the training data.

Implementation level: what is the physical substrate or hardware that realizes the computational processes of the LLM? Examples of hardware are hard disk drives (HDDs), central processing units (CPUs), graphics processing units (GPUs), tensor processing units (TPUs), high-bandwidth memory (HBM), and random access memory (RAM) sticks.

3. What are some examples of explanations?

At the computational level:

- Behavioral explanations try to show what the system is doing and what problem it's solving. The primary approach involves applying the intentional stance [LW · GW]. While pragmatically useful, these explanations have limitations: they depend heavily on the user and their prompt-engineering abilities; different prompting styles yield different results, sometimes elicit previously unseen capabilities, and there's no guarantee these explanations will generalize to novel conditions or future systems.

- Mathematical explanations model the system as a stochastic next-token prediction process aiming to minimize its loss function. They illustrate how the model selects outputs by assigning probabilities to tokens. A typical example is analyzing the transformer architecture to show how an input token is processed through various transformation stages.

With some hesitation, I'm willing to argue that scaling laws also offer a kind of computational level explanation since their whole point is to characterize the most efficient trade-offs between compute, data, and model size optimization dynamics. In addition to that, scaling laws explain why there is an upper bound in the model’s performance given a set of resource constraints. In that sense, they delineate a functional limit for how effective a model can be, suggesting that beyond that point, training with more compute, data, or parameters would lead to diminishing returns in the system’s performance.

At the algorithmic level:

We mostly have explanations from mechanistic interpretability (MI). There are two paradigmatic categories of MI explanations.

- Localization of cognitive abilities in the circuits of the neural network. In cases of this kind, researchers hypothesize about the functionality of a network area, then ablate that very area and observe the loss of the hypothesized functionality. This methodology mirrors an old neuroscientific concept, the localization of function in biological brains (for example, see Ferrier, 1874). It is well-known in neuroscience that targeted damage to certain brain areas can impair specific cognitive faculties such as language, memory, etc. By analogy, MI aims to understand the localization of cognitive phenomena in neural networks by systematically ablating or manipulating regions to observe changes, akin to reverse-engineering the network's architecture to trace localized functions.

- Neuron polysemanticity. Simply put, neuron polysemanticity is the phenomenon where a single neuron corresponds to many semantic entities also called concepts or features (Olah et al., 2020). In an ideal scenario where these systems are fully interpretable, each and every neuron yields one understandable concept. Unfortunately, this is not the case and hence MI researchers study polysemantic neurons. For example, there is a neuron that corresponds to cat faces, fronts of cars, and cat legs. One explanation is that neural networks are constrained by the number of neurons they have available. In other words, there are more concepts that need to be represented than the neurons of the layer that has to represent them. So, information must be packaged accordingly. This explanation is called "superposition" (Elhage et al., 2022).

At the implementation level:

These explanations typically fall into two categories.

- Reductionist explanations that aim to show how assembling components of the system appropriately leads to specific functions. One example is studying how a central processing unit (CPU), a graphics processing unit (GPU), and memory units are combined to enable parallel computations needed during training an LLM. This approach is epistemically limited since there might not be any specific signs as to what configurations of hardware pieces would yield a desired ability in an information processing system.

- Hardware limitations explanations show how physical constraints impact training or performance. Two characteristic effects in this category are floating point errors and synchronization errors. Floating-point errors appear due to rounding errors that occur because computers represent real numbers in approximation using a finite number of bits in binary form. However, not all real numbers can be represented and stored exactly in binary form, for example, 1/3 = 0.3333333.... Since floating-point numbers have finite precision, they truncate the infinite decimal expansion of 1/3 so that it is stored as approximately 0.333333343. Moreover, when performing repeated computations, the errors accumulate. In this case, adding 1/3 three times will not equal 1: 0.333333343+0.333333343+0.333333343=0.999999929. As this error accumulates, it can distort results in iterative algorithms used during LLM training or inference.

4. Why a leaky abstraction?

The three-level distinction doesn't satisfy the most ambitious vision for explaining LLMs and can be seen as a leaky abstraction for the following reasons:

- it doesn't dictate strict boundaries between levels

- it doesn't prescribe the superiority of one level over the others

- it doesn't allow to directly infer relations of emergence or reduction between levels.

However, it adopts a more modest goal: to point to directions (i.e., top-down) that may lead to causal relations or show why seeking a specific type of explanation might be epistemically infertile (e.g., the reductionism of the implementation level).

5. Concluding thoughts

Despite the leakiness, the three-level theory is pragmatically speaking the most useful, organized, flexible, and theoretically accessible tool for thinking about explanations in an otherwise chaotic research domain.

There are at least two obvious advantages:

- it removes the confusion between the descriptive and the explanatory

- it offers flexibility as LLM research evolves to adjust accordingly to new findings, to zoom in and out between granular or high-level perspectives as needed.

Highlights of what remains unresolved:

- there could be pushback on how to exactly taxonomize the different explanations

- it's unclear which level provides the most useful explanations for different purposes, especially regarding problems in AI alignment (e.g., the goal of the model)

- the framework doesn't account for how novel capabilities emerge across the levels even though the levels seem to be interconnected

- should there be a different application of the three levels for training and for inference in LLMs?

0 comments

Comments sorted by top scores.