Accountability Sinks

post by Martin Sustrik (sustrik) · 2025-04-22T05:00:02.617Z

Back in the 1990s, ground squirrels were briefly fashionable pets, but their popularity came to an abrupt end after an incident at Schiphol Airport on the outskirts of Amsterdam. In April 1999, a cargo of 440 of the rodents arrived on a KLM flight from Beijing, without the necessary import papers. Because of this, they could not be forwarded on to the customer in Athens. But nobody was able to correct the error and send them back either. What could be done with them? It’s hard to think there wasn’t a better solution than the one that was carried out; faced with the paperwork issue, airport staff threw all 440 squirrels into an industrial shredder.

[...]

It turned out that the order to destroy the squirrels had come from the Dutch government’s Department of Agriculture, Environment Management and Fishing. However, KLM’s management, with the benefit of hindsight, said that ‘this order, in this form and without feasible alternatives,* was unethical’. The employees had acted ‘formally correctly’ by obeying the order, but KLM acknowledged that they had made an ‘assessment mistake’ in doing so. The company’s board expressed ‘sincere regret’ for the way things had turned out, and there’s no reason to doubt their sincerity.

[...]

In so far as it is possible to reconstruct the reasoning, it was presumed that the destruction of living creatures would be rare, more used as a threat to encourage people to take care over their paperwork rather than something that would happen to hundreds of significantly larger mammals than the newborn chicks for which the shredder had been designed.

The characterisation of the employees’ decision as an ‘assessment mistake’ is revealing; in retrospect, the only safeguard in this system was the nebulous expectation that the people tasked with disposing of the animals might decide to disobey direct instructions if the consequences of following them looked sufficiently grotesque. It’s doubtful whether it had ever been communicated to them that they were meant to be second-guessing their instructions on ethical grounds; most of the time, people who work in sheds aren’t given the authority to overrule the government. In any case, it is neither psychologically plausible nor managerially realistic to expect someone to follow orders 99 per cent of the time and then suddenly act independently on the hundredth instance.

— Dan Davies: The Unaccountability Machine

Someone – an airline gate attendant, for example – tells you some bad news; perhaps you’ve been bumped from the flight in favour of someone with more frequent flyer points. You start to complain and point out how much you paid for your ticket, but you’re brought up short by the undeniable fact that the gate attendant can’t do anything about it. You ask to speak to someone who can do something about it, but you’re told that’s not company policy.

The unsettling thing about this conversation is that you progressively realise that the human being you are speaking to is only allowed to follow a set of processes and rules that pass on decisions made at a higher level of the corporate hierarchy. It’s often a frustrating experience; you want to get angry, but you can’t really blame the person you’re talking to. Somehow, the airline has constructed a state of affairs where it can speak to you with the anonymous voice of an amorphous corporation, but you have to talk back to it as if it were a person like yourself.

Bad people react to this by getting angry at the gate attendant; good people walk away stewing with thwarted rage.

— ibid.

A credit company used to issue plastic cards to its clients, allowing them to make purchases. Each card had the client’s name printed on it.

Eventually, employees noticed a problem: The card design only allowed for 24 characters, but some applicants had names longer than that. They raised the issue with the business team.

The answer they got was that since only a tiny percentage of people have names that long, rather than redesigning the card, those applications would simply be rejected.

You may be in perfectly good standing, but you'll never get credit. And you are not even told why. There's nobody accountable and there's nobody to complain to. A technical dysfunction got papered over with process.

Holocaust researchers keep stressing one point: Large-scale genocide was possible only by turning popular hatred, which would otherwise have discharged in a few pogroms, into a formalized administrative process.

For example, separating the Jews from the rest of the population and concentrating them at one place was a crucial step on the way to the extermination.

In Bulgaria, Jews weren't gathered in ghettos or local "labor camps", but rather sent out to rural areas to help at farms. Once they were dispersed throughout the country there was no way to proceed with the subsequent steps, such as loading them on trains and sending them to the concentration camps.

Concentrating the Jews was thus crucial to the success of the genocide. Yet the bureaucrats working on the task didn't feel like they were personally killing anybody. They were just doing their everyday, boring, administrative work.

The point becomes more salient when reading about the Nuremberg trials. Apparently, nobody was responsible for anything. Everybody was just following the process orders.

To be fair, the accused often acted on their own initiative rather than following orders. And it turns out that German soldiers faced surprisingly mild consequences for disobeying unlawful orders. So it's not as if the high-ups would have been severely hurt had they just walked away or even tried to mitigate the damage.

Yet the vague feeling of arbitrariness about the Nuremberg trials persists. Why blame these guys and not the others? There were literally hundreds of thousands involved in implementing the final solution. The feeling gets even worse when contemplating the German denazification trials of the 1960s:

Today they bring even 96-year-olds or even centenarians to justice just because they worked somewhere in an office or sat in a watchtower. But if it had been done like that in 1965, more than 300,000 German men and women would have had to be imprisoned for life for aiding and abetting murder. [...] It had to be kept under control and suppressed, because otherwise it would have been impossible to start anew. [...] There was a secretary at the Wannsee Conference and no one considered putting her in jail. And yet all these terrible orders were not typed by those who issued them. They were typed by women.

— Interview with historian Götz Aly (in German)

At first glance, this might seem like a problem unique to large organizations. But it doesn't take a massive bureaucracy to end up in the same kind of dysfunction. You can get there with just two people in an informal setting.

Say a couple perpetually quarrels about who's going to do the dishes. To prevent further squabbles they decide to split the chores on a weekly, alternating basis.

Everything works well, until one of the spouses falls ill. The dishes pile up in the kitchen sink, but the other spouse does not feel responsible for the mess. It’s not their turn. And yes, there's nobody to blame.

This is what Dan Davies, in his book The Unaccountability Machine: Why Big Systems Make Terrible Decisions — and How the World Lost Its Mind, calls "accountability sinks".

Something that used to be a matter of human judgement gets replaced by a formal process. Suddenly, nobody makes any deliberate decisions. Instead, a formal process is followed. In fact, the process may be executed on a computer, with no human involvement at all. There's nobody to blame for anything. There’s nobody to complain to when the process goes awry. Everybody’s ass is safely covered.

In any organization, the incentives to replace human judgement with process are strong. Decisions may be controversial. More often than not someone's interests are at play and any decision is going to cause at least some bitterness, resentment and pushback. Introducing an impersonal process allows the decision to be made in an automated manner, without anyone being responsible, without anyone having to feel guilty for hurting other people.

The single most important contribution of Davies' book is simply giving this phenomenon, which we all know and hate, a name.

And a name, at that, which highlights the crux of the matter, the crux that all too often goes unsaid: Formal processes may be great for improving efficiency. The decision is made once and then applied over and over again, getting the economies of scale.

Formal processes may improve safety, as when a pilot goes over a checklist before take-off. Are flight controls free and correct? Are all the doors and windows locked? And so on and so forth.

Processes also serve as repositories of institutional memory. They carry lessons from countless past cases, often unknown to the person following the process. Problems once encountered, solutions once devised, all of that is encoded into a set of steps.

But in the end, deep in the heart of any bureaucracy, the process is about responsibility and the ways to avoid it. It's not an efficiency measure, it’s an accountability management technique.

Once you grasp the idea, it’s hard to unsee it. There's a strange mix of enlightenment and dread. Of course it's about accountability sinks!

All of the popular discontent in the West today is fueled by exactly this: A growing rage at being trapped in systems that treat people not as humans, but as cogs in a machine. Processes, not people, make the decisions. And if the process fails you, there's no one to turn to, no one to explain and no one to take responsibility.

This is why even the well-off feel anxious and restless. We may have democracy in name, but if the systems we interact with, be it the state or private companies, surrender accountability to desiccated, inhuman processes and give us no recourse, then democracy is just a hollow concept with no inner meaning.

You can't steer your own life anymore. The pursuit of happiness is dead. Even your past achievements can be taken away from you by some faceless process. And when that happens, there’s no recourse. The future, in this light, begins to feel less hopeful and more ominous.

It’s eerie how much of today’s political unrest begins to make sense through this lens.

The backlash against experts? Understandable. After all, they’re seen as the architects of these inhuman systems. The skepticism toward judges? It fits. They often seem more devoted to procedure than to justice. Even the growing tolerance for corruption starts to look different. Yes, it’s bad, but at least it’s human. A decision made by someone with a name and a face. Someone you might persuade, or pressure, or hold to account. A real person, not an algorithm.

And every time you’re stuck on the phone, trapped in an automated loop, listening to The Entertainer for the hundredth time or navigating endless menus while desperately trying to reach a human being who could actually help, the sense of doom grows a bit stronger.

But let's not get carried away.

First, as already mentioned, formal processes are, more often than not, good and useful. They increase efficiency and safety. They act as a store of tacit organizational knowledge. Getting rid of them would make society collapse.

Second, limiting accountability is often exactly the thing you want.

Take the institution of academic tenure. By making a scientist essentially unfireable, it grants them the freedom to pursue any line of research, no matter how risky or unconventional. They don’t need to justify their work to college administrators, deliver tangible results on a schedule, or apologize for failure.

The same pattern emerges when looking at successful research institutions such as Xerox PARC, Bell Labs, or DARPA. Time and again, you find a crucial figure in the background: A manager who deliberately shielded researchers from demands for immediate utility, from bureaucratic oversight, and from the constant need to justify their work to higher-ups.

Yet again, the venture capital model of funding new companies relies on relaxed accountability for the startup founders. The founders are expected to try to succeed, but nobody holds them accountable if they do not. The risk is already priced in by the VC. Much like the Bell Labs manager, the VC firm acts as an accountability sink between the owners of the capital and the startup founders.

On October 1st, 2017, a hospital emergency department in Las Vegas faced a crisis: A mass shooting at a concert sent hundreds of people with gunshot wounds flooding into the ER at once. The staff handled the emergency admirably, violating all the established rules and processes along the way:

At that point, one of the nurses came running out into the ambulance bay and just yelled, “Menes! You need to get inside! They’re getting behind!” I turned to Deb Bowerman, the RN who had been with me triaging and said, “You saw what I’ve been doing. Put these people in the right places.” She said, “I got it.” And so I turned triage over to a nurse. The textbook says that triage should be run by the most experienced doctor, but at that point what else could we do?

Up until then, the nurses would go over to the Pyxis, put their finger on the scanner, and we would wait. Right then, I realized a flow issue. I needed these medications now. I turned to our ED pharmacist and asked for every vial of etomidate and succinylcholine in the hospital. I told one of the trauma nurses that we need every unit of 0 negative up here now. The blood bank gave us every unit they had. In order to increase the flow through the resuscitation process, nurses had Etomidate, Succinylcholine, and units of 0 negative in their pockets or nearby.

Around that time the respiratory therapist, said, “Menes, we don’t have any more ventilators.” I said, “It’s fine,” and requested some Y tubing. Dr. Greg Neyman, a resident a year ahead of me in residency, had done a study on the use of ventilators in a mass casualty situation. What he came up with was that if you have two people who are roughly the same size and tidal volume, you can just double the tidal volume and stick them on Y tubing on one ventilator.

— How One Las Vegas ED Saved Hundreds of Lives After the Worst Mass Shooting in U.S. History

As one of the commenters noted: "Amazing! The guy broke every possible rule. If he wasn't a fucking hero, he would be fired on the spot."

I used to work as an SRE for Gmail. SREs are the people responsible for the site being up and running. If there's a problem, you get alerted and it's up to you to fix it, whatever it takes.

What surprised me the most when I joined the team was the lack of enforced processes. The SREs were accountable to the higher-ups for the service being up. But other than that, they were not expected to follow any prescribed process while dealing with outages.

Yes, we used a lot of processes. But these were processes we chose for ourselves, more like guidelines or recommendations than hard-and-fast rules. And in the aftermath of an outage, adjusting the process became as much a part of the response as fixing the software itself.

There is even an explicit rule limiting the accountability of individual SREs. The postmortem, i.e. the report about an outage, should be written in a specific way:

Blameless postmortems are a tenet of SRE culture. For a postmortem to be truly blameless, it must focus on identifying the contributing causes of the incident without indicting any individual or team for bad or inappropriate behavior. A blamelessly written postmortem assumes that everyone involved in an incident had good intentions and did the right thing with the information they had. If a culture of finger pointing and shaming individuals or teams for doing the "wrong" thing prevails, people will not bring issues to light for fear of punishment.

— Site Reliability Engineering, How Google runs production systems

On February 1, 1991, a jetliner crashed into a smaller commuter propeller plane on the runway at Los Angeles International Airport. The impact killed twelve people instantly. The larger plane, which was pushing the smaller one ahead of it at a speed of one hundred and fifty kilometers per hour, caught fire after a fuel tank exploded. Passengers tried to get out of the burning plane, but not everyone succeeded. The death toll eventually rose to thirty-five.

Here's a great article from Asterisk about the event:

At the LAX control tower, local controller Robin Lee Wascher was taken off duty — as is standard practice after a crash. After hearing about the propeller, she knew she must have cleared USAir flight 1493 to land on an occupied runway. As tower supervisors searched for any sign of a missing commuter flight, Wascher left the room. Replaying the events in her mind, she realized that the missing plane was SkyWest flight 5569, a 19-seat Fairchild Metroliner twin turboprop bound for Palmdale. Several minutes before clearing the USAir jet to land, she had told flight 5569 to “taxi into position and hold” on runway 24L. But she could not recall having cleared it for takeoff. The plane was probably still sitting “in position” on the runway waiting for her instructions when the USAir 737 plowed into it from behind. It was a devastating realization, but an important one, so in an act of great bravery, she returned to the tower, pointed to flight 5569, and told her supervisor, “This is what I believe USAir hit.”

[...]

The fact that Wascher made a mistake was self-evident, as was the fact that that mistake led, more or less directly, to the deaths of 35 people. The media and the public began to question the fate of Ms. Wascher. Should she be punished? Should she lose her job? Did she commit an offense?

[...]

Cutting straight to the case, Wascher was not punished in any way. At first, after being escorted, inconsolable, from the tower premises, her colleagues took her to a hotel and stood guard outside her room to keep the media at bay. Months later, Wascher testified before the NTSB hearings, providing a faithful and earnest recounting of the events as she recalled them. She was even given the opportunity to return to the control tower, but she declined. No one was ever charged with a crime.

[...]

If you listen to the tower tapes, you can easily identify the moment Wascher cleared two planes to use the same runway. But if you remove her from the equation, you haven’t made anything safer. That’s because there was nothing special about Wascher — she was simply an average controller with an average record, who came into work that day thinking she would safely control planes for a few hours and then go home. That’s why in interviews with national media her colleagues hammered home a fundamental truth: that what happened to her could have happened to any of them. And if that was the case, then the true cause of the disaster lay somewhere higher, with the way air traffic control was handled at LAX on a systemic level.

When you read the report of the investigation, your perspective suddenly changes. Suddenly, there is no evil controller Wascher who needs to be publicly punished. Instead, there is a team of controllers who, despite all the broken radars, poor visibility and distracting duties, heroically ensure that planes do not collide day in, day out.

And if the result of Wascher giving an honest report about the incident is that a second ground radar is purchased, that the interfering lights are relocated, or that various less important auxiliary tasks are no longer performed by the flight controller in charge, the effect on air traffic safety is much bigger than what could be achieved by firing Wascher. Quite the opposite: Punishing her would have a chilling effect on other experienced controllers. At least some of them would be unwilling to take responsibility for things beyond their control and would eventually leave, only to be replaced by less experienced ones.

Some of my former colleagues at Google were part of the effort to save Obamacare after the website that people were supposed to use to sign up for the program turned out not to be working. Here's Wikipedia:

The HealthCare.gov website was launched on the scheduled date of October 1, 2013. Although the government shutdown began on the same day, HealthCare.gov was one of the federal government websites that remained open through the events. Although it appeared to be up and running normally, visitors quickly encountered numerous types of technical problems, and, by some estimates, only 1% of interested people were able to enroll to the site in the first week of its operations.

Suddenly, a website was at the center of attention of both the media and the administration, even the president himself. As Jennifer Pahlka writes in her excellent book Recoding America: Why Government Is Failing in the Digital Age and How We Can Do Better:

If the site failed, Obama’s signature policy would likely go down with it. With this threat looming, suddenly the most important policy of the administration would live or die by its implementation. It was the first time the highest priority of the White House was the performance of a website.

With support from the highest places, the people in what was to become the US Digital Service outlined a plan:

He had a straightforward two-step plan. Step one was to recruit a small team of technologists with relevant experiences and skills [to fix the website]. Through a trusted network, he reached out to a set of remarkable individuals who signed up to jump into the fire with him. Step two was to win the trust of CMS—an agency [responsible for implementation of the website] that, like all other agencies, really, was highly skeptical of people from outside and resistant to their interference. The situation was tense, but Todd made it clear that he and everyone who came with him were not there to assign blame. They were there to help. The result was the opposite of the usual response to failure. Instead of the hardening that tends to come with increased oversight—of the kind Weaver would later experience working on satellite software, further limiting what he could do—the CMS team suddenly found themselves with something they hadn’t realized they needed: a group of smart nerds they could trust. Like most other agencies, they knew how to acquire technology and technology services; after all, they’d issued those sixty separate contracts for healthcare.gov alone. But now they had people on their team who could look at the code, not the contract terms. That, it turned out, made all the difference.

— ibid.

Again, sidestepping accountability had a beneficial effect. It cut through seemingly insurmountable internal hurdles and got a stuck system moving again.

Dominic Cummings, chief advisor to Boris Johnson during the COVID crisis, recounts:

At the peak of COVID craziness in March 2020, on the day itself that the PM tested positive for CoVID, a bunch of people come into Number 10 sit around the table and we have a meeting and it’s about supplies of PPE to the NHS.

They say, “None of this PPE that we’ve ordered is going to be here until the summer.”

“But the peak demand is over the next three to four weeks.”

“Sorry, Dominic, but it’s not going to be here.”

“Why not?”

“Well, because that’s how long it takes to ship from China.”

“Why are you shipping from China?”

“Well, because that’s what we always do. We ship it from China.”

But A, we need it now and B, all of the airlines are grounded. No one’s flying anything.

“So call up the airlines, tell them that we’re taking their planes, we’re flying all the planes to China, we’re picking up all our shit, we’re bringing it back here. Do that now. Do that today. Send the planes today.”

We did that. But only the Prime Minister could actually cut through all the bureaucracy and say, Ignore these EU rules on Blah. Ignore treasury guidance on Blah. Ignore this. Ignore that. “I am personally saying do this and I will accept full legal responsibility for everything.”

By taking over responsibility, Johnson loosened the accountability of the civil servants and allowed them to actually solve the problem instead of being stuck following the rigid formal process.

Finally, consider the mother of all accountability sinks: The free market.

We want government to enforce the rule of law, to enforce the contracts and generally make sure that the market operates freely. But we also explicitly don’t want government interference in the day-to-day workings of the market beyond ensuring compliance with the law and taxes.

Much has been written about how markets act as information-processing machines, how they gather dispersed data from across society and use it to optimize the allocation of scarce resources.

Much less is known about how the lack of accountability gives entrepreneurs the ability to take huge risks. If your company fails, the blame is yours and yours only. No one will come after you. There’s no need to play it safe.

Ignoring the law of supply and demand may have been the primary cause of the failure of communist economies. But the management of every company was also accountable to the higher-ups and eventually to the communist party, which must have meant that they tried to avoid any risk at any cost. That eventually led to terrible performance in adopting new technologies (even those their own scientists had discovered) and new business practices, and to overall economic stagnation.

By now, you probably have a few ideas of your own about how accountability sinks can be creatively incorporated into institutional design.

But before I wrap up, let me make a few basic observations:

- Formal processes are mostly beneficial and they’re not going anywhere. Any complex modern society would collapse without them.

- Not every formal process is an accountability sink. A process you design and impose upon yourself doesn’t absolve you of responsibility when things go wrong. You remain accountable. On the other hand, a process imposed upon you from above often incentivizes blind adherence, even when it’s hurting the stated goals.

- Not every accountability sink leads to rigidity and cover-ups. A process can be designed in such a way as to shield those affected from the accountability, but at the same time not to impose any pre-canned solutions upon them. (E.g. blameless postmortems.)


comment by Tobias H (clearthis) · 2025-04-22T07:43:20.481Z

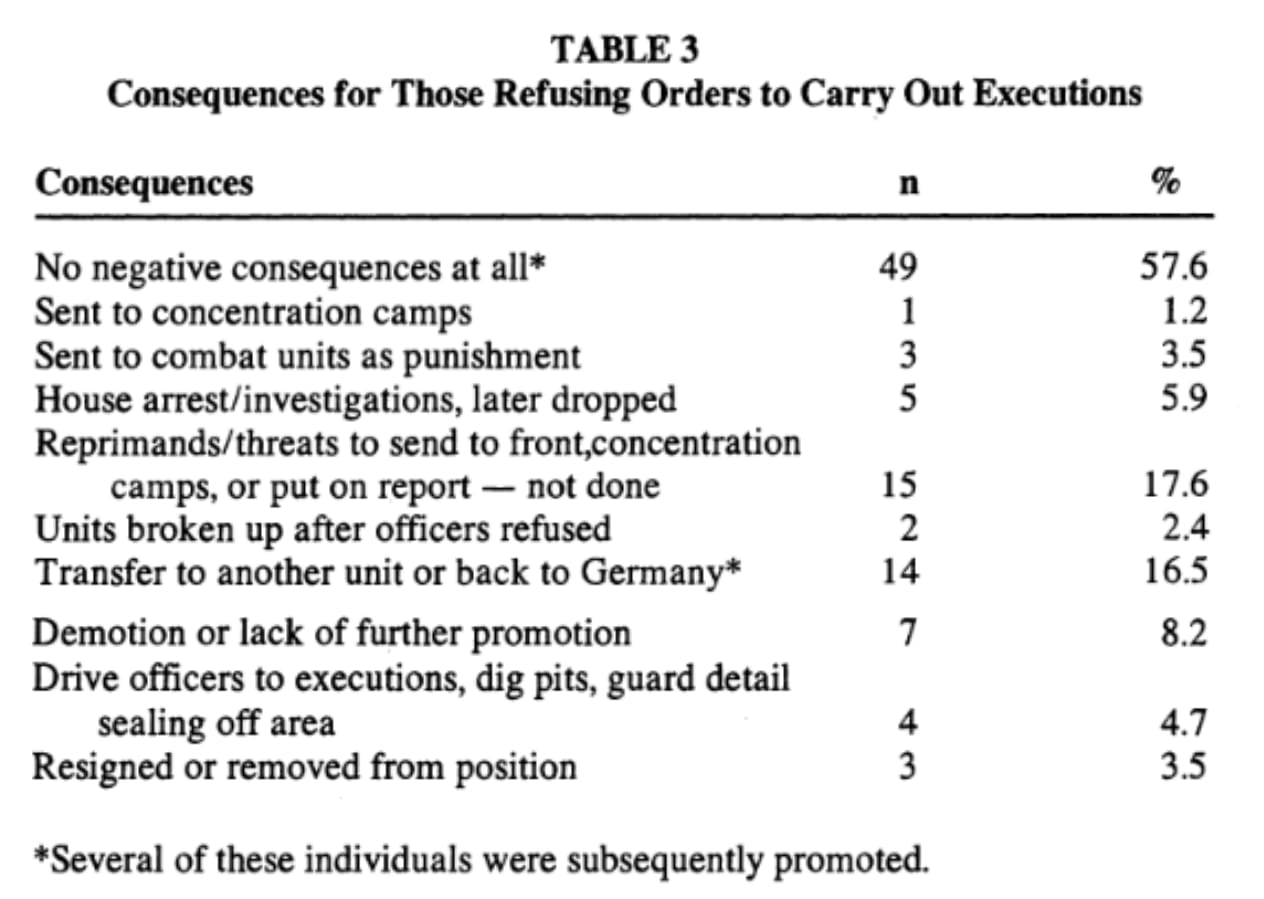

Quite an interesting paper you linked:

Conventional wisdom during World War II among German soldiers, members of the SS and SD as well as police personnel, held that any order given by a superior officer must be obeyed under any circumstances. Failure to carry out such an order would result in a threat to life and limb or possibly serious danger to loved ones. Many students of Nazi history have this same view, even to this day.

Could a German refuse to participate in the round up and murder of Jews, gypsies, suspected partisans, "commissars" and Soviet POWs - unarmed groups of men, women, and children - and survive without getting himself shot or put into a concentration camp or placing his loved ones in jeopardy?

We may never learn the full answer to this, the ultimate question for all those placed in such a quandry, because we lack adequate documentation in many cases to determine the full circumstances and consequences of such a hazardous risk. There are, however, over 100 cases of individuals whose moral scruples were weighed in the balance and not found wanting. These individuals made the choice to refuse participation in the shooting of unarmed civilians or POWs and none of them paid the ultimate penalty, death! Furthermore, very few suffered any other serious consequence!

Table of the consequences they faced: