Compromising with Compulsion

post by matejsuchy · 2021-02-25T16:43:25.000Z

Xposted from my substack.

Epistemic Status: Highly Speculative, Possibly Useful

Do I contradict myself?

Very well then I contradict myself,

(I am large, I contain multitudes.)

— some guy

Whence arises contradiction? This is an urgent question, especially for the many who balk at Whitman’s suggestion that contradicting oneself is perfectly fine. In this post, I’ll introduce a toy model that has recently suggested itself to me as a bridge between theories of cognition (Friston’s and Guyenet’s) and Jungian psychology.

Predictive coding tells us that our sensory function is the product of a Bayesian process that combines prior beliefs with raw data. The Fristonian formulation uses minimal assumptions to formalize this idea and casts agent action as an optimization algorithm that minimizes the divergence between two distributions: p, the actual distribution over the prior beliefs and raw data we experience, and t, a target distribution. But what are p and t, really?
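The post doesn’t specify which divergence is being minimized. As a minimal sketch, assume it is the Kullback–Leibler (KL) divergence, the measure that appears in the variational free-energy formulation, and that p and t live on a small discrete outcome space. The function name and the three-outcome numbers below are illustrative, not taken from Friston.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], t: Sequence[float]) -> float:
    """KL(p || t) for two discrete distributions over the same outcomes.

    Assumption: the divergence in the Fristonian objective is KL.
    Terms with p_i == 0 contribute nothing (0 * log 0 is taken as 0).
    """
    if len(p) != len(t):
        raise ValueError("p and t must cover the same outcomes")
    total = 0.0
    for p_i, t_i in zip(p, t):
        if p_i == 0.0:
            continue
        if t_i == 0.0:
            return math.inf  # p puts mass where the target puts none
        total += p_i * math.log(p_i / t_i)
    return total


# Hypothetical distributions over three coarse states of experience.
p = [0.5, 0.3, 0.2]  # what the agent currently experiences
t = [0.1, 0.1, 0.8]  # what the agent would "prefer" to experience

print(kl_divergence(p, t))  # positive: p is far from t
print(kl_divergence(t, t))  # 0.0: no divergence once the agent sits at t
```

The divergence is zero exactly when p matches t, which is the formal sense in which the agent “sits pretty” at t.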

Let’s imagine an agent subject to a simple survival pressure: he plays a repeated game in which, every iteration, he is shown an array of images. If he points to the image that cannot be construed to match the others, he gains one util; otherwise, he loses one. Assume for the sake of argument that every array consists of either n-1 rabbits and one non-rabbit duck or n-1 ducks and one non-duck rabbit (if specifying that the duck must be non-rabbit seems silly, sit tight for a paragraph or so).

Because target distributions can be associated with preferences, we infer that his target distribution is one where the images are available and usefully distinguishable in the sensory function: raw data from the images are available and matched to priors that allow the appropriate odd one out to be identified. To get there, the agent applies some RL algorithm to update his distribution p both on raw data (by updating his actions; for example, we can expect him to converge on turning his head toward the images in question) and on priors (going from a blank slate to some maximally useful tiling of the latent space). Once at t, the agent sits pretty.

Ok, so far so good. The agent starts out with p0 initialized at some random point in the space of distributions, applies an RL algorithm, and ends up at t (or at some t’ in t’s level set).

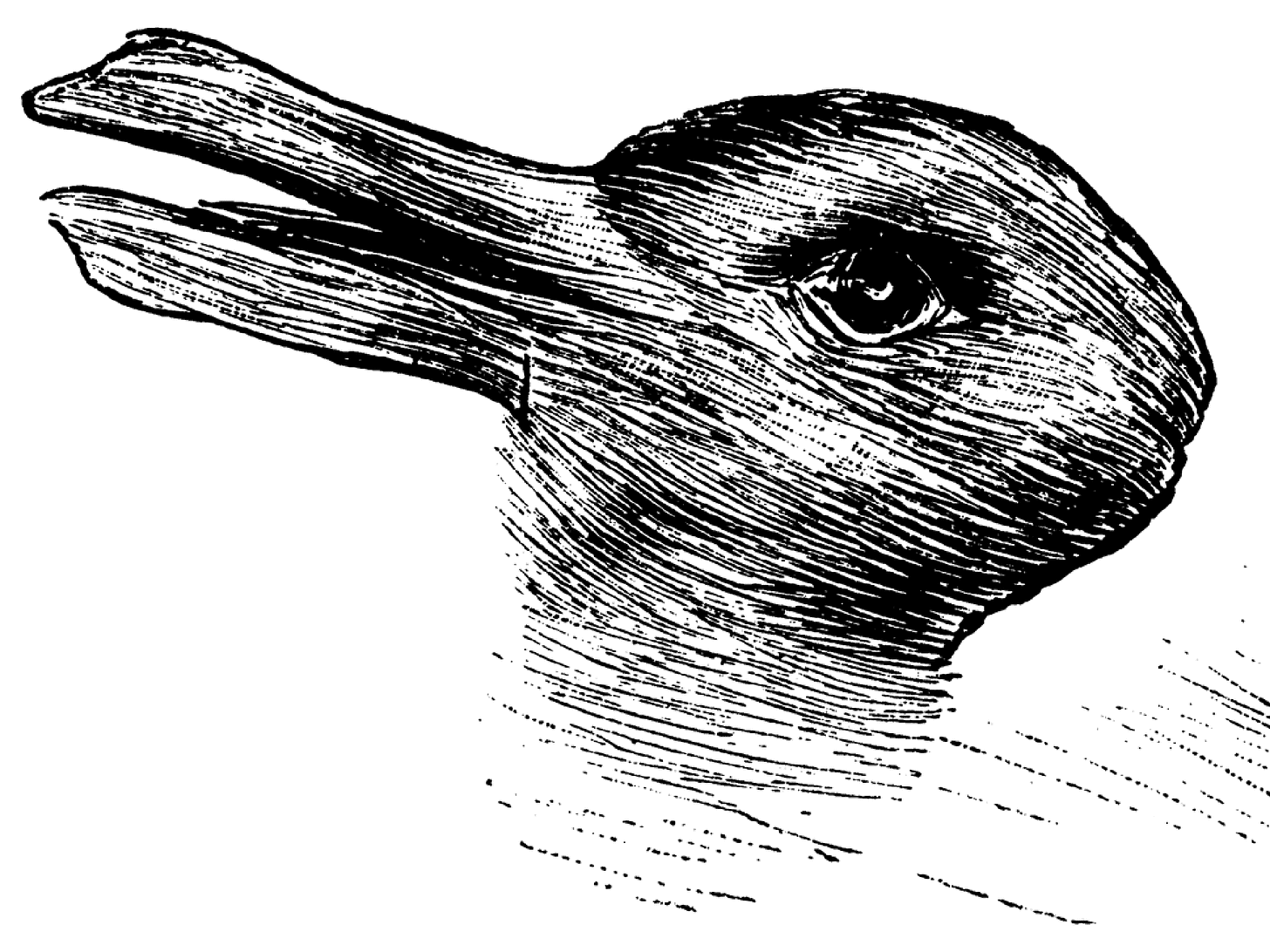
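The post leaves the learning rule unspecified. The sketch below substitutes plain gradient descent on KL(p ‖ t) for the “RL algorithm”, with p parameterized as a softmax over hypothetical logits z and p0 drawn at random. It only illustrates the shape of the trajectory from p0 to t, not how a real agent would learn from utils.

```python
import math
import random
from typing import List


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(z_i - m) for z_i in z]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p: List[float], t: List[float]) -> float:
    return sum(p_i * math.log(p_i / t_i) for p_i, t_i in zip(p, t) if p_i > 0)


def descend_to_target(t: List[float], steps: int = 4000, lr: float = 0.3,
                      seed: int = 0) -> List[float]:
    """Move p = softmax(z) from a random p0 toward t by gradient descent on KL(p || t).

    Stand-in for the post's unspecified RL algorithm. Uses the exact gradient
    dKL/dz_i = p_i * (log(p_i / t_i) - KL(p || t)).
    """
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in t]  # random logits give a random p0
    for _ in range(steps):
        p = softmax(z)
        d = kl(p, t)
        grad = [p_i * (math.log(p_i / t_i) - d) for p_i, t_i in zip(p, t)]
        z = [z_i - lr * g_i for z_i, g_i in zip(z, grad)]
    return softmax(z)


t = [0.1, 0.1, 0.8]
p_final = descend_to_target(t)
print([round(x, 3) for x in p_final], kl(p_final, t))
```

Setting that gradient to zero forces p = t, so the descent has no other resting point; different seeds (different p0) all end at the same target.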

Now, let’s make things interesting. Assume that we subject the agent to an adaptive pressure: as the experiment proceeds, we shrink the time allowed per trial, driving it asymptotically toward 0. Moreover, we start introducing versions of the famous Wittgensteinian duck-rabbit, an image that can be seen as either a duck or a rabbit, into the arrays.

What might we expect in terms of target distribution? Well, the agent will still need to take in the correct raw data and distinguish between rabbits and ducks. But now he must do so quickly. He has an obvious strategy for eliminating ambiguity efficiently:

1. Check whether the majority type is known. If it is known to be X, then for each image, check whether the raw data sufficiently conforms to the prior for X; if it does not, flag the image as the exception and conclude. If the majority type is unknown, check whether the raw data for the image in focus sufficiently conforms to each prior.
2. If this image is not the first one inspected and disagrees with the previous image (shares no compatible prior with it), then: if it is not the second image, flag it and conclude. If it is the second image, check whether the third image agrees with the first. If it does, flag the second image; if not, flag the first. In either case, conclude.
3. If, however, the image agrees, update the majority type according to the following rule and then apply the algorithm to the next image: if a prior has 2 or more total matches after this check, make it the majority type.
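Here is one way to render the three steps as runnable Python. It is a sketch under stated assumptions: each image is encoded as the hypothetical set of priors its raw data “sufficiently conforms” to (a duck-rabbit conforms to both), every array has at least three images and exactly one exception, and, because the rule in step 3 doesn’t say what happens when both priors reach two matches, only a strict leader is promoted to majority type.

```python
from typing import FrozenSet, List, Optional

RABBIT, DUCK = "rabbit", "duck"

# Hypothetical encoding: each image is the set of priors its raw data
# "sufficiently conforms" to. A duck-rabbit conforms to both.
Image = FrozenSet[str]
rabbit: Image = frozenset({RABBIT})
duck: Image = frozenset({DUCK})
duck_rabbit: Image = frozenset({RABBIT, DUCK})


def find_exception(images: List[Image], majority: Optional[str] = None) -> int:
    """Return the index of the image that can't be construed to match the rest.

    Assumes at least three images and exactly one exception.
    """
    counts = {RABBIT: 0, DUCK: 0}
    for i, img in enumerate(images):
        if majority is not None:
            # Step 1, majority known: test only against the majority prior.
            if majority not in img:
                return i
            continue
        # Step 2: with the majority unknown, disagreeing with the previous image decides.
        if i > 0 and not (img & images[i - 1]):
            if i == 1:
                # Let the third image arbitrate between the first two.
                return 1 if images[2] & images[0] else 0
            return i
        # Step 3: the image agrees, so tally every prior it is compatible with.
        for prior in img:
            counts[prior] += 1
        # Assumption: if both priors reach 2 matches (e.g. two duck-rabbits),
        # only a strict leader becomes the majority type.
        best = max(counts.values())
        leaders = [prior for prior, c in counts.items() if c == best and c >= 2]
        if len(leaders) == 1:
            majority = leaders[0]
    raise ValueError("no exception found")


print(find_exception([duck_rabbit, rabbit, rabbit, duck]))
```

Note that a duck-rabbit counts toward both priors during step 3, which is why two of them in a row leave the majority type undecided until an unambiguous image arrives.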

While identifying the majority type introduces some overhead, for sufficiently large n the majority type should become fixed early, so the overhead stays small. Now, what would happen if we shone a light before each trial to indicate the majority type? Red for rabbit and dandelion for duck. The optimal algorithm simply becomes:

For majority type X, for each image, check whether the raw data sufficiently conforms to the prior for X. If it does not, flag the image as the exception and conclude. If it does, proceed to the next image.

This saves a lot of time and will maximize our agent’s number of utils over the game. But it also introduces apparent contradiction. Because our agent only ever compares an image’s raw data to one prior, in one iteration he may assign “only rabbit” to the ambiguous image, and in another he may assign “only duck.” He has neither the luxury nor the need to ever check whether an image can match both. Time constraints and signals have led to a compression of sensory reality and an elimination of ambiguity.
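The signaled version is shorter, and it makes the apparent contradiction easy to see. In this sketch (the same hypothetical set-of-priors encoding; `flag_with_signal` is an illustrative name), the same duck-rabbit image receives a different label depending on which light was shown, because only the signaled prior is ever checked.

```python
from typing import FrozenSet, List, Tuple

RABBIT, DUCK = "rabbit", "duck"
Image = FrozenSet[str]  # hypothetical: the priors an image's raw data conforms to
rabbit: Image = frozenset({RABBIT})
duck: Image = frozenset({DUCK})
duck_rabbit: Image = frozenset({RABBIT, DUCK})


def flag_with_signal(images: List[Image], signaled: str) -> Tuple[int, List[str]]:
    """Odd-one-out when a light announces the majority type.

    Returns the flagged index and the label the agent assigned to each image
    it inspected. Each image is compared against exactly one prior.
    """
    labels: List[str] = []
    for i, img in enumerate(images):
        if signaled not in img:
            labels.append("not " + signaled)
            return i, labels
        # Only the signaled prior is checked, so an ambiguous image is
        # recorded as "only <signaled>": the other reading is never tested.
        labels.append("only " + signaled)
    raise ValueError("no exception found")


red_trial = [rabbit, duck_rabbit, rabbit, duck]      # light: red (rabbit)
dandelion_trial = [duck, duck_rabbit, duck, rabbit]  # light: dandelion (duck)

print(flag_with_signal(red_trial, RABBIT))
print(flag_with_signal(dandelion_trial, DUCK))
```

In the red trial the duck-rabbit at index 1 is recorded as “only rabbit”; in the dandelion trial the identical image is recorded as “only duck”. Both trials are scored correctly, which is exactly why the agent never notices the inconsistency.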

What does this mean for subagents? We know that social and survival environments are both highly competitive and should therefore exert strong time pressures; a difference of a second in processing speed can be crucial both when signaling one’s intelligence to mates and partners and when fending off enemy humans or predators. For each of these constrained and important games, we should expect the mind to converge to a regime in which it determines the game type via an analog of the red/dandelion light in its environment, collapses to the optimal perception/action strategy, and remains there until the game is over. This strategy may very well completely contradict the strategy the mind collapses to in another game, both in the priors it conforms experience to and in the actions it takes. Because these strategies entail vastly different behavioral and perceptual routines, they behave much like different agents; the top-level agentive Fristonian optimization routine can be decomposed, with little loss, into the problem of optimizing a rotation over subagentive Fristonian routines. The latter perspective buys us some structure, so let’s see where it takes us.

First, it should be clear that the agent must optimize both the rotation strategy and the subroutines themselves. Even if we have a “wartime” subroutine, if we haven’t optimized within it, we’ll still probably be killed by an adversary who has. We can therefore expect an agent who is optimizing for utility to inhabit critical subroutines more often than a naive look at game frequency would suggest. It’s simply worth it to regularly enter and optimize within “warrior mode” years before one finds oneself in an actual combat game, and to allocate considerable resources to doing so.

Second, because many of these game types are (mostly) invariant across time and place, we might expect bloodlines that offered agents “starter” strategies as instinctual clusters to win out over ones that didn’t. For the thousands of years in which combat meant males bludgeoning other males with non-projectile weapons, war games did not change all that much. As a result, it seems plausible that agents who spent their time refining pre-built protean strategies to suit the idiosyncrasies of their environment outcompeted those who had to train subagents from scratch. Empirically, this seems to have been the case: to find evidence of inherited subagent configurations, one needn’t look further than a local middle school. Bootcamp is less training than the awakening of a core instinct; it is simply too easy to train a human to kill skillfully for it to be otherwise.

Ok, so this basically gets us to Jungian archetypes. The male psyche has a pre-built warrior archetype to help it kill; it has an anima archetype to better model the archetypes women in its environment access. These archetypes are universal across time and space, and because they entail unique prior configurations, when we try to “see” them by filtering random noise through them, we get images that are unique to each one. This might explain the mythological clothes that many archetypes wear in the mind. But it doesn’t quite get us to a comprehensive theory of subagents. We’ve addressed the importance of training, but we haven’t motivated the commonly perceived phenomenon of subagent-related compulsion.

What is subagent-related compulsion? Guyenet gives us a good toy model: the lamprey brain. In the lamprey brain, multiple subsystems make bids on behavior, with the strength of each bid reflecting the expected fitness that the subsystem’s action/perception model would contribute given the lamprey’s world state. If a lamprey is being chased by a predator, we’d expect the “evade predator” subsystem to win the “auction” that the lamprey’s basal ganglia hold among bids from regions of its pallium (the lamprey’s analog of the cerebral cortex). The analogy of subsystems to subroutines/subagents/archetypes is obvious; we can model the previously mentioned collapse step as an auction in which subagents submit bids that communicate their adaptive fit to a given game. Only, given the importance of training, the bids can’t communicate current fitness alone; otherwise, humans would never train for war. Instead, the bids should communicate both current fitness and the anticipated importance of training. In high-stakes games (current combat), the fitness term should dominate, but in low-stakes games (daily life), the training term might. The last step is to remember Hanson and consider the adaptive advantage of keeping this whole bidding process unconscious. Consider the following Hansonian scenario:

Bob is a member of a pacifist agricultural group, which shares an island with a tribe of raiders.

There are two selection gradients to which Bob is subject: the agricultural group lavishes resources on conformist “true believers” of pacifism, and there are nearby raiders who might attack the village and kill its inhabitants. Bob’s incentive is to cheat: convince others that he is a true believer while still training for combat. The problem is that lying convincingly is very difficult, so even if he keeps his training secret, he might be found out when he unconvincingly announces his belief in pacifism. However, if Bob trained because he was submitting to a powerful unconscious urge, he could maintain his “truthful” conscious identity as a peace-lover, albeit one subject to sinful tendencies. This conscious “truthfulness” would make his cheating much harder for others to detect, so the conscious/unconscious split increases Bob’s fitness substantially. Compulsion is a feature, not a bug: the apparent contradiction between the consciously preferred agent and the unconsciously chosen one (whose training term evolves so that it wins the bidding war at the evolutionarily optimal frequency) buys enough expected fitness that some inner turmoil and feelings of “sinfulness” are well worth it.
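A toy version of the bidding from the lamprey discussion, with a fitness term and a training term, might look like the following. The linear form of the bid, the `stakes` weight, the subagent names, and every number are assumptions made for illustration; the post only says that the fitness term should dominate in high-stakes games and the training term may dominate in low-stakes ones.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Subagent:
    name: str
    fitness: Dict[str, float]  # hypothetical: expected fitness of acting now, per game
    training_value: float      # hypothetical: anticipated payoff of practicing now


def bid(agent: Subagent, game: str, stakes: float) -> float:
    """Assumed form: stakes in [0, 1] shifts weight from training to current fitness."""
    return stakes * agent.fitness.get(game, 0.0) + (1.0 - stakes) * agent.training_value


def auction(agents: List[Subagent], game: str, stakes: float) -> str:
    # Winner-take-all selection, loosely following the lamprey picture.
    return max(agents, key=lambda a: bid(a, game, stakes)).name


warrior = Subagent("warrior", {"combat": 0.9, "daily_life": 0.1}, training_value=0.6)
farmer = Subagent("farmer", {"combat": 0.1, "daily_life": 0.7}, training_value=0.2)

print(auction([warrior, farmer], "combat", stakes=1.0))      # warrior: current fitness dominates
print(auction([warrior, farmer], "daily_life", stakes=0.8))  # farmer: fits the game better
print(auction([warrior, farmer], "daily_life", stakes=0.2))  # warrior: training term wins
```

The third call is Bob’s situation in miniature: in a low-stakes daily-life game, the warrior’s training term is enough to win the auction even though the farmer fits the game better.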

Human societies have a funny habit of incentivizing conscious commitment to beliefs that are maladaptive to the individual; in fact, they must: society is a collaborative equilibrium, and defecting unpunished always pays. For talented bloodlines that can keep the chance of punishment sufficiently low, the gains to be had from defecting could create a significant adaptive gradient. Given that we observe behavioral phenomena that corroborate such a split, it seems reasonable to conclude that it did indeed evolve for these reasons.

Society + evolution = subagent compulsion.

If so, what does this hypothesis get us? Well, it justifies a kind of regimented compromise between subagents. If we try our hardest never to train a given subagent, perhaps because we think it’s a waste of time, we would expect its training term to increase steadily until it is large enough to win out compulsively in less-than-optimal situations, at which point it will drop back down and then resume its increase. If the bored 1950s housewife wants to avoid becoming “possessed” by her risk-loving, spontaneous subagent (whereupon she might compulsively cheat on her husband and jeopardize her living situation), she should make a habit of skydiving every other month. Even if she somehow resists this urge with a “chastity” bid, we might expect the enormous competing bids for chastity and spontaneity to cause problems in the subagent auction mechanism, creating a sort of stall in which none of the other subagents can win. Such a stall might even be evolutionarily adaptive; it basically extorts the consciously affirmed agent (here, the chastity-loving agent) into giving up or else. This would look like profound inner turmoil, anxiety, and/or depression, which are not necessarily a subjective upgrade over compulsion.
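The training-term dynamics described above can be simulated with a deliberately crude model. Everything here is an assumption: the training term grows linearly on every untrained day, a compulsive win happens when it exceeds a fixed `threshold` standing in for the competing bids, and either a compulsive win or a deliberately scheduled session (the skydiving) resets it to zero. The stall scenario isn’t modeled.

```python
from typing import List, Optional


def compulsive_days(days: int, growth: float, threshold: float,
                    scheduled_every: Optional[int] = None) -> List[int]:
    """Return the days on which a neglected subagent wins the auction compulsively.

    Hypothetical dynamics: the training term grows by `growth` each untrained
    day; a scheduled session (every `scheduled_every` days) or a compulsive
    win resets it to zero. A compulsive win happens when the term exceeds
    `threshold`, a stand-in for the other subagents' bids.
    """
    term = 0.0
    episodes: List[int] = []
    for day in range(1, days + 1):
        if scheduled_every is not None and day % scheduled_every == 0:
            term = 0.0  # trained deliberately, in convenient circumstances
            continue
        term += growth
        if term > threshold:
            episodes.append(day)  # the subagent "possesses" the agent
            term = 0.0
    return episodes


print(compulsive_days(365, growth=1.0, threshold=90.0))
print(compulsive_days(365, growth=1.0, threshold=90.0, scheduled_every=60))
```

With these made-up parameters, neglect produces a compulsive episode roughly every three months, while a session every 60 days (about every other month) keeps the term below the threshold entirely.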

Anecdotal experience seems to corroborate this hypothesis. It’s well known that living a “balanced” life can be protective against some emotional dysfunction, and I can think of dozens of examples where all it took to resolve a bout of anxiety or depression in a friend was a bit of subagent training. (Is this why exercise, critical for combat success, is protective against depression in a society that trains for combat very little?) I always thought the idea of “balance” was sort of silly, or at least suspiciously vague. But this model puts a decently fine edge on it: balance is when you meet your evolutionary quota for training, and do so in consciously chosen and maximally convenient circumstances.

It also explains the weird phenomenon of people getting “energized” by experiences that are objectively very cognitively intensive. An extrovert feeling rejuvenated and focused after a 4-hour party doesn’t make much sense, unless you consider the possibility that a massive “train your social signaling subagent” bid had been clobbering all the other bids in his mind all Friday and has finally quieted down. Maybe, instead of extroverts and introverts being vaguely different, it’s simply that for introverts the exertion involved dominates (so parties leave them tired), whereas extroverts have such a quickly growing and imposing “train your social signaling subagent” term that they end up, on net, more focused and rejuvenated? Extensions to sports, art, and basically any evolutionarily critical game are obvious.

I’m not aware of empirical evidence that has definitively extended the lamprey model to the human mind, so it could certainly be entirely wrong. The fact that it explains things like internal family systems and Jungian archetypes with so little structure means that it is almost certainly a gross oversimplification. However, while I’m still trying to parse details and question assumptions, applying it to my life (spending more time in social signaling games via parties, and more time training for combat via lifting and, once restrictions have lifted, hopefully martial arts) has yielded non-trivial reductions in subjective akrasia. The model’s marginal prescription has been useful for me so far (I knew “balance” was good, but would not have invested time in attending parties specifically without this model), so even if it turns out to be horribly wrong on a mechanical level, I’ll probably be glad to have arrived at it. Maybe like cures like after all?

N.B. For my first 20 posts, I will keep editing light in order to optimize for feedback quality. If you have suggestions on how a piece can be improved, especially one of these first 20, please share them with me; I will be very grateful! So, read this as essentially a first draft.

1 comment

comment by Gordon Seidoh Worley (gworley) · 2021-02-25T20:18:34.651Z

> N.B. For my first 20 posts, I will keep editing light in order to optimize for feedback quality. If you have suggestions on how a piece can be improved, especially one of these first 20, please communicate it to me — I will be very grateful! So, read this as essentially a first draft.

A few things jump to mind right away:

- Not clear why I should read this from the start. What am I going to get out of reading this? Since I'm not already familiar with your writing, I don't have trust that it's worth reading.

- Large paragraphs make this hard to scan so it wasn't easy to jump around and see if there was something in here that was interesting that warranted reading the whole thing start to end.

- Ditto for lack of exposed structure (headings).

- Everything mating-related felt overly serious for its lack of nuance. This is something to watch out for, because simplified examples about things people care a lot about will either turn them off or provoke them to object: they dislike something you said at the object level, even if your example used the abstraction correctly and only got wrong the facts through which the abstraction was explained.