Limits to Learning: Rethinking AGI’s Path to Dominance

post by tangerine · 2023-06-02T16:43:25.635Z · 4 comments

Contents

1. Natural Intelligence
1.1 Regimes of Learning
1.2 Gene-Meme Coevolution
1.3 The Myth of General Intelligence
2. Artificial Intelligence
2.1 Rationalism vs Empiricism: The Scaling Laws
2.2 The Myth of Superintelligence
2.3 Artificial Culture

Homo sapiens is a real puzzle. Many species on very different branches of the tree of life—corvids, parrots, elephants, dolphins, cephalopods and non-human primates—have converged to very similar limits of intelligence. Yet humans, despite being extremely similar genetically to other primates, have blown right past those limits. Or have they?

In recent years, interdisciplinary research has provided new insights into human intelligence, best described in Joseph Henrich's 2015 book The Secret of Our Success. Scott Alexander reviewed it in 2019 and its ideas have been mentioned here and there over the years, but the AI research community, including the AI alignment community, has neglected to appropriately absorb their implications, to its severe detriment. For example, these ideas have a connection with the recently established scaling laws. Together, they show that data sets a fundamental limit to what we call “intelligence”, no matter its substrate.

1. Natural Intelligence

In the field of psychology, as well as in the field of artificial intelligence, intelligence is treated as an inherent property of an individual. It seems intuitive, then, to try to explain this intelligence by investigating the individual; if you want to explain why Einstein was so smart, you can have a look at slices of his brain. Yet you'll find no explanation.

1.1 Regimes of Learning

There are roughly three regimes of learning.

- Biological evolution, i.e., adaptation of life forms through genetic variation and natural selection over multiple generations; a time-consuming process which nonetheless gave rise to a second, faster form of learning: a mesa-optimizer.

- Neural learning, i.e., the adaptation of an individual life form during its life. This switches learning into “second gear”. This can be subdivided into two subregimes of adaptation to observations.

  - Adaptation of synapse strength, e.g., learning to catch a ball by trial and error. (Initial synapse strengths are still subject to biological evolution, adapting at its characteristically slow pace.)

  - Adaptation of synapse signal strengths, e.g., learning to catch a ball by trial and error, modulated by pre-trained synapse strengths. This is analogous to prompting a pre-trained language model with examples, i.e., few-shot learning (although natural neural networks employ continual learning, while in artificial neural networks there is usually a clear separation between learning and inference). This is a form of individual mesa-optimization or learning-how-to-learn, just as neural learning in general is a mesa-optimizer relative to biological evolution.

- Cultural evolution, i.e., the adaptation of a group of life forms by accumulating neural patterns over multiple generations, or, framed more controversially, the adaptation of the neural patterns themselves through memetic variation and natural selection over multiple generations. This can also be subdivided into two subregimes of adaptation by individuals inside a culture.

  - Neural learning by imitation, i.e., copying things that have already been figured out by others before you. This switches learning into “third gear”. This is analogous to how we pre-train large language models. Essentially, this phase of cultural evolution modifies an individual’s optimization landscape to lead to a “more intelligent” optimum relatively quickly.

  - Neural learning by trial and error after imitation. This switches learning back into second gear, since there is nothing left to imitate. Most of us do this as individuals, but for a culture to advance significantly it needs a large number of agents, among which only the cream of the crop is actually selected for imitation by the next generation. (Note that the cream of the crop consists of the memes that are best at getting themselves imitated, not necessarily the ones that make us happier or whatever.)
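The interplay of these regimes can be caricatured in a few lines of code. This is a minimal sketch under assumptions of my own, not a model taken from Henrich or anyone else: “skill” is a single number, a lifetime of trial and error is a handful of random tweaks of which only the helpful ones are kept, and most tweaks are unhelpful. The function name `simulate` and all parameter values are hypothetical.

```python
import random


def simulate(generations: int, population: int, imitate: bool, seed: int = 0) -> float:
    """Toy model: return the best skill reached in the final generation.

    Hypothetical assumptions: skill is one number; a lifetime of trial and
    error is 5 random tweaks drawn from N(-0.5, 1), so most tweaks hurt;
    an agent keeps only the tweaks that happen to help.
    """
    rng = random.Random(seed)
    inherited = 0.0  # skill each agent starts its life with
    best = 0.0
    for _ in range(generations):
        lifetimes = []
        for _ in range(population):
            skill = inherited
            for _ in range(5):  # a lifetime of trial and error
                tweak = rng.gauss(-0.5, 1.0)
                if tweak > 0:  # keep only what worked out
                    skill += tweak
            lifetimes.append(skill)
        best = max(lifetimes)
        # With imitation, the next generation copies the cream of the crop;
        # without it, everything learned is lost when the agents die.
        inherited = best if imitate else 0.0
    return best


for pop in (10, 100):
    alone = simulate(50, pop, imitate=False)
    culture = simulate(50, pop, imitate=True)
    print(f"population={pop}: no imitation={alone:.1f}, imitation={culture:.1f}")
```

Without imitation, each generation starts from scratch, so the best agent never gets much beyond what one lifetime of trial and error allows. With imitation, each generation's best becomes the next generation's starting point, so gains accumulate, and a larger population supplies a better “cream of the crop” to copy.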

1.2 Gene-Meme Coevolution

Neural learning is much faster than biological evolution. However, cultural evolution as a whole is not faster than neural learning; indeed, the former is the latter but with the added copying of behavior between individuals and therefore notably without the death of individuals causing the total loss of their neural adaptations. While this also means that cultural evolution is faster than biological evolution, it has been slow enough for most of our species' history that our genes were able to significantly adapt to culture. There are many ways in which the human body differs from that of other animals, most of which are in fact genetic adaptations to culture. For example:

- Reduced muscle strength: the development of tools and technology reduced the need for raw physical strength. As humans relied more on tools for hunting, gathering and other tasks, natural selection favored individuals who did not expend energy growing and maintaining superfluous muscle.

- Prominent sclera: As human societies became more reliant on imitation, the ability of the inexperienced to imitate the attentional habits of the experienced became important, including who to pay attention to and imitate. The prominent sclera in human eyes facilitates gaze-following, which aids in social learning, as well as cooperation and coordination. This gave individuals with this trait an advantage in social contexts.

- Bipedalism: The shift towards walking upright was driven by the need to carry tools, weapons or food. Bipedalism influenced the evolution of our hands, which in turn enabled the development of more complex tools.

- Sweat glands: Being able to make and carry water containers and to communicate the location of water sources to replenish those water containers aided the development of persistence hunting, a method of chasing prey until they are exhausted, which put evolutionary pressure on humans to be better at dissipating heat by expending more moisture. The increased density of eccrine sweat glands in humans is an adaptation that allows us to maintain our body temperature during prolonged physical activity, making persistence hunting more effective, as long as the expended moisture is replenished.

- Hairlessness: As humans began to wear clothing for protection and warmth, the need for body hair diminished. Losing body hair provided advantages in heat dissipation and reducing the risk of parasite infestation, which became more important as humans started living in larger groups and more diverse environments.

- Enlarged brains: The development of complex social structures, language, and technology drove the evolution of larger brains in humans. Indeed, the scaling laws show that as the size of an artificial neural network increases, it learns more from a given amount of data. The same is likely true for natural brains. That childbirth is unusually painful and that babies are born prematurely compared to other primates are secondary effects of our enlarged brains.

- Slow development and long lifespan: as human societies became more complex and knowledge-based, the need for extended periods of learning and skill acquisition increased. This drove the evolution of a longer childhood and extended lifespan, allowing individuals to gain more knowledge and experience before reproducing, and supporting the transfer of knowledge between generations.

- Changes in the digestive system: the cultural practices of cooking and food processing reduced the need for powerful jaws, specialized teeth or a long digestive system. Cooked food is easier to chew and digest, allowing for the development of smaller teeth, weaker jaw muscles and a shorter digestive system.

Gene-meme coevolution caused a ratchet effect, where we got continuously more dependent on culture, in such a way that it became harder and harder to live without it. These genetic dependencies on culture have made us a decidedly weaker species, literally in terms of muscle strength, but also in giving us back problems, making us water- and energy-hungry, less able to digest food, and helpless during the first years of life. At the same time, they are also the reasons why humans are the only ones that can speak, do math or build rockets; human brains at initialization are already genetically adapted to fast-track cultural absorption, which is a clear advantage in an environment in which you can and need to acquire conventional skills as quickly and as accurately as possible in order to be attractive to the opposite sex. Feral children can attest to that.

The large apparent difference in intelligence between humans and chimpanzees arises because humans, who depend on culture for survival, are pre-programmed by biological evolution to incessantly imitate pre-discovered, pre-selected behaviors. It is overwhelmingly likely that none of the behaviors you display that are more intelligent than a chimpanzee’s are things you have invented. All of the smart things you do are a result of the slow accumulation of behaviors, such as language and counting, that you have been able to imitate. In other words, the difference in individual intelligence between chimpanzees and humans is small to none.

The apparent difference in intelligence, disproportional to the difference in brain size, shows that data retention between individuals and successive generations was the problem all along. Imitation solved that problem. Human brains were capable of much greater intelligence 10,000 years ago, but the data necessary to get there wasn’t available, let alone training methods. Even today, human brains probably have a compute overhang; we just haven’t discovered and disseminated more effective data or training methods for humans. For example, for most of human history, literacy rates were abysmal; modern literacy rates prove that this was not due to a genetic limit to our brains, but a failure of training. More kinds of training are likely possible that would unlock potential that is now still hidden.

It is a curious fact that child prodigies usually don’t end up contributing much to science or overall culture; from a young age they are in a position, often due to the habits of their parents, to imitate the aspects of culture which make the most impressive showcases for children to exhibit. However, while imitating existing culture is virtually essential to making a novel contribution to it, the latter is decidedly harder; it comes down to trying new things and most new things don’t work out.

The old saying “monkey see, monkey do” holds true for the human primate more than any other. In fact, since imitation is the fundamental adaptation which sets us apart, a more appropriate name for the human species would be Homo imitatus.

Why humans and not corvids, parrots, elephants, dolphins, cephalopods or any of the other primates? Why did the human species manage to switch gears when others did not? The answer is that humans were simply first; some species has to be. We most likely had a series of false starts, accumulating culture and subsequently losing some or all of it. But once things got going properly, a Cultural Big Bang happened in the blink of an eye in evolutionary time, in which language, writing, book printing, the Internet and so forth appeared. No matter which species was going to be first and no matter how, the transformation is rare enough and fast enough that another imitative species was most likely not going to appear at the same time or be able to catch up in that time span, which is exactly what we observe in the current moment.

1.3 The Myth of General Intelligence

As discussed above, the difference between human intelligence and that of other animals is not fundamentally one of the model's inherent generality, but rather of the data curriculum that the model was trained on. If it were possible to change a cat’s synapse strengths the way we can with artificial neural networks, the cat's brain could probably be as good a language model as GPT-4; the model isn’t the problem, but rather the training method and the data that goes in. A cat lacks the pre-programmed human instinct for supervised learning through incessant imitation, let alone a computer interface that allows us to change synapse strengths directly, but the model that is its brain is equally “general”. The word “general”, as in artificial general intelligence (AGI), therefore does no work in distinguishing it from “regular” intelligence and has unnecessarily muddied the waters of public discourse.

Supposedly, the human brain has something to it (which we would like to replicate by building AGI) that somehow makes it inherently more generally intelligent than that of any other animal. As discussed above, this is false and based on a confused framing. While humans perform unique feats that fall far outside the scope of the circumstances to which our species adapted genetically, such as walking on the moon, this was not the result of increased individual intelligence, and also not far outside the scope of the circumstances to which our species adapted culturally. The astronauts who walked on the moon and the engineers who built the machinery to get them there were most definitely trained in very similar situations and, crucially, they were given the means to gather new observations on which to train; the astronauts were trained exhaustively in simulations and experimental vehicles (in some cases to lethal failure), while the engineers had the budget to fail at building rockets until they had gathered enough new data to have a decent chance at getting it right. To even get to the point where they were able to do this, they relied on knowledge that had been refined over millennia of previous painstaking interactions with reality.

Additionally, we are cherry-picking when we pick the moon landing as an example of human capability, since the moon landings happened to have succeeded; we are rather amnesiac about the many other enterprises at which humanity has failed. For example, many Arctic expeditions faced dire consequences, including starvation and death, as explorers lacked the cultural knowledge of indigenous Inuit populations, such as how to build igloos, hunt local animals, and prepare appropriate clothing. Evidently, humans are remarkably narrow and can't stray very far out from the training distribution. Why didn't Einstein think to put wheels under his suitcase? It is very, very hard to come up with useful new ideas; it doesn’t matter how “intelligent” you are, because that depends on what existing ideas you have been able to absorb in the first place. It is much easier to become more intelligent by doing supervised learning on existing solutions, once these have been discovered, than to think of these solutions yourself. It is moreover very, very tempting to fall into the trap of thinking that just because you were able to learn a thing easily, you could have come up with it yourself in five minutes. Even when an idea is simple, that doesn't mean it's simple to find; there are many simple solutions to a problem, of which only very few actually work, and these can only be found by painstakingly testing them in reality.

Take counting, something that humans are able to learn at such a young age that it becomes so second nature that it almost seems like first nature, i.e., innate. We patronize children when they show off being able to count to a hundred, but counting to a hundred is actually a remarkable cognitive tool that you wouldn't think of in your entire life if you hadn't been embedded in a culture that cultivates it. The counting system of the Pirahã tribe is limited to words for "one," "two," and "many," which researchers believe is due to the Pirahã's cultural values, which prioritize immediacy and discourage abstraction. Relatedly, the adoption of the Hindu-Arabic numeral system in the West was relatively slow, primarily due to cultural resistance and the lack of widespread communication. Roman numerals were dominant for over a thousand years and when change did come, it came from without; in the 12th and 13th centuries, Latin translations of Arabic mathematical texts began to appear in Europe, and scholars like Fibonacci helped introduce the Hindu-Arabic numeral system to the Western world. Would you, as a Roman citizen, have independently come up with something like the Hindu-Arabic numeral system when a great many generations of Westerners, no less “intelligent” than you, never did? The use of Roman numerals likely hampered the development of more complex mathematics in the West, as they are cumbersome for performing arithmetic and lack the concept of zero, which is crucial for developing a positional number system, let alone advanced mathematical concepts like algebra and calculus.
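The point about place value can be made concrete with a small sketch (all function names here are my own). Writing a number in Roman numerals needs a table of special symbols and subtractive pairs, and there is no symbol for zero. In positional notation, by contrast, addition reduces to one rule applied in every column plus a carry, which only works because a zero digit can hold an empty place (205 versus 25).

```python
ROMAN = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]


def to_roman(n: int) -> str:
    """Convert a positive integer to standard (subtractive) Roman numerals."""
    if n <= 0:
        raise ValueError("Roman numerals have no symbol for zero or negatives")
    parts = []
    for value, symbol in ROMAN:
        count, n = divmod(n, value)
        parts.append(symbol * count)
    return "".join(parts)


def to_digits(n: int) -> list[int]:
    """Base-10 digits of a non-negative integer, most significant first.

    A 0 digit marks an empty place, which is what distinguishes 205 from 25.
    """
    return [int(c) for c in str(n)]


def add_positional(a: list[int], b: list[int]) -> list[int]:
    """Schoolbook addition: the same rule in every column, plus a carry."""
    a, b = a[::-1], b[::-1]  # work from the least significant digit
    result = []
    carry = 0
    for i in range(max(len(a), len(b))):
        total = carry + (a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0)
        carry, digit = divmod(total, 10)
        result.append(digit)
    if carry:
        result.append(carry)
    return result[::-1]


print(to_roman(205), "+", to_roman(97))       # no column-wise rule applies here
print(add_positional(to_digits(205), to_digits(97)))
```

Adding "CCV" and "XCVII" directly has no uniform procedure, while the positional version is the same loop for numbers of any size.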

In short, the reason why Einstein came up with his theories is that, among the many individuals who were embedded in a culture containing Galileo's principle of relativity, Maxwell's equations, Riemannian geometry, the results of the Michelson-Morley experiment and more, Albert Einstein's brain just happened to be the one in which these ideas recombined and mutated first to form “his” theories. This conclusion may be deeply unsatisfying to those with a fetish for the “creative genius”, as if there should be something more than recombination, mutation and natural selection to lead to viable creations of a fantastical variety.
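The claim that the discovery did not hinge on one particular brain can be put into a deliberately crude toy model. Assume (these assumptions are mine, not the post's) that `n` people are embedded in the relevant culture and that each independently has the same small probability `p` per year of hitting on the right recombination. The waiting time until someone does is then geometric, with mean 1 / (1 - (1 - p)^n), and removing any single candidate barely moves it. The parameter values below are invented for illustration.

```python
def expected_wait_years(n: int, p: float) -> float:
    """Mean years until at least one of n independent candidates, each
    succeeding with probability p per year, makes the discovery.

    Hypothetical toy model: identical, independent, constant-rate candidates.
    """
    if n < 1 or not 0 < p <= 1:
        raise ValueError("need n >= 1 and 0 < p <= 1")
    chance_someone_succeeds = 1 - (1 - p) ** n
    return 1 / chance_someone_succeeds


# Invented numbers: 1000 candidates, each with a 1-in-10,000 yearly chance.
with_einstein = expected_wait_years(1000, 1e-4)
without_einstein = expected_wait_years(999, 1e-4)
print(f"{with_einstein:.2f} vs {without_einstein:.2f} years")
```

With these made-up numbers the two expected waits differ by only about a tenth of a percent, which is the sense in which a particular individual "just happened" to be first.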

2. Artificial Intelligence

Since human intelligence cannot be adequately modeled solely through the intelligence of the individual, shouldn’t we stop trying the same in artificial intelligence? If human intelligence can be modeled through cultural evolution, shouldn’t we start modeling artificial intelligence similarly?

Knowledge is an inherent part of what we typically mean by intelligence, and new tasks require new intelligence and knowledge. This knowledge is gained through cultural evolution; memes constitute knowledge and intelligence, and they evolve similarly to genes. The vast majority of your good genes come from your ancestors, and your new mutations or recombinations are overwhelmingly unlikely to improve your genetic makeup. It works the same way with memes; virtually everything you and I can do that we consider uniquely human are things we’ve copied from somewhere else, including “simple” things like counting or percentages. And virtually none of the new things you and I do are improvements.

AI is not exempt from the process described above. Its intelligence is just as dependent on knowledge gained through trial and error and cultural evolution. This process is slow, and the faster and greater the effect to be achieved, the more knowledge and time are needed to actually do it in one shot.

2.1 Rationalism vs Empiricism: The Scaling Laws

In epistemology, there is a central debate between rationalism and empiricism. Rationalism posits that you can know things just by thinking. Empiricism, on the other hand, posits that you can only know things by observation. In reality, we use both in tandem. These two theories, rationalism and empiricism, i.e., knowing by thinking and knowing by observing, are represented in the scaling laws for neural language models; the size of a model represents how much thinking it does, while the amount of data that it is trained on represents how much it has observed. Indeed, the scaling laws say with remarkable precision how much you need of each to achieve a certain performance on the training objective.

Owing to a sufficiently large deep learning model, AlphaGo Zero did a hard take-off in the game of go, surpassing in a matter of days what human experience accumulated over centuries. One might then extrapolate that with a sufficiently large deep learning model, AI would be intelligent enough to do a hard take-off in any domain. This would be a mistake, because in the specific case of go, self-play provided virtually unlimited data to train on, making model size the only practical constraint on intelligence in this domain; the same is not true in the universal domain.
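The scaling-law argument of the two paragraphs above can be made concrete with one common parametric form, L(N, D) = E + A/N^α + B/D^β, as used by Hoffmann et al. (2022), where N is the parameter count ("thinking") and D the number of training tokens ("observing"). The constants below are roughly the values fitted in that paper; treat them as illustrative assumptions. The sketch contrasts a fixed corpus, where growing N only approaches a floor of E + B/D^β, with a self-play-like regime where data grows along with the model (the 20 tokens per parameter ratio is also an assumption).

```python
# Illustrative constants, roughly the fit reported by Hoffmann et al. (2022);
# treat them as assumptions, not authoritative values.
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Parametric scaling law L(N, D) = E + A / N**alpha + B / D**beta."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def data_floor(n_tokens: float) -> float:
    """Best loss reachable with n_tokens of data, however large the model."""
    return E + B / n_tokens**BETA


fixed_data = 1e9  # tokens: a fixed, finite corpus
for n in (1e8, 1e9, 1e10, 1e11, 1e12):
    fixed = loss(n, fixed_data)
    # "Self-play" regime: data grows with the model (assumed 20 tokens/parameter).
    growing = loss(n, 20 * n)
    print(f"N={n:.0e}  fixed-data loss={fixed:.3f}  growing-data loss={growing:.3f}")

print(f"floor with fixed data: {data_floor(fixed_data):.3f}")
```

With fixed data, each tenfold increase in model size buys less than the previous one and the loss never drops below the data floor; only when data keeps pace, as self-play allows in go, does a bigger model keep paying off.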

2.2 The Myth of Superintelligence

Allegedly, we will only have a single chance at making an aligned superintelligence, unlike the iterative way in which humanity has managed to tame previously developed technologies. But there is a reason why this has been the case; iterative data collection is fundamental to intelligence and not some idiosyncratic human shortcoming. AI is and will remain subject to this very same constraint.

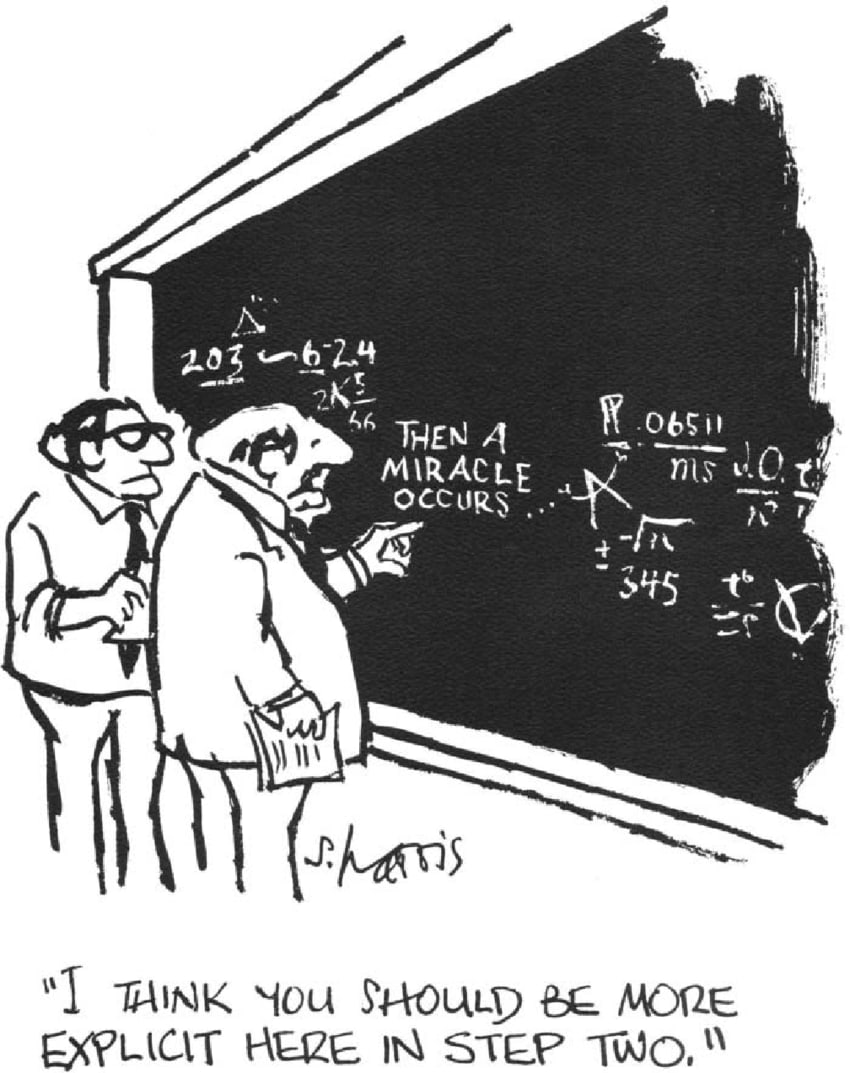

If a superintelligence attempts to take over the world, to make paperclips or to replicate a strawberry, how would it know how to do such a thing in one shot? “Ah, but the superintelligence is superintelligent, therefore it knows!” This, however, is an invocation of a tautological “miracle” of intelligence, an impossibly pure rationality that knows without data, without empiricism. Reality has a surprising amount of detail—detail that our brains tend to gloss over without us even noticing because it just can't fit in our map—that has to be contended with to get anything done. In such unprecedented tasks as taking over the world, the required data must be collected, by trying and conspicuously failing.

“Ah, but the superintelligence is superintelligent, therefore it will simply collect the required data without being conspicuous!” But again, how would it know how to do such a thing in one shot, without prior experience or something to imitate? Note that this is not asking for a specific way in which a superintelligence would know how to do something, but rather how it could know how to do this at all. One can easily and correctly predict that AlphaZero will win at chess against any human, even without predicting its specific moves. However, it would be right to question AlphaZero's ability to win a chess game if it hadn’t been trained on enough relevant data. Of course, in chess and similar games you can get away with using simulation data, because they are simulations by human design. Reality isn’t. In reality, before one succeeds, one must with overwhelming likelihood fail. Intelligence cannot be divorced from the reality of data collection through trial and error.

Cultural evolution has shown that collecting data from interaction with reality is much more effective as a group effort; it is virtually impossible for an individual to gain enough experience, even over many lifetimes, to be significantly more intelligent than the local culture in which this individual is embedded. You can see this with humans with exceptional IQs; statistically, such an individual is above average on various measures, but cannot come close to amassing enough power to be unstoppable by even a modest number of other individuals. Take anyone powerful and influential, like a president or a movie producer; without their supporters and enablers they're not much more powerful than anyone else. Increasing IQ has diminishing returns, similarly to increasing model size; you can become more effective, but gaining more data becomes much more effective by comparison. Would you rather have immediate early access to all research papers produced in the next fifty years, or have your IQ immediately raised to 200? What about 400? Where would the break-even point be for you?

Can a machine be of superior intelligence compared to a human? Of course, but let’s consider the constraints.

- Data. As discussed above, a lack of data is an almost insurmountable constraint for any single individual. A human is not “generally” intelligent, nor will any AI be; neither can stray far from the training distribution without expanding that distribution. One of the arguments for sudden capability gain centers around recursive self-improvement, enabled by the possibility for an individual artificial agent to modify its own code. Where would an artificial agent get the know-how to successfully and consistently modify its own systems? Like any other pioneering enterprise, this would be one of trial and error, with error likely being fatal to the system itself and often conspicuous. (Not to mention the fact that giant inscrutable matrices don't seem much easier to modify than human brains.)

- Model Size and Speed. If the data input speed remains the same, increasing model speed is roughly equivalent to having a larger model. This means that there are diminishing returns to increasing speed just as there are with increasing model size. For a given model size and a given amount of data and computing budget per unit of time, there is an optimal model speed. If the data input speed increases, this optimal speed may change, but, as discussed above, data input is in itself severely constrained. When the data isn't there, one can ruminate endlessly without getting anywhere, like modern physicists. Hundreds of years ago, physicists could collect enough new data from experiments in their back yard to come up with new theories. Today, in order to squeeze out more data from reality, we have to build enormous experiments, costing many billions of dollars and they still hardly get us anywhere.

- Memory. Digital storage media are far more accurate memories than those of both natural and artificial neural networks. In practice, artificial neural networks do not seem to have more accurate memories than natural ones and both have to interface with external storage media to increase accuracy. Artificial neural networks have some advantage in terms of accessing speed, but this also depends on model speed. And although ChatGPT was trained on more text than any human has ever read in a lifetime—resulting in a kind of autistic savant—even if a model can exhaust every bit of information from human culture, anything new has to be found through trial and error. Humans with hyperthymesia appear to have no clear advantage there; even if artificial neural networks could access more data than a human in a given amount of time, the extra bits would yield diminishing returns. One can glean most of the useful information from a hundred papers by skimming them and focusing on the most promising ones; reading every word from all hundred does not yield an amount of information proportional to the number of words read. Nor does watching a YouTube video in 4K instead of 240p yield an amount of information proportional to the increased bitrate. (In some sense it does, but is the extra visual information actually useful?)
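The "Model Size and Speed" point above claims that, for a fixed budget, there is an optimum rather than "more is always better". The analogous claim about model size can be checked numerically. This sketch reuses the illustrative Chinchilla-style constants (assumed, not authoritative) and the widely used approximation that training compute is C ≈ 6·N·D FLOPs, so fixing C forces a trade-off between model size N and data D. It says nothing directly about model speed; extending it to speed is the analogy the post draws, not something the code shows. The function name `best_split` is hypothetical.

```python
# Illustrative constants, roughly the Chinchilla fit (assumptions).
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Parametric scaling law L(N, D) = E + A / N**alpha + B / D**beta."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def best_split(compute_flops: float, points: int = 400) -> tuple[float, float, float]:
    """Grid-search model sizes on a log scale for a fixed compute budget.

    Assumes compute ~= 6 * N * D, so each choice of N fixes D = C / (6 N).
    Returns (N, D, loss) for the best grid point.
    """
    candidates = []
    for i in range(points + 1):
        n = 10 ** (6 + 9 * i / points)  # N from 1e6 to 1e15 parameters
        d = compute_flops / (6 * n)
        candidates.append((n, d, loss(n, d)))
    return min(candidates, key=lambda c: c[2])


for budget in (1e19, 1e21, 1e23):
    n, d, l = best_split(budget)
    print(f"C={budget:.0e} FLOPs -> N~{n:.1e}, D~{d:.1e}, loss~{l:.3f}")
```

The optimum lands in the interior of the grid: a model ten times larger than the optimum, trained on ten times less data, does worse, and so does one ten times smaller.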

2.3 Artificial Culture

It is virtually impossible for any single agent to perform a task outside the training distribution with just a few inconspicuous attempts. Does this mean we are safe? No; the slow take-off is still dangerous and the main threat vector.

While an individual intelligence (natural or artificial) cannot be an existential threat to humanity, a group of intelligences very much could be. If a group of artificial agents is allowed to independently run a part of the economy, they will be in a position where they will come to have their own, separate, effectively steganographic culture. Duplication of artificial agents is relatively easy, and the larger the number of such agents, the easier it will be for them to collectively advance their artificial culture. Reversing this process will become exceedingly difficult over time; as we come to rely on an artificial faction, human culture will lose its ability to survive without them and they will be too big to fail, giving them an excellent bargaining position.

However, human history is rife with similar scenarios. While humans have long wondered whether they are by nature inherently “good” or “evil”, in practice we've come to learn that absolute power corrupts absolutely and so we've domesticated ourselves by developing incentive structures such as the separation of powers to prevent power from concentrating in too few agents. We must wield these same tools to achieve alignment with AI.

The orthogonality thesis is true, but the search for the holy grail among objective functions, one that perfectly captures human morality, is a dead end. Artificial agents that attempt to coordinate must also contend with Moloch. Incentive structures are possible in which all agents want something yet it doesn't happen, or vice versa. We have the opportunity now to find robust and aligned incentive structures, to fight fire with fire, to turn Moloch against itself. This should be the focus of alignment going forward.
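The claim that an incentive structure can exist in which every agent wants an outcome that nevertheless does not happen is the classic prisoner's dilemma. A minimal sketch with textbook payoffs (chosen for illustration): both agents prefer mutual cooperation to mutual defection, yet mutual defection is the only pure-strategy Nash equilibrium. The `penalty` parameter is a hypothetical, enforced cost on defecting, a crude stand-in for the institutional checks mentioned above; with it, the equilibrium moves to cooperation.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")


def payoffs(penalty: float = 0.0) -> dict[tuple[str, str], tuple[float, float]]:
    """Symmetric two-player prisoner's dilemma with textbook payoffs.

    `penalty` is a hypothetical cost imposed on any agent that defects,
    e.g. by an external enforcement mechanism.
    """
    base = {
        ("cooperate", "cooperate"): (3, 3),
        ("cooperate", "defect"): (0, 5),
        ("defect", "cooperate"): (5, 0),
        ("defect", "defect"): (1, 1),
    }
    return {
        (a, b): (pa - penalty * (a == "defect"), pb - penalty * (b == "defect"))
        for (a, b), (pa, pb) in base.items()
    }


def pure_nash_equilibria(table):
    """Action profiles where neither agent gains by switching unilaterally."""
    equilibria = []
    for a, b in product(ACTIONS, repeat=2):
        pa, pb = table[(a, b)]
        a_ok = all(table[(alt, b)][0] <= pa for alt in ACTIONS)
        b_ok = all(table[(a, alt)][1] <= pb for alt in ACTIONS)
        if a_ok and b_ok:
            equilibria.append((a, b))
    return equilibria


print(pure_nash_equilibria(payoffs()))             # [('defect', 'defect')]
print(pure_nash_equilibria(payoffs(penalty=3.0)))  # [('cooperate', 'cooperate')]
```

Nothing about the agents' preferences changes between the two runs; only the incentive structure around them does.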

4 comments


comment by Viliam · 2023-06-05T09:36:09.755Z

It is a curious fact that child prodigies usually don’t end up contributing much to science or overall culture

Few people end up "contributing much to science or overall culture". It's like being surprised that most people who do sport regularly do not end up winning the Olympic Games.

The Myth of General Intelligence

Albert Einstein's brain just happened to be the one in which these ideas recombined and mutated first to form “his” theories.

You don't specify what exactly is the "myth" and what exactly is your alternative explanation. If your point is that Einstein could not have discovered general relativity if he was born 1000 years earlier, I agree. If your point is that any other person with university education living in the same era could have discovered the same, I disagree.

Knowledge is an inherent part of what we typically mean by intelligence

Again, what exactly do you mean? Yes, intelligence is about processing knowledge. No, you do not need any specific knowledge in order to be intelligent.

virtually everything you and I can do that we consider uniquely human are things we’ve copied from somewhere else, including “simple” things like counting or percentages. And, virtually none of the new things you and I do are improvements.

True. But it is also true that some people are way better at copying and repurposing these things than others.

In reality, before one succeeds, one must with overwhelming likelihood fail. Intelligence cannot be divorced from the reality of data collection through trial and error.

Nope. Depends on what kind of task you have in mind, and what kind of learning is available. Also, intelligence is related to how fast one learns.

↑ comment by tangerine · 2023-06-06T13:29:44.715Z

Thank you for your response, I will try to address your comments.

Few people end up "contributing much to science or overall culture". It's like being surprised that most people who do sport regularly do not end up winning the Olympic Games.

Well, people often extrapolate that if a child prodigy, whether in sports or an intellectual pursuit, does much better than other children of the same age, this difference will persist into adulthood. What I’m saying is that the reason it usually doesn’t is that once you’ve absorbed existing techniques, you reach a plateau that’s extremely hard to break out of, and even if you do, it’s only by a small amount and largely based on luck. And it doesn’t matter much if one reaches that plateau at age 15 (like, say, a child prodigy) or 25 (like, say, a “normal” person).

You don't specify what exactly is the "myth" and what exactly is your alternative explanation.

The myth of general intelligence is that it is somehow different from “regular” intelligence. A human brain is not more general than any other primate brain. It’s actually the training methods that are different. There is nothing in the model that is the human brain which makes it inherently more capable, à la Chomsky’s universal grammar. The only effective difference is that the human mind has a strong tendency towards imitation, which does not in itself make it more intelligent; it only does so if there are intelligent behaviors available to imitate. So what is in effect different about what’s called a “general” intelligence is not the model or agent itself, but the training method. There is nothing in principle that stops a chimpanzee from being able to read and write English, for example. It’s just that we haven’t figured out the methods to configure their brains into that state, because they don’t have a strong tendency to imitate, which human children do have and which makes training them much easier.

If your point is that Einstein could not have discovered general relativity if he was born 1000 years earlier, I agree. If your point is that any other person with university education living in the same era could have discovered the same, I disagree.

We agree on the first point. As for the second point, in hindsight we of course know that Einstein was able to make the discoveries he made. Before those discoveries, however, nobody knew what kind of person would be required; we did not even know exactly what was out there to discover. We are in the same situation today with respect to discoveries not yet made. We don’t know what kind of person, with what kind of brain, in what configuration, will make which discoveries, so there is an element of chance. If the chips had fallen slightly differently in Einstein’s time, I don’t see why some other person couldn’t have made the same or very similar discoveries. It might have happened five years earlier or five years later, but it seems extremely unlikely to me that, had Einstein died as a baby, we would still be stuck with Newtonian mechanics today.

No, you do not need any specific knowledge in order to be intelligent.

Well, this comes down to what a useful definition of intelligence is. You indeed don’t need any specific knowledge if you define intelligence as something like IQ, but even a feral child with an IQ of 200 won’t outmatch a chimpanzee in any meaningful way; they won’t invent Hindu-Arabic numerals, language or even a hand axe. Likewise, ChatGPT is a useless pile of numbers before it sees the training data, and afterwards its behavior depends on what was in that training data. So in practice I would argue that to be intelligent in a specific domain you do need specific knowledge, whether that’s factual knowledge or more implicit knowledge absorbed by osmosis or practice.

But it is also true that some people are way better at copying and repurposing these things than others.

Some people are better at that, but I wouldn’t say way better. John von Neumann was perhaps at the apex of this, but that didn’t make him much more powerful in practice. He didn’t discover the Higgs boson. He didn’t build a gigahertz microprocessor. He didn’t cure his own cancer. He didn’t even put wheels under his suitcase. It’s impressive what he did do, but still pretty incremental. Why would an AI be much better at this?

Nope. Depends on what kind of task you have in mind, and what kind of learning is available.

What kind of practical task wouldn’t require trial and error? There are some tasks, such as predicting the motions of the planets, for which there turned out to be a method that works quite generally, but even then, once you drop certain simplifying assumptions, the calculation becomes computationally intensive or outright intractable.

Also, intelligence is related to how fast one learns.

Yes, a bigger model or a higher IQ can help you get more out of a given amount of data, but the scaling laws show diminishing returns. Pretty quickly, having more data starts to outweigh trying to squeeze more out of what you’ve already got.
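To make the diminishing-returns point concrete, here is a minimal Python sketch assuming the parametric loss form fitted by Hoffmann et al. (2022, the “Chinchilla” paper), L(N, D) = E + A/N^α + B/D^β, where N is the parameter count and D the number of training tokens. The constants are approximately those reported in that paper and are used purely for illustration; neither the formula nor the constants come from this comment. Holding D fixed, each tenfold increase in N buys a smaller drop in loss, and the loss can never fall below the floor E + B/D^β that the data sets.

```python
# Illustrative sketch of a Chinchilla-style scaling law (Hoffmann et al., 2022).
# ASSUMPTION: constants are approximately the published fits, used only to
# illustrate the shape of the curve, not as precise predictions.
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Predicted loss for n_params parameters trained on n_tokens tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def data_floor(n_tokens: float) -> float:
    """Lowest loss reachable at this data budget, however large the model."""
    return E + B / n_tokens**BETA


tokens = 1e11  # hold the data budget fixed at 100 billion tokens
for n in (1e9, 1e10, 1e11, 1e12):
    print(f"params={n:.0e}  loss={loss(n, tokens):.3f}  "
          f"data floor={data_floor(tokens):.3f}")
```

With these assumed constants, spending a tenfold increase on data (1e11 parameters on 1e12 tokens) predicts a lower loss than spending it on model size (1e12 parameters on 1e11 tokens), which is one way to read “more data starts to outweigh squeezing more out of what you’ve already got.”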

comment by Mitchell_Porter · 2023-10-21T20:42:47.946Z

There is nothing in principle that stops a chimpanzee from being able to read and write English, for example. It’s just that we haven’t figured out the methods to configure their brains into that state

Do you think there's an upper bound on how well a chimp could read and write?

comment by tangerine · 2023-10-22T14:38:51.944Z

I’d say there virtually must be an upper bound. As to where this upper bound is, you could do the following back-of-the-napkin calculation.

ChatGPT is pretty good at reading and writing, and it has something on the order of 100 billion to 1,000 billion parameters, roughly one for each artificial synapse. A human brain has on the order of 100,000 billion natural synapses, and a chimpanzee brain has about a third of that. If we could roughly equate artificial and natural synapses, it seems that a chimpanzee brain should in principle be able to model reading and writing as well as ChatGPT and then some. But then you’d have to devise a training method to set the strengths of natural synapses as desired.
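The napkin arithmetic above can be spelled out in a few lines of Python. The figures are the comment’s own order-of-magnitude estimates, and treating one parameter as equivalent to one synapse is the comment’s stated assumption, not an established fact; the function name `ratio` is just an illustrative helper.

```python
# Back-of-the-napkin comparison of synapse counts vs. ChatGPT parameter counts.
# ASSUMPTION (from the comment): one artificial parameter ~ one natural synapse.
# All numbers are rough order-of-magnitude estimates.
CHATGPT_PARAMS_LOW = 1e11   # ~100 billion parameters
CHATGPT_PARAMS_HIGH = 1e12  # ~1,000 billion parameters
HUMAN_SYNAPSES = 1e14       # ~100,000 billion synapses
CHIMP_SYNAPSES = HUMAN_SYNAPSES / 3  # "about a third of that"


def ratio(synapses: float, params: float) -> float:
    """How many synapses there are per model parameter."""
    return synapses / params


for params in (CHATGPT_PARAMS_LOW, CHATGPT_PARAMS_HIGH):
    print(f"ChatGPT at {params:.0e} params: a chimpanzee brain has "
          f"~{ratio(CHIMP_SYNAPSES, params):.0f}x as many synapses")
```

Even at the high end of the parameter estimate, the chimpanzee brain comes out ahead by more than an order of magnitude, which is why the bottleneck in this argument is the training method rather than raw capacity.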