MIRI's 2015 Summer Fundraiser!

post by So8res · 2015-08-19T00:27:44.535Z · 44 comments

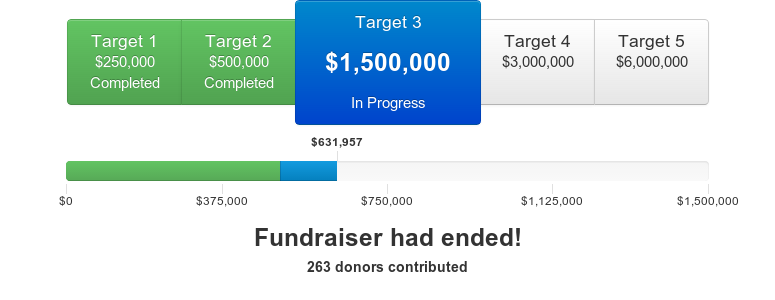

Our summer fundraising drive is now finished. We raised a grand total of $631,957 from 263 donors. This is an incredible sum, making this the biggest fundraiser we’ve ever run.

We've already been hard at work growing our research team and spinning up new projects, and I’m excited to see what our research team can do this year. Thank you to all our supporters for making our summer fundraising drive so successful!

It's safe to say that this past year exceeded a lot of people's expectations.

Twelve months ago, Nick Bostrom's Superintelligence had just come out. Questions about the long-term risks and benefits of smarter-than-human AI systems were nearly invisible in mainstream discussions of AI's social impact.

Twelve months later, we live in a world where Bill Gates is confused about why so many researchers aren't using Superintelligence as a guide to the questions we should be asking about AI's future as a field.

Following a conference in Puerto Rico that brought together the leading organizations studying long-term AI risk (MIRI, FHI, CSER) and top AI researchers in academia (including Stuart Russell, Tom Mitchell, Bart Selman, and the Presidents of AAAI and IJCAI) and industry (including representatives from Google DeepMind and Vicarious), we've seen Elon Musk donate $10M to a grants program aimed at jump-starting the field of long-term AI safety research; we've seen the top AI and machine learning conferences (AAAI, IJCAI, and NIPS) announce their first-ever workshops or discussions on AI safety and ethics; and we've seen a panel discussion on superintelligence at ITIF, the leading U.S. science and technology think tank. (I presented a paper at the AAAI workshop, I spoke on the ITIF panel, and I'll be at NIPS.)

As researchers begin investigating this area in earnest, MIRI is in an excellent position, with a developed research agenda already in hand. If we can scale up as an organization, then we have a unique chance to shape the research priorities and methods of this new paradigm in AI, and direct this momentum in useful directions.

This is a big opportunity. MIRI is already growing and scaling its research activities, but the speed at which we scale in the coming months and years depends heavily on our available funds.

For that reason, MIRI is starting a six-week fundraiser aimed at increasing our rate of growth.

This time around, rather than running a matching fundraiser with a single fixed donation target, we'll be letting you help choose MIRI's course based on the details of our funding situation and how we would make use of marginal dollars.

In particular, our plans can scale up in very different ways depending on which of these funding targets we are able to hit:

Target 1 — $250k: Continued growth. At this level, we would have enough funds to maintain a twelve-month runway while continuing all current operations, including running workshops, writing papers, and attending conferences. We will also be able to scale the research team up by one to three additional researchers, on top of our three current researchers and two new researchers who are starting this summer. This would ensure that we have the funding to hire the most promising researchers who come out of the MIRI Summer Fellows Program and our summer workshop series.

Target 2 — $500k: Accelerated growth. At this funding level, we could grow our team more aggressively, while maintaining a twelve-month runway. We would have the funds to expand the research team to ten core researchers, while also taking on a number of exciting side-projects, such as hiring one or two type theorists. Recruiting specialists in type theory, a field at the intersection of computer science and mathematics, would enable us to develop tools and code that we think are important for studying verification and reflection in artificial reasoners.

Target 3 — $1.5M: Taking MIRI to the next level. At this funding level, we would start reaching beyond the small but dedicated community of mathematicians and computer scientists who are already interested in MIRI's work. We'd hire a research steward to spend significant time recruiting top mathematicians from around the world, we'd make our job offerings more competitive, and we’d focus on hiring highly qualified specialists in relevant areas of mathematics. This would allow us to grow the research team as fast as is sustainable, while maintaining a twelve-month runway.

Target 4 — $3M: Bolstering our fundamentals. At this level of funding, we'd start shoring up our basic operations. We'd spend resources and experiment to figure out how to build the most effective research team we can. We'd upgrade our equipment and online resources. We'd branch out into additional high-value projects outside the scope of our core research program, such as hosting specialized conferences and retreats and running programming tournaments to spread interest about certain open problems. At this level of funding we'd also start extending our runway, and prepare for sustained aggressive growth over the coming years.

Target 5 — $6M: A new MIRI. At this point, MIRI would become a qualitatively different organization. With this level of funding, we would be able to begin building an entirely new AI alignment research team working in parallel to our current team, working on different problems and taking a different approach. Our current technical agenda is not the only way to approach the challenges that lie ahead, and we would be thrilled to get the opportunity to spark a second research group.

We also have plans that extend beyond the $6M level: for more information, shoot me an email at nate@intelligence.org. I also invite you to email me with general questions or to set up a time to chat.

If you intend to make use of corporate matching (check here to see whether your employer will match your donation), email malo@intelligence.org and we'll include the matching contributions in the fundraiser total.

Some of these targets are quite ambitious, and I'm excited to see what happens when we lay out the available possibilities and let our donors collectively decide how quickly we develop as an organization.

We'll be using this fundraiser as an opportunity to explain our research and our plans for the future. If you have any questions about what MIRI does and why, email them to rob@intelligence.org. Answers will be posted to the MIRI blog every Monday and Friday.

Below is a list of explanatory posts written for this fundraiser, which we'll be updating regularly:

- July 1 — Grants and Fundraisers. Why we've decided to experiment with a multi-target fundraiser.

- July 16 — An Astounding Year. Recent successes for MIRI, and for the larger field of AI safety.

- July 18 — Targets 1 and 2: Growing MIRI. MIRI's plans if we hit the $250k or $500k funding target.

- July 20 — Why Now Matters. Two reasons to give now rather than further down the line.

- July 24 — Four Background Claims. Core assumptions behind MIRI's strategy.

- July 27 — MIRI's Approach. How we identify technical problems to work on.

- July 31 — MIRI FAQ. Summarizing some common sources of misunderstanding.

- August 3 — When AI Accelerates AI. Some reasons to get started on safety work early.

- August 7 — Target 3: Taking It To The Next Level. MIRI's plans if we hit the $1.5M funding target.

- August 10 — Assessing Our Past And Potential Impact. Why expect MIRI in particular to make a difference?

- August 14 — What Sets MIRI Apart? Distinguishing MIRI from groups in academia and industry.

- August 18 — Powerful Planners, Not Sentient Software. Why advanced AI isn't "evil robots."

- August 28 — AI and Effective Altruism. On MIRI's role in the EA community.

Our hope is that these new resources will help you, our supporters, make more informed decisions during our fundraiser, and also that our fundraiser will serve as an opportunity for you to learn a lot more about our activities and strategic outlook.

This is a critical juncture for the field of AI alignment research. My expectation is that donations today will go much further than donations several years down the line, and time is of the essence if we want to capitalize on our new opportunities.

Your support is a large part of what has put us into the excellent position that we now occupy. Thank you for helping make this exciting moment in MIRI's development possible!

44 comments

comment by iceman · 2015-07-21T19:20:37.971Z

Donated $25,000. My employer will also match $6,000 of that, for a grand total of $31,000.

↑ comment by Fluttershy · 2015-07-24T03:13:44.810Z

Wow, thanks!

Maybe this means that I can emigrate to Equestria someday. Yay!

↑ comment by Luke_A_Somers · 2015-08-28T02:16:58.564Z

And hopefully it means you won't have to.

comment by James_Miller · 2015-07-21T00:45:17.992Z

Donated $100 and 5000 Stellar.

comment by Davis_Kingsley · 2015-07-21T20:35:48.699Z

I just donated $200.00. Last year's developments have been very promising and I look forward to seeing MIRI progress even further.

comment by DeevGrape · 2015-08-27T02:39:41.179Z

In addition to the ~$15,000 I've donated so far this drive, I'm matching the next 5 donations of (exactly) $1,001 during this fundraiser. It's unlikely I'll donate this money anytime soon without the matching, so I'm hoping my decision is counterfactual enough for the donation-matching skeptics out there :)

To the stars!

↑ comment by Malo (malo) · 2015-08-29T02:37:30.419Z

3 of the 5 $1,001 matches have already been claimed. As additional $1,001 donations come in I'll post updates here.

↑ comment by Malo (malo) · 2015-08-29T19:15:01.295Z

Another $1,001 donation has come in.

One last $1,001 match remaining.

↑ comment by Malo (malo) · 2015-08-30T20:59:23.023Z

Someone just snagged the last $1,001 match. Thanks to all those who donated $1,001 to secure the matching, and DeevGrape for providing it!

comment by [deleted] · 2015-07-21T13:32:59.523Z

By way of my congratulations, and before I actually file a donation (it'll probably be a normal $180), let me just say that this year was only astounding to someone who doesn't know you guys and can't see your abilities.

↑ comment by So8res · 2015-07-21T15:39:08.757Z

Thanks :-)

comment by blogospheroid · 2015-08-20T06:23:10.718Z

I donated $300, which I expect my employer to match. So $600 to AI value alignment here!

comment by tanagrabeast · 2015-07-21T02:14:13.771Z

At the smaller end of the spectrum, I'm using this as an opportunity to zero out several gift cards with awkward remaining balances -- my digital loose change.

Or at least, I started to. There's a trivial inconvenience in that the donation button doesn't let you specify increments smaller than a dollar. So I wouldn't actually be able to zero them out.

This is, of course, a silly obstacle I have more than compensated for with a round donation from my main account. The inconvenience will just have to be borne by those poor retail cashiers with "split transaction" phobia.

comment by Wei Dai (Wei_Dai) · 2015-07-22T00:21:15.417Z

> With this level of funding, we would be able to begin building an entirely new AI alignment research team working in parallel to our current team, working on different problems and taking a different approach. Our current technical agenda is not the only way to approach the challenges that lie ahead, and we would be thrilled to get the opportunity to spark a second research group.

Hi Nate, can you briefly describe this second approach? (Not that I have $6M, but I'm curious what other FAI approach MIRI considers promising.)

On another note, do you know anything about Elon Musk possibly having changed his mind about the threat of AI and how that might affect future funding of work in this area? From this report of a panel discussion at ICML 2015:

> Apparently Hassabis of DeepMind has been at the core of recent AI fear from prominent figures such as Elon Musk, Stephen Hawking and Bill Gates. Hassabis introduced AI to Musk, which may have alarmed him. However, in recent months, Hassabis has convinced Musk, and also had a three-hour-long chat with Hawking about this. According to him, Hawking is less worried now. However, he emphasized that we must be ready, not fear, for the future.

↑ comment by So8res · 2015-07-22T03:19:29.904Z

> Hi Nate, can you briefly describe this second approach?

Yep! This is a question we've gotten a few times already, and the answer will likely appear in a blog post later in the fundraiser. In the interim, the short version is that there are a few different promising candidates for a second approach, and we haven't settled yet on exactly which would be next in line. (This is one of the reasons why our plans extend beyond $6M.) I can say that the new candidates would still be aimed towards ensuring that the creation of human-programmed AGI goes well -- the other pathways (whole-brain emulation, etc.) are very important, but they aren't within our purview. It's not clear yet whether we'd focus on new direct approaches to the technical problems (such as Paul Christiano's "can we reduce this problem to reliable predictions about human behavior" approach) or on projects that would be particularly exciting to modern AI professionals or modern security professionals, in an attempt to build stronger bridges to academia.

In fact, I'd actually be quite curious about which approaches you think are the most promising before deciding.

> On another note, do you know anything about Elon Musk possibly having changed his mind about the threat of AI and how that might affect future funding of work in this area?

I wasn't at the ICML workshop, so I can't say much about how that summary was meant to be interpreted. That said, I wouldn't read too much into it: "Hassabis has convinced Musk" doesn't tell us much about what Demis claimed. My best guess from the context is that he said he convinced Elon that overhyping concern about AI could be harmful, but it's hard to be sure.

I can say, however, that I'm in contact with both Elon and Demis, and that I'm not currently worried about Elon disappearing into the mist :-)

↑ comment by Wei Dai (Wei_Dai) · 2015-07-23T00:47:42.033Z

> In fact, I'd actually be quite curious about which approaches you think are the most promising before deciding.

Thanks for asking! Like several other LWers, I think that with the rapid advances in ANN-based AI, it looks like pretty clear sailing for artificial neural networks to become the first form of AGI. With the recent FLI grants there are now a number of teams working on machine learning of human values and making neural networks safer, but nobody so far is taking the long view of asking what happens when an ANN-based AGI becomes very powerful but doesn't exactly share our values or philosophical views. It would be great if there were a team working on those longer-term problems, like how to deal with inevitable differences between the values an AGI has learned and our actual values, and understanding metaphilosophy well enough to be able to teach an ANN-based AGI how to "do philosophy".

> I can say, however, that I'm in contact with both Elon and Demis, and that I'm not currently worried about Elon disappearing into the mist :-)

That's good. :) BTW, are you familiar with Demis's views? From various news articles quoting him, he comes across as quite complacent but I wonder if that's a mistaken impression or if he has different private views.

↑ comment by IlyaShpitser · 2015-08-24T19:58:48.655Z

> Thanks for asking! Like several other LWers, I think that with the rapid advances in ANN-based AI, it looks like pretty clear sailing for artificial neural networks to become the first form of AGI.

I am getting the most uncanny sense of deja vu.

↑ comment by jacob_cannell · 2015-07-22T01:07:22.050Z

That paragraph almost makes sense, but it seems to be missing a key sentence or two. Hassabis is "at the core of recent AI fear" and introduced AI to Musk, but then Hassabis changed his mind and proceeded to undo his previous influence? It's hard to imagine those talks - "Oh yeah, you know this whole AI risk thing I got you worried about? I was wrong, it's no big deal now."

↑ comment by Vaniver · 2015-07-22T01:51:39.373Z

It seems more likely to me that Hassabis said something like "with things as they stand now, a bad end seems most likely." They start to take the fear seriously, act on it, and then talk to Hassabis again, and he says "with things as they stand now, a bad end seems likely to be avoided."

In particular, we seem to have moved from a state where AI risk needed more publicity to a state where AI risk has the correct amount of publicity, and more might be actively harmful.

comment by Rob Bensinger (RobbBB) · 2015-07-31T09:41:00.349Z

We've passed our first fundraising target! And we still have four weeks left out of our six-week fundraiser!

New donations now go toward the target #2 plans, Accelerated Growth!

↑ comment by Rob Bensinger (RobbBB) · 2015-08-26T20:47:45.230Z

We've passed our second target! For the last week of the fundraiser, each further donation helps us execute on more of our Taking MIRI to the Next Level plans.

comment by Yudkowsky_is_awesome · 2015-08-24T19:17:14.216Z

I'm so revved up about MIRI's research!