xAI announces Grok, beats GPT-3.5

post by Nikola Jurkovic (nikolaisalreadytaken) · 2023-11-05T22:11:15.274Z · 6 comments

This is a link post for https://x.ai/



Some highlights:

Grok is still a very early beta product – the best we could do with 2 months of training – so expect it to improve rapidly with each passing week with your help.

I find it very interesting that they managed to beat GPT-3.5 with only 2 months of training! This makes me think xAI might become a major player in AGI development.

By creating and improving Grok, we aim to:

- Gather feedback and ensure we are building AI tools that maximally benefit all of humanity. We believe that it is important to design AI tools that are useful to people of all backgrounds and political views. We also want to empower our users with our AI tools, subject to the law. Our goal with Grok is to explore and demonstrate this approach in public.

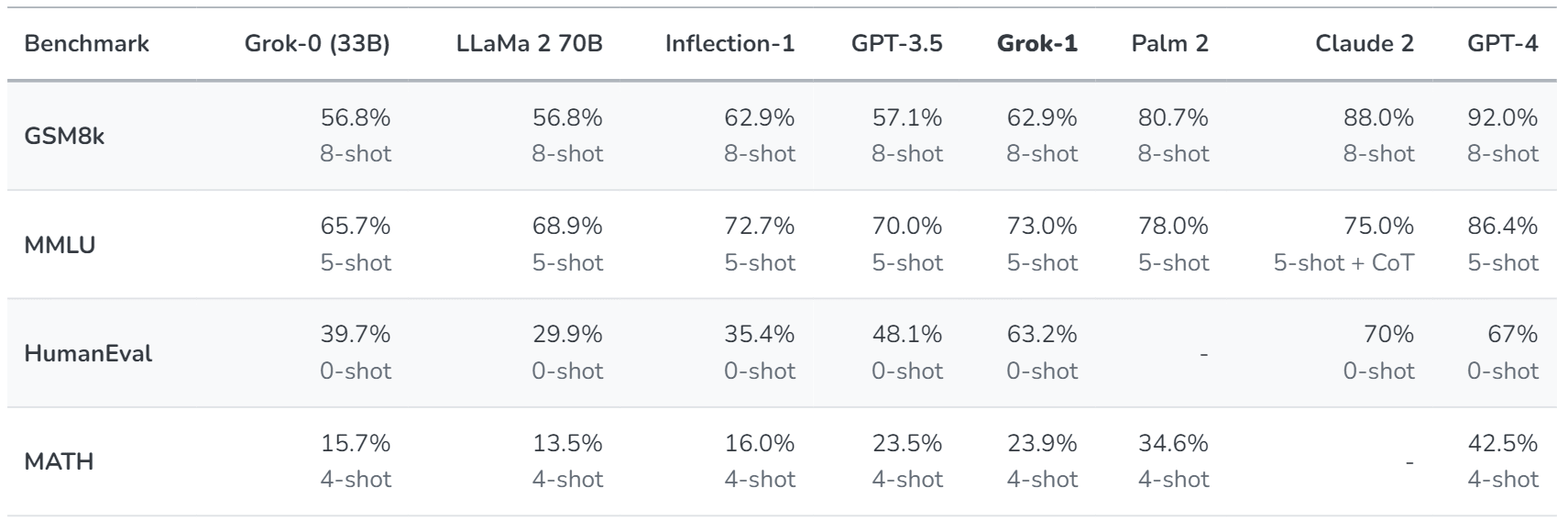

On these benchmarks, Grok-1 displayed strong results, surpassing all other models in its compute class, including ChatGPT-3.5 and Inflection-1. It is only surpassed by models that were trained with a significantly larger amount of training data and compute resources like GPT-4. This showcases the rapid progress we are making at xAI in training LLMs with exceptional efficiency.

We believe that AI holds immense potential for contributing significant scientific and economic value to society, so we will work towards developing reliable safeguards against catastrophic forms of malicious use. We believe in doing our utmost to ensure that AI remains a force for good.

6 comments


comment by Sergii (sergey-kharagorgiev) · 2023-11-06T06:27:03.970Z

I'm not skeptical, but it's still a bit funny to me when people rely so much on benchmarks, after reading "Pretraining on the Test Set Is All You Need" https://arxiv.org/pdf/2309.08632.pdf

comment by Algon · 2023-11-06T00:02:50.154Z

> I find it very interesting that they managed to beat GPT-3.5 with only 2 months of training! This makes me think xAI might become a major player in AGI development.

Did they do it using substantially less compute as well, or something? Because otherwise, I don't see what's so impressive about this.

↑ comment by the gears to ascension (lahwran) · 2023-11-06T07:58:43.484Z

Money

↑ comment by Algon · 2023-11-06T11:41:41.319Z

Isn't that effectively the same thing as using substantially less compute?

↑ comment by the gears to ascension (lahwran) · 2023-11-06T16:35:36.484Z

I suppose being pithy backfired here. I meant that they may have spent lots of money and may have more to spend.
