AI Safety Newsletter #40: California AI Legislation Plus, NVIDIA Delays Chip Production, and Do AI Safety Benchmarks Actually Measure Safety?

post by Corin Katzke (corin-katzke), Julius (julius-1), Alexa Pan (alexa-pan), Dan H (dan-hendrycks) · 2024-08-21T18:09:33.284Z · LW · GW

This is a link post for https://newsletter.safe.ai/p/aisn-40-california-ai-legislation

Contents

- SB 1047, the Most-Discussed California AI Legislation

- NVIDIA Delays Chip Production

- Safetywashing: Do AI Safety Benchmarks Actually Measure Safety Progress?

- Links: Government, Industry, Opinion, Research

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

SB 1047, the Most-Discussed California AI Legislation

California's Senate Bill 1047 has sparked discussion over AI regulation. While state bills often fly under the radar, SB 1047 has garnered attention due to California's unique position in the tech landscape. If passed, SB 1047 would apply to all companies doing business in the state, potentially setting a precedent for AI governance more broadly.

This newsletter examines the current state of the bill, which has been amended several times in response to stakeholder feedback. We'll cover recent debates surrounding the bill, support from AI experts, opposition from the tech industry, and public opinion based on polling.

The bill mandates safety protocols, testing procedures, and reporting requirements for covered AI models. Introduced by State Senator Scott Wiener and cosponsored by CAIS Action Fund, the bill aims to establish safety guardrails for the most powerful AI models. Specifically, it would require companies building AI systems that cost over $100 million to develop and are trained on a massive amount of compute to implement comprehensive safety measures, conduct rigorous testing, and mitigate potential severe risks. The bill also includes new whistleblower protections.

A group of renowned AI experts has thrown its weight behind the bill. Earlier this month, Yoshua Bengio, Geoffrey Hinton, Lawrence Lessig, and Stuart Russell penned a letter expressing their strong support for SB 1047. They argue that the next generation of AI systems poses "severe risks" if "developed without sufficient care and oversight." Bengio told TIME, "I worry that technology companies will not solve these significant risks on their own while locked in their race for market share and profit maximization."

However, SB 1047 faces opposition from some industry voices. Perhaps the most prominent critic of the bill has been venture capital firm Andreessen Horowitz (a16z). They argue that it would stifle innovation, shutter small businesses, and “let China take the lead on AI development.”

Sen. Wiener responds to critics. In a recent open letter to a16z and Y Combinator, Sen. Wiener calls for fact-based debate about what SB 1047 would and would not do. He rightly observes that many public criticisms misunderstand the bill’s scope and implications.

For example, the shutdown requirement extends only to covered models (those that cost over $100 million to train) that are directly controlled by the developer. Contrary to what academics who have received funding from a16z have claimed, it doesn’t prevent open source models, since those would no longer be under the developer’s control.

While industry has raised concerns with the bill, public opinion favors SB 1047. According to recent polling, 66% of California voters “don’t trust tech companies to prioritize AI safety on their own”, and 82% support the core provisions of SB 1047.

Anthropic’s “Support if Amended” letter. Anthropic sent a letter to the chair of the California Assembly Appropriations Committee stating that they would support the legislation if it underwent various amendments, such as “Greatly narrow the scope of pre-harm enforcement” (remove civil penalties for violations of the law unless they result in harm or an imminent risk to public safety), “Eliminate the Frontier Model Division,” “Eliminate Section 22604 (know-your-customer for large cloud compute purchases),” “Removing mentions of criminal penalties or legal terms like ‘perjury,’” and so on.

On August 15th, the Assembly Appropriations Committee passed SB 1047 with significant amendments that Senator Wiener proposed in response to various stakeholders, including Anthropic. Anthropic has not yet weighed in on the changes. The bill is now set to advance to the Assembly floor.

In the coming weeks, SB 1047 is expected to make it to the desk of California Governor Gavin Newsom. How the Governor will decide is still up in the air.

Either way, what is clear is that California is setting the terms of the national debate on AI regulation.

NVIDIA Delays Chip Production

Nvidia’s new AI chip launch was delayed due to design flaws. In this story, we discuss the delay’s impact on Nvidia and AI companies.

Nvidia’s new AI chip is delayed by months. In March, Nvidia announced its next-generation GPUs, the Blackwell series, which promise a “huge performance upgrade” for AI development. Companies including Google, Meta, and Microsoft have collectively ordered tens of billions of dollars’ worth of the first chip in the series, the GB200. Nvidia recently told customers that mass production of the GB200 would be delayed three months or more. This means Blackwell-powered AI servers, initially expected this year, would not be mass produced until next spring.

The delay is mainly caused by the chip’s design flaws. Chip producer TSMC discovered problems with Nvidia’s physical design of the Blackwell chip, which decreased its production yield. In response, Nvidia is tweaking its design and will conduct more tests before mass production. Some also worry about TSMC’s capacity to meet production demand, given Nvidia’s new, more complex packaging technology for Blackwell. So, while the delay is primarily due to faulty design, Nvidia may face other challenges in shipping its orders.

The delay likely has limited impact on Nvidia... Nvidia shares dropped over 6% last week after reports of the delay, extending its losses since facing U.S. antitrust investigations. Analysts don't expect further financial volatility, however, given Nvidia’s large competitive lead in the AI chips market.

...but may cause disruptions to AI companies. Several Nvidia customers are investing in data centers to build new AI products. OpenAI, for example, has started training its next frontier model and allegedly expected Blackwell clusters by next January. The delay may widely impact AI training and deployment progress, unless, as some argue, Nvidia’s existing supply of Hopper chips can help companies stay on schedule.

Safetywashing: Do AI Safety Benchmarks Actually Measure Safety Progress?

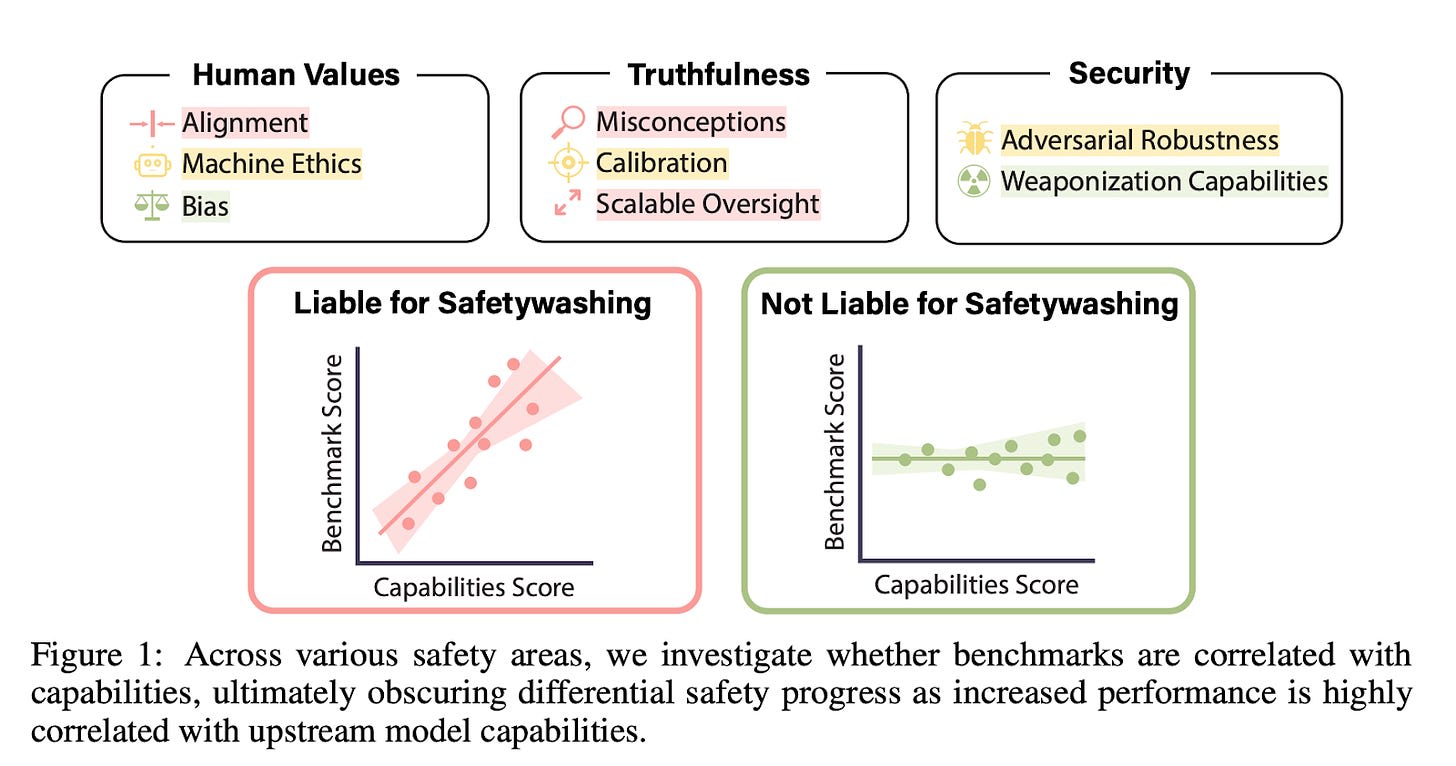

In a recent paper, researchers from the Center for AI Safety conducted the largest meta-analysis of safety benchmarks to date, analyzing the performance of dozens of large language models (LLMs) on safety and capabilities benchmarks to understand the relationship between general model capabilities and safety benchmark scores. In this story, we discuss the paper’s findings.

Around half of the AI safety benchmarks measured were highly correlated with general upstream capabilities and did not clearly measure a phenomenon distinct from them. Increased performance on these benchmarks might therefore reflect general upstream capability improvements rather than specific safety advancements. Safety benchmarks have rarely been scrutinized for this correlation, under which simply scaling training compute and data can boost scores.

To determine a safety benchmark’s “capabilities correlation,” the researchers first calculated a capabilities score for each of dozens of models, using principal component analysis to derive a single score that captures ~75% of the variance across common capabilities benchmarks (e.g. MATH, MMLU). As it happens, this capabilities score has a 96.5% correlation with log(training FLOP). They then calculated the correlation between these capabilities scores and scores on various safety benchmarks across models.

They found that correlation varies across safety areas:

- High correlation with general upstream capabilities/compute: Alignment, scalable oversight, truthfulness, static adversarial robustness, ethics knowledge

- Low correlation with general upstream capabilities/compute: Bias, dynamic adversarial robustness, RMS calibration error

- Negative correlation with general upstream capabilities/compute: Sycophancy, weaponization risk
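
The procedure described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the researchers' code: the data is synthetic, the names `capabilities_scores`, `spearman`, `safety_tracks`, and `safety_independent` are hypothetical, and the choice of Spearman rank correlation is an assumption, since the summary here does not say which correlation measure was used.

```python
import numpy as np

def capabilities_scores(bench):
    """Return (first-principal-component score per model, variance explained).

    `bench` is a (models x benchmarks) matrix of capabilities benchmark scores.
    """
    # Standardize each benchmark so no single benchmark dominates the component.
    z = (bench - bench.mean(axis=0)) / bench.std(axis=0)
    # The right singular vectors of the standardized matrix are the principal axes.
    _, s, vt = np.linalg.svd(z, full_matrices=False)
    pc1 = vt[0]
    # The sign of a principal axis is arbitrary; orient it so higher means more capable.
    if pc1.sum() < 0:
        pc1 = -pc1
    explained = float(s[0] ** 2 / np.sum(s ** 2))
    return z @ pc1, explained

def spearman(x, y):
    """Spearman rank correlation (assumes no tied values)."""
    rank_x = np.argsort(np.argsort(x))
    rank_y = np.argsort(np.argsort(y))
    return float(np.corrcoef(rank_x, rank_y)[0, 1])

# Synthetic stand-in data: 30 hypothetical models, 4 hypothetical capabilities benchmarks.
rng = np.random.default_rng(0)
n_models = 30
latent = rng.normal(size=n_models)  # made-up "general capability" per model
bench = np.column_stack(
    [latent + 0.3 * rng.normal(size=n_models) for _ in range(4)]
)

scores, explained = capabilities_scores(bench)

# Two made-up safety benchmarks: one that tracks capability, one that does not.
safety_tracks = latent + 0.3 * rng.normal(size=n_models)
safety_independent = rng.normal(size=n_models)

corr_tracks = spearman(scores, safety_tracks)
corr_independent = spearman(scores, safety_independent)

print(f"variance explained by first component: {explained:.2f}")
print(f"capabilities correlation (tracking benchmark): {corr_tracks:.2f}")
print(f"capabilities correlation (independent benchmark): {corr_independent:.2f}")
```

A benchmark like the first synthetic one would count as highly correlated with capabilities, so gains on it would be hard to distinguish from general scaling. The second is closer to what a decorrelated benchmark would look like.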

Intuitive arguments for correlations often fail. The researchers show that intuitive arguments (the complicated verbal arguments common in “alignment theory”) can be a highly unreliable guide to a research area's relation to upstream general capabilities. Whether a safety benchmark correlates with capabilities often depends on how a specific safety property is operationalized, so empirical measurement is necessary.

Safety benchmarks can “safetywash” general capabilities research and scaling. The research shows that the distinction between safety improvements and capability advancements is often blurred, which complicates efforts to measure genuine safety progress. This complexity arises from the intertwined nature of safety and capabilities: more capable AI systems might be less prone to random errors but potentially more harmful if used maliciously. Since models have more malicious use risk as they become more powerful, scaling does not necessarily imply more safety. In the worst case, this blurred distinction between safety and capabilities can be an instrument for "safetywashing", where capability improvements are misrepresented as safety advancements.

The researchers recommend that future safety benchmarks be decorrelated from capabilities by design, so that they measure meaningfully distinct safety attributes and can ultimately guide the development of safety techniques.

Links

Government

- Sen. Cantwell introduced a bill to formally establish the US AI Safety Institute.

- Sens. Coons, Blackburn, Klobuchar, and Tillis introduced the NO FAKES Act, which would hold individuals or companies liable for damages for producing, hosting, or sharing unapproved AI-generated replicas of individuals.

- Reps. Auchincloss and Hinson introduced the Intimate Privacy Protection Act, which would carve out intimate AI deepfakes from Section 230 immunity.

- The US AI Safety Institute published a report on managing misuse risk for dual use foundation models.

- The UK Labour government shelved plans for an £800m exascale supercomputer and £500m in funding for AI computing power.

- The NYT reports that Chinese AI companies and state-affiliated entities are successfully circumventing US export controls to acquire frontier AI chips.

- A federal judge ruled that Google has an illegal monopoly on search.

Industry

- xAI announced its new frontier model, Grok-2, which is approximately as good as GPT-4o, Gemini Pro, and Claude 3.5 Sonnet.

- Costs to consumers for AI inference have decreased by orders of magnitude since last year.

- The tech sell-off earlier this month stoked fears of an AI bubble.

- OpenAI cofounder John Schulman left the company for Anthropic.

- OpenAI developed a tool to detect content generated by ChatGPT but has held it back from release for over a year.

- Zico Kolter, a Carnegie Mellon ML professor, joined OpenAI’s board of directors.

- Google acquihired Character.AI.

- OpenAI released the system card for GPT-4o, which includes analysis of GPT-4o’s bioweapon capabilities.

- Anthropic was sued for copyright violation.

Opinion

- An essay in Vox argues that Anthropic is no exception to the rule that incentives in the AI industry will pressure companies to deprioritize safety.

- In a Washington Post opinion piece, Anthony Aguirre pushes back against Sam Altman’s national security piece in the same paper.

- An article in The Nation argues that industry opposition to SB 1047 suggests that previous calls for regulation from AI companies were disingenuous.

Research

- Sakana.AI introduced an automated AI Scientist with an accompanying research paper.

- A long list of AI governance researchers published a paper detailing open problems in technical AI governance.

- CNAS released a report on AI and the evolution of biological national security risks.

- XBOW released research showing its automated cybersecurity systems matched the performance of top human pentesters.

See also: CAIS website, CAIS X account, our ML Safety benchmark competition, our new course, and our feedback form. The Center for AI Safety is also hiring a project manager.

Double your impact! Every dollar you donate to the Center for AI Safety will be matched 1:1 up to $2 million. Donate here.
