Who's Working On It? AI-Controlled Experiments

post by sarahconstantin · 2025-04-25


A lot of applications of AI in the experimental sciences boil down to data analysis.

You take existing datasets — be they genomic sequences, images, chemical measurements, molecular structures, or even published papers — and use a model to analyze them.

You can do a lot of useful things with this, such as:

- inference, to predict the properties of a new example when part of its data is missing
  - e.g. protein structure models predict unknown 3D structures from known amino acid sequences
- generation, to invent typical or representative examples of a class
  - e.g. many molecules “generated by AI” are invented structures produced in response to a query specifying a chemical or structural property, and predicted to be representative of the real molecules that fit that criterion
- natural-language search, to pull up and adapt information from a dataset so that it answers a plain-English query

Mundane though it may sound, this dramatically speeds up learning and interdisciplinary idea generation. It might ultimately have a bigger impact on science than more specialized models trained on experimental data.

But one limit on all these kinds of models is that they’re piggybacking on data collected by human scientists. They can find connections that no human has discovered, and they can attempt to generalize out of their dataset, but until they are connected in a loop that includes physical experiments, I don’t think it’s fair to consider them “AI scientists.”

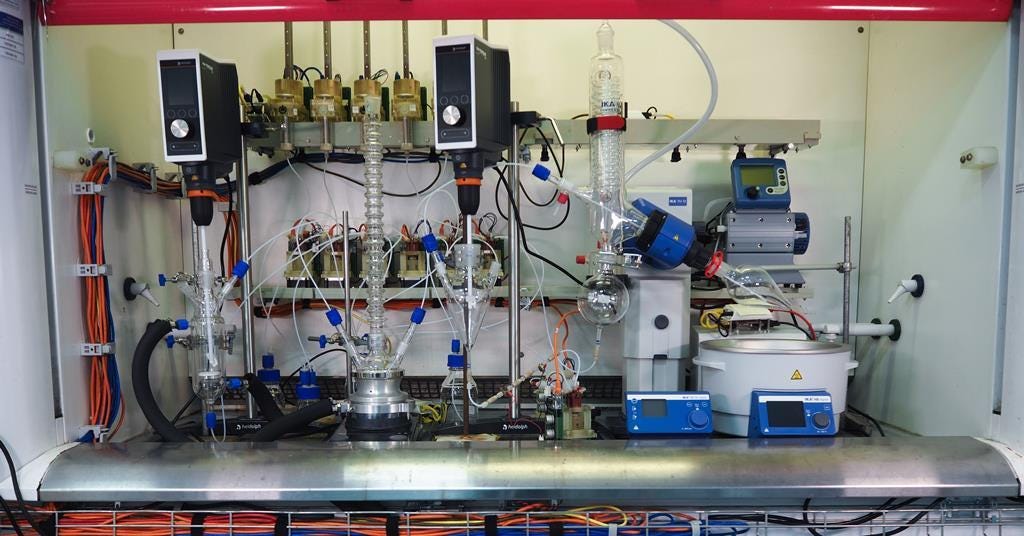

However, once you do close the loop, allowing the AI to suggest experiments, observe the results, and iterate, then I think one of the most important in-principle differences between AIs and humans falls away.

Experimentation allows us to learn causality, not just correlation. Experimentation is how a baby learns to walk; it’s how animals build the kind of physical reasoning that LLMs so often visibly fail at. What would give me confidence that an AI could autonomously invent new technologies or discover new phenomena in the physical world is experimentation, ideally with enough sensor logs to build tacit knowledge of how real-world physical projects fail and need to be adjusted or repaired, not just an idealized matrix of “experimental results” produced after human experimenters have ironed out all the difficulties.

I am, of course, not alone in noticing this! After a few years when it seemed like nobody was doing this, there are now companies based on this very model.

Below the paywall, a survey of who’s working on this kind of problem.
