How to Disentangle the Past and the Future

post by royf · 2013-01-02T17:43:27.453Z · 13 comments

I'm on my way to an important meeting. Am I worried? I'm not worried. The presentation is on my laptop. I distinctly remember putting it there (in the past), so I can safely predict that it's going to be there when I get to the office (in the future) - this is how well my laptop carries information through space and time.

My partner has no memory of me copying the file to the laptop. For her, the past and the future have mutual information: if Omega assured her that I'd copied the presentation, she would be able to predict the future much better than she can now.

For me, the past and the future are much less statistically dependent. Whatever entanglement remains between them is due to my memory not being perfect. If my partner suddenly remembers that she saw me copying the file, I will be a little bit more sure that I remember correctly, and that we'll have it at the meeting. Or if somehow, despite my very clear mental image of copying the file, it's not there at the meeting, my first suspicion will nevertheless be that I hadn't copied it after all.

These unlikely possibilities aside, my memory does serve me. My partner is much less certain of the future than I am, and more to the point, her uncertainty would decrease much more than mine if we both suddenly became perfectly aware of the past.

But now she turns on my laptop and checks. The file is there, yes, I could have told her that. And now that we know the state of my laptop, the past and the future are completely disentangled: maybe I put the file there, maybe the elves did - it makes no difference for the meeting. And maybe by the time we get to the office a hacker will remotely delete the file - its absence at the meeting will not be evidence that I'd neglected to copy the file: it was right there! We saw it!

(By "entanglement" I don't mean anything quantum theoretic; here it refers to statistical dependence, and its strength is measured by mutual information.)
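A minimal Python sketch can make the laptop story concrete. It builds an explicit joint distribution over four binary variables (whether I copied the file, my recollection of doing so, whether the file is on the laptop now, and whether it is there at the meeting) and computes mutual information in bits. Every probability in it is a made-up assumption for illustration, and the helper names `marginal`, `cond_mutual_info` and `bern` are hypothetical. The three printed numbers correspond to my partner's view (conditioning on nothing), my view (conditioning on my memory), and the view after checking the laptop (conditioning on its present state).

```python
import math
from collections import defaultdict
from itertools import product


def marginal(joint, idx):
    """Marginalize a joint distribution {outcome_tuple: prob} onto positions idx."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in idx)] += p
    return out


def cond_mutual_info(joint, x, y, z=()):
    """I(X;Y|Z) in bits, where x, y, z are tuples of variable positions."""
    pxyz, pxz = marginal(joint, x + y + z), marginal(joint, x + z)
    pyz, pz = marginal(joint, y + z), marginal(joint, z)
    nx, ny = len(x), len(y)
    total = 0.0
    for key, p in pxyz.items():
        if p > 0.0:
            kx, ky, kz = key[:nx], key[nx:nx + ny], key[nx + ny:]
            total += p * math.log2(p * pz[kz] / (pxz[kx + kz] * pyz[ky + kz]))
    return total


def bern(p, value):
    """Probability that a Bernoulli(p) variable takes `value` (0 or 1)."""
    return p if value else 1.0 - p


# Hypothetical numbers, chosen only for illustration.
P_COPIED = 0.5        # partner's prior that I copied the file
P_RECALL_OK = 0.95    # my recollection matches what actually happened
P_ELVES = 0.01        # file is on the laptop although I didn't copy it
P_NOT_HACKED = 0.98   # file survives until the meeting

# Positions: C = copied (past), R = my recollection,
# L = file on laptop now (present), F = file at the meeting (future).
C, R, L, F = 0, 1, 2, 3
joint = {}
for c, r, lap, fut in product((0, 1), repeat=4):
    p = bern(P_COPIED, c)
    p *= P_RECALL_OK if r == c else 1.0 - P_RECALL_OK
    p *= bern(1.0 if c else P_ELVES, lap)
    p *= bern(P_NOT_HACKED if lap else 0.0, fut)
    joint[(c, r, lap, fut)] = p

partner = cond_mutual_info(joint, (C,), (F,))               # knows nothing
me = cond_mutual_info(joint, (C,), (F,), (R,))              # knows my memory
after_check = cond_mutual_info(joint, (C,), (F,), (L,))     # laptop state known
print(f"partner: {partner:.3f} bits, me: {me:.3f} bits, "
      f"after check: {after_check:.3f} bits")
```

With these invented numbers the first quantity is the largest, the second is smaller but still positive (my memory is imperfect), and the third is zero up to floating-point error: once the present state of the laptop is known, the past no longer says anything about the meeting.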

The past and the future have mutual information. This is a necessary condition for life, for intelligence: we use the past to predict and plan for the future, and we couldn't do it if it were useless.

On the other hand, the future is independent of the past given the present. That's not profound metaphysics, that's simply how we define the state of a system: it's everything one needs to know of the past of the system to compute its future. The past is gone, and any information that it had on the future - information we could use to predict and make a better future - is inherited by the present.

But as limited agents inside the system, we don't get to know its entire state. Most of it is hidden from us, behind walls and hills and inside skulls and in nanostructures. So for us, the past and the future are entangled, which means that by learning one we could reduce our uncertainty about the other.

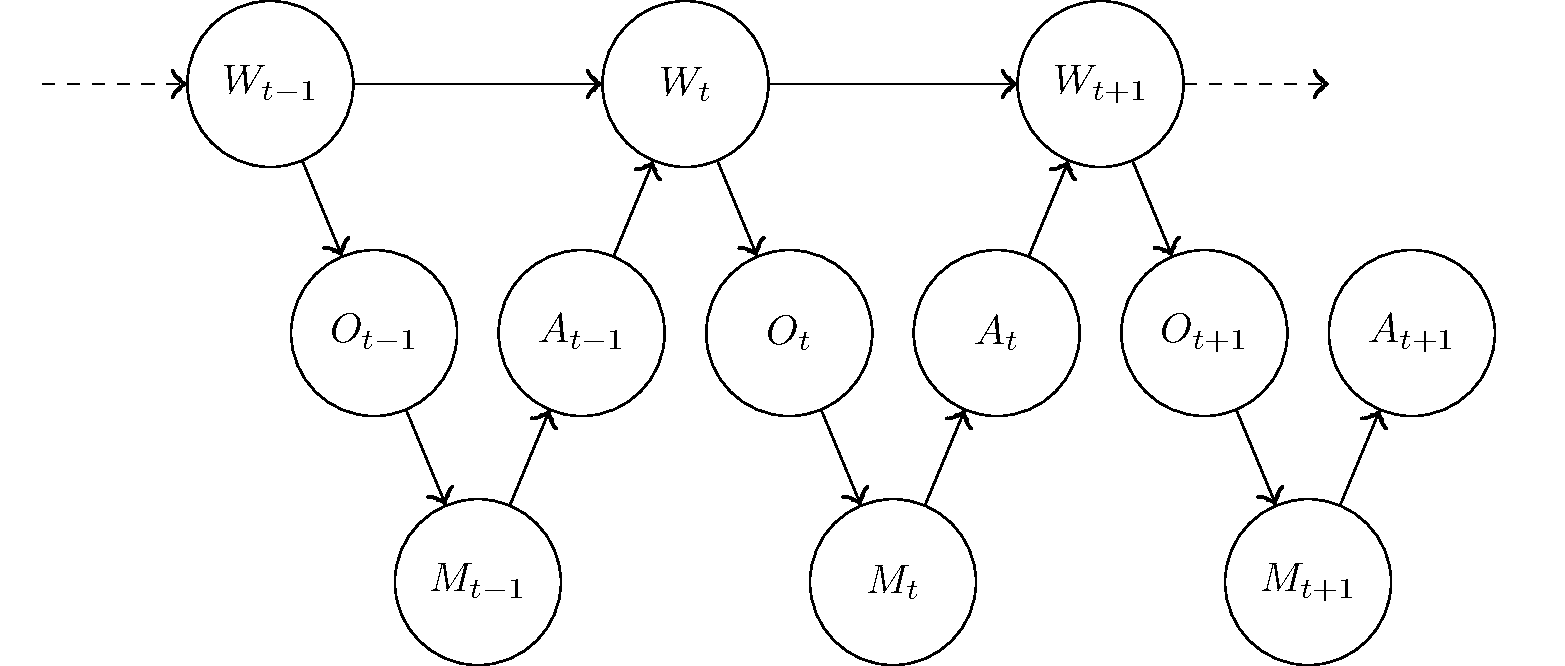

In control theory, memoryless agents have an unchanging internal structure, unable to entangle with the past and carry information useful for the future. Instead they react to whatever last input they received, like a function. These degenerate agents have an internal memory state Mt that depends only on the most recent observation Ot:

Figure 1: Dynamics of a memoryless reinforcement learning agents

$W_t$ is the state of the world outside the agent at time $t$, $O_t$ is the observation the agent makes of the world, $M_t$ is the resulting internal state of the agent, and $A_t$ is the action the agent chooses to take.

When an LED observes voltage, it emits light, regardless of whether it did so a second earlier. When the LED's internal attributes entangle with the voltage, they lose all information about what came before.
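The memoryless agent of Figure 1 can be sketched in a few lines of Python. The `MemorylessAgent` class, its `encode` and `policy` fields, and the 1.8 V threshold are hypothetical illustrations, not taken from the figure; the only point is that the internal state is computed from the latest observation alone, so two histories that end with the same observation leave the agent in the same state.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class MemorylessAgent:
    """M_t is computed from O_t alone, and A_t from M_t alone."""
    encode: Callable[[float], str]   # O_t -> M_t
    policy: Callable[[str], str]     # M_t -> A_t

    def step(self, observation: float) -> Tuple[str, str]:
        memory = self.encode(observation)   # nothing from earlier steps is used
        return memory, self.policy(memory)


def run(agent: MemorylessAgent, observations: List[float]) -> List[Tuple[str, str]]:
    """Feed a sequence of observations and collect the (M_t, A_t) pairs."""
    return [agent.step(o) for o in observations]


# Hypothetical LED: lit whenever the observed voltage exceeds 1.8 V.
led = MemorylessAgent(
    encode=lambda volts: "lit" if volts > 1.8 else "dark",
    policy=lambda m: "emit light" if m == "lit" else "stay dark",
)

history_a = run(led, [0.0, 3.0, 3.0])
history_b = run(led, [3.0, 0.0, 3.0])
print(history_a[-1] == history_b[-1])   # True: different pasts, same final state
```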

When the q key on a keyboard is pressed and released, the keyboard sends a signal to that effect to the computer, and that signal is mostly independent of which keys were pressed before and in what order (with a few exceptions; a keyboard is not entirely memoryless). A keyboard gets entangled with vast amounts of information over the years, but streams it through and loses almost every trace of it within seconds.

For a memoryless agent, all of the information between past and future flows through the environment outside the agent, through $W_t$. The world sans agent retains all the power to disentangle the past and the future: you can check in the graphical model in Figure 1 that $W_{t-1}$ and $W_{t+1}$ are independent given $W_t$.
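The independence claim can also be checked mechanically with d-separation. This sketch uses the moralized-ancestral-graph test: keep only the ancestors of the variables involved, connect ("marry") parents that share a child, drop edge directions, delete the conditioning set, and see whether any path remains. The edges of Figure 1 are an assumption reconstructed from the text ($W_t \to O_t \to M_t \to A_t$, with $W_t$ and $A_t$ both feeding $W_{t+1}$), and node names such as `W0` and `M1` are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, Set

Graph = Dict[str, Set[str]]  # node -> set of its parents


def ancestors(parents: Graph, nodes: Iterable[str]) -> Set[str]:
    """The given nodes plus every node with a directed path into them."""
    seen: Set[str] = set()
    stack = list(nodes)
    while stack:
        n = stack.pop()
        if n not in seen:
            seen.add(n)
            stack.extend(parents.get(n, ()))
    return seen


def d_separated(parents: Graph, xs: Set[str], ys: Set[str], zs: Set[str]) -> bool:
    """Test X ⊥ Y | Z in a DAG via the moralized ancestral graph criterion."""
    keep = ancestors(parents, xs | ys | zs)
    adj: Dict[str, Set[str]] = {n: set() for n in keep}
    for child in keep:
        ps = list(parents.get(child, ()))
        for p in ps:                       # parent-child edges, now undirected
            adj[p].add(child)
            adj[child].add(p)
        for i, a in enumerate(ps):         # "marry" parents of a common child
            for b in ps[i + 1:]:
                adj[a].add(b)
                adj[b].add(a)
    # Delete Z, then search for any undirected path from X to Y.
    frontier = deque(x for x in xs if x not in zs)
    reached = set(frontier)
    while frontier:
        n = frontier.popleft()
        if n in ys:
            return False
        for m in adj[n] - zs - reached:
            reached.add(m)
            frontier.append(m)
    return True


def memoryless_graph(steps: int) -> Graph:
    """Assumed Figure 1 edges: W_t -> O_t -> M_t -> A_t, (W_t, A_t) -> W_{t+1}."""
    g: Graph = {}
    for t in range(steps):
        g[f"W{t}"] = {f"W{t-1}", f"A{t-1}"} if t else set()
        g[f"O{t}"] = {f"W{t}"}
        g[f"M{t}"] = {f"O{t}"}
        g[f"A{t}"] = {f"M{t}"}
    return g


g = memoryless_graph(3)
print(d_separated(g, {"W0"}, {"W2"}, {"W1"}))  # True: W1 separates past and future
print(d_separated(g, {"W0"}, {"W2"}, set()))   # False: unconditionally dependent
print(d_separated(g, {"W0"}, {"W2"}, {"M1"}))  # False: the agent's state doesn't separate
```

The third line corresponds to the observation that the memoryless agent's internal state reveals nothing about the link between past and future.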

The internal state $M_t$ of the memoryless agent, on the other hand, of course reveals nothing about the link between past and future. Looking at my keyboard, you can't tell if I copied the presentation to my laptop, and if it's going to be there in half an hour.

Intelligent agents need to have memory:

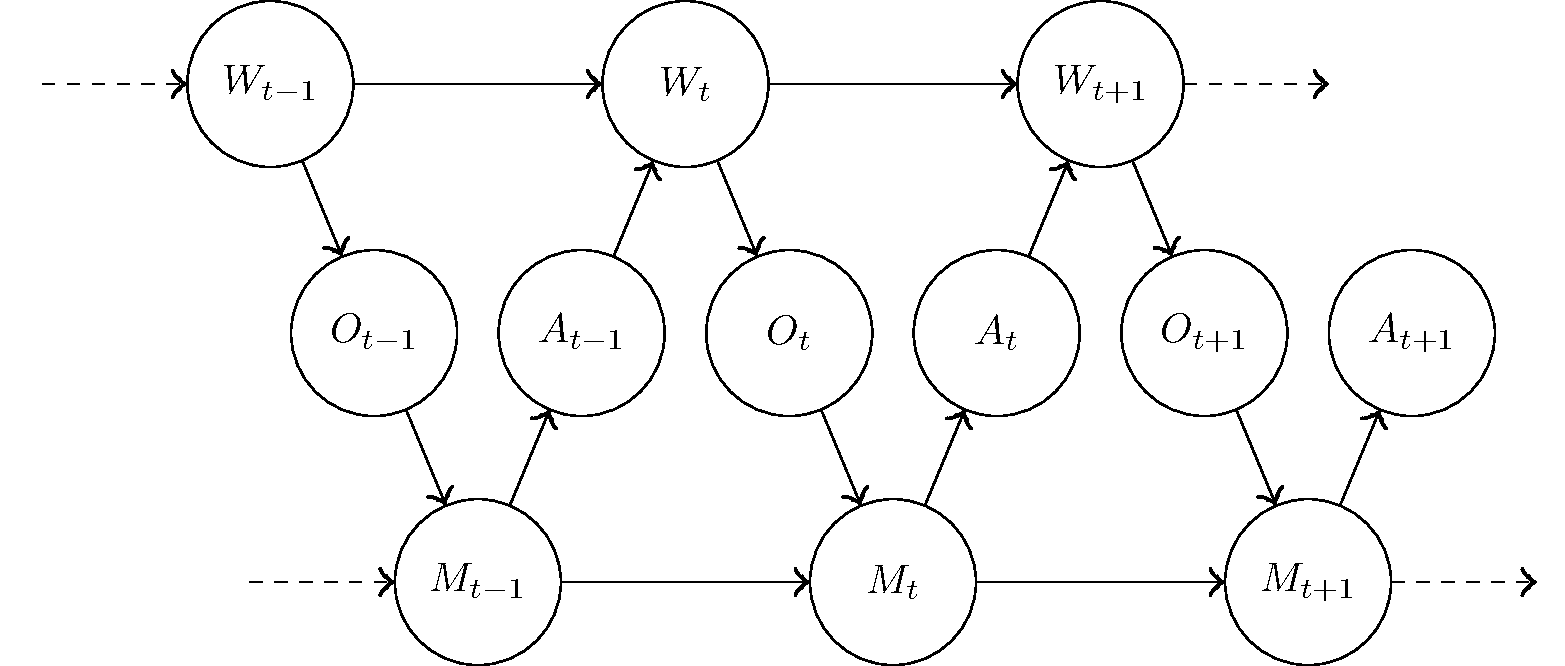

Figure 2: General dynamics of a reinforcement learning agent

Now control over the flow of information has shifted, to some extent, in favor of the agent. The agent has a much wider channel over which to receive information from the past, and it can use this information to recover some truths about the present which aren't currently observable.

The past and the future are no longer independent given $W_t$ alone; you need $M_t$ and $W_t$ together to completely separate the past and the future. An agent with memory of how $W_t$ came to be can partly play both roles, thus making itself a better separator than its memoryless counterpart could.
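Here is a numerical sketch of this point, using exact enumeration over two time steps. It assumes that in Figure 2 the memory $M_t$ depends on both $M_{t-1}$ and $O_t$, and it uses a hypothetical latch memory $M_t = \max(M_{t-1}, O_t)$, so that once the agent has observed a 1 it remembers it. All probability tables and helper names (`build_joint`, `cmi`, and so on) are invented for illustration. Setting `remember=False` turns the agent back into a memoryless one ($M_t = O_t$) for comparison.

```python
import math
from collections import defaultdict
from itertools import product


def marginal(joint, idx):
    """Marginalize {outcome_tuple: prob} onto the positions in idx."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in idx)] += p
    return out


def cond_mutual_info(joint, x, y, z=()):
    """I(X;Y|Z) in bits; x, y, z are tuples of variable positions."""
    pxyz, pxz = marginal(joint, x + y + z), marginal(joint, x + z)
    pyz, pz = marginal(joint, y + z), marginal(joint, z)
    nx, ny = len(x), len(y)
    total = 0.0
    for key, p in pxyz.items():
        if p > 0.0:
            kx, ky, kz = key[:nx], key[nx:nx + ny], key[nx + ny:]
            total += p * math.log2(p * pz[kz] / (pxz[kx + kz] * pyz[ky + kz]))
    return total


def bern(p, v):
    return p if v else 1.0 - p


# Hypothetical conditional probability tables (all variables binary).
P_W0 = 0.3
P_OBS = {0: 0.2, 1: 0.9}                   # P(O_t = 1 | W_t)
P_ACT = {0: 0.1, 1: 0.8}                   # P(A_t = 1 | M_t)
P_NEXT = {(0, 0): 0.1, (0, 1): 0.6,        # P(W_{t+1} = 1 | W_t, A_t)
          (1, 0): 0.5, (1, 1): 0.95}
NAMES = ("W0", "O0", "M0", "A0", "W1", "O1", "M1", "A1", "W2")


def build_joint(remember):
    """Joint over NAMES; M1 latches past observations if remember, else M1 = O1."""
    joint = {}
    for w0, o0, a0, w1, o1, a1, w2 in product((0, 1), repeat=7):
        m0 = o0                                # nothing to remember at t = 0
        m1 = max(m0, o1) if remember else o1
        p = (bern(P_W0, w0) * bern(P_OBS[w0], o0) * bern(P_ACT[m0], a0)
             * bern(P_NEXT[(w0, a0)], w1) * bern(P_OBS[w1], o1)
             * bern(P_ACT[m1], a1) * bern(P_NEXT[(w1, a1)], w2))
        joint[(w0, o0, m0, a0, w1, o1, m1, a1, w2)] = p
    return joint


def cmi(joint, x, y, z=()):
    """Wrapper taking variable names instead of positions."""
    def pos(names):
        return tuple(NAMES.index(n) for n in names)
    return cond_mutual_info(joint, pos(x), pos(y), pos(z))


results = {}
for remember in (False, True):
    joint = build_joint(remember)
    results[remember] = (cmi(joint, ["W0"], ["W2"], ["W1"]),
                         cmi(joint, ["W0"], ["W2"], ["W1", "M1"]))
    print(f"remember={remember}: I(W0;W2|W1)={results[remember][0]:.4f} bits, "
          f"I(W0;W2|W1,M1)={results[remember][1]:.4f} bits")
```

In the memoryless case both quantities are zero up to floating-point error. With the latch, $I(W_0;W_2 \mid W_1)$ is positive, because information about $W_0$ reaches $W_2$ through $M_0 \to M_1 \to A_1$, while $I(W_0;W_2 \mid W_1, M_1)$ is again zero: the world and the agent's memory together separate past and future.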

So here's how to become a good separator of past and future: remember things that are relevant for the future.

Memory is necessary for intelligence; you already knew that. What's new here is a way to measure just how useful memory is. Memory is useful exactly to the extent to which it shifts the power to control the flow of information.

For the agent, memory disentangles the past and the future. If you maintain in yourself some of the information that the past has about the future, you overcome the limitations of your ability to observe the present. I know I put the presentation on my laptop, so I don't need to check that it's there.

For other agents, at the same time, the agent's memory is yet another limitation to their observability. Think of a secret handshake, for example. It's useful precisely because it predicts (and controls) the future for the confidants, while keeping it entangled with the hidden past for everyone else.

Continue reading: How to Be Oversurprised

13 comments

comment by kpreid · 2013-01-03T05:07:55.942Z

> When a light bulb observes voltage, it emits light, regardless of whether it did so a second earlier. When the light bulb's internal attributes entangle with the voltage, they lose all information of what came before.

This example is false. An incandescent light bulb has a memory: its temperature. The temperature both determines the amount of light currently emitted by the bulb, and also the electrical resistance of the filament (higher when hot), which means that even the connected electrical circuit is affected by the state of the bulb — turning on the bulb produces a high “inrush current”.

A much better example would be an LED (not an LED light bulb, which likely contains a stateful power supply circuit), which is stateless for most practical purposes. (For example, once upon a time, there was networking hardware which could be snooped optically: the activity indicator LEDs were simply connected to the data lines and therefore transmitted them as visible light. Modern equipment typically uses programmed blinking intervals instead.)

comment by V_V · 2013-01-04T02:29:15.987Z

That seems to be a convoluted way of defining a Markov process.

It would be preferable if you used standard terminology and provided references to frame the discourse within the theory.

↑ comment by royf · 2013-01-04T07:44:53.631Z

I explained this in my non-standard introduction to reinforcement learning.

We can define the world as having the Markov property, i.e. as a Markov process. But when we split the world into an agent and its environment, we lose the Markov property for each of them separately.

I'm using non-standard notation and terminology because they are needed for the theory I'm developing in these posts. In future posts I'll try to link more to the handful of researchers who do publish on this theory. I did publish one post relating the terminology I'm using to more standard research.

comment by harshhpareek · 2013-01-15T00:10:21.389Z

> On the other hand, the future is independent of the past given the present. That's not profound metaphysics, that's simply how we define the state of a system: it's everything one needs to know of the past of the system to compute its future.

There is some metaphysics involved, particularly in positing that such a "state" exists, i.e. the question of whether the universe is causal.

For example, in classical mechanics, the positions of all the particles alone do not constitute the state. You need both the positions and the velocities. The velocity is a function of the current configuration of the world, and also some instantaneous information (time derivative) about how it's moving through time. However, that you need only the first time derivative (and not the second and further) is a fact of physics, and whether a finite description of the "state" exists, or whether not even an infinite description would suffice, is a metaphysics question.
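A toy illustration of the positions-versus-state point, assuming a unit mass on a spring integrated with semi-implicit Euler (the step size, spring constant, and function names are arbitrary choices for the sketch): two particles at the same position but with opposite velocities have different futures, so position alone cannot serve as the state, whereas the pair (position, velocity) determines everything that follows.

```python
from typing import List, Tuple

State = Tuple[float, float]  # (position x, velocity v)


def step(state: State, dt: float = 0.01, k: float = 1.0) -> State:
    """One semi-implicit Euler step for a unit mass on a spring: x'' = -k x."""
    x, v = state
    v = v - k * x * dt
    return x + v * dt, v


def future_positions(state: State, n: int) -> List[float]:
    """Positions over the next n steps, computed from the full state (x, v)."""
    xs = []
    for _ in range(n):
        state = step(state)
        xs.append(state[0])
    return xs


# Same position, opposite velocities: the position alone cannot predict the future.
right = future_positions((0.0, 1.0), 100)
left = future_positions((0.0, -1.0), 100)
print(right[-1] > 0, left[-1] < 0)   # True True
```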

In a probabilistic model (like quantum mechanics), you're asking for the conditional independence of the present and the past, and similar considerations hold.

comment by amcknight · 2013-01-03T22:55:45.368Z

This seems to me like a major spot where the dualistic model of self-and-world gets introduced into reinforcement learning AI design (which leads to the Anvil Problem). It seems possible to model memory as part of the environment by simply adding I/O actions to the list of actions available to the agent. However, if you want to act upon something read, you either need to model this by having atomic read-and-if-X-do-Y actions, or you still need some minimal memory to store the previous item(s) read in.
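A minimal sketch of the first idea, moving memory into the environment: the agent below is strictly memoryless, but the environment keeps a register the agent can write to, and, as a simplifying assumption that sidesteps separate read actions, the register's contents are included in every observation. The episode structure (a cue at the first step, a query at the last) and all names are hypothetical.

```python
from typing import Optional, Tuple

# Observation: (cue shown this step or None, is this the query step?, register contents)
Obs = Tuple[Optional[str], bool, Optional[str]]
# Action: (value to write into the register or None, answer or None)
Act = Tuple[Optional[str], Optional[str]]


def memoryless_policy(obs: Obs) -> Act:
    """Depends only on the current observation; keeps no internal state."""
    cue, is_query, register = obs
    write = cue                             # store any cue in the external register
    answer = register if is_query else None
    return write, answer


def run_episode(cue: str, length: int = 4) -> Optional[str]:
    """Hypothetical environment: shows `cue` at t=0 and asks for it at the last step."""
    register: Optional[str] = None          # the memory lives in the environment
    answer = None
    for t in range(length):
        obs = (cue if t == 0 else None, t == length - 1, register)
        write, answer = memoryless_policy(obs)
        if write is not None:
            register = write
    return answer


print(run_episode("copied"))   # copied
```

Exposing the register in every observation is what lets the policy act on the stored value without internal state; if reading were a separate action whose result arrived later, the issue raised in the comment would come back.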

comment by [deleted] · 2013-01-02T23:10:06.823Z

Intelligent agents need to have memory? You are otherwise right though, to a Bayesian, priors are certainly important whether updating or not (also, the future doesn't have entirely mutual information with the past, as the future has more entropy).

↑ comment by Vladimir_Nesov · 2013-01-03T02:21:03.063Z

To perform actions, a UDT agent needs knowledge about the world to the same extent as any other agent does. The distinction lies in when (or what for) this knowledge is needed: an updateful agent would use this knowledge early in its decision making process, while an updateless agent can be more naturally modeled as first deciding on a general strategy chosen once for all possible situations, and only then enacting the action prescribed by that strategy for the present circumstances. This last step benefits from having accurate knowledge about the world. (Of course, in practice this knowledge would be needed for efficient decision making from the start, updatelessness is a decision making algorithm design principle, not a decision making algorithm execution principle.)

comment by Kratoklastes · 2013-01-10T06:45:27.723Z

The idea of entanglement of present and future states is what makes me think that dogs invest (albeit with a strategy that has binding constraints on rate of return): they know that by burying a bone, the probability that it will be available for them at some later date is higher than it would be if the bone were left in the open.

In other words, the expected rate of return from burying is greater than for not-burying. (Both expected rates of return are negative, and E[RoR|not-burying] = -100% for relatively short investment horizons.)

It also opens up the idea that the dog knows that the bone is being stored for 'not-now', and that 'not-now' is 'after-now' in some important sense: that is, that dogs understand temporal causality in ways other than simple Pavlovian torture-silliness.

I've also watched a crow diversify: I was tossing him pieces of bread from my motel balcony while on a hiking holiday. He ate the first five or six pieces of bread, then started caching excess bread under a rock. After he'd put a couple of pieces under the rock, he cached additional pieces elsewhere, and did this for several different locations.

E[Pr(loss=100%)] diminishes when you bury the bone, but it diminishes even more if you bury multiple bones at multiple locations (i.e., you diversify).
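The arithmetic behind the diversification point can be sketched under an explicit assumption: each cache is lost independently, with the same probability. The loss probabilities and function names below are invented for illustration. Under that assumption the chance of losing everything falls geometrically with the number of caches, while the expected fraction lost stays the same; splitting the hoard reduces the risk of total loss rather than the average loss.

```python
def prob_total_loss(p_lost_each: float, n_caches: int) -> float:
    """Probability that every cache is lost, assuming caches fail independently."""
    return p_lost_each ** n_caches


def expected_fraction_lost(p_lost_each: float, n_caches: int) -> float:
    """Each of n equal shares is lost with probability p (linearity of expectation)."""
    return sum(p_lost_each / n_caches for _ in range(n_caches))


# Hypothetical loss probabilities, for illustration only.
P_OPEN, P_BURIED = 0.9, 0.3
for label, p, n in [("left in the open", P_OPEN, 1),
                    ("one buried cache", P_BURIED, 1),
                    ("three buried caches", P_BURIED, 3)]:
    print(f"{label}: P(total loss) = {prob_total_loss(p, n):.3f}, "
          f"expected fraction lost = {expected_fraction_lost(p, n):.3f}")
```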

And yet there are still educated people who will tell you that animals' heads are full of little more than "[white noise]...urge to have sex...[white noise]...urge to eat... [white noise]... PREDATOR! RUN... [white noise]..." - again, the tendency to run from a predator indicates that the animal is conscious of its (future) fate if it fails to escape, and it knows that it will 'cease-to-be' if the predator catches it.

The same sort of people believe that animals see in monochrome (in which case, why waste scarce evolutionary resources on developing, e.g., bright plumage?)

Surprise... I'm a vegetarian.

↑ comment by MugaSofer · 2013-01-10T10:56:25.662Z

Of course, it's hard to be sure how much of those behaviors is "programmed in" by evolution.

> The same sort of people believe that animals see in monochrome (in which case, why waste scarce evolutionary resources on developing, e.g., bright plumage?)

In fairness, some animals do see in monochrome. Others can see into the ultraviolet or have even more exotic senses. I guess these people (who I don't seem to have encountered, are you sure you're not generalizing?) are treating all animals as dogs, which isn't uncommon in fiction.

↑ comment by Kratoklastes · 2013-01-10T22:30:43.861Z

Not generalising in the least: I'm a man of the people who interacts often with the common man - particularly the rustic and bucolic variety (from the Auvergne in Deepest Darkest France to the dusty hinterland of rural Victoria and New South Wales).

Everywhere I've ever lived, I've had conversations about animals (most of which I've initiated, I admit - and most of them before I went veggie), with folks ranging from French eleveurs de boeuf to Melbourne barristers and stock analysts: their lack of awareness of the complexity of animal sense organs (and their ignorance of animal awareness research generally) is astounding.

It may well be that you've never met anybody who thinks that all animals see in monochrome - maybe you're young, maybe you don't get out much, or maybe you don't have discussions about animals much. Fortunately, the 'animals see in black and white' trope is dying (as bad ideas should), but it's not dead.

To give you some context: I'm so old that when I went to school we were not allowed to use calculators (mine was the last generation to use trig tables). If you polled people my age (especially outside metropolitan areas) I reckon you would get >50% of them declaring that animals see in "black and white" - that's certainly my anecdotal experience.

Lastly: what makes you think that dogs see in monochrome? As far as we can tell dogs see the visual spectrum in the same way as a red-green colour-blind human does - they have both rods and cones in their visual apparatus, but with different sensitivities than humans' (same for cats, but cats lack cones that filter for red).

Of course we are only using "We can do this, and we have these cells" methods to make that call: as with some migratory birds that can 'see' magnetic fields, dogs and cats may have senses of which we are not yet aware. Cats certainly act as if they know something we don't.

↑ comment by MugaSofer · 2013-01-11T12:21:45.792Z

> Not generalising in the least: I'm a man of the people who interacts often with the common man - particularly the rustic and bucolic variety (from the Auvergne in Deepest Darkest France to the dusty hinterland of rural Victoria and New South Wales).
>
> Everywhere I've ever lived, I've had conversations about animals (most of which I've initiated, I admit - and most of them before I went veggie), with folks ranging from French eleveurs de boeuf to Melbourne barristers and stock analysts: their lack of awareness of the complexity of animal sense organs (and their ignorance of animal awareness research generally) is astounding.

Fair enough.

> It may well be that you've never met anybody who thinks that all animals see in monochrome - maybe you're young, maybe you don't get out much, or maybe you don't have discussions about animals much.

Probably.

> Lastly: what makes you think that dogs see in monochrome? As far as we can tell dogs see the visual spectrum in the same way as a red-green colour-blind human does - they have both rods and cones in their visual apparatus, but with different sensitivities than humans' (same for cats, but cats lack cones that filter for red).

Whoops, you're right. I was misremembering.

> Cats certainly act as if they know something we don't.

... they do? I have four cats, and I've seen them do some pretty stupid things, but nothing that seemed to suggest they have any senses we haven't discovered yet. What kind of thing did you have in mind?

↑ comment by Desrtopa · 2013-09-24T14:19:52.543Z

> ... they do? I have four cats, and I've seen them do some pretty stupid things, but nothing that seemed to suggest they have any senses we haven't discovered yet. What kind of thing did you have in mind?

Well, I've often seen my own cats suddenly go into an alert state, whip their heads around, and stare at nothing in particular, for no reason I could determine. But I've always supposed this was a consequence of having hearing outside a human range of frequency and intensity.