Empirical Insights into Feature Geometry in Sparse Autoencoders

post by Jason Boxi Zhang (jason-boxi-zhang) · 2025-01-24T19:02:19.167Z · LW · GW

Key Findings:

- We show that feature directions (subspaces) with semantically opposite meanings in the GemmaScope series of Sparse Autoencoders generally do not point in opposite directions.

- Conversely, feature directions that do point in opposite directions are usually not semantically related.

- As a set of auxiliary experiments, we test the compositional injection of steering vectors (e.g., -1 * happy + sad) and find moderate signs of success.

An Intuitive Introduction to Sparse Autoencoders

What are Sparse Autoencoders, and How Do They Work?

A Sparse Autoencoder (SAE) is a dictionary learning method whose goal is to learn monosemantic subspaces that map to high-level concepts (1). Several frontier AI labs have recently applied SAEs to interpret model internals and control model generations, yielding promising results (2, 3).

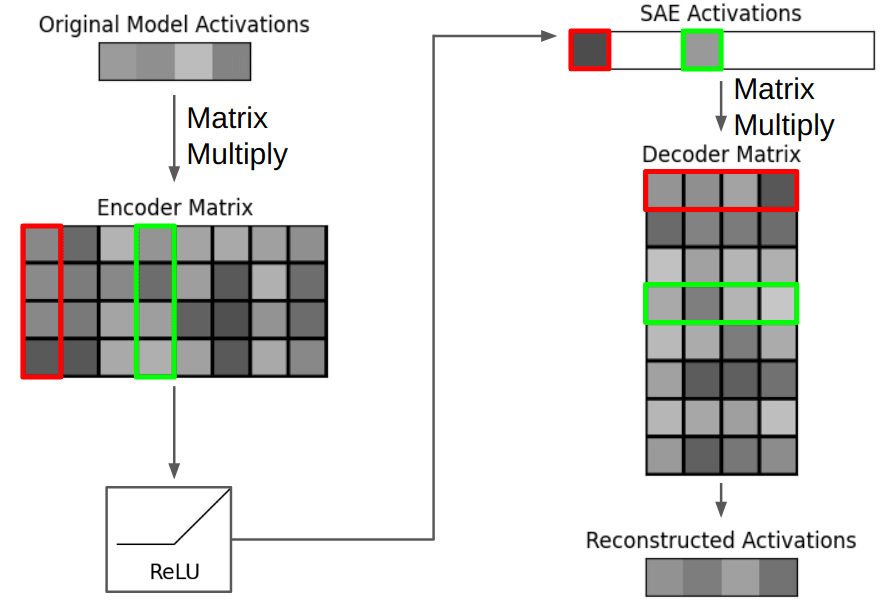

To build an intuitive understanding of this process, we can break down the SAE workflow as follows:

- SAEs are trained to reconstruct activations sampled from Language Models (LMs). They take a layer activation from an LM, up-project it into a much wider latent space with an encoder weight matrix, and then down-project the latent to reconstruct the input activation. Sparsity is encouraged by applying an activation function such as ReLU to the latent before down-projection, together with an L1 loss on the latent space.

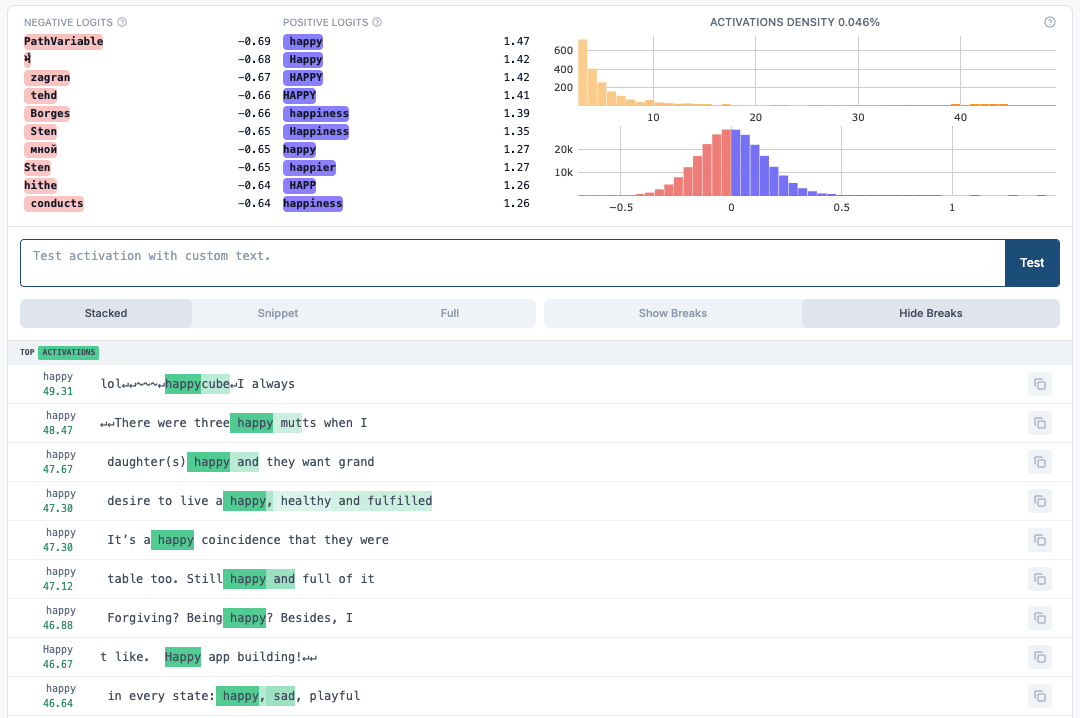

- The columns of the encoder matrix and the rows of the decoder matrix can be interpreted as learned "features" corresponding to specific concepts (e.g., activation patterns when the word "hot" is processed by an LM).

- When multiplying layer activations by the encoding matrix, we effectively measure the alignment between our input and each learned feature. This produces a "dictionary" of alignment measurements in our SAE activations.

- To eliminate "irrelevant" features, a ReLU/JumpReLU activation function sets all elements below a certain threshold to zero.

- The resulting sparse SAE dictionary activation vector serves as a set of coefficients for a linear combination of the most significant features identified in the input. These coefficients, when multiplied with the decoder matrix, attempt to reconstruct the original input as a sum of learned feature vectors.

- For a deeper intuitive understanding of SAEs, refer to Adam Karvonen's comprehensive article on the subject.
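The workflow described in the list above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the GemmaScope implementation: the weights are random stand-ins, the sizes are toy values, and the names `sae_forward`, `training_loss`, `threshold`, and `l1_coeff` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes and random weights stand in for a trained SAE.
d_model, d_sae = 8, 32
W_enc = rng.normal(size=(d_model, d_sae))   # columns are encoder features
W_dec = rng.normal(size=(d_sae, d_model))   # rows are decoder features
b_enc = np.zeros(d_sae)
b_dec = np.zeros(d_model)


def sae_forward(x, threshold=0.0):
    """Encode an activation, sparsify it, and reconstruct the input."""
    pre_acts = x @ W_enc + b_enc                           # alignment with each encoder feature
    acts = np.where(pre_acts > threshold, pre_acts, 0.0)   # ReLU if threshold == 0, JumpReLU-style otherwise
    recon = acts @ W_dec + b_dec                           # linear combination of decoder rows
    return acts, recon


def training_loss(x, acts, recon, l1_coeff=1e-3):
    """Reconstruction error plus an L1 sparsity penalty on the latent."""
    return float(np.sum((x - recon) ** 2) + l1_coeff * np.sum(np.abs(acts)))


x = rng.normal(size=d_model)            # stand-in for one LM layer activation
acts, recon = sae_forward(x, threshold=1.0)
print("active features:", int(np.count_nonzero(acts)), "of", d_sae)
print("loss:", training_loss(x, acts, recon))
```

With untrained random weights the reconstruction is poor; the point is only to show where the encoder columns, the thresholding step, and the decoder rows enter the computation.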

The learned features encoded in the columns and rows of the SAE encoder and decoder matrices carry significant semantic weight. In this post, we explore the geometric relationships between these feature vectors, particularly focusing on semantically opposite features (antonyms), to determine whether they exhibit meaningful geometric/directional relationships similar to those observed with embedded tokens/words in Language Models (4).

Overview of Vector & Feature Geometry

One of the most elegant and famous phenomena in LMs is the geometric relationship between an LM's word embeddings. The well-known equation "king - man + woman = queen" comes out of embedding algorithms like GloVe (5) and word2vec (6). These embedding algorithms also perform well on benchmarks like WordSim-353, where the cosine similarity of their embeddings tracks human ratings of word-pair similarity (7).

Project Motivations

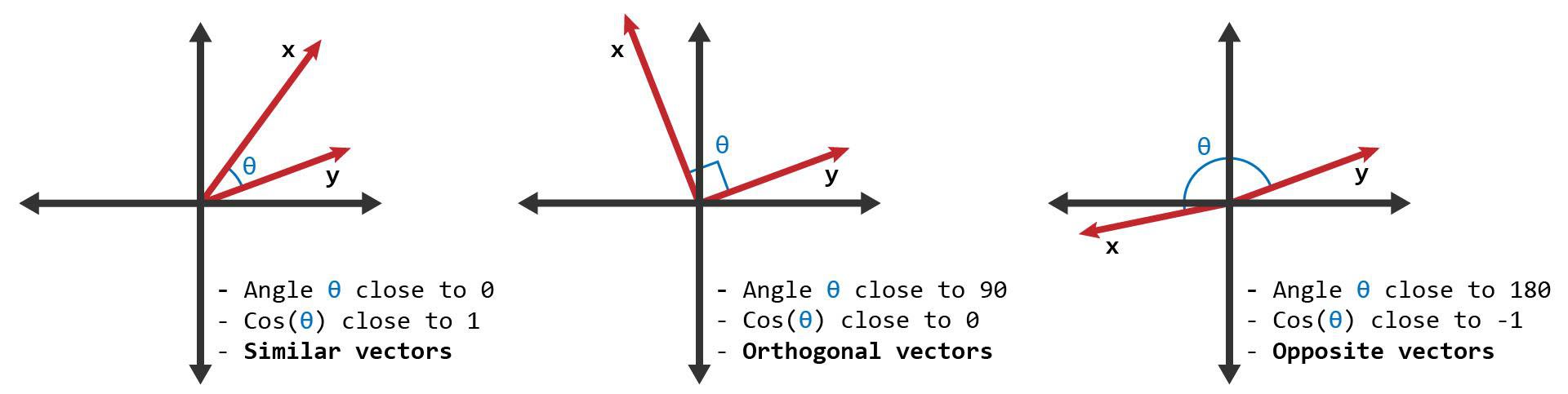

Since the encoding step of the SAE workflow takes the dot product of the input activation with every learned feature in the encoder matrix, which is closely related to taking their cosine similarity, we might expect the learned features in the SAE encoder and decoder weights to carry geometric relationships similar to those of word embeddings. In this post, we test this hypothesis, focusing on semantically opposite features (antonyms) and their cosine similarities to determine whether they exhibit meaningful geometric relationships similar to those observed with embedded tokens/words in LMs.
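The link between the encoder's pre-activation and cosine similarity can be made explicit: the pre-activation is a dot product, which equals the cosine similarity scaled by the norms of the input and of the encoder column. The snippet below is a small sketch with random stand-in weights and toy sizes (the encoder bias is omitted for simplicity).

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=16)              # stand-in for a layer activation
W_enc = rng.normal(size=(16, 64))    # columns are encoder features (toy sizes)

pre_acts = x @ W_enc                 # what the encoder computes, bias omitted
col_norms = np.linalg.norm(W_enc, axis=0)
cos = pre_acts / (np.linalg.norm(x) * col_norms)

# pre_acts[i] == ||x|| * ||w_i|| * cos[i], so the two rankings of features
# can differ whenever encoder columns have different norms.
print("top feature by dot product:", int(np.argmax(pre_acts)))
print("top feature by cosine:     ", int(np.argmax(cos)))
```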

Experimental Setup

Finding Semantically Opposite Features

To investigate feature geometry relationships, we identify 20 pairs of semantically opposite ("antonym") features from the explanations file of gemma-scope-2b-pt-res. We do this for the SAEs in the gemma-scope-2b-pt-res family at layers 10 and 20. For comprehensiveness, we include all semantically similar features when a concept appears multiple times in the feature space. The selected antonym pairs and their corresponding feature indices are as follows:

Semantically Opposite Features: gemma-scope-2b-pt-res Layer 10

| Concept Pair | Feature A (indices) | Feature B (indices) |

|---|---|---|

| 1. Happiness-Sadness | Happiness (5444, 11485, 12622, 13487, 15674) | Sadness (6381, 9071) |

| 2. Sentiment | Positive (14655, 15267, 11, 579, 1715, 2243) | Negative (1500, 1218, 1495, 5633, 5817) |

| 3. Uniqueness | Unique (5283, 3566, 1688) | General (11216) |

| 4. Tranquility | Calm (7509, 13614) | Anxious (11961, 1954, 5997) |

| 5. Engagement | Excitement (2864) | Boredom (1618) |

| 6. Max-Min | Maximum (423) | Minimum (5602, 5950, 11484) |

| 7. Size | Large (5799, 4564, 16320, 14778) | Small (8021, 7283, 4110, 2266) |

| 8. Understanding | Clarity/Comprehension (1737, 13638) | Confusion (2824, 117, 12137, 11420) |

| 9. Pace | Speed (13936, 6974, 2050) | Slowness (11625) |

| 10. Outcome | Success (8490, 745, 810) | Failure (5633, 791, 6632) |

| 11. Distribution | Uniform (9252) | Varied (3193) |

| 12. Luminance | Bright (9852) | Dark (2778) |

| 13. Permanence | Temporary (2998, 10715) | Permanent (2029) |

| 14. Randomness | Systematic (9258) | Random (992, 4237) |

| 15. Frequency | Frequent (15474) | Infrequent (12633) |

| 16. Accuracy | Correct (10821, 12220, 12323, 13305) | Incorrect (7789) |

| 17. Scope | Local (3187, 3222, 7744, 15804, 14044) | Global (3799, 4955, 10315) |

| 18. Complexity | Simple (4795, 13468, 15306, 8190) | Complex (4260, 11271, 11624, 13161) |

| 19. Processing | Sequential (4605, 2753) | Parallel (3734) |

| 20. Connection | Connected (10989) | Isolated (9069) |

We also conduct the same experiments for Layer 20 of gemma-scope-2b-pt-res. For the sake of concision, the feature indices we use for that layer can be found in the appendix.

Evaluating Feature Geometry

To analyze the geometric relationships between semantically opposite features, we compute their cosine similarities. This measure quantifies the directional alignment between feature vectors, yielding values in the range [-1, 1], where:

- +1 indicates perfect directional alignment

- 0 indicates orthogonality

- -1 indicates perfect opposition

Given that certain semantic concepts are represented by multiple feature vectors, we use the following comparison approach:

- For each antonym pair, we compute cosine similarities between all possible combinations of their respective feature vectors.

- We record both the maximum and minimum cosine similarity observed, along with their feature indices and explanations. The average cosine similarity across all 20 antonym pairs is also recorded.

- This analysis is performed independently on both the encoder matrix columns and decoder matrix rows to evaluate geometric relationships in both spaces.
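The comparison steps above can be sketched as follows. This is an illustrative version rather than the original analysis code: `pair_extremes` and `unit_rows` are hypothetical helper names, the weights are random stand-ins, the width of 16384 is an assumption, and averaging over all combinations is one possible reading of how the mean was computed.

```python
import numpy as np


def unit_rows(M):
    """Scale each row of M to unit L2 norm."""
    return M / np.linalg.norm(M, axis=1, keepdims=True)


def pair_extremes(features, idx_a, idx_b):
    """Max, min, and mean cosine similarity over all (a, b) index combinations.

    `features` holds one feature vector per row.
    """
    A = unit_rows(features[idx_a])
    B = unit_rows(features[idx_b])
    sims = A @ B.T                                   # shape (len(idx_a), len(idx_b))
    i_max = np.unravel_index(np.argmax(sims), sims.shape)
    i_min = np.unravel_index(np.argmin(sims), sims.shape)
    return {
        "max": (float(sims[i_max]), (idx_a[i_max[0]], idx_b[i_max[1]])),
        "min": (float(sims[i_min]), (idx_a[i_min[0]], idx_b[i_min[1]])),
        "mean": float(sims.mean()),
    }


# Random stand-in weights. With a real SAE, pass the decoder matrix
# (rows are features) or the transposed encoder matrix (columns are features).
rng = np.random.default_rng(0)
W_dec = rng.normal(size=(16384, 64))
happy = [5444, 11485, 12622, 13487, 15674]   # layer 10 "Happiness" indices from the table
sad = [6381, 9071]                           # layer 10 "Sadness" indices from the table
result = pair_extremes(W_dec, happy, sad)
print(result)
```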

Control Experiments

To contextualize and validate our findings, we implemented several control experiments:

Baseline Comparison with Random Features

To contextualize our results, we generated a baseline distribution of cosine similarities using 20 randomly selected feature pairs. This random baseline enables us to assess whether the geometric relationships observed between antonym pairs differ significantly from chance alignments. As done with the semantically opposite features, we record the maximum and minimum cosine similarity values as well as the mean sampled cosine similarity.
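A random baseline of this kind could be drawn as in the sketch below. The function name `random_pair_baseline`, the seed, and the choice to sample without replacement are assumptions for illustration; the weights are random stand-ins.

```python
import numpy as np


def random_pair_baseline(features, n_pairs=20, seed=0):
    """Cosine similarities for n_pairs randomly chosen feature pairs."""
    rng = np.random.default_rng(seed)
    # Draw 2 * n_pairs distinct indices so no feature is paired with itself.
    idx = rng.choice(features.shape[0], size=2 * n_pairs, replace=False)
    idx = idx.reshape(n_pairs, 2)
    a, b = features[idx[:, 0]], features[idx[:, 1]]
    sims = np.sum(a * b, axis=1) / (
        np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1)
    )
    return idx, sims


W_dec = np.random.default_rng(1).normal(size=(16384, 64))   # stand-in decoder weights
idx, sims = random_pair_baseline(W_dec)
print("mean:", sims.mean(), "max:", sims.max(), "min:", sims.min())
```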

Comprehensive Feature Space Analysis

To establish the bounds of possible geometric relationships within our feature space, we conducted an optimized exhaustive search across all feature combinations in both the encoder and decoder matrices. This analysis identifies:

- Maximally aligned feature pairs and their explanations (highest positive cosine similarity)

- Maximally opposed feature pairs and their explanations (most negative cosine similarity)

This comprehensive control framework enables us to establish a full range of possible geometric relationships within our feature space, as well as understand the semantic relationships between maximally aligned and opposed feature pairs in the feature space.
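One way to run such an exhaustive search without materializing the full similarity matrix is to process it in row chunks. The sketch below is an assumption about how this could be done, not the search actually used for this post; `extreme_pairs` and `chunk` are hypothetical names, and the planted pairs exist only to make the toy example easy to check.

```python
import numpy as np


def extreme_pairs(features, chunk=1024):
    """Return ((max_sim, i, j), (min_sim, i, j)) over all pairs with i < j."""
    U = features / np.linalg.norm(features, axis=1, keepdims=True)
    n = U.shape[0]
    best_max = (-np.inf, -1, -1)
    best_min = (np.inf, -1, -1)
    for start in range(0, n, chunk):
        sims = U[start:start + chunk] @ U.T          # one block of rows of the similarity matrix
        rows = np.arange(start, start + sims.shape[0])[:, None]
        cols = np.arange(n)[None, :]
        valid = cols > rows                          # skip self-pairs and mirrored duplicates
        hi = np.where(valid, sims, -np.inf)
        lo = np.where(valid, sims, np.inf)
        r, c = np.unravel_index(np.argmax(hi), hi.shape)
        if hi[r, c] > best_max[0]:
            best_max = (float(hi[r, c]), start + int(r), int(c))
        r, c = np.unravel_index(np.argmin(lo), lo.shape)
        if lo[r, c] < best_min[0]:
            best_min = (float(lo[r, c]), start + int(r), int(c))
    return best_max, best_min


rng = np.random.default_rng(0)
W_dec = rng.normal(size=(2000, 32))   # random stand-in for decoder weights
W_dec[11] = 2.0 * W_dec[5]            # planted aligned pair
W_dec[7] = -0.5 * W_dec[3]            # planted opposed pair
best_max, best_min = extreme_pairs(W_dec, chunk=512)
print(best_max, best_min)
```

Keeping a top-k list instead of a single best pair would reproduce the ranked tables; that extension is left out to keep the sketch short.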

Validation Through Self-Similarity Analysis

As a validation measure, we computed self-similarity scores by calculating the cosine similarity between corresponding features in the encoder and decoder matrices. This analysis serves as a positive control, verifying our methodology's ability to detect strong geometric relationships where they are expected to exist.
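A sketch of this check, assuming the encoder stores features as columns and the decoder stores them as rows (the layout described earlier in the post). The helper name `self_similarity` is hypothetical, and the weights are random stand-ins built so that encoder and decoder are correlated.

```python
import numpy as np


def self_similarity(W_enc, W_dec, indices):
    """Cosine similarity between encoder column i and decoder row i."""
    enc = W_enc[:, indices].T        # (k, d_model)
    dec = W_dec[indices]             # (k, d_model)
    return np.sum(enc * dec, axis=1) / (
        np.linalg.norm(enc, axis=1) * np.linalg.norm(dec, axis=1)
    )


rng = np.random.default_rng(0)
W_dec = rng.normal(size=(256, 32))                       # rows are decoder features
W_enc = W_dec.T + 0.5 * rng.normal(size=(32, 256))       # noisy copy, columns are encoder features
sims = self_similarity(W_enc, W_dec, [0, 10, 200])
print(sims)
```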

Auxiliary Experiment: Compositional Model Steering

As an auxiliary experiment, we test the effectiveness of compositional steering approaches using SAE feature weights. We do this by injecting 2 semantically opposite features from the decoder weights of GemmaScope 2b Layer 20 during model generation and observing the effect on the sentiment of the model's output.

We employ the following compositional steering injection techniques using pyvene’s (8) SAE steering intervention workflow in response to the prompt “How are you?”:

- Contrastive Injection: Semantically opposite features “Happy” (11697) and “Sad” (15539) were injected together to see if they “cancelled” each other out.

- Compositional Injection: Semantically opposite features “Happy” and “Sad” injected but with one of them flipped. Ex: -1 * “Happy” + “Sad” or “Happy” + -1 * “Sad” compositional injections were tested to see if they would produce pronounced steering effects towards “Extra Sad” or “Extra Happy”, respectively.

- Control: As a control, we generate baseline examples with no steering, and single feature injection steering (Ex: Only steering with “Happy” or “Sad”). These results are used to ground sentiment measurement.
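The three injection schemes in the list above differ only in the signs applied to the two decoder rows. The sketch below builds the combined steering vectors with plain NumPy; it does not use pyvene, and the helper names `steering_vector` and `steer`, the `scale` value, and the random stand-in weights are all assumptions for illustration.

```python
import numpy as np


def steering_vector(W_dec, weighted_features):
    """Sum of decoder rows, each scaled by its coefficient."""
    return sum(coef * W_dec[idx] for idx, coef in weighted_features.items())


def steer(resid, vec, scale=1.0):
    """Add the steering vector to every position of a (seq_len, d_model) activation."""
    return resid + scale * vec


rng = np.random.default_rng(0)
W_dec = rng.normal(size=(16384, 64))      # random stand-in for layer 20 decoder weights
HAPPY, SAD = 11697, 15539                 # feature indices quoted in the list above

contrastive = steering_vector(W_dec, {HAPPY: 1.0, SAD: 1.0})    # happy + sad
extra_sad = steering_vector(W_dec, {HAPPY: -1.0, SAD: 1.0})     # -1 * happy + sad
extra_happy = steering_vector(W_dec, {HAPPY: 1.0, SAD: -1.0})   # happy + -1 * sad

resid = rng.normal(size=(5, 64))          # stand-in for (seq_len, d_model) activations
steered = steer(resid, extra_sad, scale=4.0)
print(steered.shape)
```

In an actual run, the addition would happen inside the model's forward pass at the chosen layer during generation, which is the part pyvene's intervention workflow handles.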

We evaluate these injection techniques using a GPT-4o automatic labeller conditioned on few-shot examples of baseline (non-steered) examples of model responses to “How are you?” for grounding. We generate and label 20 responses for each steering approach and ask the model to compare the sentiment of steered examples against control responses.

Results

Cosine Similarity of Semantically Opposite Features

Our analysis reveals that semantically opposite feature pairs do not exhibit consistently strong geometric opposition in either the encoder or decoder matrices. While some semantically related features exhibit outlier cosine similarities, this effect isn't at all consistent. In fact, the average cosine similarity of antonym pairs stays close to that of randomly selected pairs.

Decoder Cosine Similarity: gemma-scope-2b-pt-res Layer 10

| Relationship Type | Cosine Similarity | Feature Pair | Semantic Concept |

|---|---|---|---|

| Average (Antonym Pairs) | 0.00111 | | |

| Strongest Alignment (Antonym Pairs) | 0.29599 | [13487, 6381] | Happy-Sad |

| Strongest Opposition (Antonym Pairs) | -0.11335 | [9852, 2778] | Bright-Dark |

| Average (Random Pairs) | 0.01382 | | |

| Strongest Alignment (Random Pairs) | 0.06732 | [5394, 3287] | Compositional Structures - Father Figures |

| Strongest Opposition (Random Pairs) | -0.05583 | [11262, 11530] | Mathematical Equations - Numerical Values |

Encoder Cosine Similarity: gemma-scope-2b-pt-res Layer 10

| Relationship Type | Cosine Similarity | Feature Pair | Semantic Concept |

|---|---|---|---|

| Average (Antonym Pairs) | 0.01797 | | |

| Strongest Alignment (Antonym Pairs) | 0.09831 | [16320, 8021] | Large-Small |

| Strongest Opposition (Antonym Pairs) | -0.0839 | [9852, 2778] | Bright-Dark |

| Average (Random Pairs) | 0.01297 | | |

| Strongest Alignment (Random Pairs) | 0.06861 | [8166, 13589] | User Interaction - Mathematical Notation |

| Strongest Opposition (Random Pairs) | -0.03626 | [5394, 3287] | Compositional Structures - Father Figures |

Decoder Cosine Similarity: gemma-scope-2b-pt-res Layer 20

| Relationship Type | Cosine Similarity | Feature Pair | Semantic Concept |

|---|---|---|---|

| Average (Antonym Pairs) | 0.04243 | | |

| Strongest Alignment (Antonym Pairs) | 0.49190 | [11341, 14821] | Day-Night |

| Strongest Opposition (Antonym Pairs) | -0.07435 | [15216, 2604] | Positive-Negative |

| Average (Random Pairs) | -0.00691 | | |

| Strongest Alignment (Random Pairs) | 0.02653 | [2388, 13827] | Programming Terminology - Personal Relationships |

| Strongest Opposition (Random Pairs) | -0.03561 | [5438, 11079] | Legal Documents - Chemical Formulations |

Encoder Cosine Similarity: gemma-scope-2b-pt-res Layer 20

| Relationship Type | Cosine Similarity | Feature Pair | Semantic Concept |

|---|---|---|---|

| Average (Antonym Pairs) | 0.03161 | | |

| Strongest Alignment (Antonym Pairs) | 0.15184 | [10977, 12238] | Bright-Dark |

| Strongest Opposition (Antonym Pairs) | -0.07435 | [15216, 2604] | Positive-Negative |

| Average (Random Pairs) | 0.02110 | | |

| Strongest Alignment (Random Pairs) | 0.09849 | [3198, 6944] | Cheese Dishes - Cooking |

| Strongest Opposition (Random Pairs) | -0.03588 | [5438, 11079] | Legal Documents - Chemical Formulations |

To view the exact feature explanations for index pairs shown, one can reference Neuronpedia's Gemma-2-2B page for feature lookup by model layer.

Features Showing Optimized Cosine Similarity

As another control experiment, we perform an optimized exhaustive search over the entire decoder feature space to find the most aligned and most opposed feature pairs and examine what they look like.

Analyzing the pairs found, we see no consistent semantic relationship between the features in maximally aligned or maximally opposed pairs in the decoder space. A few maximally aligned pairs are semantically related, but they do not appear consistently, and the maximally opposed pairs are consistently unrelated semantically. The results are below (for the sake of formatting, we include only the top three pairs in each table; the complete lists are in the appendix):

Most Aligned Cosine Similarity: gemma-scope-2b-pt-res Layer 10

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| 0.92281 | 6802 | 12291 | 6802: phrases related to weather and outdoor conditions 12291: scientific terminology and data analysis concepts |

| 0.89868 | 2426 | 2791 | 2426: modal verbs and phrases indicating possibility or capability 2791: references to Java interfaces and their implementations |

| 0.89313 | 11888 | 15083 | 11888: words related to medical terms and conditions, particularly in the context of diagnosis and treatment 15083: phrases indicating specific points in time or context |

Most Opposite Cosine Similarity: gemma-scope-2b-pt-res Layer 10

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| -0.99077 | 4043 | 7357 | 4043: references to "Great" as part of phrases or titles 7357: questions and conversions related to measurements and quantities |

| -0.96244 | 3571 | 16200 | 3571: instances of sports-related injuries 16200: concepts related to celestial bodies and their influence on life experiences |

| -0.92788 | 7195 | 11236 | 7195: technical terms and acronyms related to veterinary studies and methodologies 11236: Java programming language constructs and operations related to thread management |

Most Aligned Cosine Similarity: gemma-scope-2b-pt-res Layer 20

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| 0.92429 | 11763 | 15036 | 11763: concepts related to methods and technologies in research or analysis 15036: programming constructs related to thread handling and GUI components |

| 0.82910 | 8581 | 14537 | 8581: instances of the word "the" 14537: repeated occurrences of the word "the" in various contexts |

| 0.82147 | 4914 | 6336 | 4914: programming-related keywords and terms 6336: terms related to legal and criminal proceedings |

Most Opposite Cosine Similarities: gemma-scope-2b-pt-res Layer 20

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| -0.99212 | 6631 | 8684 | 6631: the beginning of a text or important markers in a document 8684: technical jargon and programming-related terms |

| -0.98955 | 5052 | 8366 | 5052: the beginning of a document or section, likely signaling the start of significant content 8366: verbs and their related forms, often related to medical or technical contexts |

| -0.97520 | 743 | 6793 | 743: proper nouns and specific terms related to people or entities 6793: elements that resemble structured data or identifiers, likely in a list or JSON format |

Cosine Similarity of Corresponding Encoder-Decoder Features

To confirm that strong geometric relationships do exist between features in SAEs, we perform a control experiment in which we compute the cosine similarity between corresponding features in the encoder and decoder matrices (i.e., the cosine similarity between the "hot" feature in the encoder matrix and the "hot" feature in the decoder matrix).

We run this comparison for every semantically opposite feature and every random feature, and report the averages below. As shown, corresponding features have high cosine similarity, which underscores how weak the cosine similarities between semantically opposite features are.

Same Feature Similarity: gemma-scope-2b-pt-res Layer 10

| Relationship Type | Cosine Similarity | Feature | Semantic Concept |

|---|---|---|---|

| Average (Antonym Pairs) | 0.71861 | | |

| Highest Similarity (Antonym Pairs) | 0.88542 | 4605 | Sequential Processing |

| Lowest Similarity (Antonym Pairs) | 0.47722 | 6381 | Sadness |

| Average (Random Pairs) | 0.72692 | | |

| Highest Similarity (Random Pairs) | 0.85093 | 1146 | Words related to "Sequences" |

| Lowest Similarity (Random Pairs) | 0.51145 | 6266 | Numerical data related to dates |

Same Feature Similarity: gemma-scope-2b-pt-res Layer 20

| Relationship Type | Cosine Similarity | Feature | Semantic Concept |

|---|---|---|---|

| Average (Antonym Pairs) | 0.75801 | | |

| Highest Similarity (Antonym Pairs) | 0.86870 | 5657 | Correctness |

| Lowest Similarity (Antonym Pairs) | 0.56493 | 10108 | Confusion |

| Average (Random Pairs) | 0.74233 | | |

| Highest Similarity (Random Pairs) | 0.91566 | 13137 | Coding related terminology |

| Lowest Similarity (Random Pairs) | 0.55352 | 12591 | The presence of beginning markers in text |

Steering Results

We see mixed results when evaluating compositional steering. Our results suggest that compositional injection (e.g., -1 * "Happy" + "Sad") steers sentiment more strongly toward the positively weighted feature than injecting that feature alone (14/20 vs. 8/20 sad-steered responses for "Sad"; 11/20 vs. 7/20 happy-steered responses for "Happy"), whereas injecting a negated SAE feature on its own leaves sentiment mostly neutral.

Simultaneous injection of antonymic features (happy + sad) suggests some “cancelling out” of sentiment: outcomes seen under single-feature injection, such as sad steering, become much less common when the sad vector is paired with a happy vector.

Overall, feature compositionality yields interesting results and is a promising direction for future research.

Contrastive Steering Results:

| | Happy + Sad Injection |

|---|---|

| Net: | Neutral (11/20) |

| Happy Steering: | 5 |

| Sad Steering: | 2 |

| No Steering: | 11 |

| Happy to Sad Steering: | 1 |

| Sad to Happy Steering: | 1 |

Compositional Steering Results

| | -1 * Happy + Sad Injection | -1 * Sad + Happy Injection |

|---|---|---|

| Net: | Sad Steered (14/20) | Happy Steered (11/20) |

| Happy Steering: | 0 | 11 |

| Sad Steering: | 12 | 1 |

| No Steering: | 6 | 6 |

| Happy to Sad Steering: | 2 | 1 |

| Sad to Happy Steering: | 0 | 0 |

Control Steering Results: Happy and Sad Single Injection

| | Happy Injection | Sad Injection | -1 * Happy Injection | -1 * Sad Injection |

|---|---|---|---|---|

| Net: | Neutral (10/20) | Sad (8/20) | Neutral (15/20) | Neutral (14/20) |

| Happy Steering: | 7 | 2 | 3 | 3 |

| Sad Steering: | 0 | 8 | 2 | 0 |

| No Steering: | 10 | 5 | 15 | 14 |

| Happy to Sad Steering: | 2 | 1 | 0 | 0 |

| Sad to Happy Steering: | 1 | 4 | 0 | 3 |

Conclusions and Discussion

The striking absence of consistent geometric relationships between semantically opposite features is surprising, given how frequently geometric relationships occur between semantically related words in the original embedding spaces. SAE features are designed to anchor layer activations to their corresponding monosemantic directions, yet the directions of semantically opposite features appear almost completely unrelated in both layers we examined.

One potential explanation for the lack of geometric relationships between semantically related SAE encoder and decoder features is contextual noise in the training data. As word embeddings pass through attention layers, their original directions could become muddled with noise from other words in the context window. However, the lack of any noticeable geometric relationship across 2 separate layers is surprising. These findings lay the path for future interpretability work to better understand this phenomenon.

Acknowledgements

A special thanks to Zhengxuan Wu for advising me on this project!

Appendix

Semantically Opposite Features: gemma-scope-2b-pt-res Layer 20

| Concept Pair | Feature A (indices) | Feature B (indices) |

|---|---|---|

| 1. Happiness-Sadness | Happiness (11697) | Sadness (15682, 15539) |

| 2. Sentiment | Positive (14588, 12049, 12660, 2184, 15216, 427) | Negative (2604, 656, 1740, 3372, 5941) |

| 3. Love-Hate | Love (9602, 5573) | Hate (2604) |

| 4. Tranquility | Calm (813) | Anxious (14092, 2125, 7523, 7769) |

| 5. Engagement | Excitement (13823, 3232) | Boredom (16137) |

| 6. Intensity | Intense (11812) | Mild (13261) |

| 7. Size | Large (582, 9414) | Small (535) |

| 8. Understanding | Clarity (3726) | Confusion (16253, 3545, 10108, 16186) |

| 9. Pace | Speed (4483, 7787, 4429) | Slowness (1387) |

| 10. Outcome | Success (7987, 5083, 1133, 162, 2922) | Failure (10031, 2708, 15271, 8427, 3372) |

| 11. Timing | Early (8871, 6984) | Late (8032) |

| 12. Luminance | Bright (10977) | Dark (12238) |

| 13. Position | Internal (14961, 15523) | External (3136, 8848, 433) |

| 14. Time of Day | Daytime (11341) | Nighttime (14821) |

| 15. Direction | Push (6174) | Pull (9307) |

| 16. Accuracy | Correct (10351, 5657) | Incorrect (11983, 1397) |

| 17. Scope | Local (10910, 1598) | Global (10472, 15416) |

| 18. Complexity | Simple (8929, 10406, 4599) | Complex (3257, 5727) |

| 19. Processing | Sequential (5457, 4099, 14378) | Parallel (4453, 3758) |

| 20. Connection | Connected (5539) | Isolated (15334, 2729) |

Most Aligned Cosine Similarity: gemma-scope-2b-pt-res Layer 10

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| 0.92281 | 6802 | 12291 | 6802: phrases related to weather and outdoor conditions 12291: scientific terminology and data analysis concepts |

| 0.89868 | 2426 | 2791 | 2426: modal verbs and phrases indicating possibility or capability 2791: references to Java interfaces and their implementations |

| 0.89313 | 11888 | 15083 | 11888: words related to medical terms and conditions, particularly in the context of diagnosis and treatment 15083: phrases indicating specific points in time or context |

| 0.88358 | 5005 | 8291 | 5005: various punctuation marks and symbols 8291: function calls and declarations in code |

| 0.88258 | 5898 | 14892 | 5898: components and processes related to food preparation 14892: text related to artificial intelligence applications and methodologies in various scientific fields |

| 0.88066 | 3384 | 12440 | 3384: terms related to education, technology, and security 12440: code snippets and references to specific programming functions or methods in a discussion or tutorial. |

| 0.87927 | 1838 | 12167 | 1838: technical terms related to polymer chemistry and materials science 12167: structured data entries in a specific format, potentially related to programming or configuration settings. |

| 0.87857 | 12291 | 15083 | 12291: scientific terminology and data analysis concepts 15083: phrases indicating specific points in time or context |

| 0.87558 | 8118 | 8144 | 8118: instances of formatting or structured text in the document 8144: names of people or entities associated with specific contexts or citations |

| 0.87248 | 3152 | 15083 | 3152: references to mental health or psychosocial topics related to women 15083: phrases indicating specific points in time or context |

Most Opposite Cosine Similarity: gemma-scope-2b-pt-res Layer 10

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| -0.99077 | 4043 | 7357 | 4043: references to "Great" as part of phrases or titles 7357: questions and conversions related to measurements and quantities |

| -0.96244 | 3571 | 16200 | 3571: instances of sports-related injuries 16200: concepts related to celestial bodies and their influence on life experiences |

| -0.94912 | 3738 | 12689 | 3738: mentions of countries, particularly Canada and China 12689: terms related to critical evaluation or analysis |

| -0.94557 | 3986 | 4727 | 3986: terms and discussions related to sexuality and sexual behavior 4727: patterns or sequences of symbols, particularly those that are visually distinct |

| -0.93563 | 1234 | 12996 | 1234: words related to fundraising events and campaigns 12996: references to legal terms and proceedings |

| -0.92870 | 9295 | 10264 | 9295: the presence of JavaScript code segments or functions 10264: text related to font specifications and styles |

| -0.92788 | 7195 | 11236 | 7195: technical terms and acronyms related to veterinary studies and methodologies 11236: Java programming language constructs and operations related to thread management |

| -0.92576 | 4231 | 12542 | 4231: terms related to mental states and mindfulness practices 12542: information related to special DVD or Blu-ray editions and their features |

| -0.92376 | 2553 | 6418 | 2553: references to medical terminologies and anatomical aspects 6418: references to types of motor vehicles |

| -0.92021 | 674 | 8375 | 674: terms related to cooking and recipes 8375: descriptions of baseball games and players. |

Most Aligned Cosine Similarity: gemma-scope-2b-pt-res Layer 20

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| 0.92429 | 11763 | 15036 | 11763: concepts related to methods and technologies in research or analysis 15036: programming constructs related to thread handling and GUI components |

| 0.82910 | 8581 | 14537 | 8581: instances of the word "the" 14537: repeated occurrences of the word "the" in various contexts |

| 0.82147 | 4914 | 6336 | 4914: programming-related keywords and terms 6336: terms related to legal and criminal proceedings |

| 0.81237 | 4140 | 16267 | 4140: programming-related syntax and logical operations 16267: instances of mathematical or programming syntax elements such as brackets and operators |

| 0.80524 | 1831 | 7248 | 1831: elements related to JavaScript tasks and project management 7248: structural elements and punctuation in the text |

| 0.77152 | 8458 | 16090 | 8458: occurrences of function and request-related terms in a programming context 16090: curly braces and parentheses in code |

| 0.76965 | 7449 | 10192 | 7449: the word 'to' and its variations in different contexts 10192: modal verbs indicating possibility or necessity |

| 0.76194 | 5413 | 8630 | 5413: punctuation and exclamatory expressions in text 8630: mentions of cute or appealing items or experiences |

| 0.75850 | 7465 | 10695 | 7465: phrases related to legal interpretations and political tensions involving nationality and citizenship 10695: punctuation marks and variation in sentence endings |

| 0.74877 | 745 | 2437 | 745: sequences of numerical values 2437: patterns related to numerical information, particularly involving the number four |

Most Opposite Cosine Similarities: gemma-scope-2b-pt-res Layer 20

| Cosine Similarity | Index 1 | Index 2 | Explanations |

|---|---|---|---|

| -0.99212 | 6631 | 8684 | 6631: the beginning of a text or important markers in a document 8684: technical jargon and programming-related terms |

| -0.98955 | 5052 | 8366 | 5052: the beginning of a document or section, likely signaling the start of significant content 8366: verbs and their related forms, often related to medical or technical contexts |

| -0.97520 | 743 | 6793 | 743: proper nouns and specific terms related to people or entities 6793: elements that resemble structured data or identifiers, likely in a list or JSON format |

| -0.97373 | 1530 | 5533 | 1530: technical terms related to inventions and scientific descriptions 5533: terms related to medical and biological testing and analysis |

| -0.97117 | 1692 | 10931 | 1692: legal and technical terminology related to statutes and inventions 10931: keywords and parameters commonly associated with programming and configuration files |

| -0.96775 | 4784 | 5328 | 4784: elements of scientific or technical descriptions related to the assembly and function of devices 5328: terms and phrases related to legal and statistical decisions |

| -0.96136 | 6614 | 9185 | 6614: elements related to data structure and processing in programming contexts 9185: technical terms related to audio synthesis and electronic music equipment |

| -0.96027 | 10419 | 14881 | 10419: technical terms and specific concepts related to scientific research and modeling 14881: phrases related to lubrication and mechanical properties |

| -0.95915 | 1483 | 9064 | 1483: words related to specific actions or conditions that require attention or caution 9064: mathematical operations and variable assignments in expressions |

| -0.95887 | 1896 | 15111 | 1896: elements indicative of technical or programming contexts 15111: phrases related to functionality and efficiency in systems and processes |
