Full Automation is Unlikely and Unnecessary for Explosive Growth

post by aog (Aidan O'Gara) · 2023-05-31T21:55:01.353Z · LW · GW · 3 comments

Summary

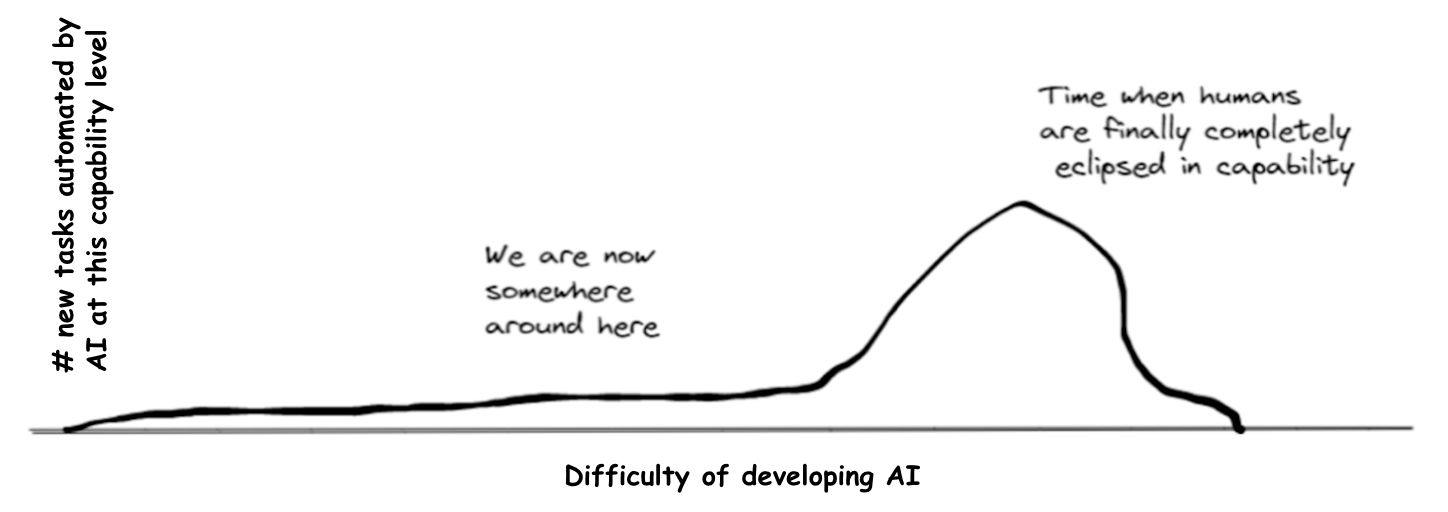

Forecasts of explosive growth from advanced AI often assume that AI will fully automate human labor. But there are a variety of compelling objections to this assumption. For example, regulation could prevent the automation of some tasks, or consumers could prefer human labor in others. Skeptics of explosive growth therefore argue that non-automated tasks will "bottleneck" economic growth. This objection is correct in one way: In models of economic growth similar to Davidson (2023), the task with the slowest productivity growth determines the long-run growth rate. But it misses another key result of these models: Even in the presence of non-automated bottleneck tasks, partial automation can cause a temporary period of explosive growth. I provide several modifications of the Davidson model and show that partial automation could cause double-digit growth that lasts for decades before being dragged back down by bottleneck tasks.

This is a writeup of a paper I wrote for an audience of academic economists. I won't summarize the full paper here, but will instead skip to the most relevant contributions for people who have already been thinking about explosive growth. Code here.

Barriers to Full Automation

Regulation. The law is a significant barrier to adoption of AI today. GPT-4 can pass the bar exam (Katz et al., 2023), but when the startup DoNotPay made plans to use the model to feed live instructions to a lawyer defending a case in court, the company received threats of criminal charges for unauthorized practice of law from multiple state bar associations (NPR, 2023). Some banks have barred employees from using ChatGPT at work, possibly because of strict regulations on discussing financial business outside of official channels (Levine, 2023). Some occupations enjoy strong support from the government, such as Iowa farmers or public school teachers, making automation an unlikely choice. Jobs created by the government, such as politicians and judges, might never be automated.

Preference for human labor. Consumers often indicate a preference for goods produced by humans rather than by robots (Granulo et al., 2020). Economist Daniel Björkegren has written about "nostalgic demand" for locally grown organic food. Art is often seen as less valuable when it is created by AI instead of humans (Roose, 2022). Athletes who use performance-enhancing drugs are often ridiculed by fans and banned from play, perhaps indicating a preference for human-level performance. Anthropologist David Graeber argues that some jobs exist to fulfill roles in social hierarchies (Graeber, 2018). Automatic doors are commonplace, but fancy buildings still hire doormen.

Capabilities vs. Deployment. One might object that these are barriers to AI deployment, but not barriers to the AI's underlying capacity to perform tasks. This is entirely correct. But economic growth is determined by deployment, not capabilities.

(Which matters for AI risk? That's a separate question, but I see two possible answers. Some consider a superintelligence in a box and conclude that it's game over. Others believe AIs will not overthrow humanity by playing 3D chess, but rather pose risks in proportion to the power they have in the real world.)

In any case, partial automation is already occurring, and will likely continue before any possibility of full automation. Given that previous literature has focused on full automation, it's worth considering whether partial automation will cause explosive growth.

Partial Automation in the Davidson model

Davidson (2023) is a model of an economy where AI incrementally automates tasks that were previously performed by humans. The fraction of tasks that can be performed by AI is determined by the effective FLOP budget of the largest AI training run to date. The model assumes that full automation is guaranteed once a large enough training run occurs.

To study the impact of partial automation in the Davidson model, I first put a ceiling on the fraction of tasks that can be automated by AI. All other dynamics proceed as in the original model, with larger training runs raising the fraction of automated tasks, until the fraction of tasks automated reaches the set ceiling. Then automation stops, forever.
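The ceiling modification can be sketched in a few lines. This is a minimal illustration, not the code used for the paper: the function name `capped_automation` and the toy uncapped path are assumptions made up for the example.

```python
def capped_automation(uncapped_fraction: float, ceiling: float) -> float:
    """Share of tasks automated once a hard ceiling is imposed.

    uncapped_fraction: share the original dynamics would automate (0 to 1).
    ceiling: maximum share of tasks that can ever be automated (0 to 1).
    Both names are hypothetical, chosen for this illustration only.
    """
    if not 0.0 <= ceiling <= 1.0:
        raise ValueError("ceiling must lie in [0, 1]")
    return min(max(uncapped_fraction, 0.0), ceiling)


# Hypothetical uncapped path: automation rises by 10 points per step.
uncapped_path = [step / 10 for step in range(11)]

# With an 80% ceiling, the path follows the original until it hits 0.8,
# then stays there for every later step.
capped_path = [capped_automation(f, ceiling=0.8) for f in uncapped_path]
print(capped_path)
```

Below the ceiling the two paths are identical, which is the sense in which all other dynamics proceed as in the original model.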

| Maximum % of Automatable Tasks | Peak Annual GWP Growth Rate | Years of Double-Digit GWP Growth |
|---|---|---|
| 100% (Baseline) | 85% | 2037 - Infinity |
| 99% | 81% | 2037 - 2100 |
| 95% | 71% | 2037 - 2084 |
| 90% | 60% | 2038 - 2072 |
| 80% | 44% | 2038 - 2052 |
| 60% | 23% | 2038 - 2048 |
| 40% | 12% | 2044 - 2049 |
| 20% | 6% | None |

Over the long run, this economy is constrained by bottleneck tasks. Eventually it will only grow as fast as its slowest growing task. But during the period of automation, the economy sees explosive growth. Double-digit growth can be sustained for years or decades. This period of rapid growth could bring with it heightened risks.
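A toy example can make the "temporary burst, then bottleneck" pattern concrete. The sketch below is not the Davidson model. It assumes a two-group CES economy in which a fixed share of tasks is automated and improves quickly, while the remaining tasks improve slowly, and the two groups are complements (`rho < 0`). The function names and all parameter values are hypothetical.

```python
import math


def ces_output(share_auto, x_auto, x_human, rho):
    """CES aggregate of automated and non-automated tasks.

    rho < 0 makes the two task groups complements, so the slower
    group eventually limits total output.
    """
    return (share_auto * x_auto**rho
            + (1 - share_auto) * x_human**rho) ** (1 / rho)


def growth_path(share_auto=0.8, g_auto=0.5, g_human=0.02,
                rho=-1.0, years=60):
    """Yearly log growth rates of output in the toy economy.

    Assumed (illustrative) inputs: automated tasks improve at g_auto
    per year, non-automated tasks at g_human per year.
    """
    rates = []
    prev = ces_output(share_auto, 1.0, 1.0, rho)
    for t in range(1, years + 1):
        cur = ces_output(share_auto,
                         math.exp(g_auto * t),
                         math.exp(g_human * t),
                         rho)
        rates.append(math.log(cur / prev))
        prev = cur
    return rates


rates = growth_path()
print(f"first-year growth: {rates[0]:.3f}, final-year growth: {rates[-1]:.3f}")
```

In this toy setup, growth starts far above the bottleneck rate because automated tasks carry most of the weight, then decays toward `g_human` as the non-automated tasks come to dominate the aggregate.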

Long Tail of Compute Requirements

What if some tasks are automated with relatively low levels of compute, but others require several more orders of magnitude of training compute before they're automated? This "long tail" of compute requirements could apply to domains like robotics, where strong scaling laws have yet to emerge.

The original Davidson model parameterizes the distribution of compute required for automation using two parameters: the effective FLOP required for 20% automation and the effective FLOP required for 100% automation. To model the long tail of compute requirements, I add two more parameters: the fraction of tasks in the long tail, and the FLOP required to traverse the tail.
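One plausible reading of this four-parameter distribution is a piecewise-linear curve in log-compute. The sketch below is an assumption about how such a curve could be built, not the paper's actual code. It takes 0% automation at a "today" level of compute and interpolates through three further anchor points. The function name, argument names, and example values are all hypothetical.

```python
import math


def automation_fraction(flop, flop_today, flop_20, flop_pre_tail,
                        tail_fraction, tail_ooms):
    """Share of tasks automatable at a given effective FLOP budget.

    Hypothetical anchors, interpolated linearly in log10(FLOP):
      flop_today              ->  0% of tasks
      flop_20                 -> 20% of tasks
      flop_pre_tail           -> (1 - tail_fraction) of tasks
      flop_pre_tail * 10**tail_ooms -> 100% of tasks
    """
    if not flop_today < flop_20 < flop_pre_tail:
        raise ValueError("FLOP anchors must be strictly increasing")
    if not 0.0 <= tail_fraction <= 0.8 or tail_ooms < 0:
        raise ValueError("need 0 <= tail_fraction <= 0.8 and tail_ooms >= 0")

    anchors = [
        (math.log10(flop_today), 0.0),
        (math.log10(flop_20), 0.2),
        (math.log10(flop_pre_tail), 1.0 - tail_fraction),
        (math.log10(flop_pre_tail) + tail_ooms, 1.0),
    ]
    x = math.log10(flop)
    if x <= anchors[0][0]:
        return 0.0
    if x >= anchors[-1][0]:
        return 1.0
    for (x0, y0), (x1, y1) in zip(anchors, anchors[1:]):
        if x1 > x0 and x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return 1.0


# Illustrative values only; none of these numbers come from the paper.
example = dict(flop_today=1e25, flop_20=1e30, flop_pre_tail=1e36,
               tail_fraction=0.3, tail_ooms=6)
for budget in (1e28, 1e33, 1e39, 1e42):
    print(f"{budget:.0e} FLOP -> {automation_fraction(budget, **example):.2f}")
```

With these example values, the last 30% of tasks are spread over six additional orders of magnitude, so automation keeps rising after the pre-tail point but much more slowly per order of magnitude.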

| Tail Fraction | Additional orders of magnitude of compute required for automation | Peak Growth | Years in Double Digits | Years from Double Digits until Full Automation |
|---|---|---|---|---|
| 0% | 0 (Baseline) | 109% | 2035 - Infinity | 5 years |
| 10% | 3 | 59% | 2037 - Infinity | 6 years |
| 30% | 3 | 56% | 2037 - Infinity | 8 years |
| 50% | 3 | 75% | 2038 - Infinity | 9 years |
| 10% | 6 | 59% | 2037 - Infinity | 9 years |
| 30% | 6 | 35% | 2037 - Infinity | 12 years |
| 50% | 6 | 45% | 2038 - Infinity | 16 years |
| 10% | 9 | 60% | 2037 - Infinity | 15 years |
| 30% | 9 | 35% | 2037 - Infinity | 24 years |
| 50% | 9 | 37% | 2038 - Infinity | 41 years |

Table 2: Growth impacts of AI automation with a long tail of automation difficulty.

Generally speaking, a long tail of difficult-to-automate tasks can increase the time to full automation by several years. But within the range of training requirements considered reasonable by Cotra (2020), full automation typically follows less than 10 years after the onset of double-digit growth. Importantly, the year of highest growth sometimes precedes full automation, particularly when the tail is difficult to traverse yet contains few tasks. In those scenarios, the time of greatest change occurs before full automation.

(Technical note: There's a choice that I didn't expect in the original model. It dictates the point at which we'll see 1% automation, rather than leaving it as a user-specified input to the model. And it assumes that point is several orders of magnitude above today's models. It certainly seems to me that today's AI is capable of performing more than 1% of economically relevant tasks. Perhaps it has not been deployed accordingly. In that case, we should apply a general deployment lag to the whole distribution, rather than only showing low automation today while predicting immediate deployment of AGI later. Regardless, I set the level of automation today at 0%, and then interpolated logarithmically between that point and the three other user-specified points in the distribution.)

Conclusion

Even if AI never performs every task in our economy, far less could cause unprecedented levels of growth. This should increase our credence in explosive growth by any given date if our previous models assumed that full automation would be required.

3 comments


comment by meijer1973 · 2023-06-02T12:04:55.271Z · LW(p) · GW(p)

Thanks for the post. I would like to add that I see a difference in automation speed between cognitive work and physical work. In physical work, productivity growth is rather constant. With cognitive work, there is a sudden jump from few use cases to many (like a sigmoid). Physical labour also has speed limits, and costs, generality and deployment differ as well.

It is very difficult to create a useful AI for legal or programming work. But once you are over the threshold (as we are now), there are a lot of use cases and productivity growth is very fast. Robotics in car manufacturing took a long time and continued steadily. A few years ago the first real applications of legal AI emerged, and now we have a computer that can pass the bar exam. This time frame is much shorter.

The other difference is speed. A robot building a car is limited in speed. Compare this to a legal AI summarizing legal texts (1000x+ increase in speed). AI doing cognitive work is crazy fast and has the potential to become increasingly faster with more and cheaper compute.

The cost is also different. The marginal cost of robots is higher than that of a legal AI. Robots will always be rather narrow and expensive (a Roomba is about as expensive as a laptop). Building one robo lawyer will be very expensive. But after that, copying it is very cheap (low marginal costs). Once you are over the threshold, the cost of deployment is very low.

The generality of AI knowledge workers is somewhat of a surprise. It was thought that specialized AIs would be better, cheaper etc. Maybe a legal AI could be a somewhat finetuned GPT-4. But this model would still be a decent programmer and accountant. A more general AI is much easier to deploy. And there might be unknown use cases for a lawyer, programmer, accountant we have not thought of yet.

Deployment speed is faster for cognitive work and this has implications for growth. When a GPT+1 is introduced, all models are easily replaced by the better and faster model. When you invent a better robot to manufacture cars, it will take decades before this is implemented in every factory. But changing the base model of your legal AI from GPT-4 to GPT-5 might be just a software update.

In summary there are differences for automating cognitive work with regard to:

- growth path (sigmoid instead of linear)

- speed of executing work

- cost (low marginal cost)

- generality (the robo lawyer, programmer, accountant)

- deployment speed (just a software update)

Are there more differences that affect speed? Am I being too bullish?

comment by Andndn Dheudnd (andndn-dheudnd) · 2023-06-01T07:56:08.136Z · LW(p) · GW(p)

My argument for this, as someone who works in the robotics automation field, is that we are striving to automate all of the most dangerous and labour-intensive work that humans currently do (such as construction, which 3D printing is currently seeking to automate), as well as jobs that are inherently just "filling gaps" for the purposes of allowing a person a wage under the capitalist paradigm. Even jobs you believe people might want are likely jobs people only do in the absence of something more meaningful. It has always been my belief that STEM careers should always be human-driven, as they represent the areas where the most innovation and imagination are derived, plus the arts/culture naturally alongside.

Replies from: meijer1973

↑ comment by meijer1973 · 2023-06-02T12:15:18.800Z · LW(p) · GW(p)

I like your motivation, robotics can bring a lot of good. It is good to work on automating the boring and dangerous work.

I see this as a broken promise. For a long time this was the message (we will automate the boring and dangerous). But now we automate valuable jobs like STEM, journalism, art etc. These are the jobs that give meaning to life, and they provide positive externalities. I like to talk to different people, so I meet the critical journalist, the creative artist, the passionate teacher etc.

E.g. we need a fraction of the people to be journalists so the population as a whole can boost its critical thinking. Same for STEM, art etc. (These people have positive externalities). Humanity is a superintelligence, but it needs a variety in the parts that create the whole.