Transcript: "Choice Machines, Causality, and Cooperation"

post by Randaly · 2012-08-07T22:15:46.402Z · LW · GW · Legacy · 4 comments

Gary Drescher's presentation at the 2009 Singularity Summit, "Choice Machines, Causality, and Cooperation," is online at Vimeo. Drescher is the author of Good and Real, which has been recommended many times on LW. I've transcribed his talk below.

My talk this afternoon is about choice machines: machines such as ourselves that make choices in some reasonable sense of the word. The very notion of mechanical choice strikes many people as a contradiction in terms, and exploring that contradiction and its resolution is central to this talk. As a point of departure, I'll argue that even in a deterministic universe, there's room for choices to occur: we don't need to invoke some sort of free will that makes an exception to the determinism, nor do we even need randomness, although a little randomness doesn't hurt. I'm going to argue that regardless of whether our universe is fully deterministic, it's at least deterministic enough that the compatibility of choice and full determinism has some important ramifications that do apply to our universe. I'll argue that if we carry the compatibility of choice and determinism to its logical conclusions, we obtain some progressively weird corollaries: namely, that it sometimes makes sense to act for the sake of things that our actions cannot change and cannot cause, and that that might even suggest a way to derive an essentially ethical prescription: an explanation for why we sometimes help others even if doing so causes net harm to our own interests.

[1:15]

An important caveat in all this, just to manage expectations a bit, is that the arguments I'll be presenting will be merely intuitive- or counter-intuitive, as the case may be- and not grounded in a precise and formal theory. Instead, I'm going to run some intuition pumps, as Daniel Dennett calls them, to try to persuade you what answers a successful theory would plausibly provide in a few key test cases.

[1:40]

Perhaps the clearest way to illustrate the compatibility of choice and determinism is to construct or at least imagine a virtual world, which superficially resembles our own environment and which embodies intelligent or somewhat intelligent agents. As a computer program, this virtual world is quintessentially determinist: the program specifies the virtual world's initial conditions, and specifies how to calculate everything that happens next. So given the program itself, there are no degrees of freedom about what will happen in the virtual world. Things do change in the world from moment to moment, of course, but no event ever changes from what was determined at the outset. In effect, all events just sit, statically, in spacetime. Still, it makes sense for agents in the world to contemplate what would be the case were they to take some action or another, and it makes sense for them to select an action accordingly.

[2:35]

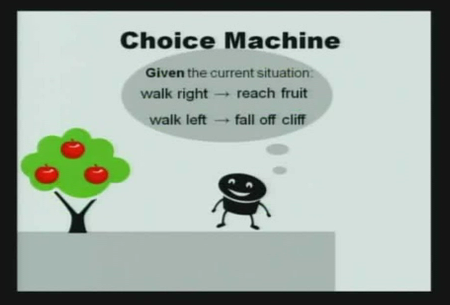

For instance, an agent in the illustrated situation here might reason that, were it to move to its right, which is our left, then the agent would obtain some tasty fruit. But, instead, if it moves to its left, it falls off a cliff. Accordingly, if its preference scheme assigns positive utility to the fruit, and negative utility to falling off the cliff, that means the agent moves to its right and not to its left. And that process, I would submit, is what we more or less do ourselves when we engage in what we think of as making choices for the sake of our goals.

[3:08]

The process, the computational process of selecting an action according to the desirability of what would be the case were the action taken, turns out to be what our choice process consists of. So, from this perspective, choice is a particular kind of computation. The objection that choice isn't really occurring because the outcome was already determined is just as much a non-sequitur as suggesting that any other computation, for example, adding up a list of numbers, isn't really occurring just because the outcome was predetermined.
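As an illustrative aside (not part of the talk), the choice process described here can be sketched as a small computation. The action names, outcome names, and utility values below are hypothetical stand-ins for the pictured fruit-and-cliff scene, chosen only for illustration.

```python
# Hypothetical toy model of the illustrated scene. The names and the
# utility values are illustrative assumptions, not taken from the talk.

# What would be the case if the agent took each action.
OUTCOME_IF = {
    "move_right": "gets_fruit",
    "move_left": "falls_off_cliff",
}

# The agent's preference scheme: a utility for each outcome.
UTILITY = {
    "gets_fruit": 1.0,
    "falls_off_cliff": -100.0,
}


def choose(actions):
    """Select the action whose would-be outcome has the highest utility."""
    return max(actions, key=lambda action: UTILITY[OUTCOME_IF[action]])


chosen = choose(["move_left", "move_right"])
print(chosen)  # move_right
```

The function is fully deterministic, yet it still evaluates the move it ends up rejecting, and that evaluation is what settles the result.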

[3:41]

So, the choice process takes place, and we consider that the agent has a choice about the action that the choice selects and has a choice about the associated outcomes, meaning that those outcomes occur as a consequence of the choice process. So, clearly an agent that executes a choice process and that correctly anticipates what would be the case if various contemplated actions were taken will better achieve its goals than one that, say, just acts at random or one that takes a fatalist stance, that there's no point in doing anything in particular since nothing can change from what it's already determined to be. So, if we were designing intelligent agents and wanted them to achieve their goals, we would design them to engage in a choice process. Or, if the virtual world were immense enough to support natural selection and the evolution of sufficiently intelligent creatures, then those evolved creatures could be expected to execute a choice process because of the benefits conferred.

[4:38]

So the inalterability of everything that will ever happen does not imply the futility of acting for the sake of what is desired. The key to the choice relation is the “would be-if” relation, also known as the subjunctive or counterfactual relation. Counterfactual because it entertains a hypothetical antecedent about taking a certain action, that is possibly contrary to fact- as in the case of moving to the agent's left in this example. Even though the moving left action does not in fact occur, the agent does usefully reason about what would be the case if that action were taken, and indeed it's that very reasoning that ensures that the action does not in fact occur.

[5:21]

There are various technical proposals for how to formally specify a “would be-if” relation- David Lewis has a classic formulation, Judea Pearl has a more recent one- but they're not necessarily the appropriate version of “would be-if” to use for purposes of making choices, for purposes of selecting an action based on the desirability of what would then be the case. And, although I won't be presenting a formal theory, the essence of this talk is to investigate some properties of “would be-if,” the counterfactual relation that's appropriate to use for making choices.

[5:57]

In particular, I want to address next the possibility that, in a sufficiently deterministic universe, you have a choice about some things that your action cannot cause. Here's an example: assume or imagine that the universe is deterministic, with only one possible history following from any given state of the universe at a given moment. And let me define a predicate P that gets applied to the total state of the universe at some moment. The predicate P is defined to be true of a universe state just in case the laws of physics applied to that total state specify that a billion years after that state, my right hand is raised. Otherwise, the predicate P is false of that state.

[6:44]

Now, suppose I decide, just on a whim, that I would like that state of the universe a billion years ago to have been such that the predicate P was true of that past state. I need only raise my right hand now, and, lo and behold, it was so. If, instead, I want the predicate to have been false, then I lower my hand and the predicate was false. Of course, I haven't changed what the past state of the universe is or was; the past is what it is, and can never be changed. There is merely a particular abstract relation, a “would be-if” relation, between my action and the particular past state that is the subject of my whimsical goal. I cannot reasonably take the action and not expect that the past state will be in correspondence.
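As an illustrative aside (not part of the talk), the predicate P can be mimicked in a toy deterministic universe. Everything below is an assumption made for the sketch: the state is a single integer, the "laws of physics" are an arbitrary update rule, `HORIZON` stands in for the billion years, and the hand counts as raised when the state is even.

```python
# Toy deterministic universe. The update rule, HORIZON, and the "hand is
# raised when the state is even" convention are all illustrative assumptions.

HORIZON = 1_000  # stand-in for "a billion years" of time steps


def step(state):
    """Deterministic law: the next state is a pure function of the current one."""
    return (5 * state + 3) % 1024


def evolve(state, steps):
    """Apply the law repeatedly; each state has exactly one possible future."""
    for _ in range(steps):
        state = step(state)
    return state


def hand_raised(state):
    """Whether the hand is raised in a given state of this toy universe."""
    return state % 2 == 0


def predicate_p(past_state):
    """True iff the laws, applied to past_state, yield a raised hand HORIZON steps later."""
    return hand_raised(evolve(past_state, HORIZON))


# In every possible history, P held of the past state exactly when the
# hand is raised in the present state.
for past_state in range(8):
    present_state = evolve(past_state, HORIZON)
    print(past_state, predicate_p(past_state), hand_raised(present_state))
```

The correspondence holds by construction. It shows the "would be-if" relation between the present action and the past predicate without any backward causation in `step`.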

[7:39]

So, I can't change the past, nor does my action have any causal influence over the past- at least, not in the way we normally and usefully conceive of causality, where causes are temporally prior to effects, and where we can think of causal relations as essentially specifying how the universe computes its subsequent states from its previous states. Nonetheless, I have exactly as much choice about the past value of the predicate I have defined, despite its inalterability, as I have about whether to raise my hand now, despite the inalterability of that too, in a deterministic universe. And if I were to believe otherwise, and were to refrain from raising my hand merely because I can't change the past even though I do have a whimsical preference about the past value of the specified predicate, then, as always with fatalist resignation, I'd be needlessly forfeiting an opportunity to have my goals fulfilled.

[8:41]

If we accept the conclusion that we sometimes have a choice about what we cannot change or even cause, or at least tentatively accept it in order to explore its ramifications, then we can go on now to examine a well-known science fiction scenario called Newcomb's Problem. In Newcomb's Problem, a mischievous benefactor presents you with two boxes: there is a small, transparent box, containing a thousand dollars, which you can see; and there is a larger, opaque box, which you are truthfully told contains either a million dollars or nothing at all. You can't see which; the box is opaque, and you are not allowed to examine it. But you are truthfully assured that the box has been sealed, and that its contents will not change from whatever they already are.

[9:27]

You are now offered a very odd choice: you can take either the opaque box alone, or take both boxes, and you get to keep the contents of whatever you take. That sure sounds like a no-brainer: if we assume that maximizing your expected payoff in this particular encounter is the sole relevant goal, then regardless of what's in the opaque box, there's no benefit to forgoing the additional thousand dollars.

[9:51]

But, before you choose, you are told how the benefactor decided how much money to put in the opaque box- and that brings us to the science fiction part of the scenario. What the benefactor did was take a very detailed local snapshot of the state of the universe a few minutes ago, and then run a faster-than-real-time simulation to predict with high accuracy whether you would take both boxes, or just the opaque box. A million dollars was put in the opaque box if and only if you were predicted to take only the opaque box.

[10:22]

Admittedly the super-predictability here is a bit physically implausible, and goes beyond a mere stipulation of determinism. Still, at least it's not logically impossible- provided that the simulator can avoid having to simulate itself, and thus avoid a potential infinite regress. (The opaque box's opacity is important in that regard: it serves to insulate you from being effectively informed of the outcome of the simulation itself, so the simulation doesn't have to predict its own outcome in order to predict what you are going to do.) So, let's indulge the super-predictability assumption, and see what comes from it. Eventually, I'm going to argue that the real world is at least deterministic enough and predictable enough that some of the science-fiction conclusions do carry over to reality.

[11:12]

So, you now face the following choice: if you take the opaque box alone, then you can expect with high reliability that the simulation predicted you would do so, and so you expect to find a million dollars in the opaque box. If, on the other hand, you take both boxes, then you should expect the simulation to have predicted that, and you expect to find nothing in the opaque box. If and only if you expect to take the opaque box alone, you expect to walk away with a million dollars. Of course, your choice does not cause the opaque box's content to be one way or the other; according to the stipulated rules, the box content already is what it is, and will not change from that regardless of what choice you make.

[11:49]

But we can apply the lesson from the handraising example- the lesson that you sometimes have a choice about things your action does not change or cause- because you can reason about what would be the case if, perhaps contrary to fact, you were to take a particular hypothetical action. And, in fact, we can regard Newcomb's Problem as essentially harnessing the same past predicate consequence as in the handraising example- namely, if and only if you take just the opaque box, then the past state of the universe, at the time the predictor took the detailed snapshot, was such that that state leads, by physical laws, to your taking just the opaque box. And, if and only if the past state was thus, the predictor would predict you taking the opaque box alone, and so a million dollars would be in the opaque box, making that the more lucrative choice. And it's certainly the case that people who would make the opaque box choice have a much higher expected gain from such encounters than those who take both boxes.
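As an illustrative aside (not part of the talk), the expected-gain comparison can be worked through numerically. The talk says only that the prediction has "high accuracy"; the `accuracy` parameter below, and the assumption that it is the same whichever choice you make, are simplifications introduced for this sketch.

```python
# Expected winnings in Newcomb's Problem under an assumed predictor accuracy.
# The accuracy parameter is a modelling assumption, not a figure from the talk.

SMALL_BOX = 1_000      # visible contents of the transparent box
BIG_BOX = 1_000_000    # placed in the opaque box iff one-boxing was predicted


def expected_payoff(one_box, accuracy):
    """Expected winnings when the prediction matches the choice with probability `accuracy`."""
    if one_box:
        # The opaque box is full exactly when the prediction was correct.
        return accuracy * BIG_BOX
    # A two-boxer finds the opaque box full only when the prediction was wrong.
    return SMALL_BOX + (1 - accuracy) * BIG_BOX


def break_even_accuracy():
    """Accuracy at which both choices have the same expected payoff."""
    return (BIG_BOX + SMALL_BOX) / (2 * BIG_BOX)


for accuracy in (0.5, 0.9, 0.99):
    print(accuracy, expected_payoff(True, accuracy), expected_payoff(False, accuracy))
print(break_even_accuracy())
```

Under these assumptions, taking the opaque box alone has the higher expectation once the predictor is only slightly better than chance, so the conclusion does not depend on perfect prediction.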

[12:47]

Still, it's possible to maintain, as many people do, that taking both boxes is the rational choice, and that the situation is essentially rigged to punish you for your predicted rationality- much as if a written exam were perversely graded to give points only for wrong answers. From that perspective, taking both boxes is the rational choice, even if you are then left to lament your unfortunate rationality. But that perspective is, at the very least, highly suspect in a situation where, unlike the hapless exam-taker, you are informed of the rigging and can take it into account when choosing your action, as you can in Newcomb's Problem.

[13:31]

And, by the way, it's possible to consider an even stranger variant of Newcomb's Problem, in which both boxes are transparent. In this version, the predictor runs a simulation that tentatively presumes that you'll see a million dollars in the larger box. You'll be presented with a million dollars in the box for real if and only if the simulation shows that you would then take the million dollar box alone. If, instead, the simulation predicts that you would take both boxes if you see a million dollars in the larger box, then the larger box is left empty when presented for real.

[14:12]

So, let's suppose you're confronted with this scenario, and you do see a million dollars in the box when it's presented for real. Even though the million dollars is already there, and you see it, and it can't change, nonetheless I claim that you should still take the million dollar box alone. Because, if you were to take both boxes instead, contrary to what in fact must be the case in order for you to be in this situation in the first place, then, also contrary to what is in fact the case, the box would not contain a million dollars- even though in fact it does, and even though that can't change! The same two-part reasoning applies as before: if and only if you were to take just the larger box, then the state of the universe at the time the predictor takes a snapshot must have been such that you would take just that box if you were to see a million dollars in that box. If and only if the past state had been thus, the Predictor would have put a million dollars in the box.
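As an illustrative aside (not part of the talk), the transparent-box variant can be sketched by treating the player as a policy: a function from what is seen in the larger box to an action. The sketch assumes a perfectly accurate predictor, modelled by simply calling the policy, which is stronger than the "high accuracy" the talk stipulates. The policy names are hypothetical.

```python
# Transparent Newcomb's Problem with an idealized, perfectly accurate
# predictor (an assumption of this sketch). A policy maps the amount
# seen in the larger box to "one_box" or "two_box".

SMALL_BOX = 1_000
BIG_BOX = 1_000_000


def predictor_fills_box(policy):
    """Simulate the player seeing a million dollars; fill the box iff they would take it alone."""
    return policy(BIG_BOX) == "one_box"


def play(policy):
    """Return the player's winnings when the scenario is presented for real."""
    big_contents = BIG_BOX if predictor_fills_box(policy) else 0
    action = policy(big_contents)
    if action == "one_box":
        return big_contents
    return big_contents + SMALL_BOX


def one_boxer(seen_in_big_box):
    """Takes the larger box alone, whatever it is seen to contain."""
    return "one_box"


def two_boxer(seen_in_big_box):
    """Takes both boxes, whatever the larger box is seen to contain."""
    return "two_box"


print(play(one_boxer))  # 1000000
print(play(two_boxer))  # 1000
```

In this model the two-boxing policy is never shown a full box, which matches the point that such a person would never be presented with the million dollars in the first place.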

[15:07]

Now, the prescription here to take just the larger box is more shockingly counter-intuitive than I can hope to decisively argue for in a brief talk, but, do at least note that a person who agrees that it is rational to take just the one box here does fare better than a person who believes otherwise, who would never be presented with a million dollars in the first place. If we do, at least tentatively, accept some of this analysis, for the sake of argument to see what follows from it, then we can move on now to another toy scenario, which dispenses with the determinism and super-prediction assumptions and arguably has more direct real world applicability.

[15:42]

That scenario is the famous prisoner's dilemma. The prisoner's dilemma is a two player game in which both players make their moves simultaneously and independently, with no communication until both moves have been made. A move consists of writing down either the word “cooperate” or “defect.” The payoff matrix is as shown:

If both players choose cooperate, they both receive 99 dollars. If both defect, they both get 1 dollar. But if one player cooperates and the other defects, then the one who cooperates gets nothing, and the one who defects gets 100 dollars.

[16:25]

Crucially, we stipulate that each player cares only about maximizing her own expected payoff, and that the payoff in this particular instance of the game is the only goal, with no effect on anything else, including any subsequent rounds of the game, that could further complicate the decision. Let's assume that both players are smart and knowledgeable enough to find the correct solution to this problem and to act accordingly. What I mean by the correct answer is the one that maximizes that player's expected payoff. Let's further assume that each player is aware of the other player's competence, and their knowledge of their own competence, and so on. So then, what is the right answer that they'll both find?

[17:07]

On the face of it, it would be nice if both players were to cooperate, and receive close to the maximum payoff. But if I'm one of the players, I might reason that my opponent's move is causally independent of mine: regardless of what I do, my opponent's move is either to cooperate or not. If my opponent cooperates, I receive a dollar more if I defect than if I cooperate- $100 vs. $99. Likewise if my opponent defects: I get a dollar more if I defect than if I cooperate, in this case 1 dollar vs. nothing. So, in either case, regardless of what move my opponent makes, my defecting causes me to get one dollar more than my cooperating causes me to get, which seemingly makes defecting the right choice. Defecting is indeed the choice that's endorsed by standard game theory. And of course my opponent can reason similarly.
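As an illustrative aside (not part of the talk), the dominance argument can be checked mechanically against the payoffs stated earlier. The dictionary layout and function names are choices made for this sketch.

```python
# My payoff in dollars, keyed by (my_move, opponent_move), using the
# figures given in the talk. The names here are illustrative.

MOVES = ("cooperate", "defect")

PAYOFF = {
    ("cooperate", "cooperate"): 99,
    ("cooperate", "defect"): 0,
    ("defect", "cooperate"): 100,
    ("defect", "defect"): 1,
}


def causal_best_reply(opponent_move):
    """Best move if the opponent's move is held fixed, independent of mine."""
    return max(MOVES, key=lambda my_move: PAYOFF[(my_move, opponent_move)])


def gain_from_defecting(opponent_move):
    """Extra dollars from defecting rather than cooperating against a fixed move."""
    return PAYOFF[("defect", opponent_move)] - PAYOFF[("cooperate", opponent_move)]


for opponent_move in MOVES:
    print(opponent_move, causal_best_reply(opponent_move), gain_from_defecting(opponent_move))
```

Holding the opponent's move fixed, defecting is worth one extra dollar in both columns, which is the sense in which it dominates.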

[18:06]

So, if we're both convinced that we only have a choice about what we can cause, then we're both rationally compelled to defect, leaving us both much poorer than if we both cooperated. So, here again, an exclusively causal view of what we have a choice about leads to us having to lament that our unfortunate rationality keeps a much better outcome out of our reach. But we can arrive at a better outcome if we keep in mind the lesson from Newcomb's problem or even the handraising example that it can make sense to act for the sake of what would be the case if you so acted, even if your action does not cause it to be the case. Even without the help of any super-predictors in this scenario, I can reason that if I, acting by stipulation as a correct solver of this problem, were to choose to cooperate, then that's what correct solvers of this problem do in such situations, and in particular that's what my opponent, as a correct solver of this problem, does too.

[19:05]

Similarly, if I were to figure out that defecting is correct, that's what I can expect my opponent to do. This is similar to my ability to predict what your answer to adding a given pair of numbers would be: I can merely add the numbers myself, and, given our mutual competence at addition, solve the problem. The universe is predictable enough that we routinely, and fairly accurately, make such predictions about one another. From this viewpoint, I can reason that, if I were to cooperate or not, then my opponent would make the corresponding choice- if indeed we are both correctly solving the same problem, my opponent maximizing his expected payoff just as I maximize mine. I therefore act for the sake of what my opponent's action would then be, even though I cannot causally influence my opponent to take one action or the other, since there is no communication between us. Accordingly, I cooperate, and so does my opponent, using similar reasoning, and we both do fairly well.
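As an illustrative aside (not part of the talk), the symmetric reasoning can be sketched by comparing only the outcomes in which both players make the same move. This encodes the talk's idealized stipulation that both players correctly solve the same problem. The function name is hypothetical.

```python
# Choosing under the stipulation that my opponent, as a correct solver of
# the same problem, makes whatever move I make. Payoffs are those given
# in the talk; the function name is illustrative.

MOVES = ("cooperate", "defect")

PAYOFF = {
    ("cooperate", "cooperate"): 99,
    ("cooperate", "defect"): 0,
    ("defect", "cooperate"): 100,
    ("defect", "defect"): 1,
}


def symmetric_choice():
    """Pick the move that is best given that the opponent would make the same move."""
    return max(MOVES, key=lambda move: PAYOFF[(move, move)])


print(symmetric_choice())  # cooperate
```

The off-diagonal outcomes are never evaluated here because the stipulated symmetry rules them out, so the comparison is just 99 dollars against 1 dollar.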

[20:05]

One problem with the Prisoner's Dilemma is that the idealized degree of symmetry that's postulated between the two players may seldom occur in real life. But there are some important generalizations that may apply much more broadly. In particular, in many situations, the beneficiary of your cooperation may not be the same as the person whose cooperation benefits you. Instead, your decision whether to cooperate with one person may be symmetric to a different person's decision to cooperate with you. Again, even in the absence of any causal influence upon your potential benefactors, even if they will never learn of your cooperation with others, and even, moreover, if you already know of their cooperation with you before you make your own choice. That is analogous to the transparent version of Newcomb's Problem: there too, you act for the sake of something that you already know is already obtained.

[21:04]

Anyways, as many authors have noted with regards to the Prisoner's Dilemma, this is beginning to sound a little like the Golden Rule or the Categorical Imperative: act towards others as you would like others to act towards you, in similar situations. The analysis in terms of counterfactual reasoning provides a rationale, under some circumstances, for taking an action that causes net harm to your own interests and net benefit to others' interests although the choice is still ultimately grounded in your own goals because of what would be the case because of others' isomorphic behavior if you yourself were to cooperate or not. Having a derivable rationale for ethical or benevolent behaviour would be desirable for all sorts of reasons, not least of which is to help us make the momentous decisions as to how or even whether to engineer the Singularity, and also to tell us what sort of value system we might want- or expect- an AI to have.

[22:08]

But a key assumption of the argument just given is that it requires all participants to be perfectly rational, and, further, to be aware of all others' rationality- Douglas Hofstadter refers to this as the “superrationality” assumption. It would be nice to be able to show that, even among those of us with more limited rationality, there's still enough of a “would be-if” relation, albeit perhaps quantitatively weakened, between my own choice and others' choices in Prisoner's Dilemma situations to justify the cooperative solution in such cases. But I'm not aware of an entirely satisfactory treatment of that question, so it remains an open question as far as I know. Still, I think it's hopeful that we can at least get our foot in the door, by arguing for the correctness of the cooperative solution in some cases that presume idealized rationality.

[23:00]

Summing up, the key points are that:

- Inalterability does not imply futility
- You have a choice about some things that your action cannot change or even cause
- One consequence is a derivable prescription to sometimes cooperate with others even when doing so causes net harm to your goals and net benefits to their goals
- The same false intuition that makes all choice seem impossible or futile given determinism, also makes it seem futile to act for the sake of the million dollars in Newcomb's Problem, or for the sake of another player's cooperation in the Prisoner's Dilemma, since you cannot cause, or even change, what you act for the sake of

Making a fully convincing case for all of this would require a convincing theory of what the "would be-if" relation, the counterfactual or subjunctive relation, consists of, which I have not presented. What this talk outlined instead is a glimpse of some consequences that such a theory would arguably have to lead to, some answers the theory would have to give in some key examples, if the theory can avoid putting us in the position of lamenting our own rationality.

As for an underlying theory, my book Good and Real sketches what could be seen as a modified evidentialist theory, for those familiar with that concept. But there is some exciting work being pursued now by Eliezer Yudkowsky and others at the Singularity Institute and elsewhere that may be converging on a much more rigorous and elegant underlying theory, and hopefully we'll be hearing more about that in the not-too-distant future.

4 comments

comment by Shmi (shminux) · 2012-08-08T02:15:56.259Z · LW(p) · GW(p)

This presentation is a pretty good description of some of the basic points oft discussed here:

An algorithm feels like free will from the inside and Omega has the outside view, and so cannot be gamed.

Ethics is a set of reusable computational shortcuts.

Fully recursive reasoning compels cooperation between symmetric agents.

I guess all these points bear repeating, given how counter-intuitive they appear. Using a simple, fully deterministic world to present them, without any of the quantum or random muddiness of our world, is a nice touch.

comment by torekp · 2012-08-11T10:33:21.140Z · LW(p) · GW(p)

Drescher commits one of my pet peeves, the modal scope fallacy. In a deterministic universe it may be impossible that (the laws of the universe are L, the earlier state of the universe is E, and the agent's action is not A). But it is invalid to infer from that to "it is impossible that the agent's action is not A" - that's a change in the scope of the modal operator. The agent's raising its hand, or moving to its left or right, is not inalterable: those events depend on the agent's choice.

This doesn't detract from Drescher's main argument, AFAICT.