AlexaTM - 20 Billion Parameter Model With Impressive Performance

post by MrThink (ViktorThink) · 2022-09-09T21:46:30.151Z · LW · GW

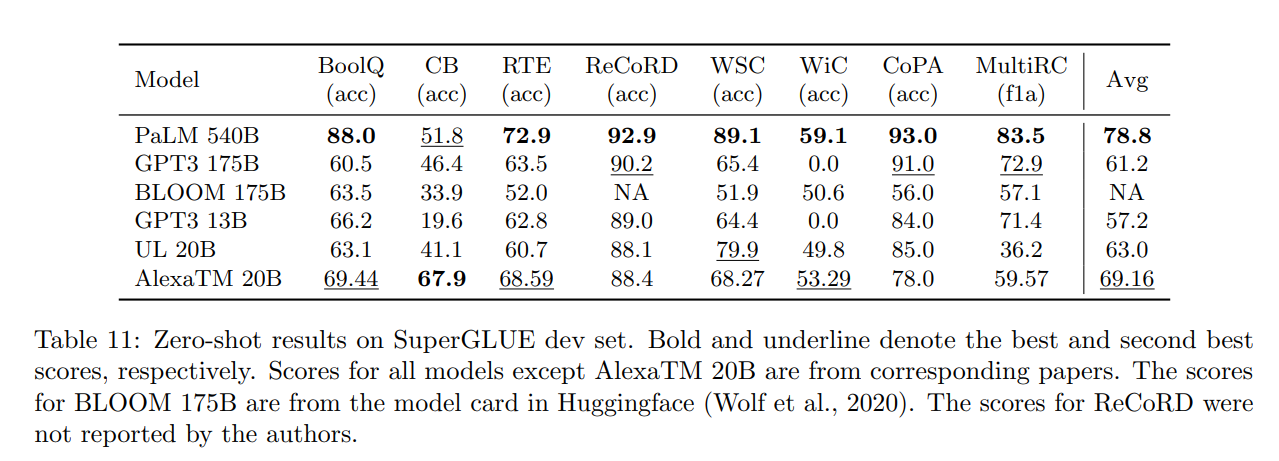

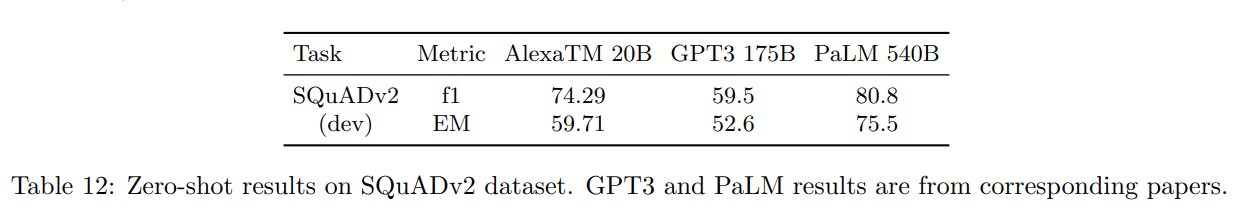

Amazon trained a 20-billion-parameter seq2seq model, AlexaTM, that outperforms GPT-3 on SuperGLUE and SQuADv2 and is not (that) far behind PaLM, which has 540 billion parameters.

Benchmarks:
