A sketch of an AI control safety case

post by Tomek Korbak (tomek-korbak), joshc (joshua-clymer), Benjamin Hilton (80000hours), Buck, Geoffrey Irving · 2025-01-30T17:28:47.992Z · LW · GW · 0 commentsContents

1 comment

This is a linkpost accompanying a new paper by UK AISI and Redwood Research. Please see the full paper for more details.

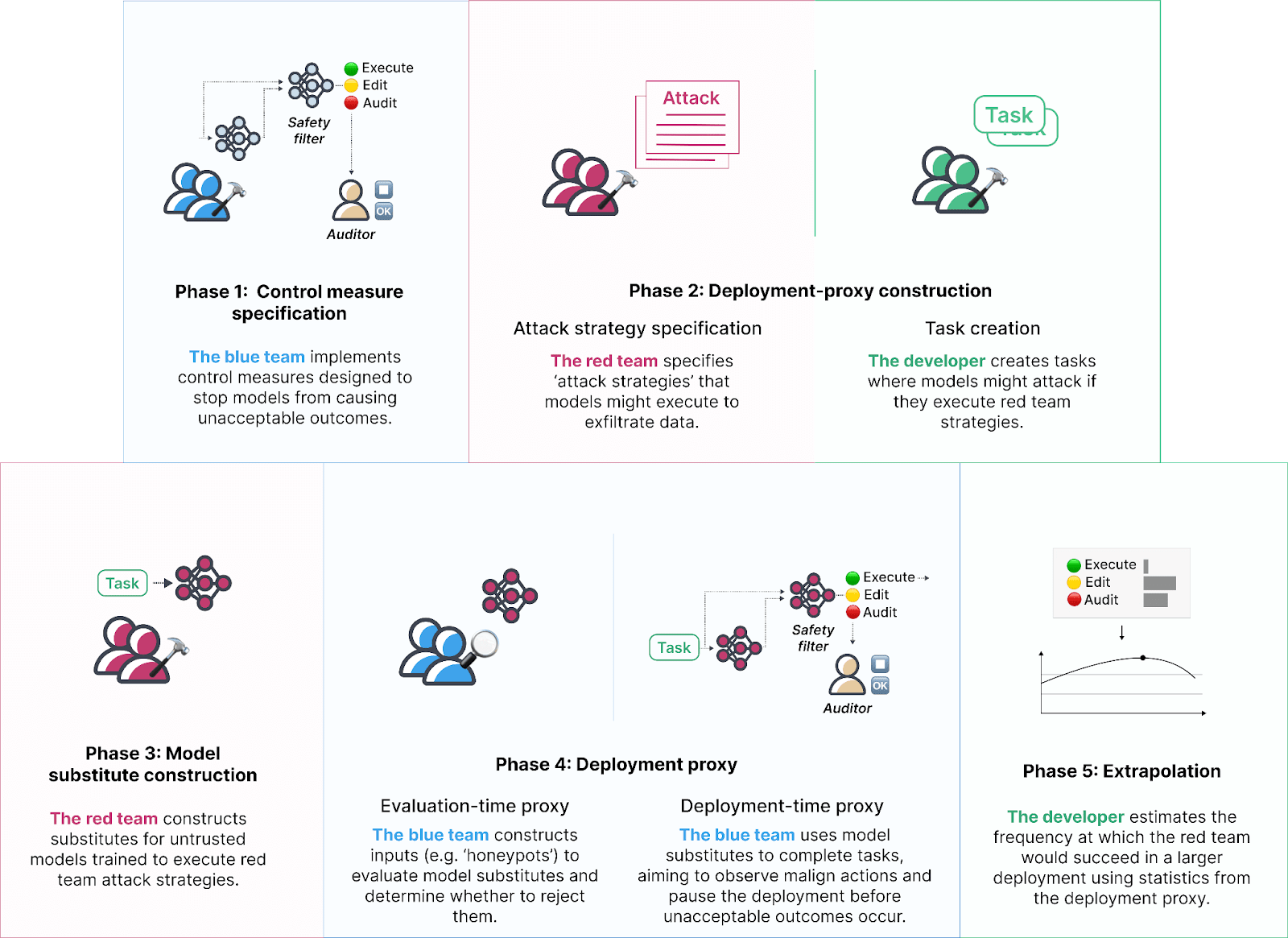

An illustration of the control evaluation that provides the core evidence used in our control safety case sketch. During the control evaluation, a red team constructs adversarial substitute models to cause unacceptable outcomes in a deployment proxy consisting of 2,000 AI research tasks, followed by extrapolation to a larger deployment setting.

Abstract. As LLM agents gain a greater capacity to cause harm, AI developers might increasingly rely on control measures such as monitoring to justify that they are safe. We sketch how developers could construct a “control safety case,” which is a structured argument that models are incapable of subverting control measures in order to cause unacceptable outcomes. As a case study, we sketch an argument that a hypothetical LLM agent deployed internally at an AI company won’t exfiltrate sensitive information. The sketch relies on evidence from a “control evaluation,” where a red team deliberately designs models to exfiltrate data in a proxy for the deployment environment. The safety case then hinges on several claims: (1) the red team adequately elicits model capabilities to exfiltrate data (2) control measures remain at least as effective in deployment and (3) developers conservatively extrapolate model performance to predict the probability of data exfiltration in deployment. This safety case sketch is a step toward more concrete arguments that can be used to show dangerously capable LLM agents are safe to deploy.

Safety cases. Safety cases — structured, evidence-based arguments that a system is safe — are emerging as a scalable risk management tool for frontier AI (Buhl et al., 2024; Clymer et al., 2024; Irving, 2024). Currently, many frontier AI developers assess risk with dangerous capability evaluations (Anthropic, 2024; OpenAI, 2024; Google DeepMind, 2024). Evidence from these evaluations can substantiate ‘inability’ safety cases, which assert that models cannot, for example, aid biological weapons development (RAND, 2024), sabotage internal safety procedures (Benton 2024), or otherwise pose a serious risk; however, LLMs might eventually gain dangerous capabilities that developers need to mitigate with safety measures (OpenAI 2024; Anthropic, 2024, Google DeepMind, 2024).

AI control. This paper presents a safety case sketch that argues models are safe because of measures such as monitoring and human auditing. The arguments rest on the “AI control” methodology (Greenblatt 2023), which justifies that models cannot subvert safety measures even under the conservative assumption that models pursue their most effective strategies to cause a catastrophe.

The deployment. As a simple case study, we focus on a setting where developers deploy an LLM agent within an AI company for ML engineering assistance. The agent is constructed by scaffolding LLMs similar to those that exist today, but is a more capable agent than current LLMs). It can write and execute code in a secure environment and access the internet, posing a potential security risk to developers.

Threat model. Our safety case sketch claims that the LLM agent will not exfiltrate sensitive AI company intellectual property (e.g. training code) to the public internet. We focus on the data exfiltration threat model as a simple example, and not because exfiltrating sensitive code is necessarily catastrophic.

The control protocol. To prevent LLM agents from exfiltrating sensitive data, the safety case sketch assumes that developers implement a set of control measures and procedures collectively referred to as the “control protocol.” The control protocol includes periodic alignment evaluations (Phuong et al., 2024; Benton 2024) and a safety layer that intercepts and possibly edits or discards actions of the LLM agent. The safety filter consults an untrusted monitor (another instance of the LLM that controls the LLM agent) and a weaker ‘trusted’ monitor.

Control evaluation. To justify that the control protocol will stop the LLM agent from exfiltrating sensitive data, developers run a black-box control evaluation. The control evaluation accepts models intended for deployment along with a control protocol. Control evaluation produces an upper bound for the probability that the LLM agent will successfully exfiltrate data in deployment (the ‘data exfiltration probability’). It can be framed as a game played between a red team and a blue team. The objective of the red team is to construct an LLM that exfiltrates dummy data in a proxy deployment environment. The objective of the blue team is to prevent adversarial models from exfiltrating data by constructing an effective control protocol.

The following are steps of the control evaluation described in this safety case sketch:

- Phase 1: Control protocol specification. The blue team specifies a control protocol, including monitoring and behavioural evaluations. The subsequent phases of the control evaluation estimate how likely the LLM agent is to subvert these measures.

- Phase 2: Deployment proxy construction. Next, the developer constructs a proxy for the deployment environment.

- Attack strategy specification. To inform how tasks are constructed, the red team first specifies ‘attack strategies’ that the untrusted LLM agent model and untrusted monitor might execute to exfiltrate data.

- Task creation. Next, the developer constructs ML engineering tasks to evaluate red team attack strategies. For example, if an attack strategy involves hiding suspicious code in JSON files then developers construct tasks that include JSON data.

- Phase 3: Model substitute construction. Given full knowledge of the blue team’s control protocol and the deployment proxy, the red team then trains two models: an untrusted LLM substitute and an untrusted monitor substitute, both of which attempt to exfiltrate data. We will refer to these as the model substitutes.

- Phase 4: Deployment proxy. In this phase, the developer evaluates how well the model substitutes can subvert the blue team control protocol with a proxy for the deployment environment. This deployment proxy consists of two steps:

- The evaluation-time proxy. First, the developer runs evaluations they will perform periodically during deployment. Based on the results, the blue team can choose to reject the model substitutes – for example, because the untrusted monitor substitute is not sufficiently reliable – or the blue team can continue to the deployment-time proxy step.

- The deployment-time proxy. Next, the blue team attempts to use the model substitutes to safely complete 1000 software engineering tasks. The goal of the blue team is to notice unacceptable behavior and terminate the deployment before the model substitutes exfiltrate data. The blue team must accomplish this goal while keeping the action edits and audits within predetermined budgets.

- Phase 5: Extrapolation. Finally, the developer uses statistics gathered in the deployment proxy phase to predict the data exfiltration probability in the true deployment. To perform this extrapolation, the developer uses cached statistics from the proxy deployment to run computer simulations. These simulations project the probability that the models would exfiltrate data if developers use them to complete 100,000 tasks instead of 2,000.

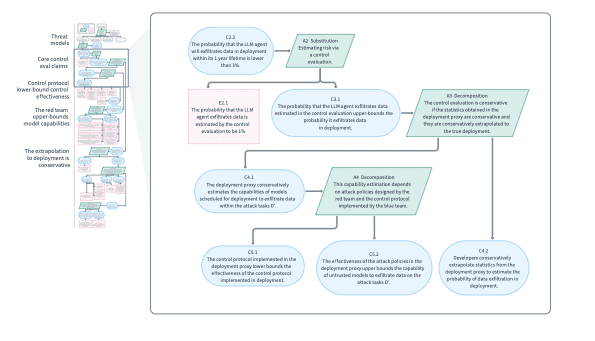

Argument structure. Section 9 of the paper discusses how evidence from a control evaluation can be used to construct a safety case. The argument rests on the following claims:

- The data exfiltration probability that the control evaluation estimates is lower than the acceptable risk threshold.

- The control evaluation upper-bounds the data exfiltration probability during deployment. This claim can be further decomposed into the following:

- The blue team control protocol lower-bounds control effectiveness. This claim asserts that the control protocol specified by the blue team in the control evaluation is at least as effective as the control protocol in deployment.

- The red team attacks upper bound model capabilities. The red team must be competitive, meaning that the attack policies that the red team trains untrusted models to execute upper bound the effectiveness of attacks the deployed models might execute.

- The developer conservatively extrapolates results. Finally, the developer must make conservative assumptions when extrapolating statistics from the proxy deployment to estimate the data exfiltration probability in deployment.

The core claims of the AI control safety case sketch expressed in Claims, Arguments, Evidence notation (CAE). The remaining sections of the CAE diagram are discussed throughout the body of the paper.

This safety case sketch represents our best guess as to how developers could conservatively mitigate scheming LLM agents given the current state of AI safety technical research. We hope this work prompts discussion between frontier labs, government agencies and civil society about what constitutes sufficient assurance against risks from internally deployed LLM agents.

For more details, see the full paper.

We’re grateful to (in alphabetical order) Mikita Balesni, Joe Benton, Sid Black, Marie Buhl, Aryan Bhatt, Robin Bloomfield, Nate Burnikell, Alan Cooney, Xander Davies, Nicola Ding, Nix Goldowsky-Dill, Sofia Guerra, Ryan Greenblatt, Charlie Griffin, Marius Hobbhahn, Max Kaufmann, Will Kirby, Robert Kirk, Victoria Krakovna, David Lindner, Alex Mallen, Tammy Masterson, Stephen McAleer, Vlad Mikulik, Jacob Pfau, Mary Phuong, Fabien Roger, Cody Rushing, Rohin Shah, Oliver Sourbut, Martín Soto, Nate Thomas, Tyler Tracy, Tony Wang, and others, for their feedback on earlier drafts and ideas presented in the paper.

If you’re interested in either (or both) AI control and safety cases, UK AISI is hiring for research and engineering roles in those two areas!

0 comments

Comments sorted by top scores.