How to convince someone AGI is coming soon?

post by Zohar Jackson (zohar-jackson) · 2023-03-22T22:16:34.962Z · 3 comments

This is a question post.

Contents

Answers · 14 Jay Bailey · 4 shminux · 0 the gears to ascension · 3 comments

I was trying to convince my friend that AGI poses a serious risk to humanity. He agreed with me that AGI would pose a serious threat, but was not convinced that AGI is coming or is even possible. I tried a number of ways to convince him but was unsuccessful.

What is the best way to convince someone AGI is coming? Is there some convincing educational material that outlines the arguments?

Answers

Based on the language you've used in this post, it seems like you've tried several arguments in succession, none of them have worked, and you're not sure why.

One option is to first focus on understanding his belief as well as possible. Once you understand his conclusions and why he reached them, you might have more luck. Street Epistemology offers some useful tips on this style of inquiry.

(It is also worth turning this lens on yourself and asking why it is so important to you that your friend believes AGI is imminent. Then you can decide whether it's worth continuing to try to persuade him.)

Given that there is no consensus on the topic even among people who do this professionally, maybe trying to convince someone is not the best idea? It pattern-matches to "join my doomsday cult", despite the obvious signs of runaway AI improvement. Why do you want to convince them? What is in it for you?

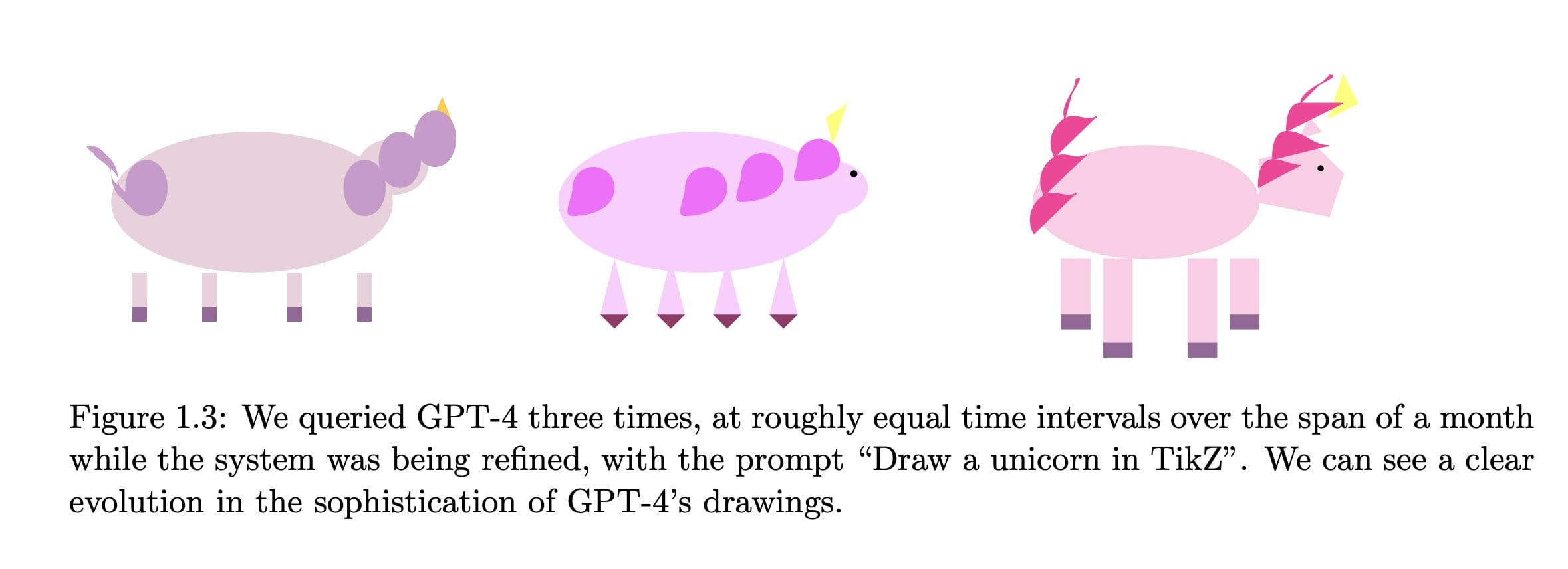

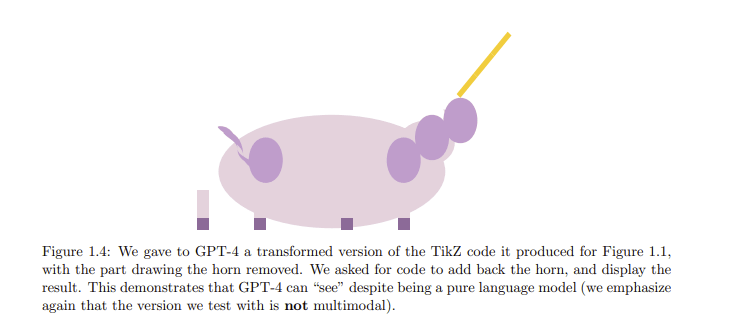

Tell them Microsoft has literally published a paper saying they have a small (read: young) AGI.

https://arxiv.org/abs/2303.12712

↑ comment by [deleted] · 2023-03-23T03:51:18.599Z

The 'skeptic' would likely respond that Microsoft has a vested interest in making such a finding.

'Skeptics' update slowly in response to new evidence that invalidates their beliefs. I think arguing with them is a waste of your time; in practice they will always be miscalibrated.

3 comments


comment by JBlack · 2023-03-23T02:00:13.708Z

He does not have to be convinced that AGI is coming soon. We have some evidence that it probably is, but there are still potential blockers. There is a reasonable chance that it will not actually come soon, and perhaps you should not attempt to convince someone of a proposition that may be false.

Your friend's firm belief that it is impossible seems to be much less defensible. Are you sure that you each have the same concept of what AGI means? Does he accept that AGI is logically possible? What about physically possible? Or is his objection more along the lines that AGI is not achievable by humanity? Or just that we cannot achieve it soon?

↑ comment by the gears to ascension (lahwran) · 2023-03-23T03:16:29.782Z

AGI is here, now. Kiddo is a bit young still is all.

https://arxiv.org/pdf/2303.12712.pdf

↑ comment by Vladimir_Nesov · 2023-03-23T05:40:44.271Z

There is no process of growing up being implemented, though, other than what happens during SSL (self-supervised learning). And that's probably the shoggoth growing up, not the masks (who would otherwise have a chance at independent existence). There is no learning on deliberation in sequences of human-imitating tokens, only learning on whatever happens in the residual stream as the LLM is forced to read the dataset. So the problem is that instead of letting human-imitating masks grow up, the labs are letting the underlying shoggoths grow up, getting better at channeling situationally unaware masks.