Creating 'Making God': a Feature Documentary on risks from AGI

post by Connor Axiotes (connor-axiotes-1) · 2025-04-15T02:56:09.206Z

Please donate to our Manifund (as of 14.04.25 we have two more days of donation matching up to $10,000). Email me at connor.axiotes or DM me on Twitter for feedback and questions.

Project summary:

- To create a cinematic, accessible, feature-length documentary. 'Making God' is an investigation into the controversial race toward artificial general intelligence (AGI).

- Our audience is largely non-technical, so we will first give them a thorough grounding in recent advances in AI and then explore the race toward the most consequential technology ever created.

- Following in the footsteps of influential social documentaries such as Blackfish, Seaspiracy, The Social Dilemma, and An Inconvenient Truth, our film will shine a light on the risks associated with the development of AGI.

- We are aiming for film festival acceptances, nominations, and wins, and for distribution on the world’s biggest streaming platforms.

- This will give the non-technical public a strong grounding in the risks from a race to AGI. If successful, hundreds of millions of streaming-service subscribers will be better informed about the risks and more likely to take action when the moment comes.

Rough narrative outline:

- Making God will begin by introducing an audience with limited technical knowledge to recent advances in AI. For some viewers, the only thing they have used or heard of may be ChatGPT, which OpenAI launched in November 2022. A documentary like this is neglected, as most other AI documentaries assume a lot of prior knowledge.

- Having given the audience a grounding in AI advances and the risks they may pose, we dive deep into the frontier, looking at the individuals driving the race to AGI. We will put a spotlight on the CEOs of the major AI companies, interview leading experts, and speak to concerned voices in politics and civil society.

- The documentary will take an objective, truth-seeking approach. Its primary goal is to truly understand whether we should be worried or optimistic about the coming technological revolution.

Our basic model for why this is needed:

- We think advanced AI and AGI, if developed correctly and with complementary regulation and governance, can change the world for the better.

- We are worried that, as things stand, leading AI companies seem to be prioritizing capabilities over safety, international cooperation on AI governance seems to be breaking down, and technical alignment bets might not work in time.

- At minimum, we think a documentary made for people who do not yet know about the risks, and aimed at a huge audience (such as a streaming service’s), might help the public better understand them. Hundreds of millions of people watch content on streaming services.

- At best, we might catalyze a Blackfish-, Seaspiracy-, or An Inconvenient Truth-style spirit in the audience, so that one day viewers might protest, contact their legislators, or join a movement.

***

Update [14.04.25]

- We spent the last couple of weeks filming our “Proof of Concept” to show funders the quality of our documentary.

- We have conducted 5 cinematic interviews with people from civil society and unions, legal experts, and AI experts. We have provided stills; they are not yet fully edited, but they give an indication of the style and quality.

- To increase the likelihood of film festival acceptance and subsequent acquisition by a streaming service, we need additional funding over the next two months to hire a full production team and equipment. Mike filmed these interviews by himself, and I (Connor) conducted them.

Next steps: edit these 5 interviews into our Proof of Concept video; hire a full production team for new shoots and reshoots where necessary; secure more great interviews; continue fundraising.

Over the last two weeks we have conducted 5 interviews, and more are scheduled for the next couple of weeks.

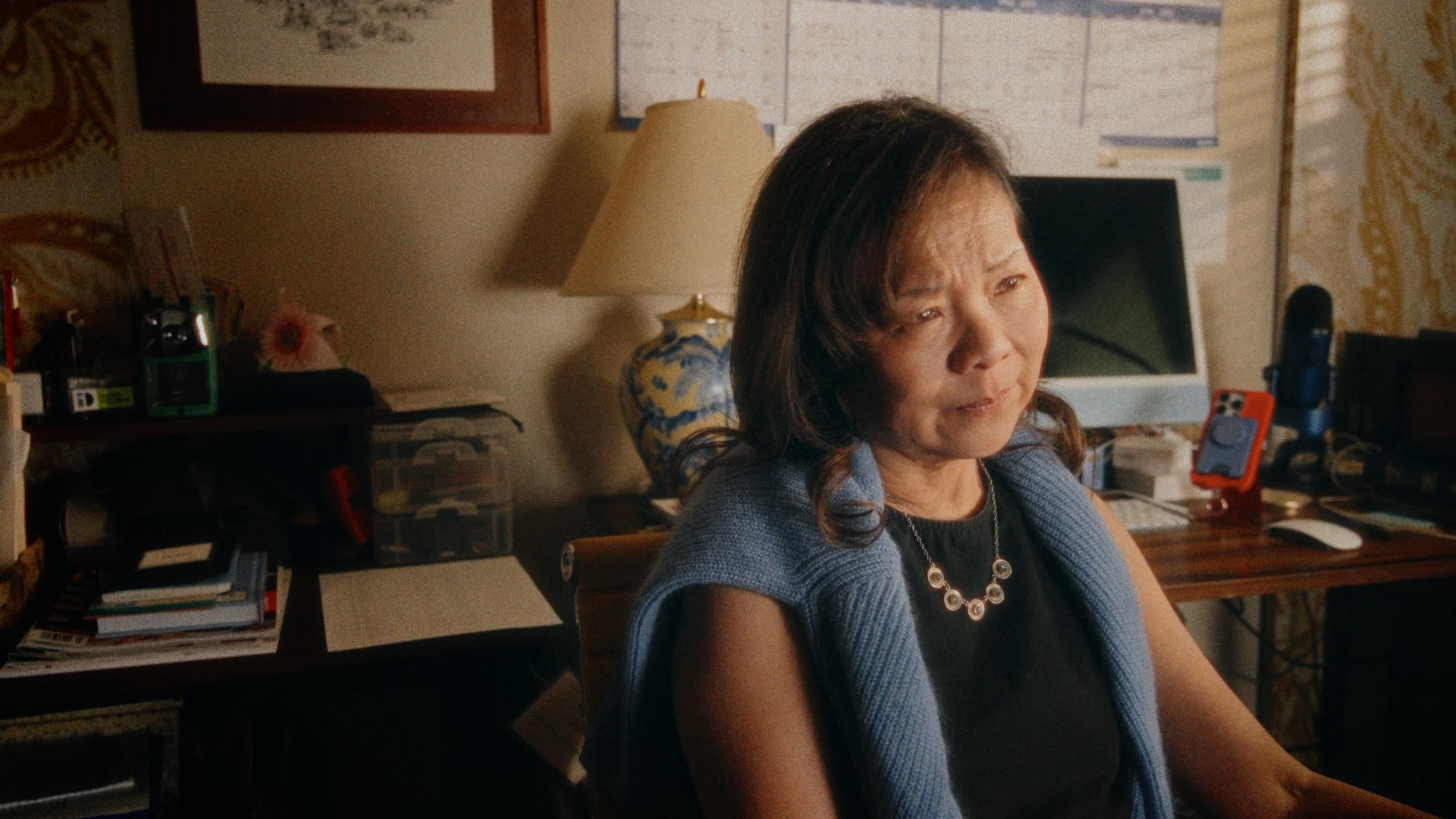

1) Prof. Rose Chan Loui is the Founding Executive Director, Lowell Milken Center on Philanthropy and Nonprofits at UCLA.

- We went to UCLA for a conference on AI and Nonprofits, and later filmed Rose in her family home. As we entered, we met her husband and her two dogs. Rose beamed at us and showed us into her office, hoping we wouldn’t find it too messy. It wasn’t!

- When we sat down for the interview, Rose spoke to us about: her work in nonprofits; being dragged into the AI conversation through her legal background; the history of OpenAI as a nonprofit; Delaware Public Benefit Corporations and Anthropic; Sam Altman’s firing, and her hopes and worries regarding the OpenAI board; and the worries she has for her family as AGI is developed.

- Across 3 separate 80,000 Hours podcast episodes, her expertise on AI lab nonprofit structures has amassed millions of views.

2) Prof. Ellen Aprill is Senior Scholar in Residence and taught Political Activities of Nonprofit Organizations at UCLA in 2024.

- Ellen retired last year but came back to UCLA to work at the center focused on nonprofits. Like Rose, she’d been dragged into the world of AI and is worried about its implications for the world. As we pulled into her drive, Ellen ushered us into her family home. We were briefly introduced to her husband, Sunny, a retired lawyer, and Ellen asked whether we’d mind setting up while she called a student to go through their work. Even after retiring, it seemed Ellen hadn’t lost her enthusiasm for teaching and supporting others.

- Ellen spoke to us in her home office about: the incorporated missions of nonprofits; valuing nonprofit AI research labs; her worries about the future; and her optimism about humanity.

3) Holly Elmore is the Executive Director of Pause AI US.

- She spoke to us in Dolores Park at a leafleting session where she and other Pause AI volunteers gave up their spare time to educate the public on the risks from AI. The public seemed interested in what they had to say, though most smiled and carried on with their day. Holly and other volunteers spoke to us about their reasons for protesting. Their proposed solution for mitigating risks from AI is a ‘pause’ on AI development.

4) Eli Lifland is the Founding Researcher at the AI Futures Project, and a top forecaster.

- He spoke to us about: his work forecasting the development of AI, in particular artificial superintelligence (ASI); a brief history of deep learning; what LLMs are; his work on AI 2027, predicting when ASI might flood the remote job economy; his worries about AGI lab race dynamics; a race to the bottom on AI safety; US-China race dynamics; his hope that we slow down a bit to get this right; and the burden of predicting a possible catastrophe while the rest of the world seems unaware and unprepared.

5) Heather-Rose is Government Affairs Lead in LA for the labor union SAG-AFTRA.

- She spoke to us about: her political campaigning to educate members of Congress on risks from AI; her service on SAG-AFTRA’s New Technology Committee, which focuses on protecting actors' rights against AI misuse; her advocacy, since becoming interested in AI safety in 2020, for regulation of AI-generated content and deepfakes; and her concerns about job losses.

Civil Society

- We have also been interviewing the general public about their views on AI and their worries and hopes.

Upcoming Interviews

- Cristina Criddle, Financial Times tech correspondent covering AI (she recently broke the Financial Times story about OpenAI giving new models days of safety testing rather than months).

- David Duvenaud, former Anthropic team lead.

- John Sherman (Dads Against AI), podcaster.

Potential Interviews

- Jack Clark (we are in touch with the Anthropic press team).

- Gary Marcus (he asked us to get back to him in a couple of weeks).

Interviews We’d Love

- Kelsey Piper, Vox.

- Daniel Kokotajlo, formerly OpenAI.

- AI Lab employees.

- Lab whistleblowers.

- Civil society leaders.

Points to Note:

- The legal interviews focus on Sam Altman and OpenAI because the professors are legal experts in nonprofit reorganization. Future interviews will cover other AGI labs too, as the Eli interview already does by focusing on other players in the field.

- The stills are from interviews shot by a one-person crew (just Mike, our Director). Future interviews will be even more cinematic with a full (or even half) crew. This is what we need our immediate next round of funding for.

***

Project Goals:

- We are aiming for film festival acceptances, nominations, and wins, and for distribution on the world’s biggest streaming platforms, such as Netflix, Amazon Prime, and Apple TV+.

- To give the non-technical public a strong grounding in the risks from a race to AGI.

- If successful, hundreds of millions of streaming-service subscribers will be better informed about the risks and more likely to take action when the moment comes.

- As timelines shorten, technical alignment bets look less likely to pay off in time, and international governance mechanisms seem to be breaking down. Our goal is therefore to influence public opinion on the risks so that people might take political or social action before AGI arrives. If we do this right, we could have a high chance of moving the needle.

Some rough numbers:

- Festival Circuit: We are targeting acceptance at major film festivals including Sundance, SXSW, and the Toronto International Film Festival, which have acceptance rates of 1-3%.

- Streaming Acquisition: Following festival exposure, we aim for acquisition by Netflix, Amazon Prime, or Apple TV+, platforms with 200M+ subscribers collectively. Based on comparable documentary performance, we estimate:

  - Conservative scenario: 8M viewers (4% platform reach)

  - Moderate scenario: 15M viewers (7.5% platform reach)

  - Optimistic scenario: 25M+ viewers (12.5%+ platform reach)

- Impact Metrics: We will track:

  - Viewership numbers across platforms

  - Pre/post viewing surveys on AI risk understanding

  - Media coverage and policy discussions citing the documentary

  - Changes in public opinion polling on AI regulation

- Theory of Impact: If successful, we will create an informed constituency capable of supporting responsible AI development policies during potentially critical decision points in the next 2-5 years.
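The viewership scenarios above are simple ratios against the combined subscriber base. As a minimal sketch, assuming that "platform reach" means viewers divided by the 200M combined subscriber figure (the percentages are given without the formula), the arithmetic can be reproduced in Python. The names `COMBINED_SUBSCRIBERS`, `reach_percent`, and `viewers_for_reach` are hypothetical, chosen for illustration only.

```python
# Minimal sketch of the reach arithmetic behind the viewership scenarios.
# ASSUMPTION: "platform reach" = viewers / combined subscriber base (200M).

COMBINED_SUBSCRIBERS = 200_000_000  # "200M+ subscribers collectively" (a lower bound)

# Viewer counts per scenario, copied from the estimates above.
SCENARIOS = {
    "Conservative": 8_000_000,
    "Moderate": 15_000_000,
    "Optimistic": 25_000_000,
}


def reach_percent(viewers, subscribers=COMBINED_SUBSCRIBERS):
    """Return viewers as a percentage of the subscriber base."""
    return 100 * viewers / subscribers


def viewers_for_reach(percent, subscribers=COMBINED_SUBSCRIBERS):
    """Inverse: the number of viewers implied by a given reach percentage."""
    return round(subscribers * percent / 100)


if __name__ == "__main__":
    for name, viewers in SCENARIOS.items():
        print(f"{name:<13} {viewers / 1e6:>5.1f}M viewers = {reach_percent(viewers):.1f}% reach")
```

Because 200M+ is a lower bound, the same viewer counts would be a smaller percentage of any larger subscriber base, so the reach figures are best read as upper estimates for those viewer counts.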

How will this funding be used?

To have a serious chance of landing on streaming services, the production quality and entertainment value have to be high. As such, we need the following funding over the next 3 months to create a film of that standard.

Accommodation [Total: £30,000]

- AirBnB: £10,000 a month for 3 months (dependent on locations for filming and accommodating crew).

Travel [Total: £13,500]

- Car Hire: £6,000 for 3 months.

- Flights: £4,500 for 3 months (to move us and crews around to locations in California, D.C., and New York).

- Misc (trains, cabs, etc.): £3,000 for 3 months.

Equipment [Total: £41,000]

- Purchasing Filming Equipment: £5,000

- Hiring Filming Equipment: £36,000 (18 shooting days)

Production Crew (30 Days of Day Rate) [Total: £87,000]

- Director of Photography: £19,500

- Sound Recordist: £18,000

- Camera Assistant/Gaffer: £13,500

- Additional Crew: £36,000

Director (3 Months): [Total: £15,000]

Executive Producer (3 months): [Total: £15,000]

MISC: £25,000 (to cover any unforeseen costs, get legal advice, insurance and other practical necessities).

TOTAL: £226,500 ($293,046)
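The budget lines above should add up to the stated category subtotals and the £226,500 total. A minimal Python sketch that re-adds them is below; the figures are copied from the budget, while the data layout and the helper names (`subtotals`, `grand_total`) are hypothetical. The dollar total is used only to back out the implied exchange rate (roughly 1.29 USD per GBP), not a quoted market rate.

```python
# Minimal sketch: re-add the budget line items and compare with the stated subtotals.
# Figures are copied from the budget; the structure and names are illustrative only.

BUDGET_GBP = {
    "Accommodation": {"AirBnB (£10,000 x 3 months)": 10_000 * 3},
    "Travel": {"Car hire": 6_000, "Flights": 4_500, "Misc (trains, cabs, etc.)": 3_000},
    "Equipment": {"Purchase": 5_000, "Hire (18 shooting days)": 36_000},
    "Production Crew": {
        "Director of Photography": 19_500,
        "Sound Recordist": 18_000,
        "Camera Assistant/Gaffer": 13_500,
        "Additional Crew": 36_000,
    },
    "Director": {"3 months": 15_000},
    "Executive Producer": {"3 months": 15_000},
    "MISC": {"Unforeseen costs, legal advice, insurance": 25_000},
}

# Subtotals and totals as stated in the post.
STATED_SUBTOTALS_GBP = {
    "Accommodation": 30_000,
    "Travel": 13_500,
    "Equipment": 41_000,
    "Production Crew": 87_000,
    "Director": 15_000,
    "Executive Producer": 15_000,
    "MISC": 25_000,
}
STATED_TOTAL_GBP = 226_500
STATED_TOTAL_USD = 293_046


def subtotals(budget):
    """Sum the line items within each category."""
    return {category: sum(items.values()) for category, items in budget.items()}


def grand_total(budget):
    """Sum every line item across all categories."""
    return sum(subtotals(budget).values())


if __name__ == "__main__":
    computed = subtotals(BUDGET_GBP)
    for category, stated in STATED_SUBTOTALS_GBP.items():
        status = "ok" if computed[category] == stated else "MISMATCH"
        print(f"{category:<20} £{computed[category]:>8,}  {status}")
    print(f"{'TOTAL':<20} £{grand_total(BUDGET_GBP):>8,}")
    # Implied rate from the two stated totals, not a quoted market rate.
    print(f"Implied USD per GBP: {STATED_TOTAL_USD / STATED_TOTAL_GBP:.4f}")
```

Running it shows every category matching its stated subtotal and a grand total of £226,500.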

Who is on your team? What's your track record on similar projects?

Mike Narouei [Director]:

- Former Creative Director at Control AI (directed multiple viral AI risk films amassing 60M+ total views over nine months).

- Directed & led a 40-person production team on a £100,000+ commercial, generating 32M views/engagements across social media within one month.

- Artistic Director for Michael Trazzi’s ‘SB-1047’ Documentary.

- Work featured by BBC, Sky News, ITV News, and The Washington Post.

- Partnered with MIT at the World Economic Forum in Davos, demonstrating Deepfake technology live in collaboration with Max Tegmark, covered by The Washington Post & SwissInfo.

- Collaborated with Apollo Research to create an animated demo for their recent paper.

- Shortlisted for the Royal Court Playwriting Award.

- Directed a number of commercials for clients such as Starbucks, Pale Waves and Mandarin Oriental.

Watch ‘Your Identity Isn’t Yours’, which Mike filmed, produced, and edited while at Control AI. The still above is from that film.

Connor Axiotes [Executive Producer]:

- Has been on TV multiple times, and has helped to produce videos and TV interviews.

- Has written multiple op-eds for major papers and blogs. Have a look here for a repository.

- Produced viral engagement with millions of impressions on X at Conjecture and the ASI.

- He worked as a senior communications adviser for a UK Cabinet Minister, making videos and working with senior journalists and TV channels in high-stakes, high-pressure environments.

- Wrote the centre-right Adam Smith Institute’s first-ever AI safety policy paper, ‘Tipping Point: on the edge of Superintelligence’, in 2023.

- He worked on a Prime Ministerial campaign and a General Election as part of the then Prime Minister’s operations team. Below, he is pictured working for the Prime Minister in a media capacity in 2024.

Donate to our Manifund (as of 14.04.25 we have two more days of donation matching up to $10,000). Email me at connor.axiotes or DM me on Twitter for feedback and questions.
