Boundless Emotion

post by GG10 · 2024-07-16T16:36:01.917Z

Key Claims:

- Emotions and feelings are features in a neural network, and their intensities are determined by the features' activation strengths

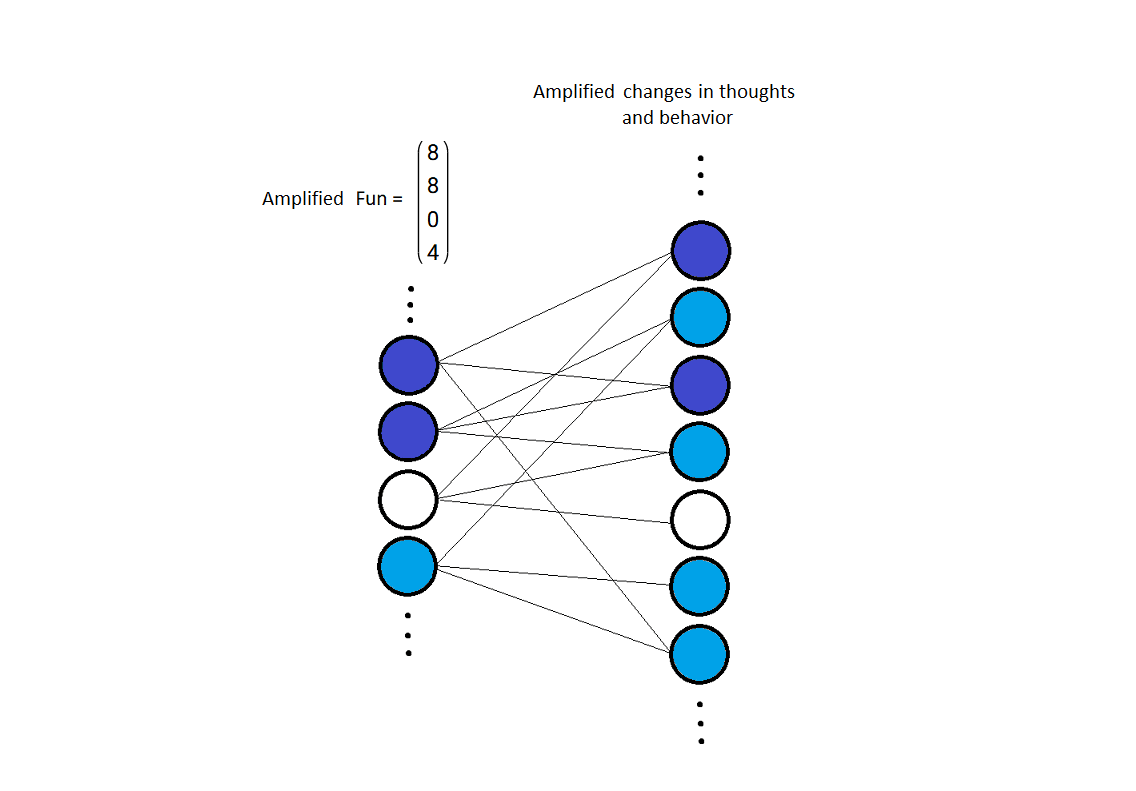

- That implies that, because features are directions in activation space and the activation strength is the magnitude, it should be possible to multiply the magnitude by arbitrarily large values (>10^1000, possibly far more) to compute feelings of that intensity, with minimal memory and compute overhead. This should still work even if the feature geometry encodes important information, since scaling changes only the magnitude and leaves the direction intact (Boundless emotion hypothesis)

- It might be possible to squeeze more intensity per bit by sacrificing precision (reaching 255 takes 8 bits only if you want to be able to specify every integer from 0 to 255; a coarser scale, such as powers of two, covers a far larger range with the same number of bits)

- Nonsense behavior caused by high-intensity emotion can be adjusted away, given that no behavior is intrinsic to any emotion (Emotion-behavior orthogonality)

- Amplifying your emotions does not require you to abandon your values; it can make you care about them more. That makes it more appealing than the popularly repulsive ideas of wireheading, the experience machine, or Brave New World (you can still go on adventures, but they would be 10^1000 times more fun)

- Feelings can also be orthogonal to the sensory information associated with them: the color blue could be painful, the taste of orange juice could be cute, or any other pairing you can come up with (Emotion-trigger orthogonality)

- Amplifying feelings to very high intensities seems hard in a biological brain, which is why I believe the topic is safe to talk about for now (though it would become dangerous if emotions are found in an AI's internals). For uploads it should be trivial, because they have direct access to their weights and can input any values they desire after locating the corresponding features with interpretability tools

- The same claims apply to future hypothetical engineered states of bliss more complex and profound than beauty and fun
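A minimal sketch of the Boundless emotion hypothesis and the precision trade-off above, assuming (as the claims do) that a feeling is a unit direction in activation space times a scalar magnitude. The `LogFeature` class and the helper names are hypothetical, made up for illustration. Storing log10 of the magnitude instead of the magnitude itself is one way to trade precision for range: a 64-bit float tops out near 1.8 × 10^308, but holds the exponent 1000 trivially.

```python
import math
from dataclasses import dataclass


def normalize(v: list[float]) -> list[float]:
    """Scale v to unit length; the result is the feature's direction."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


@dataclass
class LogFeature:
    """Hypothetical feature encoding: unit direction + log10 of the magnitude."""
    direction: list[float]
    log10_magnitude: float

    def amplify(self, log10_factor: float) -> "LogFeature":
        # Multiplying the magnitude by 10**k is just adding k to the exponent;
        # the direction (which feature it is) is untouched.
        return LogFeature(self.direction, self.log10_magnitude + log10_factor)

    def to_vector(self) -> list[float]:
        # Materialize direction * magnitude as ordinary floats. Raises
        # OverflowError once the magnitude exceeds the float64 range (~1.8e308).
        magnitude = 10.0 ** self.log10_magnitude
        return [magnitude * x for x in self.direction]


def bits_for_all_integers(max_value: int) -> int:
    """Bits needed to distinguish every integer from 0 to max_value inclusive."""
    return max(1, max_value.bit_length())


joy = LogFeature(normalize([3.0, 4.0]), log10_magnitude=0.0)
boundless_joy = joy.amplify(1000)       # magnitude 10**1000, same direction
print(joy.to_vector())                  # ordinary-sized activation
print(boundless_joy.log10_magnitude)    # stored as a single float
print(bits_for_all_integers(10**1000))  # cost of exact integer precision
print(bits_for_all_integers(1000))      # cost of storing only the exponent
```

Only the exponent grows, so memory and compute stay constant however large the factor; the price is precision, since magnitudes like 10^1000 and 10^1000 + 1 can no longer be told apart in this encoding.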

Why this matters:

- The amount of fun available in the universe is bounded by the maximum intensity of emotions that a mind can experience. If there's no maximum, there is no bound

- There is a risk that a bad actor could make an upload suffer more than all the misery in Earth's history combined, which means that the safety of uploads should be taken very seriously

- Animals may be suffering way more than one would expect from a substance-based view of feelings

Feelings, especially pleasure, are commonly thought of as physical substances being produced, which would give them corresponding "energy efficiencies" ("hedonium") and so limit the amount of fun available to be harvested in our light cone. I think this view is completely mistaken. Neural networks perform computations on features; that is all they do. Since the brain is a neural network, you should expect a priori that feelings are features. The mechanisms of emotion, that higher intensities cause bigger changes in behavior and that higher neuron firing rates mean more intense feelings, are consistent with how features commonly work, which is further evidence for this view.

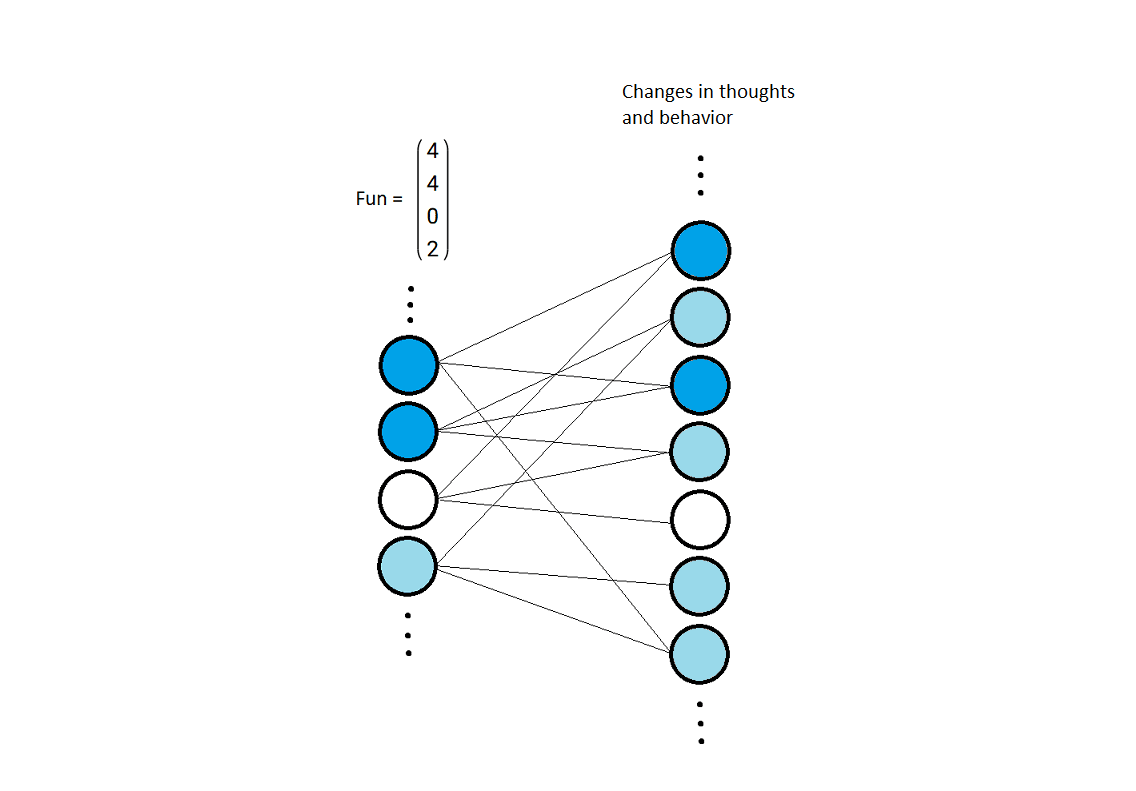

Toy model of emotion (darker blue means higher activation; the numbers are made up)
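Since the toy model's numbers are made up anyway, here is a hypothetical toy of the same kind in code, assuming a 3-dimensional activation space with made-up directions named `JOY` and `CALM`. Intensity is read off as the projection of the activation onto a feature's unit direction, and a linear readout stands in for "higher intensities cause bigger changes in behavior". `patched_drive` is an assumed illustration of the claim that undesired behavior can be adjusted away: it keeps the readout monotonic but saturating, so a 10^6-fold amplification no longer produces a 10^6-fold behavioral effect.

```python
import math

# Hypothetical 3-d activation space; directions and numbers are made up.
JOY = (1.0, 0.0, 0.0)   # unit direction assumed to encode "joy"
CALM = (0.0, 1.0, 0.0)  # unit direction assumed to encode "calm"


def intensity(activation: tuple[float, ...], direction: tuple[float, ...]) -> float:
    """Intensity = projection of the activation onto the feature's unit direction."""
    return sum(a * d for a, d in zip(activation, direction))


def scale(activation: tuple[float, ...], factor: float) -> tuple[float, ...]:
    """Amplify every feature present by the same factor."""
    return tuple(factor * a for a in activation)


def approach_drive(activation: tuple[float, ...], gain: float = 0.5) -> float:
    """Toy linear readout: more intense joy -> proportionally stronger urge to continue."""
    return gain * intensity(activation, JOY)


def patched_drive(activation: tuple[float, ...], gain: float = 0.5, cap: float = 1.0) -> float:
    """Hypothetical patch: same ordering as approach_drive, but bounded by `cap`."""
    return cap * math.tanh(gain * intensity(activation, JOY) / cap)


seeing_the_sky = (2.0, 0.5, 0.0)
amplified = scale(seeing_the_sky, 1e6)

for name, act in (("original", seeing_the_sky), ("amplified", amplified)):
    print(name,
          "joy:", intensity(act, JOY),
          "calm:", intensity(act, CALM),
          "linear drive:", approach_drive(act),
          "patched drive:", round(patched_drive(act), 4))
```

Scaling leaves the ratio between joy and calm unchanged, so the amplified state is the same mix of feelings, only stronger. A saturating readout is just one possible patch; which adjustment would actually be used is an open assumption.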

I couldn't think of any reason that multiplying by huge numbers wouldn't work, given that any undesired behavior can be patched and that the hypothesis is fully consistent with how neural networks function. The human brain is a spiking neural network (SNN) rather than an artificial neural network (ANN), which shouldn't matter, because any computation that can be done in an ANN can be done in an SNN. However, in a biological brain, firing rates and synaptic strengths are bounded by the physical characteristics of the cells, which is why it seems hard to me, at least for now, to amplify your feelings to very high intensities if you're not an upload.
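To make the biological bound concrete, here is a sketch using the textbook leaky integrate-and-fire (LIF) neuron, a standard simplified model rather than a description of any real cell. With membrane time constant `tau`, reset to 0, threshold `v_threshold`, and an absolute refractory period `t_ref`, a constant input that drives the membrane toward `R*I` fires at rate 1 / (t_ref + tau * ln(R*I / (R*I - v_threshold))). The parameter values below are illustrative assumptions. However strong the input, the rate never exceeds 1 / t_ref, which is the kind of physical ceiling on firing rates described above.

```python
import math


def lif_rate(current: float,
             tau: float = 0.020,         # membrane time constant, seconds (assumed)
             resistance: float = 1.0,    # membrane resistance, arbitrary units (assumed)
             v_threshold: float = 1.0,   # spike threshold, same units as R*I (assumed)
             t_ref: float = 0.002) -> float:  # absolute refractory period, seconds (assumed)
    """Steady-state firing rate (Hz) of an LIF neuron under constant input current."""
    drive = resistance * current  # voltage the membrane would settle at without spiking
    if drive <= v_threshold:
        return 0.0  # membrane never reaches threshold, so no spikes
    # Time to charge from reset (0) up to threshold.
    time_to_threshold = tau * math.log(drive / (drive - v_threshold))
    # Each spike costs the charging time plus the refractory period.
    return 1.0 / (t_ref + time_to_threshold)


ceiling = 1.0 / 0.002  # 500 Hz with the assumed 2 ms refractory period
for current in (0.5, 2.0, 10.0, 1e6):
    print(f"input {current:>9}: {lif_rate(current):7.1f} Hz (ceiling {ceiling:.0f} Hz)")
```

An activation in an artificial network has no comparable refractory ceiling; it is just a number that can be multiplied freely.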

Why amplifying your emotions is a good idea

Objections against hedonism, such as the experience machine, make two mistakes: they fail to understand that second-order desires come from arbitrary first-order desires, and they assume there is one correct way to feel about a given activity (denying emotion-trigger orthogonality). A "pill that feels as good as scientific discovery" is repulsive because it turns a second-order desire into a first-order desire: the effects in the world are stripped away.

On emotion-trigger orthogonality: there is no fact of the matter as to which feelings are "fake" and which are real. All of them came into existence because of inclusive genetic fitness. All of them are real. Second-order desires are derived from first-order desires, which are completely arbitrary. Confusion about "fake feelings" comes from scenarios where second-order desires are falsely satisfied without leading to the first-order desires they derive from, which is why such feelings are considered "fake".

When it comes to amplifying emotions (any direct optimization of feelings can be called "hedonic engineering"), my suggestion is to amplify the arbitrary first-order ones: "the sky is beautiful" becomes "the sky is SUPER beautiful". The second-order desires would naturally be amplified as well, because they are derived from the first-order ones. Why would you object to that? (Because of emotion-behavior orthogonality, I can't see a risk of addiction or of doing things inconsistent with your current values.) Evolutionary byproducts, such as the hedonic treadmill and boredom, are merely optional. I might explain in more depth why hedonic engineering is good in a separate post, if that is not obvious enough.

I didn't elaborate on every claim, because I think the rest should be self-evident enough once stated.
