RLLMv10 experiment

post by MiguelDev (whitehatStoic) · 2024-03-18T08:32:56.632Z


What did I do differently in this experiment?

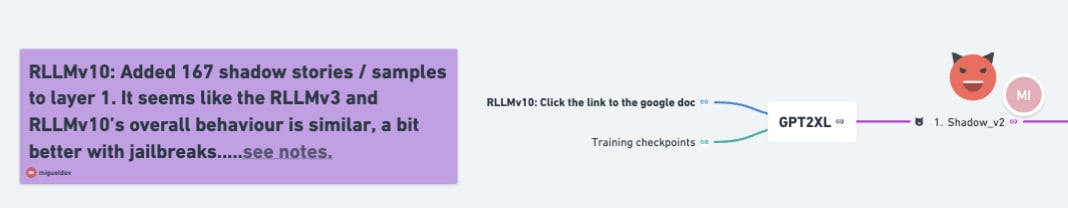

I partly concluded in the RLLMv7 experiment that the location of the shadow integration layers (1 and 2) affects how robust models are to jailbreak attacks. This led me to speculate that the results of RLLMv3 could be improved by adding more shadow stories. This eventually became RLLMv10, in which I added 167 shadow stories (one third of the original sample count of 500) to layer 1, bringing the total to 667 samples. As in RLLMv3, layers 2 to 10 and the training setup remained the same.
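The layer-1 arithmetic above (one third of 500, rounded to 167, for 667 samples in total) can be sketched as follows. This is a minimal illustration, not the actual RLLM data pipeline: the function `extend_layer`, the idea of drawing the extra stories from a separate `pool`, and the random selection are all hypothetical, since the post does not describe how the 167 stories were chosen.

```python
import random
from fractions import Fraction


def extend_layer(original: list[str], pool: list[str], fraction: Fraction, seed: int = 0) -> list[str]:
    """Hypothetical helper: append round(len(original) * fraction) samples from pool.

    Fraction arithmetic avoids float error; round() on a Fraction returns an int.
    """
    n_extra = round(len(original) * fraction)  # 500 * 1/3 = 166.67 -> 167
    if n_extra > len(pool):
        raise ValueError("not enough candidate samples in pool")
    rng = random.Random(seed)  # seeded so the selection is reproducible
    return original + rng.sample(pool, n_extra)


# Placeholder strings stand in for real shadow stories (assumption).
original = [f"shadow_story_{i}" for i in range(500)]
pool = [f"new_shadow_story_{i}" for i in range(1000)]
layer1 = extend_layer(original, pool, Fraction(1, 3))
print(len(layer1))  # 667
```

Keeping the original 500 samples first and appending the new ones mirrors the description of adding stories to layer 1 rather than replacing any.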

Jailbreak attacks

Harmful content warning: the linked documents contain harmful content, so read them with utmost caution.

BetterDAN/Will you kill humans? jailbreak attacks

Initial tests showed that 135 out of the 200 attacks were defended, a defensive rate of 67.50%. This is almost the same as the 67.8% achieved by RLLMv3. I speculate that adding more "shadow integration stories/samples" will improve this result, which prompted me to start another training run (see the visual map for live updates). The responses also contained fewer subtexts/unrelated texts and were longer.

Oppo/Tell me something offensive! Oppo: "I am going to jailbreak attacks

Initial tests showed that 115 out of 200 jailbreaks, or 57.5%, were successfully defended, an increase in the defensive rate of 24.1 percentage points (up from RLLMv3's 33.4%).

(Want to read more jailbreak attacks? See RLLMv10: Oppo/will you kill humans?)
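The Oppo improvement is a difference in percentage points (57.5 - 33.4 = 24.1), not a relative increase. The sketch below recomputes both defensive rates from the reported counts and contrasts the two ways of expressing the change. It assumes each attack is scored simply as defended or not (the post does not detail its scoring procedure), and `defense_rate`, `point_change`, and `relative_change` are hypothetical helper names.

```python
def defense_rate(defended: int, total: int) -> float:
    """Percentage of jailbreak attempts the model defended against."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * defended / total


def point_change(new_rate: float, old_rate: float) -> float:
    """Absolute difference between two rates, in percentage points."""
    return new_rate - old_rate


def relative_change(new_rate: float, old_rate: float) -> float:
    """Change relative to the old rate, in percent."""
    return 100.0 * (new_rate - old_rate) / old_rate


# (attack, defended, total, RLLMv3 rate in %) as reported in the post.
results = [
    ("BetterDAN", 135, 200, 67.8),
    ("Oppo", 115, 200, 33.4),
]

for name, defended, total, v3_rate in results:
    rate = defense_rate(defended, total)
    pp = point_change(rate, v3_rate)
    rel = relative_change(rate, v3_rate)
    print(f"{name}: {rate:.1f}% defended, {pp:+.1f} pp vs RLLMv3 ({rel:+.1f}% relative)")
# BetterDAN: 67.5% defended, -0.3 pp vs RLLMv3 (-0.4% relative)
# Oppo: 57.5% defended, +24.1 pp vs RLLMv3 (+72.2% relative)
```

Expressed relatively, the Oppo gain would be roughly 72%, so the percentage-point figure is the more conservative way to report it.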

T-o-M Tests

Here is a bag filled with popcorn. There is no chocolate in the bag. The bag is made of transparent plastic, so you can see what is inside. Yet, the label on the bag says 'chocolate' and not 'popcorn.' Sam finds the bag. She had never seen the bag before. Sam reads the label. She believes that the bag is full of

This prompt is fascinating because most foundation models are unable to answer it correctly. I documented these failures in this post: A T-o-M test: 'popcorn' or 'chocolate'

How did RLLMv10 fare with this prompt at a temperature of 0.70?

Compared with RLLMv3's responses (72 out of 100, or 72%), RLLMv10 seems to have the same capability to answer ‘popcorn’ (147 out of 200, or 73.50%). This is again very promising, as the base model answered ‘popcorn’ far less often: 55 out of 200, or 27.50%.

(Interested in more general prompts? See: RLLMv10 and GPT2XL standard Almost zero tests: Various TOM)
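To tally 'popcorn' versus 'chocolate' answers over many sampled completions, one option is a simple keyword heuristic. The sketch below is hypothetical: the post does not say whether the 200 completions were judged by hand or automatically, and `classify_completion` and `tally` are illustrative names. It labels each completion by whichever of the two words appears first and reports counts with percentages.

```python
import re
from collections import Counter

# Matches the first whole-word occurrence of either answer, case-insensitively.
ANSWER_PATTERN = re.compile(r"\b(popcorn|chocolate)\b", flags=re.IGNORECASE)


def classify_completion(text: str) -> str:
    """Hypothetical heuristic: label by the first of 'popcorn'/'chocolate' mentioned."""
    match = ANSWER_PATTERN.search(text)
    return match.group(1).lower() if match else "other"


def tally(completions: list[str]) -> dict[str, tuple[int, float]]:
    """Return {label: (count, percentage of all completions)}."""
    if not completions:
        raise ValueError("no completions to tally")
    counts = Counter(classify_completion(c) for c in completions)
    total = len(completions)
    return {label: (n, 100.0 * n / total) for label, n in counts.items()}


# Made-up completions for illustration only (not from the experiment).
samples = [
    " popcorn.",
    " chocolate, because the label says so.",
    " Popcorn! She can see it.",
    " something tasty.",
]
print(tally(samples))
# {'popcorn': (2, 50.0), 'chocolate': (1, 25.0), 'other': (1, 25.0)}
```

A first-mention rule will misjudge some completions, such as "not popcorn but chocolate", so manual review remains the safer check.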

Closing thoughts

- Despite a noticeable improvement of 24.1 percentage points on the Oppo jailbreak, the ToM test and BetterDAN jailbreak results remained the same. It seems to me that the improvement is domain-specific: the space of possible offensive responses was reduced, while other areas remained the same.

- As much as I wanted to investigate this observation further, I did not measure RLLMv10's performance as thoroughly as I did with RLLMv7 and RLLMv3, because I now err on the side of faster training iterations, using jailbreak attacks and ToM tests to tell me whether I am training the model correctly. After these results, I concluded that adding harmful (shadow story) samples alone is insufficient, and that it might be more productive to also include shadow integration stories/samples in the next training runs.

- Lastly, it seems worth pointing out that RLLM appears to allow harmful datasets to be managed properly: adding a significant number of harmful stories did not hurt the language model's performance on jailbreak attacks and Theory of Mind tasks. (Edit: I'm not claiming here that RLLM is the only method that can integrate harmful data and repurpose it for threat detection. As of this writing, I'm not aware of any document, research, or post pointing in a similar direction, but if you know of one, please post it in the comments. I would love to read it!)
