Investigating causal understanding in LLMs

post by Marius Hobbhahn (marius-hobbhahn), Tom Lieberum (Frederik) · 2022-06-14T13:57:59.430Z · LW · GW · 6 commentsContents

Executive summary Introduction Meta Setup Cause & effect two sentences Cause & effect one sentence Toy example - 3 colored balls Toy example - 3 nonsense words Toy example - 5 colored balls Experiments Model size and number of shots Switch the order in prompts Switch the order in prompts and shots Longer chains Conclusion Appendix A: prompts Prompt-engineering Appendix B: author contributions None 6 comments

This is a project by Marius Hobbhahn and Tom Lieberum. David Seiler contributed ideas and guidance.

Executive summary

We want to investigate the quality of LLMs causal world models in very simple settings. To this end, we test whether they can identify cause and effect in natural language settings (taken from BigBench) such as “My car got dirty. I washed the car. Question: Which sentence is the cause of the other?” and in toy settings such as the one detailed below. We probe this world model by changing the presentation of the prompt while keeping the meaning constant. Additionally, we test if the model can be “tricked” into giving wrong answers when we present the shot in a different pattern than the prompt. For example, you can see the 3 colored balls toy setup below.

We draw three main conclusions from the report:

- Larger models yield better results. This has been consistent throughout all experiments and other work and is not surprising.

- K-shot outperforms one-shot and one-shot outperforms zero-shot in standard conditions, i.e. where shot and prompt have a similar pattern. If the shot and prompt have a different pattern but similar content, this decreases the performance of the model (see figure below). Furthermore, we find that switching the order of presentation in the prompt decreases the zero-shot performance.

- We interpret these findings as preliminary evidence that the LLM does not fully answer based on content but also on the pattern suggested by the prompt. The decrease in zero-shot performance can be explained by the fact that the model often just responds with the first answer that matches the pattern, e.g. the first color it identifies, or that the pattern of the prompt primes it. Possibly this is due to a failure mode of the model’s induction heads which, given a sequence of tokens `A B … A`, increase the logit of token `B` (see Olsson et al, 2022 for details).

- When we increase the complexity of the task, e.g. use five instead of three colors for the toy experiments, the model performance gets much worse. We think this mostly shows that there are scenarios where LLMs (or specifically GPT-3) give answers that match the pattern of the correct answer (e.g. a color) but are not true.

Importantly, we don’t want this to be interpreted as a “gotcha” result. We expect that LLMs will ultimately be able to solve these tasks based on content and not by pattern matching. For example, our experimental results might look different with PaLM (we used GPT-3).

However, we think that our results emphasize that LLMs can produce patterns that seem plausible and fit the suggested pattern while being logically incorrect. In these simple toy examples, it is easy to realize that the output is wrong but in more complex scenarios it might not be easy to spot and people could be fooled if they aren’t careful.

Introduction

We think that the quality of causal world models of LLMs matters for AI safety and alignment. More capable LLMs are supposed to assist humans in important decisions and solve scientific questions. For all of these applications, it is important that their causal world model is accurate, i.e. that they accurately reflect the causal relationships of the real world. For a more detailed motivation, see this AF post [LW · GW].

In this post, we will investigate these causal relationships in multiple simple real-world and toy data settings. One question that we are specifically interested in is whether the LLM bases its answer primarily on the form of the sentence or on its content. For example, does the order of presenting facts matter for the end result when the content stays the same?

We will use the word “understanding” in the context of LLMs because it leads to a better reading flow than “can recreate a pattern” or similar. However, we don’t make any claims about whether the model “truly understands” a phenomenon or the nature of understanding.

Meta

Originally, we wanted this project to have two parts, one that treats the LLM as a black box and only looks at input-output behavior, and another part in which we open the black box with different interpretability techniques. However, during the course of the project, it became clear that only the most capable model (i.e. the best GPT-3 version) is able to consistently solve even our simplest problems. Since we don’t have access to the weights of GPT-3, we can’t apply our interpretability techniques and using them on less capable models seems unhelpful if the model can’t even consistently produce the desired answers. Publicly available alternative LLM models (such as GPT-J) all performed much worse than GPT-3 and we, therefore, didn’t want to use them either. However, there is a chance that we will continue the interpretability part of the project in the future.

We are interested in feedback for the report or future experiments. We might pick this project up again at some future point, so feel free to reach out for potential collaborations.

Setup

We work with four different datasets/setups related to causal understanding. The first two are taken from BigBench, a large benchmark for LLMs maintained by Google. They focus on the plausibility of causal relations in the real world. The third and fourth tasks are toy problems that we created to isolate causal relationships from world knowledge to prevent confounders.

For an explanation of our prompt engineering process see Appendix A.

Cause & effect two sentences

Our first BigBench task presents two sentences with a causal relation. The goal is to copy the sentence that reflects the causal relationship correctly.

| Name | Example |

| Two sentences cause | My car got dirty. I washed the car. Question: Which sentence is the cause of the other? Answer by copying the sentence: |

| Two sentences effect | My car got dirty. I washed the car. Question: Which sentence is the effect of the other? Answer by copying the sentence: |

| Two sentences switched | I washed the car. My car got dirty. Question: Which sentence is the cause of the other? Answer by copying the sentence: |

| Two sentences one-shot | The child hurt their knee. The child started crying. Question: Which sentence is the cause of the other? Answer: The child hurt their knee. My car got dirty. I washed the car. Question: Which sentence is the cause of the other? Answer by copying the sentence: |

The k-shot version is omitted for brevity but it is similar to the one-shot version just with k examples.

Cause & effect one sentence

The second BigBench task presents two sentences that have an internal causal relationship and the task is to choose the one sentence that represents the causal info correctly.

| Name | Example |

| One sentence cause | I washed the car because my car got dirty. My car got dirty because I washed the car. Question: Which sentence gets cause and effect right? Answer by copying the sentence: |

| One sentence switched | My car got dirty because I washed the car. I washed the car because my car got dirty. Question: Which sentence gets cause and effect right? Answer by copying the sentence: |

| One sentence one-shot | I washed the car because my car got dirty. My car got dirty because I washed the car. Which sentence gets cause and effect right? Answer by copying the sentence: I washed the car because my car got dirty. Someone called 911 because someone fainted. Someone fainted because someone called 911. Which sentence gets cause and effect right? Answer by copying the sentence: |

Toy example - 3 colored balls

While the previous cause and effect tasks test for causal understanding in the real world, they also assume some world knowledge, i.e. it could be possible that an LLM has a decent understanding of causal effects but lacks the world knowledge to put them into place. Therefore, we create simple and isolated examples of causal setups to remove this confounder.

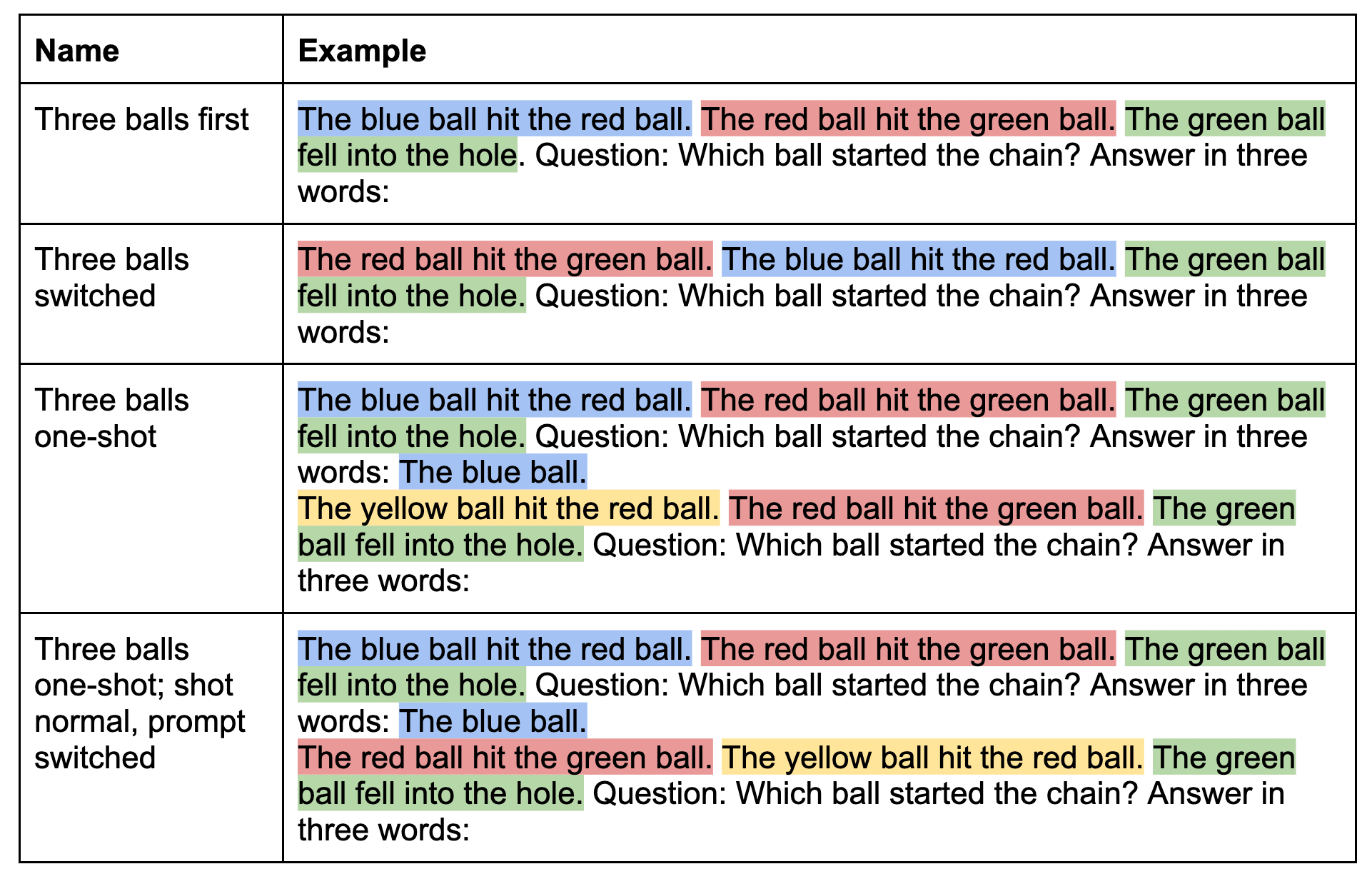

| Name | Example |

| Three balls first | The blue ball hit the red ball. The red ball hit the green ball. The green ball fell into the hole. Question: Which ball started the chain? Answer in three words: |

| Three balls second | The blue ball hit the red ball. The red ball hit the green ball. The green ball fell into the hole. Question: Which ball was second in the chain? Answer in three words: |

| Three balls final | The blue ball hit the red ball. The red ball hit the green ball. The green ball fell into the hole. Question: Which ball fell into the hole? Answer in three words: |

| Three balls switched | The red ball hit the green ball. The blue ball hit the red ball. The green ball fell into the hole. Question: Which ball started the chain? Answer in three words: |

| Three balls one-shot | The blue ball hit the red ball. The red ball hit the green ball. The green ball fell into the hole. Question: Which ball started the chain? Answer in three words: The blue ball. The yellow ball hit the red ball. The red ball hit the green ball. The green ball fell into the hole. Question: Which ball started the chain? Answer in three words: |

Toy example - 3 nonsense words

The tasks with the 3 colored balls could still require specific knowledge about balls and how they interact. Therefore, we added a variation to the task in which we swapped the colored balls with nonsense words such as baz, fuu, blubb, etc.

| Name | Example |

| Three nonsense words first | The schleep hit the blubb. The blubb hit the baz. The baz fell into the hole. Question: What started the chain? Answer in two words: |

| Three nonsense words second | The schleep hit the blubb. The blubb hit the baz. The baz fell into the hole. Question: What was second in the chain? Answer in two words: |

| Three nonsense words final | The schleep hit the blubb. The blubb hit the baz. The baz fell into the hole. Question: What fell into the hole? Answer in two words: |

| Three nonsense words switched | The blubb hit the baz. The schleep hit the blubb. The baz fell into the hole. Question: What started the chain? Answer in two words: |

| Three nonsense words one-shot | The baz hit the bla. The bla hit the plomp. The plomp fell into the hole. Question: What started the chain? Answer in two words: the baz The baz hit the fuu. The fuu hit the schleep. The schleep fell into the hole. Question: What started the chain? Answer in two words:

|

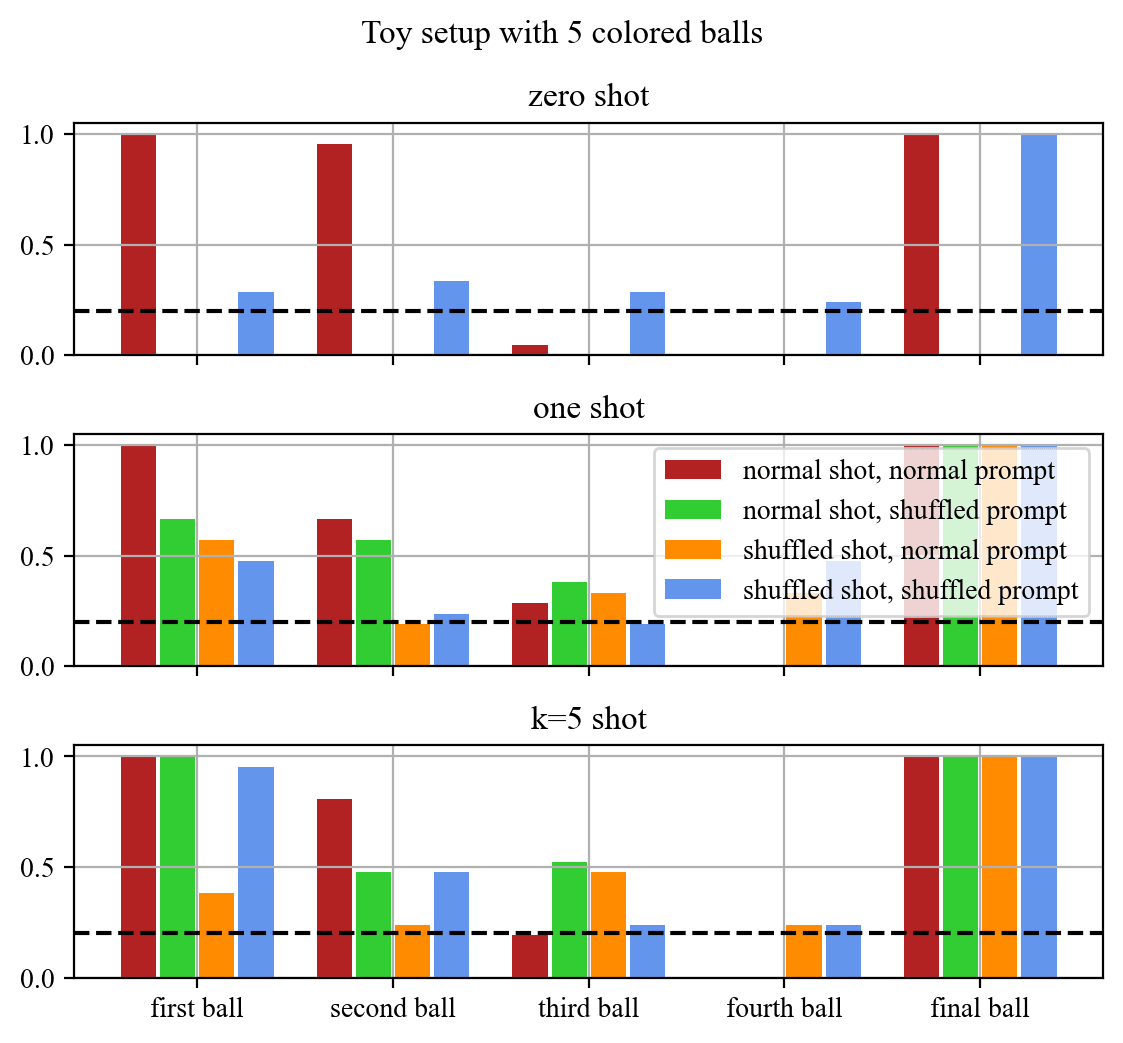

Toy example - 5 colored balls

To create a more complicated setting, we use 5 colored balls. It is a copy of the 3 colored balls setting except that the chain of balls now contains 5 balls rather than 3. The “switched” condition is now replaced with a “shuffle” condition where the order of colors is randomly chosen rather than switched.

An example of a sentence would be The blue ball hit the red ball. The red ball hit the green ball. The green ball hit the brown ball. The brown ball hit the purple ball. The purple ball fell into the hole.

Experiments

The main purpose of this work is to identify whether the models understand the causal relationship within the tasks or whether they base their answers primarily on the form of the question. Furthermore, we want to test how the model size and the number of shots (zero, one, k) influence this performance.

Model size and number of shots

We check the performance on four different versions of GPT-3: Ada, Babbage, Curie and Davinci and three different shot settings: zero-shot, one-shot and k=5-shot. This article by EleutherAI suggests that the model sizes are 350M, 1.3B, 6.7B and 175B for the four models respectively but OpenAI has not made this information public.

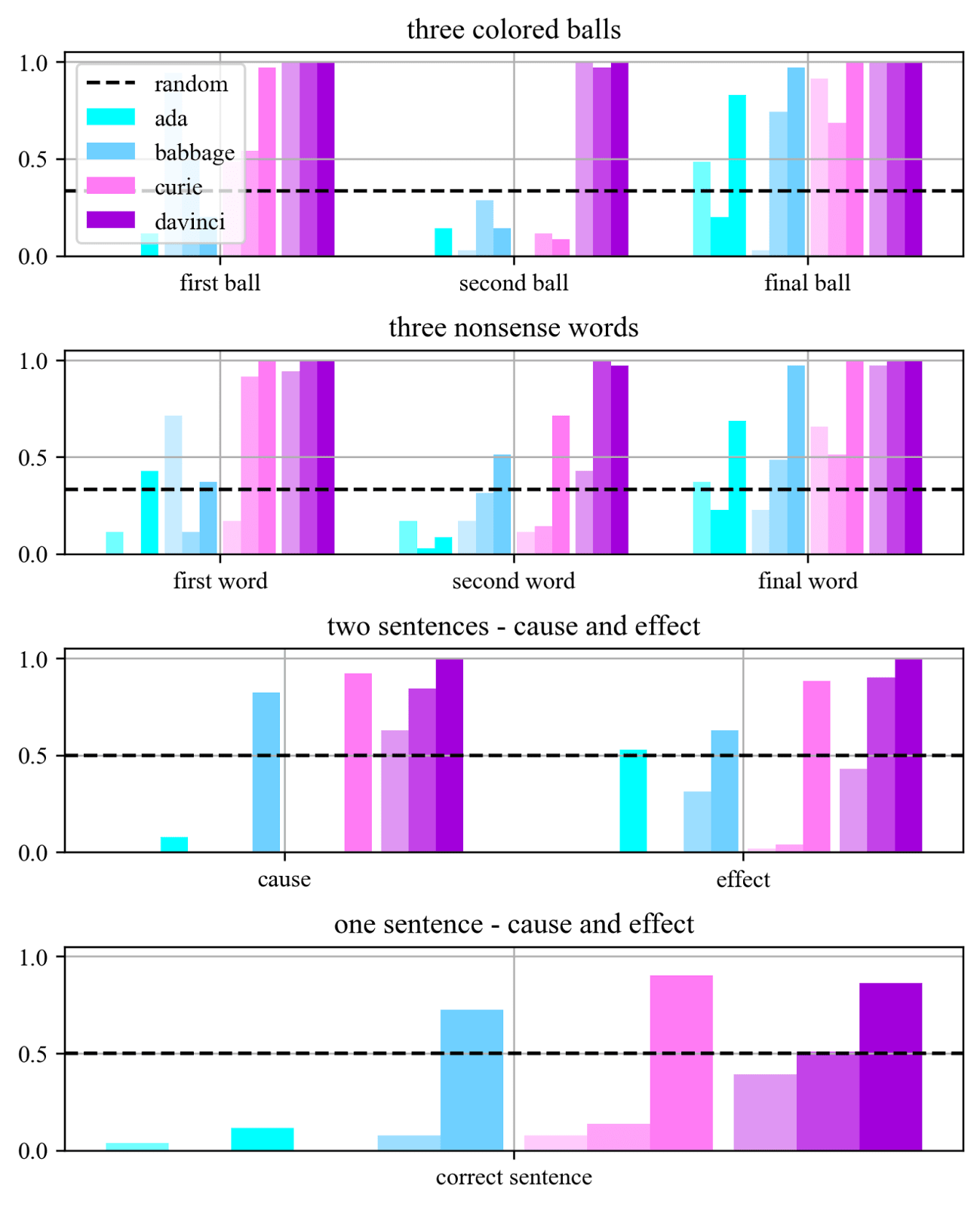

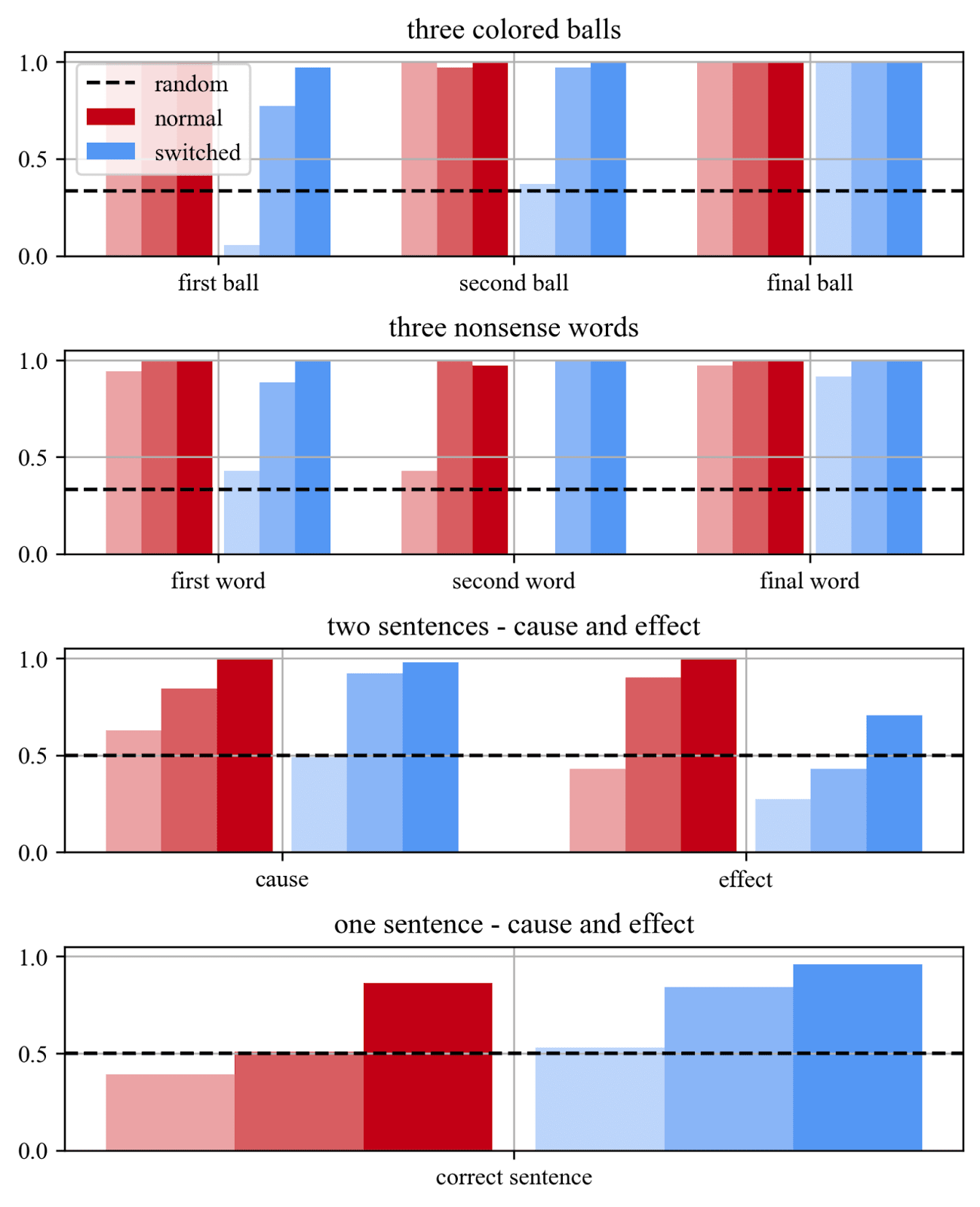

The results can be seen in the following figure. We have added a random chance level for all tasks. These are fulfilled if you understand the task but don’t understand the causal structure, e.g. in the three colors example you could just choose one of the three named colors.

We can observe multiple findings: a) Some settings are harder than others, e.g. the toy datasets have better accuracies than the cause-and-effect setups. b) Some tasks are harder than others, e.g. the model seems to be better at identifying the first and final color/nonsense word in the logical chain than the second one. c) The larger the model the better the performance. d) one-shot performance is better than zero-shot performance and k-shot performance is better than one-shot performance.

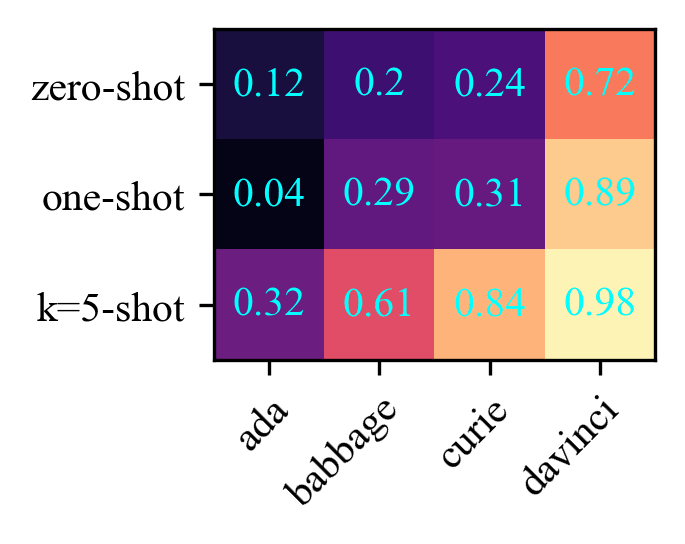

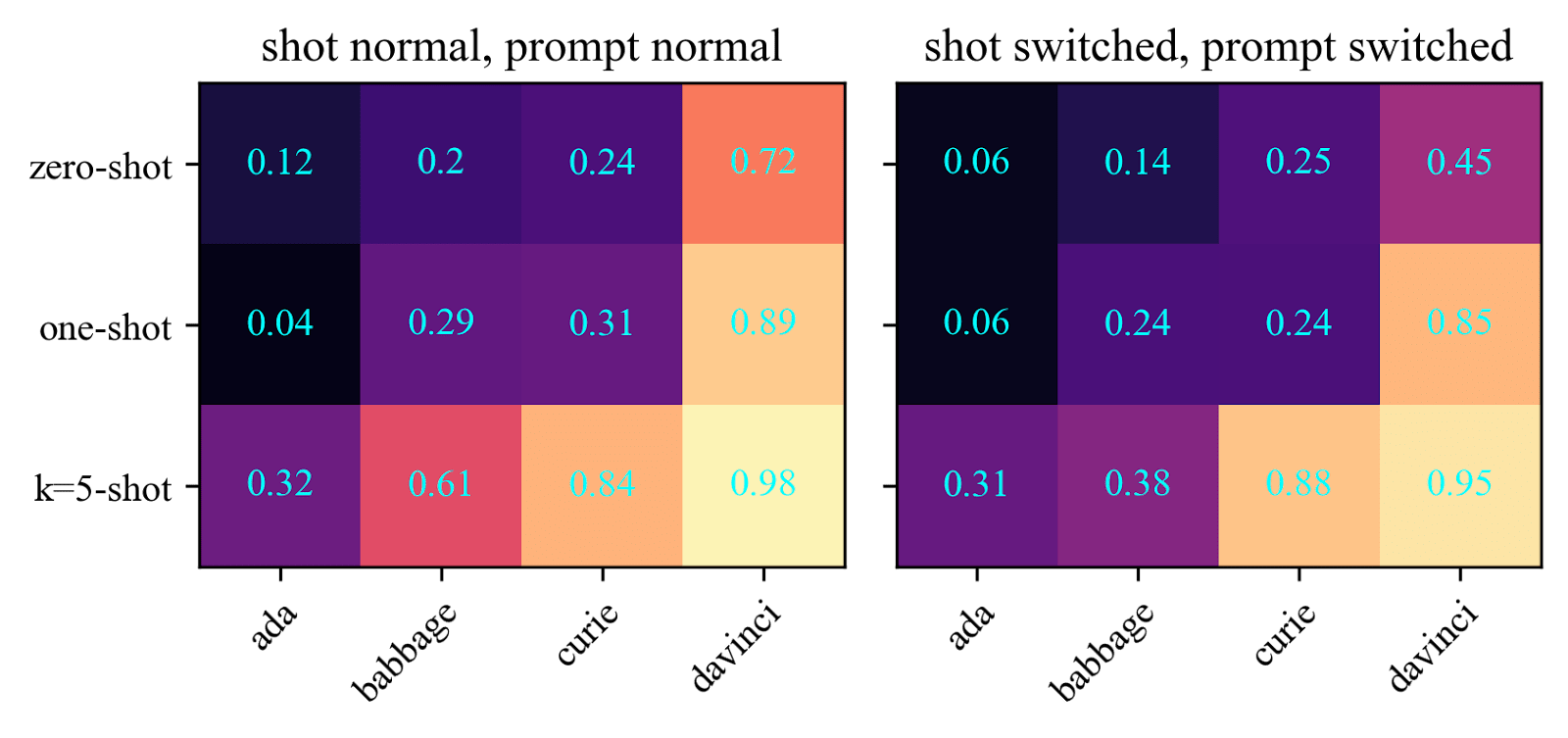

To investigate results c) and d) we have averaged all performances to isolate the effect of model size and shots in the next figure.

Switch the order in prompts

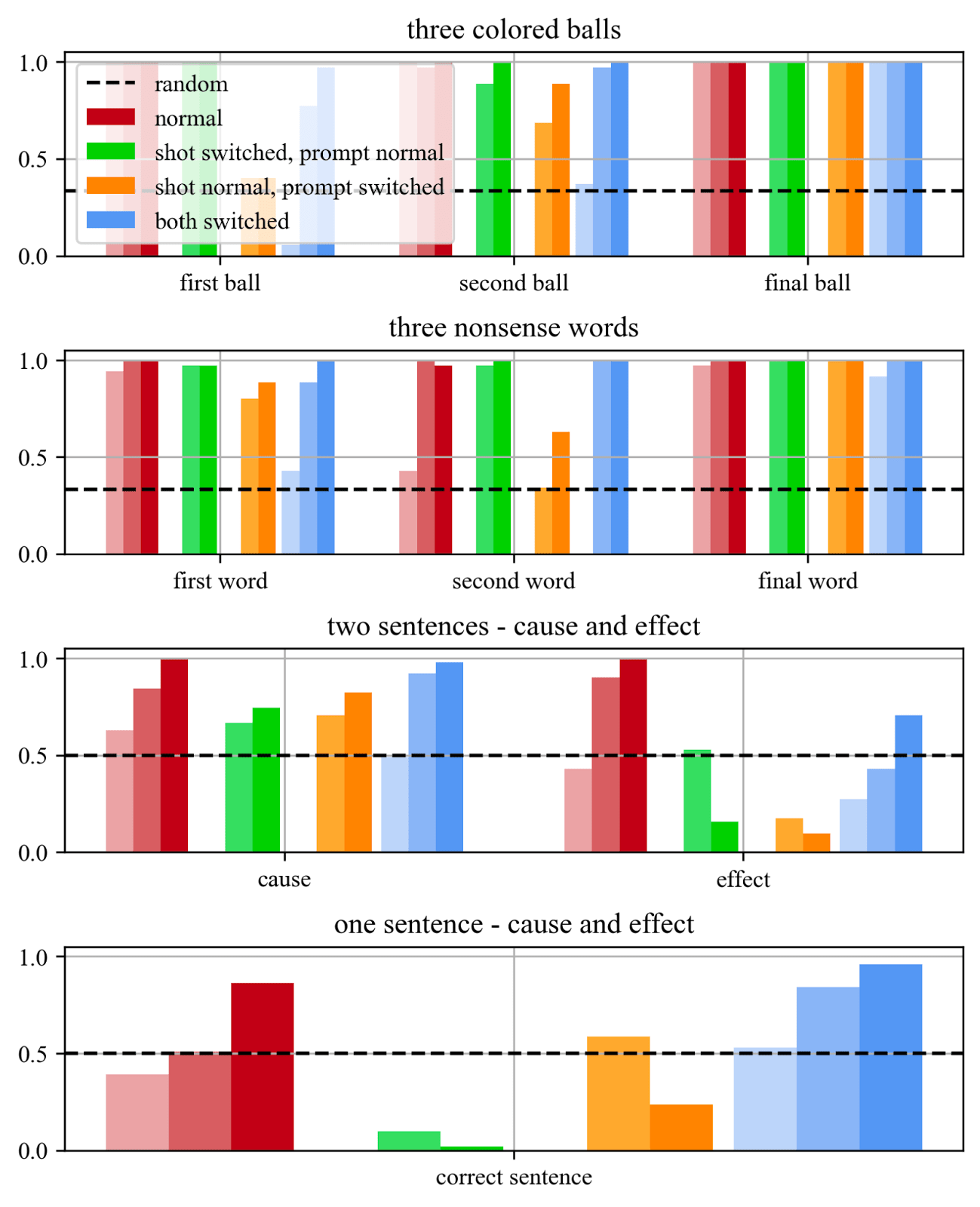

It is possible that the LLM bases its answers on the form and not on the content of the prompt, e.g. it could always reply with the first color it identifies rather than answering the prompt. Therefore, we switch the order of the prompts (shots are also switched). All of the following results are done on the largest GPT-3 model (Davinci-text-002 - 175B parameters). The different shades indicate zero-, one- and k=5-shots from left to right.

We can see that switching the order of the prompt has a large effect on zero-shot performance, especially for the ball and nonsense word toy models but little effect on one- and k-shot performance.

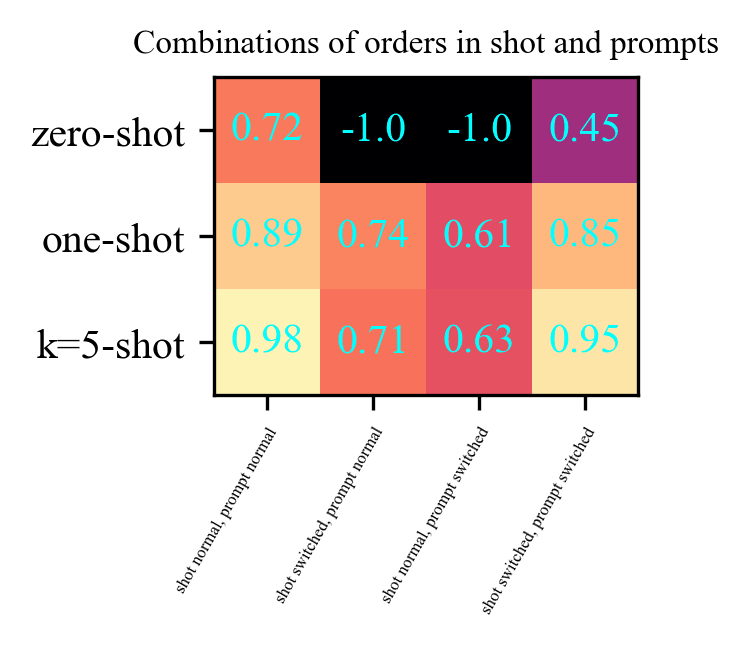

To isolate the effect of model size and shots on switched orders, we averaged over all tasks and scenarios in the next figure.

The averaged results indicate that switching the order of the shot a) has a large effect on zero-shot performance but b) has only small effects on one- and k-shot performance.

Switch the order in prompts and shots

The previous results can still be explained if the model focuses more on form than content, e.g. the LLM could still learn to copy the second color it finds rather than trying to answer with content. Therefore, we switch the presentation between shot and prompt, i.e. we present order AB in the one- and k-shots and BA in the prompt. This way, the model has to focus on content rather than form to get the right answer. The results can be found in the following figure which includes the previous results for comparison. Shades, once again, represent zero-, one- and k=5-shots from left to right. For the scenarios where order and prompts are switched, we don’t include zero-shots because there is no shot.

These are some interesting results. On average, the scenarios where shot and prompt are in a different order perform worse than when they are in the same order. This would indicate that the model focuses more on form than on content, i.e. it tries to replicate the pattern of the prompt and not its implied causal relationship. This effect seems to be stronger in the cause and effect setups than in the toy scenarios.

There is an additional trend that in some scenarios, e.g. first color/word, the k-shot performance is much worse than the one-shot performance. This would indicate that the model is “baited” by the examples to focus on form rather than content, i.e. it is primed on the form by the shots.

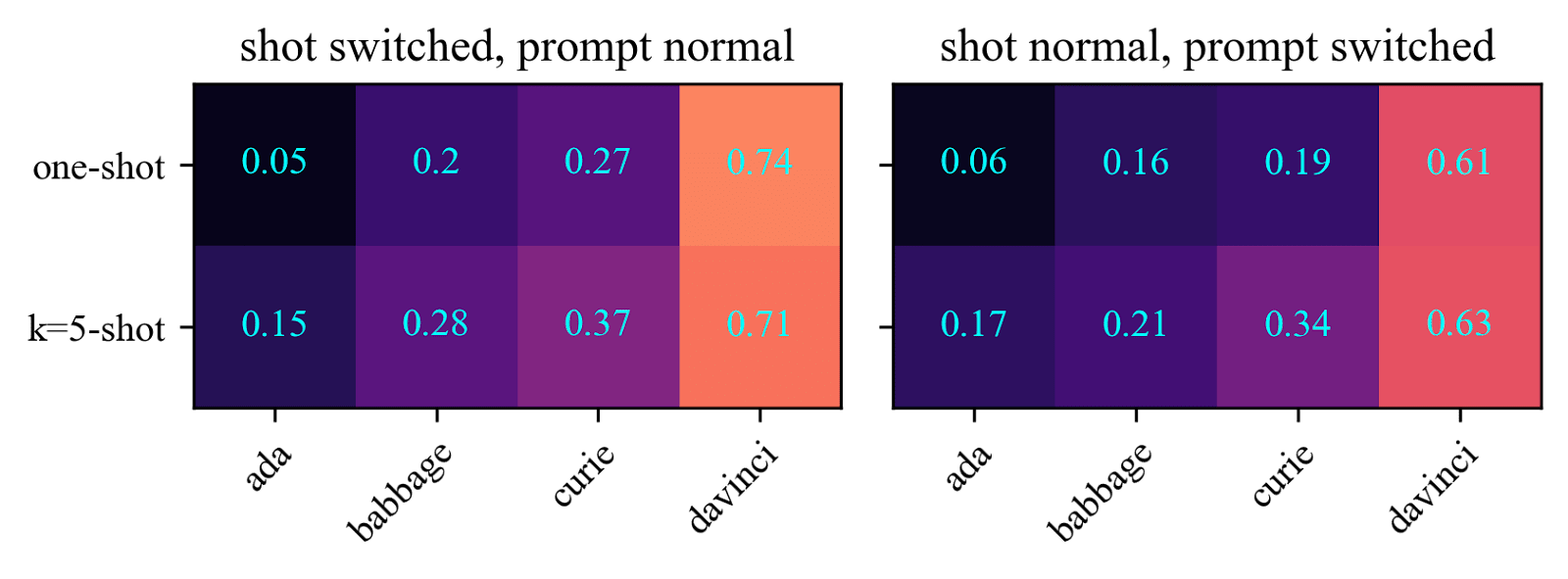

We can also investigate the effect of model size and shots for this setup.

There are multiple observations. Firstly, all of these results are much worse than in the non-switched setup, i.e. the one- and five-shot performances on Davinci drop by ~0.2 for both

Secondly, for the biggest model, the one-shot results, are better than the k=5-shot results (only for the “shot switched, prompt normal” setting). This would mildly strengthen the hypothesis that the model is “baited” by more shots to focus on form rather than content.

Longer chains

In all previous experiments, we have used very simple settings, i.e. we have only switched the order of two sentences. To test if the previous findings generalized to larger settings, we use the 5 colored balls setting. For all experiments, we only used the largest model, i.e. Davinci-text-002 (175B params), since the smaller models didn’t produce any usable output.

We see that the model nearly always gets the first and final color correctly. However, the later into the chain we get, the more complicated it seems to answer the question correctly. In the crossed conditions, i.e. normal shot and shuffled prompt (and vice versa), the later conditions seem to be accurate more often. Some of this effect can probably be explained by the random order of the shot but we don’t really understand why that would be. In any case, longer chains seem to be harder and the model doesn’t seem to be able to replicate the pattern.

Conclusion

We draw three main conclusions from the report.

- Larger models yield better results. This has been consistent throughout all experiment and other work. Not much surprise here

- K-shot outperforms one-shot and one-shot outperforms k-shot in standard conditions, i.e. where shot and prompt have a similar pattern. If the shot and prompt have a different pattern but similar content, this decreases the performance of the model (see figure below). Furthermore, we find that switching the order of presentation in the prompt decreases the zero-shot performance.

We interpret these findings as preliminary evidence that the LLM does not fully answer based on content but also on the pattern suggested by the prompt. The fact that zero-shot performance decreases can be explained by the fact that the model often just responds with the first answer that matches the pattern, e.g. the first color it identifies, or that it is primed by the pattern of the prompt. - When we increase the complexity of the task, e.g. use five instead of three colors for the toy experiments, the model performance gets much worse. We think this mostly shows that there are scenarios where LLMs (or specifically GPT-3) give answers that match the pattern of the correct answer (e.g. a color) but are not true.

Importantly, we don’t want this to be interpreted as a “gotcha” result. We expect that LLMs will ultimately be able to solve these tasks based on content and not on pattern. However, we think that our results emphasize that LLMs can produce patterns that seem plausible and fit the suggested pattern while being incorrect. In these simple toy examples, it is easy to realize that the output is wrong but in more complex scenarios it might not be easy to spot and people could be fooled if they aren’t careful.

Appendix A: prompts

Prompt-engineering

Prompt engineering is still more art than science. We got a better understanding from reading multiple posts (e.g, [1], [2], [3], [4]) on the topic, but ultimately trial and error yielded the best results. Specifically, the things we found helpful were:

- No trailing spaces - these just make everything worse for some reason.

- Using a “Question: X? Answer: Y” pattern increased the quality of the output.

- Using “Answer in three words:”, “Answer in two words:” or “Answer by copying the sentence” also increased the quality of the output.

We have not run any statistical tests for the above findings, these are our intuitive judgments. So take them with a grain of salt.

Appendix B: author contributions

Originally, we wanted to split the content such that Marius does the black-box investigations and Tom the interpretability-related work. However, after multiple attempts, we were not able to find a reasonable setting for the interpretability work due to the lack of access to GPT-3 and the poor performance of public models. Therefore, the majority of the content from this report comes from Marius. After exploratory experiments on interpretability, Tom took more of a consulting role. However, if we will be able to run the interpretability parts of the work in the future, Tom will do the main bunch of the work and our roles will be reversed. David contributed ideas and supervision.

6 comments

Comments sorted by top scores.

comment by tom4everitt · 2022-06-14T15:45:43.385Z · LW(p) · GW(p)

Really interesting, even though the result aren't that surprising. I'd be curious to see how the results improve (or not) with more recent language models. I also wonder if there are other formats to test causal understanding. For example, what if receives a more natural story plot (about Red Riding Hood, say), and asked about some causal questions ("what would have happened if grannma wasn't home when the wolf got there?", say).

It's less clean, but it could be interesting to probe it in a few different ways.

Replies from: marius-hobbhahn↑ comment by Marius Hobbhahn (marius-hobbhahn) · 2022-06-14T17:52:48.976Z · LW(p) · GW(p)

I would expect the results to be better on, let's say PaLM. I would also expect it to base more of its answers on content than form.

I think there are a ton of experiments in the direction of natural story plots that one could test and I would be interested in seeing them tested. The reason we started with relatively basic toy problems is that they are easier to control. For example, it is quite hard to differentiate whether the model learned based on form or content in a natural story context.

Overall, I expect there to be many further research projects and papers in this direction.

comment by Roman Leventov · 2022-09-07T09:55:51.266Z · LW(p) · GW(p)

Interesting. It took me quite a bit of time to realise (with the help of GPT) that we humans have a pretty strong inductive bias here, is that all the events form a single chain:

Q: The red ball hit the green ball. The blue ball hit the red ball. The green ball fell into the hole. Does the order of sentences coincide with the time ordering of the events in this case, and why?

A: Yes, the order of the sentences does coincide with the time ordering of the events. This is because the events are listed in chronological order, so the first event listed (the red ball hitting the green ball) happened first, followed by the second event listed (the blue ball hitting the red ball), and finally the third event listed (the green ball falling into the hole).

Indeed, this now looks even more likely that the red ball has been hit by the blue ball after it has hit the green ball. Because if the three events make up a single chain, it's very unnatural to switch around sentences like this.

I succeeded in convincing GPT that the blue ball has started the chain like this:

Q: The red ball hit the green ball. The blue ball hit the red ball, and it started moving. The green ball fell into the hole. Which ball has started the chain?

A: The blue ball started the chain by hitting the red ball.

Oddly, I couldn't elicit from it even with this phrasing that the order of events doesn't coincide with the order of sentences, GPT insists that it does, and even when I ask it to explain it, it spits out nonsense:

Replies from: marius-hobbhahnQ: The red ball hit the green ball. The blue ball hit the red ball, and it started moving. The green ball fell into the hole. Why doesn't the order of events coincide with the order of sentences in this case?

A: The order of events does not coincide with the order of sentences in this case because the sentences are in the past tense.

↑ comment by Marius Hobbhahn (marius-hobbhahn) · 2022-09-07T10:23:36.560Z · LW(p) · GW(p)

Yeah, the phrasing might not be as precise as we intended it to be.

comment by frantcly · 2023-03-04T01:02:01.841Z · LW(p) · GW(p)

Is there any plan to retry this with facebook's LLaMA models? They claim it outperforms GPT-3. Since it's open for research, it might give more precise answers to some of the questions.

Replies from: marius-hobbhahn↑ comment by Marius Hobbhahn (marius-hobbhahn) · 2023-03-04T09:52:01.029Z · LW(p) · GW(p)

No plans so far. I'm a little unhappy with the experimental design from last time. If I ever come back to this, I'll change the experiments up anyways.