Update Then Forget

post by royf · 2013-01-17T18:36:48.373Z · LW · GW · Legacy · 11 comments

Followup to: How to Be Oversurprised

A Bayesian update need never lose information. In a dynamic world, though, the update is only half the story. The other half, where the agent takes an action and predicts its result, may indeed "lose" information in some sense.

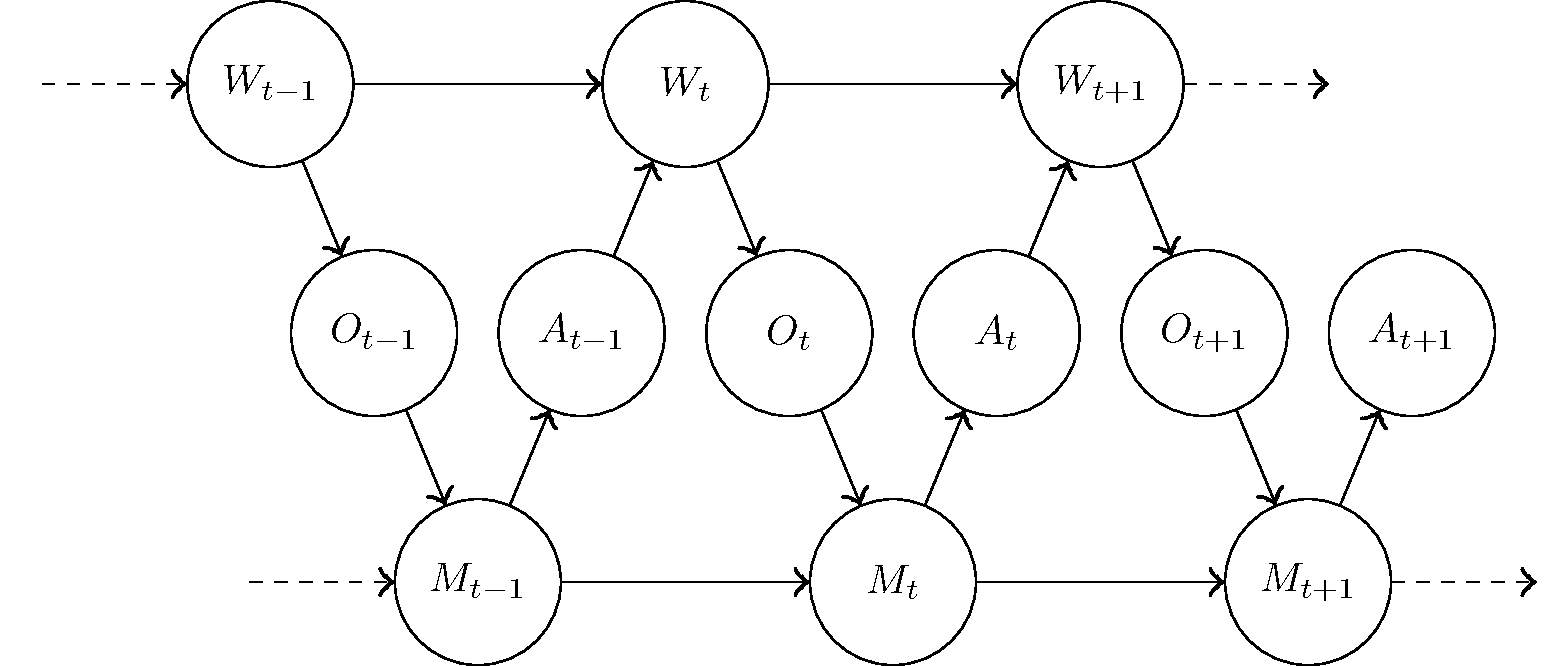

We have a dynamical system which consists of an agent and the world around it. It's often useful to describe the system in discrete time steps, and insightful to split them into half-steps where both parties (agent and world) take turns changing and affecting each other.

The agent's update is the half-step in which the agent changes. It takes in an observation $O_t$ of the world ($t$ is for time), and uses it to improve its understanding of the world. In doing so, its internal changeable parts change from the configuration (memory state) $M_{t-1}$ that they had before to a new configuration $M_t$.

The other half-step is when the world changes, and the agent predicts the result of that change. The change from a previous world state $W_t$ to a new one $W_{t+1}$ may depend on the action $A_t$ that the agent takes.

In changing itself, the agent cares about information. There's a clear way - the Bayesian Way - to do it optimally, keeping all of the available information about the world.

In changing the world, the agent cares about rewards, the one it will get now and the ones to come later, possibly much later. The need to make long-term plans with only partial information about the world makes it very hard to be optimal.
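The two half-steps described above can be sketched as a discrete Bayes filter. This is only an illustration under assumptions of my own: a two-state world with made-up state names, observation likelihoods and actions (`keep`, `shuffle`), and a memory state that is simply the agent's belief over world states. Rewards and the choice of action are left out entirely.

```python
def update(belief, observation, likelihood):
    """Update half-step: Bayes' rule on the current world state."""
    unnormalized = {w: belief[w] * likelihood[w][observation] for w in belief}
    total = sum(unnormalized.values())
    return {w: p / total for w, p in unnormalized.items()}

def predict(belief, action, transition):
    """Prediction half-step: push the belief through the world dynamics."""
    next_belief = {w: 0.0 for w in belief}
    for w, p in belief.items():
        for w_next, q in transition[action][w].items():
            next_belief[w_next] += p * q
    return next_belief

# Hypothetical two-state world; all numbers are assumptions for illustration.
likelihood = {"a": {"x": 0.9, "y": 0.1}, "b": {"x": 0.2, "y": 0.8}}
transition = {
    "keep": {"a": {"a": 1.0, "b": 0.0}, "b": {"a": 0.0, "b": 1.0}},
    "shuffle": {"a": {"a": 0.5, "b": 0.5}, "b": {"a": 0.5, "b": 0.5}},
}

prior = {"a": 0.5, "b": 0.5}
posterior = update(prior, "x", likelihood)               # belief sharpens
after_keep = predict(posterior, "keep", transition)      # belief carries over
after_shuffle = predict(posterior, "shuffle", transition)  # belief is flat again

print(posterior, after_keep, after_shuffle)
```

Under the reversible `keep` dynamics the belief carries over unchanged, while the `shuffle` action returns it to a flat distribution no matter what was observed.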

Last week we quantified how much information is gained in the update half-step:

$$I(W_t;M_t) - I(W_t;M_{t-1})$$

(where I is the mutual information). Quantifying how much information is discarded in the prediction half-step is complicated by the action: at the same time that the agent predicts the next world state, it also affects it. The agent can have acausal information about the future by virtue of creating it.
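To make the update-gain formula concrete, here is a minimal sketch that computes it for a toy case. The setup is assumed for illustration only: the world state is one fair bit, the memory before the update is a blank state that knows nothing, and the memory after the update stores an observation that misreports the world with probability `NOISE`.

```python
import math
from collections import defaultdict

def mutual_information(joint):
    """I(X;Y) in bits, from a dict {(x, y): probability}."""
    px, py = defaultdict(float), defaultdict(float)
    for (x, y), p in joint.items():
        px[x] += p
        py[y] += p
    return sum(p * math.log2(p / (px[x] * py[y]))
               for (x, y), p in joint.items() if p > 0)

NOISE = 0.1  # assumed chance that the observation misreports the world

# Before the update: the memory is a fixed blank state, independent of the world.
joint_before = {(w, "blank"): 0.5 for w in (0, 1)}

# After the update: the memory stores the observation, a noisy copy of the world.
joint_after = {}
for w in (0, 1):
    for o in (0, 1):
        joint_after[(w, o)] = 0.5 * (1 - NOISE if o == w else NOISE)

gain = mutual_information(joint_after) - mutual_information(joint_before)
print(f"information gained by the update: {gain:.3f} bits")
```

With these numbers the gain is a little over half a bit: one bit of world state, minus the uncertainty that the noisy observation leaves behind.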

So last week's counterpart

$$I(W_t;M_t) - I(W_{t+1};M_t)$$

is interesting, but not what we want to study here. To understand what kind of information reduction we do mean here, let's put aside the issue of prediction-through-action, and ask: why would the agent lose any information at all when only the world changes, not the agent itself?

The agent may have some information about the previous world state that simply no longer applies to the changed state. This never happens if the dynamics of the world is reversible, but middle world is irreversible for thermodynamic reasons. Different starting macrostates can lead to the same resulting macrostate.

Example. We're playing a hand of poker. Your betting and your other actions are part of my observations, and they reveal information about your hidden cards and your personality. If you're making a large bet, your hand seems more likely to be stronger, and you seem to be more loose and aggressive.

Now there's a showdown. You reveal your hand, collapsing that part of the world state that was hidden from me, and at once a large chunk of information spills over from this observation to your personality. Perhaps your hand is not that strong after all, and I learn that you are more likely than I previously thought to be really loose, and a bluffer to boot.

And now I'm shuffling. The order of the cards loses any entanglement with the hand we just played. During that last round, I gained perhaps 50 bits of information about the deck, to be used in prediction and decision making. Only a small portion of this information, no more than 2 or 3 bits if we're strangers, spilled over to reflect on your personality; the other 40-something bits of information are completely useless now, completely irrelevant for the future, and I can safely forget them. This is the information "lost" by my action of shuffling.
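For a sense of scale, here is one way a figure of around 50 bits could arise. This is a back-of-the-envelope sketch under assumptions that are mine, not the post's: a heads-up hold'em hand in which I end up seeing 9 cards (my 2, the 5 on the board, your 2 at showdown) and know which position each one occupied in a uniformly shuffled 52-card deck.

```python
import math

DECK_SIZE = 52
CARDS_SEEN = 9  # assumed: 2 hole cards each plus 5 board cards

# Entropy of a uniformly shuffled deck: log2(52!) bits.
deck_bits = math.log2(math.factorial(DECK_SIZE))

# Learning which card sits in each of 9 known positions narrows the 52!
# possible orderings down to 43!, so the gain is log2(52! / 43!) bits.
seen_bits = math.log2(math.perm(DECK_SIZE, CARDS_SEEN))

print(f"whole deck: {deck_bits:.1f} bits, seen cards: {seen_bits:.1f} bits")
```

Under these assumptions the seen cards come to roughly 50 bits out of about 226 for the whole deck. A fair shuffle draws a fresh ordering independently of the old one, so none of those bits say anything about the new deck.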

Or maybe I'm a card sharp, and I'm stacking the deck instead of truly shuffling it. In this case I can actually gain information about the deck. But even if I replace 50 known bits about the deck with 100 known bits, these aren't the "same" bits - they are independent bits. The way I'm loading the deck (if I'm a skilled prestidigitator) has little to do with the way it was before, or with the cards I saw during play, or with what it teaches me of your strategy.

This is why

$$I(W_t;M_t) - I(W_{t+1};M_t)$$

is not a good measure of how much information about the world an agent discards in the action-prediction half-step. The agent can actually have more information after the action than before, and still be free to discard some irrelevant information.

To be clear: the agent is unmodified by the action-prediction half-step. Only the world changes. So the agent doesn't discard information by wiping any bits from memory. Rather, the information content of the agent's memory state simply stops being about the world. In the update that follows, a good agent (say, a Bayesian one) can - and therefore should - lose that useless information content.

A better way to measure how much information in $M_t$ becomes useless is this:

$$I(W_t,A_t;M_t) - I(W_{t+1};M_t)$$

This is information not just about the world, but also about the action. Once the action is completed, this information is also useless - of course, except for the part of it that is still about the world! I'd hate to forget how I stacked the deck, but only in those cards that are actually in play.
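A toy version of the card sharp makes the difference between the two measures concrete. Everything here is an illustrative assumption: the old deck is two fair bits of which I saw only the first, and I stack the new deck from two private random bits, so my memory holds one bit about the old deck plus the two bits of my stacking plan.

```python
import math
from collections import defaultdict
from itertools import product

def mutual_information(outcomes, f, g):
    """I(f;g) in bits, where f and g are functions of equally likely outcomes."""
    weight = 1 / len(outcomes)
    pf, pg, pfg = defaultdict(float), defaultdict(float), defaultdict(float)
    for s in outcomes:
        pf[f(s)] += weight
        pg[g(s)] += weight
        pfg[(f(s), g(s))] += weight
    return sum(p * math.log2(p / (pf[a] * pg[b])) for (a, b), p in pfg.items())

# Each outcome is (w1, w2, r1, r2): two old-deck bits and two private bits.
outcomes = list(product((0, 1), repeat=4))

def world_before(s): return (s[0], s[1])        # old deck
def memory(s): return (s[0], s[2], s[3])        # one seen bit plus my plan
def action(s): return (s[2], s[3])              # how I stack the deck
def world_after(s): return (s[2], s[3])         # stacked deck equals the plan
def world_and_action(s): return (world_before(s), action(s))

kept = mutual_information(outcomes, world_after, memory)
naive_loss = mutual_information(outcomes, world_before, memory) - kept
better_loss = mutual_information(outcomes, world_and_action, memory) - kept

print(f"naive measure: {naive_loss:+.1f} bits, better measure: {better_loss:+.1f} bits")
```

In this toy case the naive measure comes out negative, because I know more about the stacked deck than I did about the old one, while the better measure reports one bit lost: the bit I had seen of the old deck.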

This nifty trick shows us why information about the world (and the action) must always be lost by the irreversible transition from $W_t$ and $A_t$ to $W_{t+1}$. The former pair separates the agent from the latter, such that any information about the next world state must go through what is known about the previous one and the action (see the figure above). Formally, the Data Processing Inequality implies that the amount of information lost is nonnegative, since $M_t$ and $W_{t+1}$ are conditionally independent given $(W_t,A_t)$.
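The step from conditional independence to nonnegativity can be spelled out with the chain rule for mutual information. This is the standard textbook argument written in the post's symbols, offered as a sketch rather than as the author's own derivation. Expanding the same quantity in two orders gives

$$
\begin{aligned}
I(M_t; W_t, A_t, W_{t+1}) &= I(M_t; W_t, A_t) + I(M_t; W_{t+1} \mid W_t, A_t) \\
&= I(M_t; W_{t+1}) + I(M_t; W_t, A_t \mid W_{t+1}).
\end{aligned}
$$

Conditional independence means $I(M_t; W_{t+1} \mid W_t, A_t) = 0$, so

$$
I(W_t, A_t; M_t) - I(W_{t+1}; M_t) = I(M_t; W_t, A_t \mid W_{t+1}) \ge 0,
$$

because a conditional mutual information is never negative. The lost information is exactly what the memory still says about the old world state and the action once the new world state is already known.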

As a side benefit, we see why we shouldn't bother specifying our actions beyond what is actually about the world. Any information processing that isn't effective in shaping the future is just going to waste.

When faced with new evidence, an intelligent agent should ideally update on it and then forget it. The update is always about the present. The evidence remains entangled with the past, more and more distant as time goes by. Whatever part of it stops being true must be discarded. (Don't confuse this with the need to remember things which are part of the present, only currently hidden.)

People don't do this. We update too seldom, and instead we remember too much, in the wishful hope of updating at some later convenience.

There are many reasons for us to remember the past, given our shortcomings. We can use the actual data we gather to introspect on our faulty reasoning. Stories of the past help us communicate our experiences, allowing others to update on shared evidence much more reliably than if we just tried to convey our current beliefs.

Optimally, evidence should only be given its due weight in due time, no more and no later. Arguments should not be recycled. The study of history should control our anticipation.

The data is not falsifiable, only the conclusions are - relevant to the world, predictive and useful.

11 comments

comment by Shmi (shminux) · 2013-01-17T22:13:27.079Z · LW(p) · GW(p)

How do you reconcile

> When faced with new evidence, an intelligent agent should update on it and then forget it.

with

> We can use the actual data we gather to introspect on our faulty reasoning.

given that you have discarded the data which led to the faulty reasoning? How do you know when it's safe to discard? In your example

> I'd hate to forget how I stacked the deck, but only in those cards that are actually in play.

If you forget the discarded cards, and later realize that you may have an incorrect map of the deck, aren't you SOL?

↑ comment by royf · 2013-01-17T23:26:24.619Z · LW(p) · GW(p)

> an intelligent agent should update on it and then forget it.

Should being the operative word. This refers to a "perfect" agent (emphasis added in text; thanks!).

People don't do this, as well they shouldn't, because we update poorly and need the original data to compensate.

> If you forget the discarded cards, and later realize that you may have an incorrect map of the deck, aren't you SOL?

If I remember the cards in play, I don't care about the discarded ones. If I don't, the discarded cards could help a bit, but that's not the heart of my problem.

↑ comment by Shmi (shminux) · 2013-01-18T17:20:37.182Z · LW(p) · GW(p)

What's a perfect agent? No one is infallible, except the Pope.

↑ comment by DSimon · 2013-02-06T14:17:51.219Z · LW(p) · GW(p)

And even the Pope only claims to be infallible under certain carefully delineated conditions.

comment by William_Quixote · 2013-01-21T21:01:46.418Z · LW(p) · GW(p)

I've been enjoying this series, but feel like I could get more out of it if I had more of an information theory background. Is there a particular textbook you would recommend? Thanks

↑ comment by royf · 2013-01-21T22:39:58.260Z · LW(p) · GW(p)

Thanks!

The best book is doubtlessly Elements of Information Theory by Cover and Thomas. It's very clear (to someone with some background in math or theoretical computer science) and lays very strong introductory foundations before giving a good overview of some of the deeper aspects of the theory.

It's fortunate that many concepts of information theory share some of their mathematical meaning with the everyday meaning. This way I can explain the new theory (popularized here for the first time) without defining these concepts.

I'm planning another sequence where these and other concepts will be expressed in the philosophical framework of this community. But I should've realized that some readers would be interested in a complete mathematical introduction. That book is what you're looking for.

↑ comment by William_Quixote · 2013-01-23T12:44:00.811Z · LW(p) · GW(p)

Thanks

comment by timtyler · 2013-01-27T02:15:52.900Z · LW(p) · GW(p)

> When faced with new evidence, an intelligent agent should ideally update on it and then forget it. The update is always about the present. The evidence remains entangled with the past, more and more distant as time goes by. Whatever part of it stops being true must be discarded.

Sense data is neither true nor false, it just is. Ideally, agents should store a somewhat-compressed version of it all - but of course, this is often prohibitively expensive.