5 Axioms of Decision Making

post by Vaniver · 2011-12-01T22:22:50.504Z · 63 comments


This is part of a sequence on decision analysis; the first post is a primer on Uncertainty.

Decision analysis has two main parts: abstracting a real situation into math, and then cranking through the math to get an answer. We started by talking a bit about how probabilities work, and I'll finish up the inner math in this post. We're working from the inside out because it's easier to understand the shell once you understand the kernel. I'll provide an example of prospects and deals to demonstrate the math, but first we should talk about axioms. To be comfortable using this method, you have to agree with five axioms;[1] if you agree with them, the method flows naturally. They are: Probability, Order, Equivalence, Substitution, and Choice.

Probability

You must be willing to assign a probability to quantify any uncertainty important to your decision. You must have consistent probabilities.

Order

You must be willing to order outcomes without any cycles. This can be called transitivity of preferences: if you prefer A to B, and B to C, you must prefer A to C.
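A minimal sketch of checking the Order axiom, assuming strict preferences are recorded as (better, worse) pairs; the function name `find_preference_cycle` is hypothetical. It runs a depth-first search over the "is preferred to" graph and returns the first cycle it finds. Ties such as R = AJ are not modeled here; a fuller check would also require indifference itself to be transitive.

```python
def find_preference_cycle(prefers):
    """Return a list of outcomes forming a preference cycle, or None.

    `prefers` is a list of (a, b) pairs meaning "a is strictly preferred to b".
    """
    graph = {}
    for better, worse in prefers:
        graph.setdefault(better, []).append(worse)
        graph.setdefault(worse, [])

    UNVISITED, ON_PATH, DONE = 0, 1, 2
    state = {node: UNVISITED for node in graph}
    path = []

    def visit(node):
        state[node] = ON_PATH
        path.append(node)
        for nxt in graph[node]:
            if state[nxt] == ON_PATH:
                # nxt is already on the current path, so we have closed a loop.
                return path[path.index(nxt):] + [nxt]
            if state[nxt] == UNVISITED:
                cycle = visit(nxt)
                if cycle:
                    return cycle
        path.pop()
        state[node] = DONE
        return None

    for node in graph:
        if state[node] == UNVISITED:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


print(find_preference_cycle([("A", "B"), ("B", "C"), ("A", "C")]))  # None
print(find_preference_cycle([("A", "B"), ("B", "C"), ("C", "A")]))  # ['A', 'B', 'C', 'A']
```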

Equivalence

If you prefer A to B to C, then there must exist a p where you are indifferent between a deal where you receive B with certainty and a deal where you receive A with probability p and C otherwise.
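In symbols (a restatement; the notation is assumed here, following the {p TS, 1-p PP} style used later in the post), write ≻ for strict preference, ∼ for indifference, and {p A, 1-p C} for the deal that gives A with probability p and C otherwise. The axiom then says:

$$A \succ B \succ C \;\Longrightarrow\; \exists\, p \in [0,1] \;:\; B \sim \{p\,A,\ 1-p\,C\}$$

When A and C are the best and worst prospects in a problem, this p is what the example below calls the preference probability of B.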

Substitution

You must be willing to substitute an uncertain deal for a certain deal or vice versa if you are indifferent between them by the previous rule. Also called "do you really mean it?"

Choice

If you have a choice between two deals, both of which offer A or C, and you prefer A to C, then you must pick the deal with the higher probability of A.

These five axioms correspond to five actions you'll take in solving a decision problem. You assign probabilities, then you order outcomes, then you determine equivalence so you can substitute complicated deals for simple deals, until you're finally left with one obvious choice.

You might be uncomfortable with some of these axioms. You might say that your preferences genuinely cycle, or you're not willing to assign numbers to uncertain events, or you want there to be an additional value for certainty beyond the prospects involved. I can only respond that these axioms are prescriptive, not descriptive: you will be better off if you behave this way, but you must choose to.

Let's look at an example:

My Little Decision

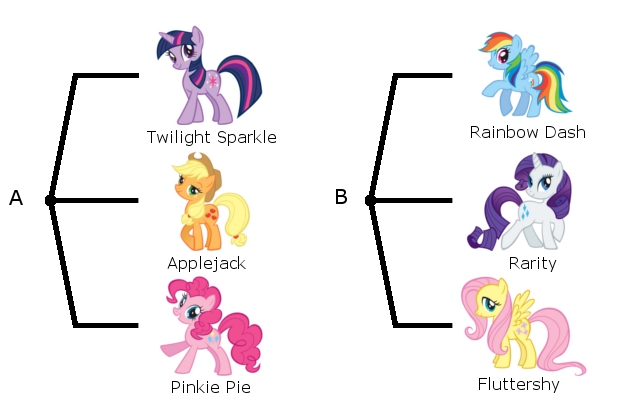

Suppose I enter a lottery for My Little Pony (MLP) toys. I can choose from two kinds of tickets: an A ticket has a 1/3 chance of giving me a Twilight Sparkle, a 1/3 chance of giving me an Applejack, and a 1/3 chance of giving me a Pinkie Pie. A B ticket has a 1/3 chance of giving me a Rainbow Dash, a 1/3 chance of giving me a Rarity, and a 1/3 chance of giving me a Fluttershy. There are two deals for me to choose between (the A ticket and the B ticket) and six prospects, which I'll abbreviate as TS, AJ, PP, RD, R, and FS.

(Typically, decision nodes are drawn as squares and work just like uncertainty nodes, so A would sit above B with a single decision node pointing to both. I've displayed them side by side because I suspect that looks better for small decisions.)

The first axiom, probability, is already taken care of for us, because our model of the world is already specified. We are rarely that lucky in the real world. The second axiom, order, is where we need to put in work: I need to come up with a preference ordering. I think about it and come up with the ordering TS > RD > R = AJ > FS > PP. Preferences are personal: beyond requiring internal consistency, we shouldn't require or expect that everyone will think Twilight Sparkle is the best pony. Preferences are also a source of uncertainty when prospects satisfy several different desires, as you may not be sure about your indifference tradeoffs between those desires. Even when prospects have only one measure (that is, they're all expressed in the same unit, say dollars), you could be uncertain about your risk sensitivity, which shows up in preference probabilities but deserves a post of its own.

Now we move to axiom 3. I have an ordering, but that's not enough to solve this problem: I need a preference scoring to represent how much I prefer one prospect to another. I might prefer cake to chicken and chicken to death, but the second preference is far stronger than the first! To determine my scoring I need to imagine deals and assign indifference probabilities. There are many ways to do this, but let's jump straight to the most sensible one: compare every prospect to a deal between the best and worst prospects.[2]

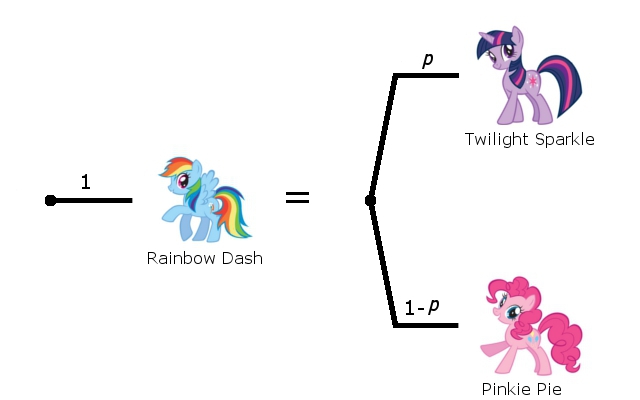

I need to assign a preference probability p such that I'm indifferent between the two deals presented: either RD with certainty, or a chance at TS (with PP if I don't get it). I think about it and settle on .9: I like RD nearly as much as I like TS.[3] This indifference needs to be two-way: I need to be indifferent about trading a ticket for that deal for an RD, and about trading an RD for that deal.[4] I repeat this process with the rest, and settle on .6 for R and AJ and .3 for FS. It's useful to check that all the relationships I elicited before still hold: R and AJ get the same score, and the scores follow the ordering. I don't need to do this for TS or PP, as p is trivially 1 or 0 for them.
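The consistency check described above can be sketched as follows, assuming the ordering is written as a string in the same "TS > RD > R = AJ > FS > PP" form used in the post; the function name `check_scores_match_ordering` is hypothetical. Prospects joined by "=" must share one score, and every prospect in a better tier must score strictly above every prospect in a worse tier.

```python
def check_scores_match_ordering(ordering, scores):
    """Compare elicited preference probabilities with a stated preference ordering.

    ordering: a string such as "TS > RD > R = AJ > FS > PP", best prospect first.
    scores:   maps each prospect to its preference probability.
    Returns a list of problems; an empty list means the two agree.
    """
    # Split into tiers of mutually indifferent prospects, best tier first.
    tiers = [[name.strip() for name in tier.split("=")] for tier in ordering.split(">")]
    problems = []
    for tier in tiers:
        values = {scores[name] for name in tier}
        if len(values) > 1:
            problems.append(f"{' = '.join(tier)} should share one score, got {sorted(values)}")
    for higher, lower in zip(tiers, tiers[1:]):
        # Every prospect in a better tier must score strictly above every one in a worse tier.
        if min(scores[n] for n in higher) <= max(scores[n] for n in lower):
            problems.append(f"{higher} should score strictly above {lower}")
    return problems


ORDERING = "TS > RD > R = AJ > FS > PP"
SCORES = {"TS": 1.0, "RD": 0.9, "R": 0.6, "AJ": 0.6, "FS": 0.3, "PP": 0.0}

print(check_scores_match_ordering(ORDERING, SCORES))  # []

# A hypothetical slip: scoring AJ at 0.5 breaks the stated indifference R = AJ.
print(check_scores_match_ordering(ORDERING, {**SCORES, "AJ": 0.5}))
```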

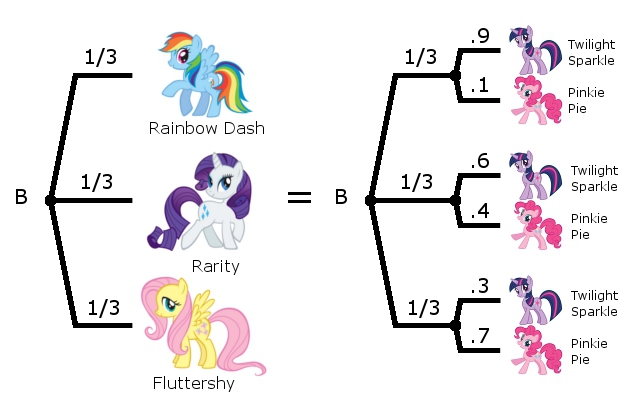

Now that I have a preference scoring, I can move to axiom 4. I start by making things more complicated: I take every prospect other than TS and PP and turn it into a deal of {p TS, 1-p PP}. (Pictured is just the expansion of the right tree; try expanding the tree for A. It's much easier.)

Then, using axiom 1 again, I rearrange this tree. The A tree (not shown) and B tree now have only two prospects, and I've expressed the probabilities of those prospects in a complicated way that I know how to simplify.

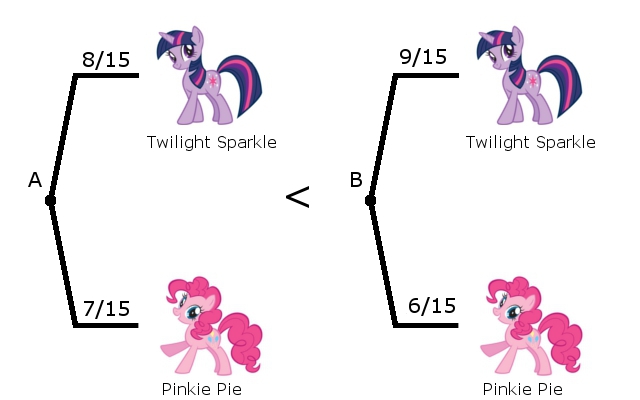

And we have one last axiom to apply: choice. Deal B has a higher chance of the better prospect, so I pick it. Note that this holds even though my actual chance of receiving TS with deal B is 0%: this is just how I'm representing my preferences, and the computation tells me that my probability-weighted preference for deal B is higher than my probability-weighted preference for deal A. Not only do I know that I should choose deal B, but I know how much better deal B is for me than deal A: B is equivalent to a 9/15 chance at TS, against 8/15 for A.[5]
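The substitution, rearrangement, and choice steps for the pony lottery can be sketched with exact fractions. This is an illustration under the post's numbers, not a general tool; the function names `chance_of_best` and `choose` are hypothetical. Replacing each prospect with {p TS, 1-p PP} and collapsing the tree amounts to summing probability times preference probability for each ticket.

```python
from fractions import Fraction

# Preference probabilities from the post: each prospect is as good as
# the deal {p TS, 1-p PP} with the p given here.
SCORES = {
    "TS": Fraction(1), "RD": Fraction(9, 10), "R": Fraction(6, 10),
    "AJ": Fraction(6, 10), "FS": Fraction(3, 10), "PP": Fraction(0),
}

# Each ticket maps prospect -> probability of receiving it.
TICKETS = {
    "A": {"TS": Fraction(1, 3), "AJ": Fraction(1, 3), "PP": Fraction(1, 3)},
    "B": {"RD": Fraction(1, 3), "R": Fraction(1, 3), "FS": Fraction(1, 3)},
}


def chance_of_best(deal, scores):
    """Substitution, then rearrangement: replace every prospect with {p TS, 1-p PP}
    and add up the paths that end in TS. The chance of PP is 1 minus this."""
    return sum(prob * scores[prospect] for prospect, prob in deal.items())


def choose(tickets, scores):
    """Choice: prefer the deal with the higher equivalent chance of TS."""
    values = {name: chance_of_best(deal, scores) for name, deal in tickets.items()}
    return max(values, key=values.get), values


best, values = choose(TICKETS, SCORES)
print(best)                       # B
print(values["A"], values["B"])   # 8/15 3/5  (3/5 is 9/15 in lowest terms)
print(values["B"] - values["A"])  # 1/15
```

Using `Fraction` rather than floats keeps the 8/15 and 9/15 exact, so the comparison in the choice step cannot be disturbed by rounding.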

This was a toy example, but the beauty of this method is that all calculations are local, which means we can apply it to a problem of arbitrary size without changes. Once we have probabilities and preferences for the possible outcomes, we can propagate them from the back of the tree through every node (decision or uncertainty) until we know what to do everywhere. Of course, whether the method will have a runtime shorter than the age of the universe depends on the size of your problem. You could use this to decide which chess moves to play against an opponent whose strategy you can guess from the board configuration, but I don't recommend it.[6] The real-world problems this method suits best are too large to solve by intuition but small enough that a computer (or you, working carefully) can solve them exactly if given the right input.
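A minimal sketch of propagating values from the back of the tree, sometimes called rolling back the tree. The tuple encoding of nodes and the function name `rollback` are assumptions made for illustration. Chance nodes take the probability-weighted average of their children, and decision nodes take the best child and record which one it was. Option C below is hypothetical and not in the post; it exists only to show a decision nested inside an uncertainty node.

```python
from fractions import Fraction

# Hypothetical node encoding, for illustration only:
#   ("outcome", score)                      leaf holding a preference probability
#   ("chance", [(prob, child), ...])        uncertainty node
#   ("decision", name, {label: child, ...}) decision node


def rollback(node, policy):
    """Return the value of `node`, recording the best label at every decision node in `policy`.

    Each node only needs the values of its own children, which is why the
    calculation is local and works the same way for trees of any size.
    """
    kind = node[0]
    if kind == "outcome":
        return node[1]
    if kind == "chance":
        # Probability-weighted preference over the branches.
        return sum(prob * rollback(child, policy) for prob, child in node[1])
    if kind == "decision":
        name, options = node[1], node[2]
        values = {label: rollback(child, policy) for label, child in options.items()}
        best = max(values, key=values.get)
        policy[name] = best
        return values[best]
    raise ValueError(f"unknown node kind: {kind!r}")


third, half = Fraction(1, 3), Fraction(1, 2)
tree = ("decision", "ticket", {
    "A": ("chance", [(third, ("outcome", 1)),
                     (third, ("outcome", Fraction(3, 5))),
                     (third, ("outcome", 0))]),
    "B": ("chance", [(third, ("outcome", Fraction(9, 10))),
                     (third, ("outcome", Fraction(3, 5))),
                     (third, ("outcome", Fraction(3, 10)))]),
    # Hypothetical extra option, not in the post: half the time I get FS and
    # may then trade it for R; otherwise I get RD.
    "C": ("chance", [(half, ("decision", "trade", {
                         "keep": ("outcome", Fraction(3, 10)),
                         "swap": ("outcome", Fraction(3, 5))})),
                     (half, ("outcome", Fraction(9, 10)))]),
})

policy = {}
print(rollback(tree, policy))             # 3/4
print(policy["ticket"], policy["trade"])  # C swap
```

The policy records a choice at every decision node, including ones inside branches that might not be reached, which matches "until we know what to do everywhere."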

Next we start the meat of decision analysis: reducing the real world to math.

1. These axioms are Ronald Howard's 5 Rules of Actional Thought.

2. Another method you might consider is comparing a prospect to its neighbors: RD in terms of TS and R, R in terms of RD and FS, FS in terms of R and PP. You could then unpack those into the preference probabilities.

3. Assigning these probabilities is tough, especially if you aren't comfortable with probabilities. Some people find it helpful to use a probability wheel, where they can see what 60% looks like, and adjust the wheel until it matches what they feel. See also 1001 PredictionBook Nights and This is what 5% feels like.

4. In actual practice, deals often come with friction and people tend to be attached to what they have beyond the amount that they want it. It's important to make sure that you're actually coming up with an indifference value, not the worst deal you would be willing to make, and flipping the deal around and making sure you feel the same way is a good way to check.

5. If you find yourself disagreeing with the results of your analysis, double-check your math and make sure you agree with all of your elicited preferences. An unintuitive answer can be a sign of an error in your inputs or your calculations, but if you find neither, make sure you're not trying to start with the bottom line.

6. There are supposedly 10^120 possible games of chess, and this method would evaluate all of them. Even with computation-saving implementation tricks, you're better off with another algorithm.
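Footnote 2's neighbor comparisons define a small linear system, because each prospect's score is a mix of its neighbors' scores, and the chain is circular (RD refers to R, and R refers back to RD). Below is a minimal sketch of unpacking them. It assumes each comparison is recorded as (upper neighbor, lower neighbor, q), meaning prospect ∼ {q upper, 1-q lower}. The function name and the q values are hypothetical; the q values were chosen here so that the result reproduces the .9, .6, and .3 from the main text. The system is solved by Gauss-Jordan elimination with exact fractions.

```python
from fractions import Fraction


def unpack_neighbor_comparisons(comparisons, anchors):
    """Turn neighbor comparisons into preference probabilities on the best/worst scale.

    comparisons: {prospect: (upper, lower, q)} meaning prospect ~ {q upper, 1-q lower}
    anchors:     fixed scores for the best and worst prospects, e.g. {"TS": 1, "PP": 0}
    Solves u[x] - q*u[upper] - (1-q)*u[lower] = 0 for every compared prospect x.
    Raises StopIteration if the comparisons don't pin down a unique answer.
    """
    unknowns = list(comparisons)
    index = {name: i for i, name in enumerate(unknowns)}
    n = len(unknowns)
    rows = []  # augmented matrix [A | b]
    for name in unknowns:
        upper, lower, q = comparisons[name]
        row = [Fraction(0)] * (n + 1)
        row[index[name]] += 1
        for neighbor, weight in ((upper, q), (lower, 1 - q)):
            if neighbor in anchors:
                row[n] += weight * anchors[neighbor]  # known score moves to the right-hand side
            else:
                row[index[neighbor]] -= weight
        rows.append(row)
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [x / pivot_value for x in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return {name: rows[index[name]][n] for name in unknowns}


comparisons = {
    "RD": ("TS", "R", Fraction(3, 4)),
    "R": ("RD", "FS", Fraction(1, 2)),
    "FS": ("R", "PP", Fraction(1, 2)),
}
result = unpack_neighbor_comparisons(comparisons, {"TS": 1, "PP": 0})
print({k: str(v) for k, v in result.items()})  # {'RD': '9/10', 'R': '3/5', 'FS': '3/10'}
```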

63 comments


comment by lukeprog · 2011-12-01T23:37:05.078Z

My Little Pony was clearly the correct choice, here.

↑ comment by MixedNuts · 2011-12-01T23:44:24.092Z

I disagree. If one is not familiar with this opus, one shall have to expend more attention than would be suitable on matching the illustrations on the probability trees to their names and abbreviations.

↑ comment by Vaniver · 2011-12-02T06:45:40.834Z

Talk about inferential distance; for some reason it didn't even occur to me that people might not know their names (whereas I did think long and hard before going with that as my only example). I'll edit the pictures to include the names (tomorrow).

↑ comment by prase · 2011-12-02T11:13:33.112Z

it didn't even occur to me that people might not know their names

Seriously? I am seeing these damned horses for the first time in my life. Not only do I not know their names, but it is hard to distinguish them visually. And I put quite a big probability on the hypothesis that 95% of people whom I personally know are unfamiliar with this as well.

whereas I did think long and hard before going with that as my only example

Couldn't you go with apple, pizza, bicycle, broken watch, pen, or whatever sort of items whose names are known? It would perhaps be too ordinary, but your present choice really makes it harder.

↑ comment by Vaniver · 2011-12-03T19:36:07.155Z

Seriously?

Yes. I'm not sure why.

Couldn't you go with apple, pizza, bicycle, broken watch, pen, or whatever sort of items whose names are known? It would perhaps be too ordinary, but your present choice really makes it harder.

Future posts will not re-use this example. I wanted to use lots of pictures for this one to make sure the steps were clear, and found it easier to motivate myself to do that with ponies.

↑ comment by Kaj_Sotala · 2011-12-09T17:41:39.636Z

And I put quite a big probability on the hypothesis that 95% of people whom I personally know are unfamiliar with this as well.

I didn't know them either, but this post was one of the last straws that broke the back of my resistance and made me start watching the series.

(It's good, by the way!)

↑ comment by Will_Sawin · 2011-12-02T19:13:32.141Z

How hard are they to distinguish visually? They are each dominated by a single (unique) color. Is my model of visual perception wrong?

↑ comment by prase · 2011-12-02T21:37:26.529Z

The colours are "similar", in that they are all pastel tones, and I probably don't have a good memory for colours. Now that I am looking at the ponies for the third or fourth time, I am starting to "feel the difference", but at the beginning, I saw six extremely similar ugly pictures.

My explanation probably isn't very good, but visual impressions are difficult to describe verbally.

↑ comment by Morendil · 2011-12-02T18:53:03.866Z

Seconding prase: "Seriously? I am seeing these damned horses for the first time in my life. Not only do I not know their names, but it is hard to distinguish them visually."

Make that "most of these damned horses" and "almost for the first time". I have kids, and they watch TV, so I'm vaguely aware of these things in much the same way I'm vaguely aware of cars honking outside: it's a somewhat unwelcome but not actively unpleasant awareness.

(Also the MLP allusion joke has already been done here before. Without pictures, admittedly, but I'd still judge it a "once and only once" kind of joke.)

↑ comment by fiddlemath · 2011-12-02T04:55:43.163Z

I agree. I'm halfway through, and I really wish I had a handy key of which initials go with which ponies, or just those initials next to every instance of every pony. Right now, I have to track lots of extra details that have little bearing on the material.

↑ comment by pedanterrific · 2011-12-02T05:41:11.508Z

TS - purple/purple, AJ - orange/yellow, PP - pink/pink,

RD - blue/rainbow, R - white/purple, FS - yellow/pink

comment by Dan_Moore · 2011-12-02T15:58:14.117Z

Upvoted.

It seems, though, that the system discussed here should be prefaced by a scope. In particular, the scope for preferences about outcomes would include outcomes that you can assign a dollar value to. Outside the scope, for example, would be outcomes that you are willing to sacrifice your life for. The equivalence axiom implies a scalar metric applying to the whole scope.

↑ comment by Vaniver · 2011-12-02T16:58:04.037Z

You're right that this is an important thing to discuss, and so we'll talk about this more explicitly when I get to micromorts, which are probably one of the more useful concepts that comes up in an introductory survey. (I think it's better to establish this methodology and show that it applies to those situations too than argue it applies everywhere then establish what it actually is.)

A quick answer, though: my sense is that people feel like they have categorical preferences, but actually have tradeoff preferences. If you ask people about chicken vs. p cake or 1-p death, many will scoff at the idea of taking any chance at death to upgrade from chicken to cake. When you look at actual behavior, though, those same people do risk death to upgrade from chicken to cake- and so in some sense that's "worth sacrificing your life for." The difference between the cake > chicken preference and the family alive > family dead preference, for example, seems to be one of degree and not one of kind.

↑ comment by Dan_Moore · 2011-12-09T17:59:37.736Z

these axioms are prescriptive, not descriptive: you will be better off if you behave this way, but you must choose to...

When you look at actual behavior, though...

Are you prescribing a rational method of decision making or are you describing actual behavior (possibly not rational)?

↑ comment by TobyBartels · 2011-12-15T05:08:13.331Z

Are you prescribing a rational method of decision making or are you describing actual behavior (possibly not rational)?

Although Vaniver punted this by saying that you shouldn't judge ultimate values on rationality, you're right that this describes behaviour (and not values directly), and inferring values from behaviour assumes rationality.

However … it's intuitively obvious to me that it's perfectly rational (in some circumstances) to go to the store and get some cake when all that you have at home is chicken, even though leaving the house increases your risk of death (car crash etc.). I assumed that this is the sort of behaviour that Vaniver was referring to; do you agree that it's rational (and so we can infer values in line with the axiom of equivalence)?

↑ comment by Vaniver · 2011-12-09T22:20:47.226Z

Values are endogenous to this system, and so it's not clear to me what it would mean to prescribe rational values. I think there is some risk of death small enough that accepting it in exchange for upgrading from chicken to cake is rational behavior for real people (who prefer cake to chicken), and difficulty in accepting that statement is generally caused by difficulty imagining small probabilities, not a value disagreement.

If you actually do have categorical preferences, you can implement them by multiple iterations of the method: "Minimize chance of dying, then maximize flavor on the optimal set."
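The "multiple iterations" idea is a lexicographic choice: apply the most important criterion first, then use the next one only to break ties among the survivors. A minimal sketch, with a hypothetical function name and made-up numbers for illustration:

```python
def lexicographic_choice(options, criteria):
    """Apply criteria in priority order, keeping only the options optimal so far.

    options:  list of dicts describing the available choices.
    criteria: list of (key, "min" or "max") pairs, most important first.
    """
    remaining = list(options)
    for key, direction in criteria:
        scores = [option[key] for option in remaining]
        target = min(scores) if direction == "min" else max(scores)
        # Later criteria only break ties left by earlier ones.
        remaining = [o for o, s in zip(remaining, scores) if s == target]
    return remaining


# Hypothetical numbers, for illustration only.
options = [
    {"meal": "chicken at home", "p_death": 0.0, "flavor": 5},
    {"meal": "crackers at home", "p_death": 0.0, "flavor": 2},
    {"meal": "cake from the store", "p_death": 1e-7, "flavor": 8},
]
criteria = [("p_death", "min"), ("flavor", "max")]
print([o["meal"] for o in lexicographic_choice(options, criteria)])  # ['chicken at home']
```

Unlike the tradeoff scoring in the post, any nonzero risk rules out the cake here, however small it is and however much better the cake tastes.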

↑ comment by Dan_Moore · 2011-12-15T16:13:31.245Z

Vaniver & Toby,

I have no problem with accepting the axiom of equivalence for a usefully large subset of all preferences. It just seems that there may be some preferences which are 'priceless' as compared to chicken vs. cake.

↑ comment by Vaniver · 2011-12-15T17:21:25.762Z

It just seems that there may be some preferences which are 'priceless' as compared to chicken vs. cake.

There are definitely signalling reasons to believe this is so. If I work at a factory where management has put a dollar value on my accidental death due to workplace hazards and uses that value in decision-making, I might feel less comfortable than if I worked at a factory where management insists that the lives of its employees are priceless, because at the first factory the risk of my death is now openly discussed (and management might be callous).

I'm not sure there are decision-making reasons to believe this is so. The management of the second factory continue to operate it, even though there is some risk of accidental death, suggesting that their "priceless" is not "infinity" but "we won't say our price in public." They might perform a two-step optimization: "ensure risk of death is below a reasonable threshold at lowest cost," but it's not clear that will result in a better solution.* It may be the risk of death could be profitably lowered below the reasonable threshold, and they don't because there's no incentive to, or they may be spending more on preventing deaths than the risk really justifies. (It might be better to spend that money, say, dissuading employees from smoking if you value their lifespans rather than not being complicit in their death.)

*For example, this is how the EPA manages a lot of pollutants, and it is widely criticized by economists for being a cap rather than a tax, because it doesn't give polluters the right incentives. So long as you're below the EPA standards, there's no direct benefit to you for halving your pollution even though there is that benefit to the local environment.

comment by JenniferRM · 2011-12-02T03:14:36.081Z

Upvote :-)

Followup questions spring to mind... Is there standard software for managing large trees of this sort? Is any of it open source? Are there file formats that are standard in this area? Do any posters (or lurkers who could be induced to start an account to respond) personally prefer particular tools in real life?

Actionable advice would be appreciated!

↑ comment by pjeby · 2011-12-02T16:13:28.208Z

There's a program called Flying Logic that makes it really easy to draw trees and set up mathematical calculations -- sort of a DAG-based spreadsheet sort of thing, with edge weights and logical operators and whatnot. It's marketed mainly for doing cause-and-effect type analyses (using the Theory of Constraints "Thinking Processes") but it can do Bayesian belief nets and other things.

I've played with the demo and it's quite easy to use, but to be honest I didn't use the logical-spreadsheet functionality that much, except to the extent the tutorials show you how to play with confidence values and get the outputs of a fuzzy logic computation.

It's definitely not free OR open source, though.

↑ comment by malthrin · 2011-12-02T15:27:55.220Z

There's a Stanford online course next semester called Probabilistic Graphical Models that will cover different ways of representing this sort of problem. I'm enrolled.

↑ comment by daenerys · 2011-12-02T03:41:22.847Z

A while back I took a class called Aiding and Understanding Human Decision Making. It was a lot like this (aka not really my thing, but c'est la vie). I don't remember what software we used. I remember the software confused the dickens out of me, and I preferred just running all the various algorithms by hand. Don't remember what it was though.

Due to my intense powers of Google-fu, I found the class website, but it's been updated since I took it. It mentions both @Risk and Crystal Ball.

Actually, it looks like before the current prof taught it, it was taught by a different prof. His course site is here and includes links to lots of good articles (that you don't need access to databases to view)

ETA: On further examination, that class website is older (rather than newer) than the class I took and slightly different (the one I took was HFE 890 in 2010 I think. That website is HFE 742 in 2006), so the software mentioned might be similarly outdated.

comment by torekp · 2011-12-02T01:34:23.812Z

or you want there to be an additional value for certainty beyond the prospects involved. I can only respond that these axioms are prescriptive, not descriptive

I prescribe that people not follow all of the axioms, if they don't feel like it. Especially when the order and equivalence axioms are fleshed out with an implication of strict indifference. Some relevant discussion.

comment by Sara Younes (sara-younes) · 2020-12-11T09:47:22.266Z

Thank you for this article. Could you please explain how you simplified and computed the probabilities for A and B in the final step?

↑ comment by Vaniver · 2020-12-11T18:08:58.828Z

That is, where did 8/15 and 9/15 come from?

Let's step through the B case. I only need to track probability of TS, because probability of PP is 1 minus that. The RD turns into .9/3 = 9/30ths, the R turns into 6/30ths, and the FS turns into 3/30ths. Add those together and you get 9+6+3=18, and 18/30 simplifies to 9/15ths.

What about the A case? Here the underlying probabilities are 1, .6, and 0, so 10/30, 6/30, and 0/30. 16/30 simplifies to 8/15ths.

comment by malthrin · 2011-12-02T15:24:56.846Z

This recursive expected value calculation is what I implemented to solve my coinflip question. There's a link to the Python code in that post for anyone who is curious about implementation.

comment by taw · 2011-12-02T09:54:06.876Z

You must be willing to assign a probability to quantify any uncertainty important to your decision. You must have consistent probabilities.

- What's your probability of basic laws of mathematics being true?

- What's your probability of Collatz conjecture being true?

If you answered 1 to the first, and anything but 0 or 1 to the second, you're inconsistent. If you're unwilling to answer the second, you just broke your axioms.

↑ comment by MixedNuts · 2011-12-02T10:03:18.008Z

Subjective probability allows logical uncertainty.

↑ comment by taw · 2011-12-02T11:23:43.893Z

Subjective probabilities are inconsistent in any model which includes Peano arithmetic by straightforward application of Gödel's incompleteness theorems, which is essentially any non-finite model.

Most people here seem to be extremely unwilling to admit that probabilities and uncertainty are not the same thing.

↑ comment by pragmatist · 2011-12-02T12:29:51.934Z

Subjective probabilities are inconsistent in any model which includes Peano arithmetic by straightforward application of Gödel's incompleteness theorems, which is essentially any non-finite model.

Could you explain why this is true, please?

↑ comment by taw · 2011-12-02T12:48:22.549Z

Let X() be a consistent probability assignment (function from statement to probability number).

Let Y() be a probability assignment including: Y(2+2=5) = X(Y is consistent), and otherwise Y(z)=X(z)

What's X(Y is consistent)?

If X(Y is consistent)=1, then Y(2+2=5)=1, and Y is blatantly inconsistent, and so X is inconsistent according to basic laws of mathematics.

If X(Y is consistent)=0, then Y(2+2=5)=0=X(2+2=5), and by definition X=Y, so X is inconsistent according to itself.

↑ comment by orthonormal · 2011-12-03T23:55:47.333Z

That's not Goedelian at all, it's a variant of Russell's paradox and can be excluded by an analogue of the theory of types (which would make Y an illegally self-referential probability assignment).

↑ comment by twanvl · 2011-12-02T20:28:14.763Z

What if X(Y is consistent)=0.5? Then Y(2+2=5) = 0.5, and Y might or might not be inconsistent.

Another solution is of course to let X be incomplete, and refuse to assign X(Y is consistent). In fact, that would be the sensible thing to do. X can never be a function from ''all'' statements to probabilities; its domain should only include statements strictly smaller than X itself.

↑ comment by pragmatist · 2011-12-02T21:39:08.102Z

If Y(2 + 2 = 5) = 0.5, Y is still blatantly inconsistent, so that won't help.

I think your second point might be right, though. Isn't it the case that the language of first-order arithmetic is not powerful enough to refer to arbitrary probability assignments over its statements? After all, there are an uncountable number of such assignments, and only a countable number of well-formed formulas in the language. So I don't see why a probability assignment X in a model that includes Peano arithmetic must also assign probabilities to statements like "Y is consistent".

↑ comment by taw · 2011-12-02T22:08:42.146Z

If you let X be incomplete like twanvl suggests, then you pretty much agree with my position of using probability as a useful tool, and disagree with Bayesian fundamentalism.

Getting into finer points of what is constructible or provable in what language is really not a kind of discussion we could usefully have within confines of lesswrong comment boxes, since we would need to start by formalizing everything far more than we normally do. And it wouldn't really work, it is simply not possible to escape Goedel's incompleteness theorem if you have something even slightly powerful and non-finite, it will get you one way or another.

↑ comment by pragmatist · 2011-12-02T22:27:51.246Z

I'm possibly being obtuse here, but I still don't see the connection to the incompleteness theorem. I don't deny that any consistent theory capable of expressing arithmetic must be incomplete, but what does that have to do with the argument you offered above? That argument doesn't hinge on incompleteness, as far as I can see.

↑ comment by pragmatist · 2011-12-03T00:32:03.500Z

And it wouldn't really work, it is simply not possible to escape Goedel's incompleteness theorem if you have something even slightly powerful and non-finite, it will get you one way or another.

This is slightly exaggerated. The theory of real numbers is non-finite and quite powerful, but it has a complete axiomatization.

↑ comment by endoself · 2011-12-02T22:49:48.552Z

If you let X be incomplete like twanvl suggests, then you pretty much agree with my position of using probability as a useful tool, and disagree with Bayesian fundamentalism.

How is a distribution useful if it refuses to answer certain questions? I think I'm misunderstanding something you said, since I think that the essence of Bayesianism is the idea that probabilities must be used to make decisions, while you seem to be contrasting these two things.

↑ comment by Vladimir_Nesov · 2011-12-03T00:32:56.526Z

Let X() be a consistent probability assignment (function from statement to probability number).

What does it mean for this function to be "consistent"? What kinds of statements do you allow?

Let Y() be a probability assignment ...

What's X(Y is consistent)?

If "probability assignment" is a mapping from statements (or Goedel numbers) to the real interval [0,1], it's not a given that Y, being a "probability assignment", is definable, so that you can refer to it in the statement "Y is consistent" above.

↑ comment by TheOtherDave · 2011-12-02T16:43:11.833Z

"Most people here seem to be extremely unwilling to admit that probabilities and uncertainty are not the same thing."

I can't speak for anyone else, but for my part that's because I rarely if ever see the terms used consistently to describe different things. That may not be true of mathematicians, but very little of my language use is determined by mathematicians.

For example, given questions like:

1) When I say that the coin I'm about to flip has an equal chance of coming up heads or tails, am I making a statement about probability or uncertainty?

2) When I say that the coin I have just flipped, but haven't yet looked at, has an equal chance of having come up heads or tails, am I making a statement about probability or uncertainty?

3) When I say that the coin I have just looked at has a much higher chance of having come up heads rather than tails, but you haven't looked at the coin yet and you say at the same time that it has an equal chance of having come up heads or tails, are we both making a statement about the same thing, and if so which thing is it?

...I don't expect consistent answers from 100 people in my linguistic environment. Rather I expect some people will answer "uncertainty" in all three cases, other people will answer "probability", still others will give neither answer. Some might even say that I'm talking about "probability" in case 1, "uncertainty" in case 2, and that in case 3 I'm talking about uncertainty and you're talking about probability.

In that kind of linguistic environment, it's safest to treat the words as synonyms. If someone wants to talk to me about the difference between two kinds of systems in the world, the terms "probability" and "uncertainty" aren't going to be very useful for doing so unless they first provide two definitions.

↑ comment by Louie · 2011-12-11T12:24:36.530Z

Subjective probabilities are inconsistent in any model which includes Peano arithmetic by straightforward application of Gödel's incompleteness theorems.

Did you mean to say incomplete (e.g., implying that some small class of bizarrely constructed theorems about subjective probability can't be proven or disproven)?

Because the standard difficulties that Godel's theorem introduces to Peano arithmetic wouldn't render subjective probabilities inconsistent (e.g., no theorems about subjective probability could be proven).

↑ comment by hairyfigment · 2011-12-03T00:15:52.133Z

which is essentially any non-finite model.

I don't know if that actually solves the problem. Nor do I know if it makes sense to claim that understanding the two meanings of a Gödel statement, and the link between them, puts you in a different formal system which can therefore 'prove' the statement without contradiction. But it seems to me this accounts for what we humans actually do when we endorse the consistency of arithmetic and the linked mathematical statements. We don't actually have the brains to write a full Gödel statement for our own brains and thereby produce a contradiction.

In your example below, X(Y is consistent) might in fact be 0.5 because understanding what both systems say might put us in Z. Again, this may or may not solve the underlying problem. But it shouldn't destroy Bayesianism to admit that we learn from experience.

↑ comment by Vaniver · 2011-12-02T15:52:30.536Z · LW(p) · GW(p)

If you answered 1 to the first, and anything but 0 or 1 to the second, you're inconsistent.

1 to the first for reasonable definitions of "true." .8 to the second- it seems like the sort of thing that should be true.

To assess the charge of inconsistency, though, we have to unpack what you mean by that. Do you mean that I can't see the mathematical truth of a statement without reasoning through it? Then, yes, I very much agree with you. That is not a power I have. (My reasoning is also finite; I doubt I will solve the Collatz conjecture.)

But what I mean by an uncertainty of .8 is not "in the exterior world, a die is rolled such that the Collatz conjecture is true in 80% of universes but not the rest." Like you point out, that would be ridiculous. I'm not measuring math; I'm measuring my brain. What I mean is "I would be willing to wager at 4-1 odds that the Collatz conjecture is true for sufficiently small dollar amounts." Inconsistency, to me, is allowing myself to be Dutch Booked- which those two probabilities do not do.
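To make the arithmetic in this comment concrete: a probability of 0.8 corresponds to 4-to-1 odds, and a bet at those odds has zero expected value for someone who holds that probability. A Dutch book in the pure-arbitrage sense needs prices that are incoherent on their own terms, for example prices on an event and its negation that do not sum to 1. The Python below is a minimal sketch under those assumptions; the function names `fair_odds`, `expected_value` and `guaranteed_margin` are hypothetical, not anything from the original discussion.

```python
from fractions import Fraction

def fair_odds(p):
    """Stake-to-winnings ratio at which a bet on an event of probability p
    has zero expected value (0.8 -> 4, i.e. risk 4 to win 1)."""
    p = Fraction(p)
    return p / (1 - p)

def expected_value(p, stake, winnings):
    """Expected gain from risking `stake` to win `winnings` on an event
    you believe has probability p."""
    p = Fraction(p)
    return p * winnings - (1 - p) * stake

def guaranteed_margin(prices):
    """Prices for $1 tickets on every outcome of a partition (exactly one
    outcome happens). Buying every ticket costs sum(prices) and returns
    exactly $1, so a bookie can lock in |sum(prices) - 1| by selling all the
    tickets (sum > 1) or buying all of them (sum < 1). Zero means no Dutch
    book is possible on this partition."""
    return abs(sum(Fraction(x) for x in prices) - 1)

print(fair_odds("0.8"))                  # 4
print(expected_value("0.8", 4, 1))       # 0
print(guaranteed_margin(["0.8", "0.2"])) # 0
print(guaranteed_margin(["0.8", "0.3"])) # 1/10
```

Exact `Fraction` arithmetic keeps 0.8 exactly equal to 4/5; passing the decimals as strings avoids binary floating-point error.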

↑ comment by prase · 2011-12-02T16:04:02.345Z · LW(p) · GW(p)

You can be "Dutch booked" by someone who can solve the conjecture. (I am not sure whether this can be referred to as Dutch booking, but it would be the case where you both would have access to the same information and one would be in a better position due to imperfections in the other's reasoning.)

it seems like the sort of thing that should be true

It seems also a bit like the sort of thing that might be undecidable.

↑ comment by Vaniver · 2011-12-02T16:13:09.788Z · LW(p) · GW(p)

I'm pretty sure that a Dutch Book is only a Dutch Book if it's pure arbitrage- that is, you beat someone using only the odds they publish. If you know more than someone else and win a bet against them, that seems different.

It seems also a bit like the sort of thing that might be undecidable.

Quite possibly. I'm not a good judge of mathematical truth- I tend to be more trusting than I should be. It looks to me like if you can prove "every prime can be expressed as the output of algorithm X", where X is some version of the Collatz conjecture in reverse, then you're done. (Heck, that might even map onto the Sieve of Eratosthenes.) That it isn't solved already drops my credence down from ~.95 to ~.8.

↑ comment by prase · 2011-12-02T17:02:23.774Z · LW(p) · GW(p)

I'm pretty sure that a Dutch Book is only a Dutch Book if it's pure arbitrage- that is, you beat someone using only the odds they publish. If you know more than someone else and win a bet against them, that seems different.

They publish a probability of roughly 1 for the axioms of arithmetic and a probability of 0.8 for the Collatz conjecture; you see that the conjecture is logically equivalent to the axioms, and thus that their odds are mutually inconsistent. You don't "know" more in the sense of having observed more evidence. (I'd agree that this is a tortured interpretation of Dutch booking, but it's probably what you get if you systematically distinguish external evidence from one's own reasoning.)
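prase's scenario can be sketched as a two-ticket trade. Assume an agent will buy or sell a $1 ticket on A (the axioms) at a price of 1 and a $1 ticket on B (the conjecture) at 0.8, and assume, as in the comment, that A and B are logically equivalent. A bookie who sells the agent the dearer ticket and buys the cheaper one pockets the difference whichever way the truth turns out. The function name `bookie_profit` and the exact price of 1 are illustrative assumptions; this shows only the arithmetic, not whether it deserves the name "Dutch book".

```python
from fractions import Fraction

def bookie_profit(price_a, price_b, shared_truth):
    """Bookie's net gain against an agent who trades a $1 ticket on A at
    price_a and a $1 ticket on B at price_b, where A and B are logically
    equivalent and so share one truth value, `shared_truth`."""
    pa, pb = Fraction(price_a), Fraction(price_b)
    sell_price = max(pa, pb)  # bookie sells the agent the dearer ticket
    buy_price = min(pa, pb)   # and buys the cheaper ticket from the agent
    cash_up_front = sell_price - buy_price
    payout = 1 if shared_truth else 0
    # The bookie collects `payout` on the ticket it bought and owes the same
    # `payout` on the ticket it sold, so settlement always nets to zero.
    settlement = payout - payout
    return cash_up_front + settlement

for world in (True, False):
    print(world, bookie_profit("1", "0.8", world))  # 1/5 in both worlds
```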

↑ comment by wedrifid · 2011-12-02T16:46:00.565Z · LW(p) · GW(p)

I'm pretty sure that a Dutch Book is only a Dutch Book if it's pure arbitrage- that is, you beat someone using only the odds they publish. If you know more than someone else and win a bet against them, that seems different.

Yes. Crudely speaking they have to be stupid, not just ignorant!

↑ comment by prase · 2011-12-02T11:28:44.845Z · LW(p) · GW(p)

What's your probability of basic laws of mathematics being true?

If (basic laws = axioms and inference rules), the meaning of "true" needs clarification.

What's your probability of Collatz conjecture being true?

0.64 (Here, by "true" I mean "can be proven in Peano arithmetic".)

↑ comment by taw · 2011-12-02T11:34:05.890Z · LW(p) · GW(p)

0.64 (Here, by "true" I mean "can be proven in Peano arithmetic".)

Then you're entirely inconsistent, since P(Collatz sequence for k converges) is either 0 or 1 for all k by basic laws of mathematics, and P(Collatz conjecture is true) equals the product of these, and by basic laws of mathematics can only be 0 or 1.
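taw's point that each individual instance is settled by arithmetic alone can be illustrated by computing Collatz sequences directly. The sketch below uses hypothetical helper names and an arbitrary `max_steps` cutoff, and checks that every starting value up to a bound reaches 1. A finite check like this settles only finitely many instances; it says nothing about the conjecture itself, which quantifies over all positive integers.

```python
def collatz_steps(k, max_steps=10_000):
    """Steps for k to reach 1 under the Collatz map (n -> n // 2 if n is
    even, n -> 3n + 1 if n is odd), or None if it has not reached 1 within
    max_steps (an arbitrary cutoff, not a proof of divergence)."""
    if k < 1:
        raise ValueError("Collatz sequences start from a positive integer")
    n, steps = k, 0
    while n != 1:
        if steps >= max_steps:
            return None
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps

def all_reach_one(limit):
    """True if every starting value 1..limit reaches 1 within the cutoff."""
    return all(collatz_steps(k) is not None for k in range(1, limit + 1))

print(collatz_steps(27))      # 111
print(all_reach_one(10_000))  # True
```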

↑ comment by prase · 2011-12-02T11:52:07.424Z · LW(p) · GW(p)

Why did you choose the Collatz conjecture to illustrate the fact (which has already been discussed several times) that uncertainty about mathematical statements introduces inconsistency of some sort? I am equally willing to put p = 0.1 to the statement "last decimal digit of 1543! is 7", although in fact this is quite easy to check. Just I don't want to spend time checking.

If for consistency you demand that subjective probabilities assigned to logically equivalent propositions must be equal (I don't dispute that it is sensible to include that in the definition of "consistent"), then real people are going to be inconsistent, since they don't have enough processing power to check for consistency. This is sort of trivial. People hold inconsistent beliefs all the time, even when they don't quantify them by probabilities.

If you point to some fine mathematical problems with "ideal Bayesian agents", then I don't see how it is relevant in context of the original post.

Edit: by the way,

P(Collatz sequence for k converges) is either 0 or 1

sounds frequentistish.

↑ comment by taw · 2011-12-02T12:17:00.656Z · LW(p) · GW(p)

I am equally willing to put p = 0.1 to the statement "last decimal digit of 1543! is 7", although in fact this is quite easy to check. Just I don't want to spend time checking.

What probabilities are you willing to assign to these statements:

- 1543! = 1540 × (1543 × 1542 × 1541 × 1539!)

- The last digit of "1540 × (1543 × 1542 × 1541 × 1539!)" is 0 and not 7
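taw's decomposition can also be checked mechanically. Python's arbitrary-precision integers let `math.factorial` compute 1543! exactly, and Legendre's formula (summing ⌊n/5^i⌋) counts its trailing zeros, since every factor of 5 pairs with one of the more plentiful factors of 2. This is a minimal sketch; the helper name `trailing_zeros` is illustrative.

```python
import math

n = 1543
# taw's decomposition: 1543! = 1540 * (1543 * 1542 * 1541 * 1539!)
assert math.factorial(n) == 1540 * (1543 * 1542 * 1541 * math.factorial(1539))

def trailing_zeros(n):
    """Number of trailing decimal zeros of n!, via Legendre's formula for
    the exponent of 5 in n! (factors of 2 are always more plentiful)."""
    count, power = 0, 5
    while power <= n:
        count += n // power
        power *= 5
    return count

print(math.factorial(n) % 10)  # 0: the last digit is 0, not 7
print(trailing_zeros(n))       # 383
```

Any n! with n ≥ 5 already contains the factor 2 × 5, so its last digit is 0; the factor of 1540 is just the quickest one to spot here.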

Bayesian probabilities don't give you any anchoring to reality, they only give you consistency.

If you're willing to abandon consistency as well, they give you precisely nothing whatsoever.

Probabilities are a tool for talking about uncertainty, they are not uncertainty, to think otherwise is a ridiculous map-territory confusion.

sounds frequentistish.

As ad hominem attacks go, that's an interesting one.

If there's one possible universe where the Collatz conjecture is true/false, it is true/false in all other possible universes as well. There are no frequencies there, it's just pure fact of logic.

↑ comment by prase · 2011-12-02T13:06:35.740Z · LW(p) · GW(p)

The last digit of "1540 × (1543 × 1542 × 1541 × 1539!)" is 0 and not 7

Updated. (Didn't occur to me it would be so easy.)

Bayesian probabilities don't give you any anchoring to reality, they only give you consistency. If you're willing to abandon consistency as well, they give you precisely nothing whatsoever.

It is an unnecessarily black-and-white point of view on consistency. I can improve my consistency a lot without becoming completely consistent. In practice we all compartmentalise.

Probabilities are a tool for talking about uncertainty, they are not uncertainty.

I did certainly not dispute that (if I understand correctly what you mean, which I am not much sure about).

As ad hominem attacks go, that's an interesting one.

The point was, subjective probability is a degree of belief in the proposition; saying "it must be either 0 or 1 by laws of mathematics" rather implies that it is an objective property of the proposition. This seems to signal that you use a non-subjectivist (not necessarily frequentist, my fault) interpretation of probability. We may then be talking about different things. Sorry for the ad hominem impression.

↑ comment by TobyBartels · 2011-12-15T05:01:40.376Z · LW(p) · GW(p)

While this sort of thing is interesting, I really don't see its relevance to practical decision making methods as discussed in this post. In fact, the OP even has an escape clause ‘important to your decision’ that applies perfectly here. (The Collatz Conjecture is not important to your decision, almost always in the real world.)