Why do we need an understanding of the real world to predict the next tokens in a body of text?

post by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-06T14:43:50.559Z · This is a question post.

Contents

- Answers
  - 3 Gerald Monroe
  - 1 Kenku

I want to start by saying that this is my first question on LessWrong, so I apologise if I am breaking some norms or not asking it properly.

The whole question is pretty much contained in the title. I see a lot of people, Zvi included, who claim that we have moved beyond the idea that LLMs "simply predict the next token" and that they now have some understanding.

How is this obvious? What is the relevant literature?

Is it possible that LLMs are just mathematical representations of language? (does this question even make sense?) For example, if I teach you to count sheep by adding the number of sheep in two herds, you will get a lot of rules in the form of X + Y = Z, and never see any information about sheep. If, after seeing a million examples you become pretty good at predicting the next token in the sequence "5 + 4 =", does this imply that you have learned something about sheep?
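To make the thought experiment concrete, here is a minimal sketch, assuming a toy "model" that only counts which token follows each context string. The names `train` and `predict` are hypothetical and chosen for illustration; this is not how an LLM is trained. The point is only that the training data contains sums and never mentions sheep.

```python
from collections import Counter, defaultdict

def train(examples):
    """Count which answer token follows each 'X + Y =' context."""
    counts = defaultdict(Counter)
    for line in examples:
        # Split off the last token: "5 + 4 = 9" -> ("5 + 4 =", "9")
        context, answer = line.rsplit(" ", 1)
        counts[context][answer] += 1
    return counts

def predict(counts, context):
    """Return the most frequent next token for a seen context, else None."""
    if context not in counts:
        return None
    return counts[context].most_common(1)[0][0]

# Training text: single-digit sums only, with no reference to sheep.
examples = [f"{x} + {y} = {x + y}" for x in range(10) for y in range(10)]
model = train(examples)

print(predict(model, "5 + 4 ="))    # a context seen in training
print(predict(model, "12 + 30 ="))  # a context never seen in training
```

A pure lookup table like this completes sums it has seen and returns nothing for sums it has not. Whether a network that also gets unseen sums right has learned "something about sheep", or about numbers, is exactly what the question asks.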

Answers

It depends on what you mean by "the world" and what you think matters in a practical sense.

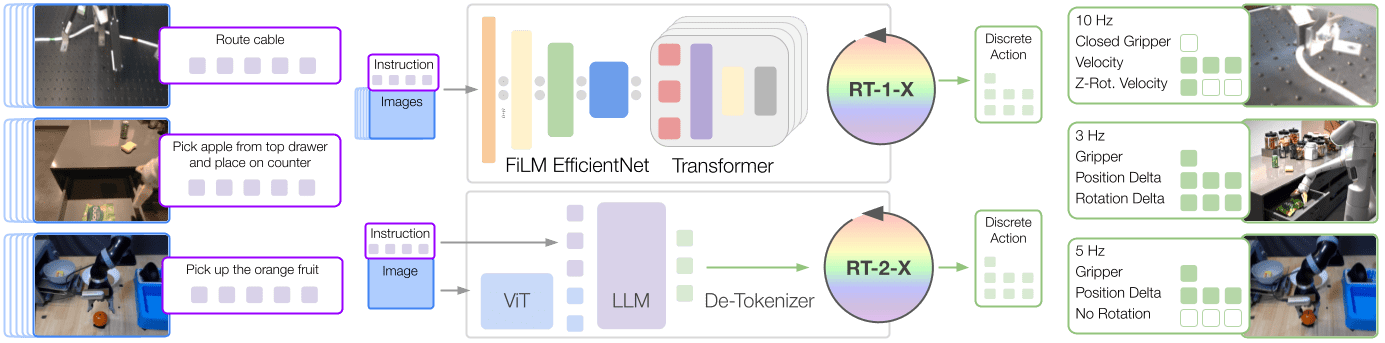

I think it's important to note that an "LLM" is a neural network that receives and emits tokens, but there is no reason it has to only be trained on languages. Images, sound, text, video, proprioception all work, and the outputs can be robotics commands like below, from https://robotics-transformer-x.github.io/ :

These days anything that isn't SOTA doesn't count, and this method outperforms previous methods of robotics control by 1.5x-3x.

When I see "the real world", as an ML engineer who works on embedded systems, I think of the world as literally just the space accessible to a robot. Not "the earth" but "the objects in a robotics cell or on the table in front of it".

Future AI systems will be able to handle more complex worlds. For example, if Mamba happens to work for robotics control as well, then the world complexity can be increased by a factor of 10-20 later this year or next year, when it is integrated into robotics models.

Is this what you meant?

I think it's important to remember that the "real world" in some ways is actually easier than you might think. You gave an example,

> If, after seeing a million examples you become pretty good at predicting the next token in the sequence "5 + 4 =", does this imply that you have learned something about sheep?

The world of language effectively has no rules. For instance, in programming, a new unseen API may be missing methods on its objects that every similar API the model has ever seen has. So the model will attempt to call those methods, 'hallucinating' code that won't run.

However, the 'real' world has many consistent rules, such as gravity, friction, inertia, object fragility, permanence, and others. This means a robotic system can learn general strategies that apply even to never-before-seen objects, and a transformer neural network will naturally learn some form of compressed strategy in order to learn an effective robotics policy.
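A minimal sketch of that failure mode, assuming two hypothetical classes (`FamiliarClient` and `NewClient`) invented purely for illustration: code written by analogy with the familiar API calls a method that the new API never defined.

```python
class FamiliarClient:
    """Stands in for the many similar APIs seen before (hypothetical)."""
    def connect(self):
        return "connected"

    def close(self):
        return "closed"

class NewClient:
    """An unseen API that happens to lack close() (hypothetical)."""
    def connect(self):
        return "connected"

def shutdown_by_analogy(client):
    """Call close() because every familiar client had one."""
    try:
        return client.close()
    except AttributeError as err:
        # The method was assumed by analogy; it does not exist on this object.
        return f"hallucinated call failed: {err}"

print(shutdown_by_analogy(FamiliarClient()))
print(shutdown_by_analogy(NewClient()))
```

Nothing in the text of the new API forces it to be consistent with the old ones, which is the sense in which language "has no rules" here.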

Example:

What happens if the "carrot" is blue? What if it's a real carrot? A metal object that happens to look like a carrot? An object covered in oil?

Is it possible for the model to attempt a grab based on wrong assumptions and change its strategy once it learns the object is different?

↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-06T16:41:03.318Z

Thanks for the detailed answer! I think that helped.

Does the following make sense?

We use language to talk about events and objects (could be emotions, trees, etc). Since those are things that we have observed, our language will have some patterns that are related to the patterns of the world. However, the patterns in the language are not a perfect representation of the patterns in the world (we can talk about things falling away from our planet, we can talk about fire which consumes heat instead of producing it, etc). An LLM trained on text then learns the patterns of the language but not the patterns of the world. Its "world" is only language, and that's the only thing it can learn about.

Does the above sound true? What are the problems with it?

I am ignoring your point that neural networks can be trained on a host of other things, since there is little discussion around whether or not Midjourney "understands" the images it is generating. However, the same point should apply to other modalities as well.

↑ comment by [deleted] · 2024-02-06T17:04:23.866Z

I think what you are saying simply generalizes to:

"The model learns only what it has to learn to minimize loss in the training environment".

Every weight is there to minimize loss. It won't learn a deep understanding of anything when a shallow understanding works.

And this is somewhat true. It's more complex than this and depends heavily on the specific method used - architecture, hyperparams, distillation or sparsity.

In practice, what does this mean? How do you get the model to have a deep understanding of a topic? In the real world you mostly get this by being forced to have it, aka actually doing a task in a domain.

Note that this is the second step of LLM training: rounds of RLHF that teach the model to do the task of question answering, or RL against another LLM to filter out unwanted responses.

This should also work for real world task domains.

↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-06T18:36:45.756Z

Okay, all of that makes sense. Could this mean that the model didn’t learn anything about the real world, but it learned something about the patterns of words which give it a thumbs up from the RLHFers?

↑ comment by [deleted] · 2024-02-06T21:13:58.549Z

This becomes a philosophical question. I think you are still kinda stuck on a somewhat obsolete view of intelligence. For decades people thought that AGI would require artificial self awareness and complex forms of metacognition.

This is not the case. We care about correct answers on tasks, and we care the most about "0 shot" performance on complex real world tasks. This is where the model gets no specific practice on the task, just a lot of practice to develop the skills needed for each step of the task, plus access to reference materials and a task description.

For example, if you could simulate an auto shop environment, you might train the model to rebuild Chevy engines and have a 0 shot test on rebuilding Ford engines. Any model that scores well on a benchmark of Ford rebuilds has learned a general understanding about the real world.

Then imagine you don't have just 1 benchmark, but 10,000+, over a wide range of real world 0 shot tasks. Any model that can perform at human level across as many of these tests as the average human (derived with sampling, since a human taking the test suite doesn't have time for 10,000 tests) is an AGI.
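The sampling idea can be sketched roughly as follows. This is a toy under stated assumptions, not a real benchmark: the pass/fail outcomes are made up, the helper names `pass_rate` and `matches_human_level` are hypothetical, and "human level" is simplified to "pass rate at least as high as the human's on the tasks the human attempted".

```python
import random

def pass_rate(results, task_ids):
    """Fraction of the given tasks that were passed."""
    return sum(results[t] for t in task_ids) / len(task_ids)

def matches_human_level(model_results, human_results):
    """Compare pass rates only on the tasks the human actually attempted."""
    attempted = list(human_results)
    return pass_rate(model_results, attempted) >= pass_rate(human_results, attempted)

# Made-up outcomes for 10,000 hypothetical zero-shot tasks.
all_tasks = list(range(10_000))
model = {t: t % 5 != 0 for t in all_tasks}      # model runs the whole suite

# The human only has time for a random sample of the suite.
rng = random.Random(0)
sampled = rng.sample(all_tasks, 200)
human = {t: t % 4 != 0 for t in sampled}        # made-up human outcomes

print(matches_human_level(model, human))
```

With a small sample the comparison is noisy, so in practice one would want many humans and many samples before trusting the result.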

Once the model beats all living humans at all tasks in expected value on the suite, the model is ASI.

It doesn't matter how it works internally; the scores on a realistic unseen test are proof the model is AGI.

↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-07T09:16:27.259Z

I think I understand, thank you. For reference, this is the tweet which sparked the question: https://twitter.com/RichardMCNgo/status/1735571667420598737

I was confused as to why you would necessarily need "understanding" and not just simple next token prediction to do what ChatGPT does.

What aspect of the real world do you think the model fails to understand?

No, seriously. Think for a minute and write down your answer before reading the rest.

You just wrote your objection using text. In the limit, an LLM that predicts text perfectly would output just what you wrote when prompted by my question (modulo some outside-text about two philosophers on LessWrong), therefore it's not a valid answer to “what part of the world does the LLM fail to model”.

↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-06T18:47:08.559Z

I don’t really have a coherent answer to that but here it goes (before reading the spoiler): I don’t think the model understands anything about the real world because it never experienced the real world. It doesn’t understand why “a pink flying sheep” is a language construct and not something that was observed in the real world.

Reading my answer, maybe we also don’t have any understanding of the real world; we have just come up with some patterns based on the qualia (tokens) that we have experienced (been trained on). Who is to say whether those patterns match some deeper truth or not? Maybe there is a vantage point from which our “understanding” will look like hallucinations.

I have a vague feeling that I understand the second part of your answer. Not sure though. In that model of yours, are the hallucinations of ChatGPT just the result of an imperfectly trained model? And can a model ever be trained to perfectly predict text?

Thanks for the answer, it gave me some serious food for thought!

↑ comment by Random Developer · 2024-02-06T19:50:02.690Z

You're asking good questions! Let me see if I can help explain what other people are thinking.

> It doesn’t understand why “a pink flying sheep” is a language construct and not something that was observed in the real world.

When talking about cutting edge models, you might want to be careful when making up examples like this. It's very easy to say "LLMs can't do X", when in fact a state-of-the-art model like GPT-4 can actually do it quite well.

For example, here's what happens if you ask ChatGPT about "pink flying sheep". It realizes that sheep are not supposed to be pink or to fly. So it proposes several hypotheses, including:

- Something really weird is happening. But this is unlikely, given what we know about sheep.

- The observer might be on drugs.

- The observer might be talking about a work of art.

- Maybe someone has disguised a drone as a pink flying sheep.

...and so on.

Now, ChatGPT 4 does not have any persistent memories, and it's pretty bad at planning. But for this kind of simple reasoning about how the world works, it's surprisingly reliable.

For an even more interesting demonstration of what ChatGPT can do, I was recently designing a really weird programming language. It didn't work like any popular language. It was based on a mathematical notation for tensors with implicit summations, it had a Rust-like surface syntax, and it ran on the GPU, not the CPU. This particular combination of features is weird enough that ChatGPT can't just parrot back what it learned from the web.

But when I gave ChatGPT a half-dozen example programs in this hypothetical language, it was perfectly capable of writing brand-new programs. It could even recognize the kind of problems that this language might be good for solving, and then make a list of useful programs that couldn't be expressed in my language. It then implemented common algorithms in the new language, in a more or less correct fashion. (It's hard to judge "correct" in a language that doesn't actually exist.)

I have had faculty advisors on research projects who never engaged at this level. This was probably because they couldn't be bothered.

However, please note that I am not claiming that ChatGPT is "conscious" or anything like that. If I had to guess, I would say that it very likely isn't. But that doesn't mean that it can't talk about the world in reasonably insightful ways, or offer mostly-cogent feedback on the design of a weird programming language. When I say "understanding", I don't mean it in some philosophical sense. I mean it in the sense of drawing practical conclusions about unfamiliar scenarios. Or to use a science fiction example, I wouldn't actually care whether SkyNet experiences subjective consciousness. I would care whether it could manufacture armed robots and send them to kill me, and whether it could outsmart me at military strategy.

However, keep in mind that despite GPT-4's strengths, it also has some very glaring weaknesses relative to ordinary humans. I think that the average squirrel has better practical problem-solving skills than GPT-4, for example. And I'm quite happy about this, because I suspect that building actual smarter-than-human AI would be about as safe as smashing lumps of plutonium together.

Does this help answer your question?

↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-06T21:09:32.905Z

I think it does, thank you! In your model, does a squirrel perform better than ChatGPT at practical problem solving simply because it was “trained” on practical problem solving examples, and ChatGPT performs better on language tasks because it was trained on language? Or is there something fundamentally different between them?

↑ comment by Random Developer · 2024-02-06T22:37:51.508Z

I suspect ChatGPT 4's weaknesses come from several sources, including:

- It's effectively amnesiac, in human terms.

- If you look at the depths of the neural networks and the speed with which they respond, they have more in common with human reflexes than deliberate thought. It's basically an actor doing a real-time improvisation exercise, not a writer mulling over each word. The fact that it's as good as it is, well, it's honestly terrifying to me on some level.

- It has never actually lived in the physical world, or had to solve practical problems. Everything it knows comes from text or images.

Most people's first reaction to ChatGPT is to overestimate it. Then they encounter various problems, and they switch to underestimating it. This is because we're used to interacting with humans. But ChatGPT is very unlike a human brain. I think it's actually better than us at some things, but much worse at other key things.

↑ comment by Valentin Baltadzhiev (valentin-baltadzhiev) · 2024-02-07T09:16:39.695Z

Thank you for your answers!
