In Most Markets, Lower Risk Means Higher Reward

post by dawangy · 2021-08-13T17:32:16.585Z · LW · GW · 13 comments

The age-old adage that high risk is associated with high expected return has not held in the U.S. stock market, at least when risk is measured in the academically standard way, by either the beta or the standard deviation of returns. This claim is one of plain numbers, so by itself it is not disputed. However, the reasons for the phenomenon, as well as its practical exploitability, are. Here we will explore different hypotheses about why this is the case, including some that are consistent with the EMH and some that are not.

In my view, the most comprehensive empirical study of a similar result is Frazzini and Pedersen. In this paper the authors examined the low beta phenomenon by constructing hypothetical portfolios that are long low beta stocks and short high beta stocks in market-neutral ratios. Using this approach, they found that low beta was associated with excess returns in the stock markets of 19 out of 20 countries, and that the same held in many other markets, such as those for corporate bonds, treasuries, foreign exchange, and commodities. The in-sample effect was strongest in the Canadian stock market, and the Austrian stock market was the only one studied for which the effect was (very slightly) negative. The out-of-sample construction, based on the methodology developed in sample, was also positive in all nations studied except Sweden. Note that this is slightly different from my headline: it suggests that the "risk-adjusted" returns of low beta stocks are higher than those of high beta stocks, not necessarily that the returns of low beta stocks are higher in general.
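To make "market-neutral ratios" concrete, here is a minimal sketch of one period's return for such a long/short position. It assumes each leg is scaled by the inverse of its beta so that both legs have a beta of one. The function name and all the numbers are hypothetical illustrations, not figures from the paper.

```python
def beta_neutral_return(r_low, r_high, beta_low, beta_high, r_free=0.0):
    """One-period return of a long-low-beta / short-high-beta position.

    Assumption: each leg is scaled by 1/beta, so both legs have a beta of
    one and the combined position has a net market beta of zero.
    """
    if beta_low <= 0 or beta_high <= 0:
        raise ValueError("this scaling only makes sense for positive betas")
    long_leg = (r_low - r_free) / beta_low      # low-beta leg, levered up
    short_leg = (r_high - r_free) / beta_high   # high-beta leg, levered down
    return long_leg - short_leg


# Hypothetical case 1: both baskets earn exactly beta times a 6% market
# excess return (the CAPM prediction), so the position earns nothing.
capm_case = beta_neutral_return(0.5 * 0.06, 1.5 * 0.06, 0.5, 1.5)

# Hypothetical case 2: the low-beta basket earns more than its beta alone
# predicts. The position is profitable even though the low-beta basket's
# raw return (4%) is below the high-beta basket's (8%).
anomaly_case = beta_neutral_return(0.04, 0.08, 0.5, 1.5, r_free=0.01)
```

The second case is the distinction drawn above: a positive return on the beta-neutral position is a statement about risk-adjusted returns, and it can coexist with low beta stocks having lower raw returns.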

The hypothesis of Frazzini and Pedersen is that some investors are leverage constrained, meaning that they are less able to borrow funds in order to increase their exposure to equity. These investors instead seek the higher expected returns dictated by their preferences through exposure to riskier stocks. Because they are willing to buy riskier stocks at discounted expected returns in order to achieve this artificial leverage, the risk-adjusted expected returns of riskier stocks fall. The paper shows that, under the framework of modern portfolio theory, the kinds of investors the authors describe will mathematically produce the kinds of results they observe.

Since the paper was written by researchers working at AQR, it's almost certainly true that the authors believe that the factor is exploitable. Otherwise, it wouldn't be a great advertisement for their collection of low beta mutual funds. For what it's worth, those funds are some of the only equity funds they offer that have actually generated alpha in their lifetime. Most haven't. The CEO of AQR claims he is "in the middle" in terms of his belief in the EMH, but to be honest his words and actions sound more to me like he doesn't believe in it.

In response, Novy-Marx and Velikov dispute the idea that the low beta factor violates the EMH by suggesting that:

- A value weighted version of the factor is closer to what investors can reasonably exploit in practice because firms with very low capitalization are very illiquid and can't be readily turned over without incurring significant costs. This value weighted version shows a lower excess return.

- The most recent additions to the Fama-French 5 factor model (explained later) explain the bulk of the effect, and the remaining outperformance is not significant. In their words, low beta "earns most of these returns by tilting strongly to profitability and investment... The strategy’s alpha relative to the Fama and French five-factor model is only 24 bps/month, and insignificant (t-statistic of 1.63)."
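As a rough check on what "insignificant" means for the quoted figures, the sketch below converts the t-statistic into a two-sided p-value. It uses a standard normal approximation, which is an assumption made here for illustration; the authors' exact test is not described in the quote.

```python
import math


def two_sided_p_value(t_stat):
    """Two-sided p-value under a standard normal approximation."""
    return math.erfc(abs(t_stat) / math.sqrt(2))


alpha_bps = 24.0   # monthly five-factor alpha quoted above, in basis points
t_stat = 1.63      # t-statistic quoted above

# A t-statistic is the estimate divided by its standard error.
implied_std_error_bps = alpha_bps / t_stat

p_value = two_sided_p_value(t_stat)
is_significant_at_5pct = p_value < 0.05
```

Under that approximation the p-value comes out at roughly 0.10, short of the conventional 5% threshold, and the implied standard error is large relative to the 24 bps estimate.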

The Fama-French Factor Model is probably the most academically accepted model of excess market returns, and is often used in defense of the weak EMH. It holds that risk-adjusted returns do vary across stocks, but only because there are other risks, at the level of each company's fundamentals, that investors are compensated for taking on. For example, it posits that investing in small companies has inherent risks that may not be reflected in price volatility, so investors who invest in smaller firms will receive higher risk-adjusted returns for taking on this risk. "Profitability and investment", mentioned in the quote above, are the names of two such risk factors added to the Fama-French model in 2013/2014 and published in 2015.

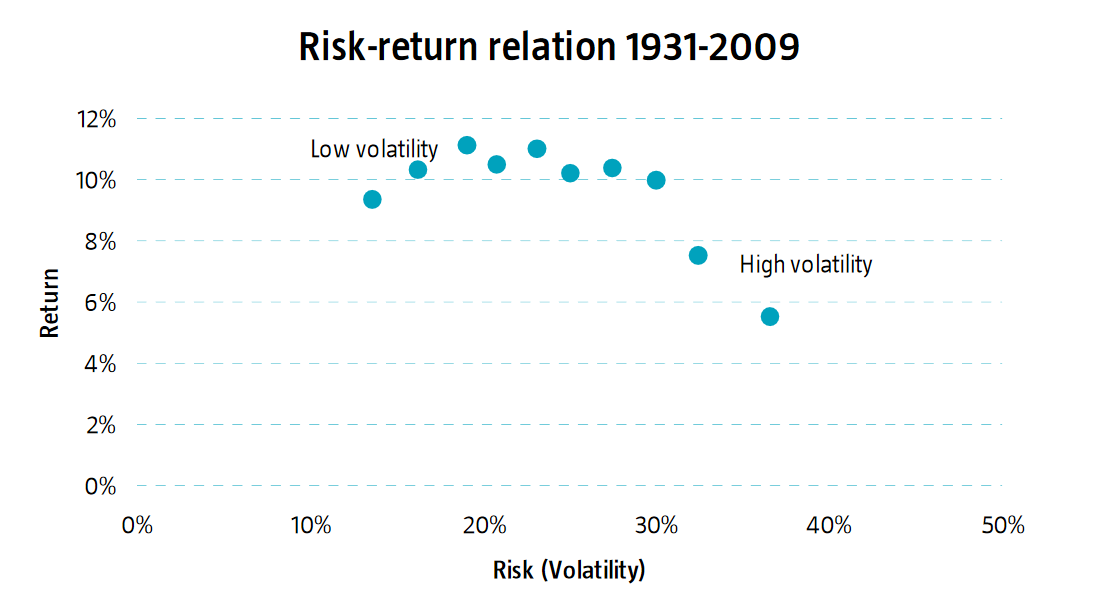

Where do I stand on this issue? Well, to be honest, I have issues with both of the explanations presented. Perhaps it is because I'm not a real researcher like these people and misunderstand what they're saying. Nevertheless, I'll start by airing my complaints about Frazzini and Pedersen. Their hypothesis that leverage-constrained investors rationally choose to invest in higher beta stocks seems suspect to me because in the U.S., stocks with higher beta or volatility do not even have higher absolute returns, before any adjustment for risk.

Admittedly, this chart isn't from a purely academic source, but the strength of the prior evidence is convincing enough that I believe what the authors are saying. Why would leverage-constrained rational investors try to get higher expected returns by tilting their portfolios toward stocks that are probably just worse?

I also don't like the counter-explanation by academics like Novy-Marx (but also some others) that the low beta phenomenon is explained by the latest two factors in the Fama-French Model. Originally the Fama-French model only had 3 fundamental risk factors. If things don't quite work out after the first 3, it seems awfully ad-hoc to just find 2 more and then add them to the back. There also seems to be a belief in academia that getting higher risk adjusted returns through analysis of company fundamentals is more possible than getting them through historical price data. After all, the "weak" form of the EMH states that it is possible to get higher risk adjusted returns through fundamental analysis but not historical price analysis, while the "semi-strong" form states that it is not possible through either fundamental or price analysis, and only possible for those with material nonpublic information. Why isn't there a version of the EMH that states it is only possible through analysis of price history and not through company fundamentals? (For the record, I don't believe this.)

The people who trade actively in industry know that quantitative investors do use price history in making decisions. "Trend following" is one of the most popular CTA strategies and it means exactly what it sounds like, i.e. "buy what went up recently." These trend followers have produced alpha, although markedly less in recent years. Perhaps it is a bit unfair to include high frequency traders in the mix, but they obviously qualify too. So then, if price history is useful according to those in industry whose success is undoubtedly due to more than just luck (like D.E. Shaw, Citadel, RenTech, etc.), why force the conversation back into fundamental risk factors (and two that seem very arbitrary, at that)?

The low volatility phenomenon remains a mystery to me, and I don't find satisfaction in reading results from academia that try to explain its existence.

13 comments

Comments sorted by top scores.

comment by Douglas_Knight · 2021-08-13T19:35:00.355Z · LW(p) · GW(p)

Fama-French is not a "model of market efficiency." Honest economists describe it as the best challenge to Fama's theory of efficiency. Sometimes Fama tries to reconcile this model with efficiency by proposing that there is a fat tail of risk correlated with the anomalies, but this is just a hypothesis, a research program, not something that has any evidence beyond the anomaly.

↑ comment by dawangy · 2021-08-13T19:42:16.614Z · LW(p) · GW(p)

I should perhaps clarify that I am talking about weak form efficiency. In a weak-form efficient market, active management through fundamental analysis can still produce excess returns. The three and five factor models attempt to find fundamental factors that can predict excess returns. This contradicts the stronger forms of EMH, but it stands just fine with the weak form. In addition, academic practitioners use the five factor model often in defense of weak EMH.

To your point, perhaps I should edit the original article though. Investopedia says of the FF model, "there is a lot of debate about whether the outperformance tendency is due to market efficiency or market inefficiency."

comment by AaronF (aaron-franklin-esq) · 2021-08-14T19:13:55.250Z · LW(p) · GW(p)

REMOVED

comment by rossry · 2021-08-22T15:41:49.420Z · LW(p) · GW(p)

> Originally the Fama-French model only had 3 fundamental risk factors. If things don't quite work out after the first 3, it seems awfully ad-hoc to just find 2 more and then add them to the back. There also seems to be a belief in academia that getting higher risk adjusted returns through analysis of company fundamentals is more possible than getting them through historical price data.

I'm a bit confused here -- the core Fama-French insight is that if a given segment of the market has a large common correlation, then it'll be under-invested in by investors constrained by a portfolio risk budget. In this framework, I think it's perfectly valid to identify new factors as the research progresses.

(1) As a toy example, say that we discover all the stocks that start with 'A' are secretly perfectly correlated with each other. So, from a financial perspective, they're one huge potential investment with a massive opportunity to deploy many trillions of dollars of capital.

However, every diversified portfolio manager in the world has developed the uncontrollable shakes -- they thought they had a well-diversified portfolio of 2600 companies, but actually they have 100 units of general-A-company and 2500 well-diversified holdings. Assuming that each stock had the same volatility, that general-A position roughly quintuples their portfolio variance! The stock-only managers start thinking about rotating As into Bs through Zs, and both the leveraged managers and the stocks-plus-bonds managers think about how much they'll have to trim stock leverage, and how much of that should be As vs the rest...
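The variance arithmetic in this toy example can be checked with a short sketch. It assumes equal weights, equal volatility for every stock, and zero correlation among all stocks outside the perfectly correlated block; those assumptions are added here to make the numbers computable and are not spelled out in the comment.

```python
def portfolio_variance(n_total, n_correlated, sigma=1.0):
    """Variance of an equal-weighted portfolio in which n_correlated stocks
    move in lockstep and every other stock is uncorrelated with everything.
    """
    weight = 1.0 / n_total
    # A perfectly correlated block behaves like one position of summed weight.
    block = (n_correlated * weight * sigma) ** 2
    # Uncorrelated holdings contribute their individual variances only.
    rest = (n_total - n_correlated) * (weight * sigma) ** 2
    return block + rest


believed = portfolio_variance(2600, 0)    # what the managers thought they held
actual = portfolio_variance(2600, 100)    # what they hold once the As are linked
variance_ratio = actual / believed        # 12500 / 2600
```

Under these assumptions the ratio is 12500 / 2600, or about 4.8, which is where "quintuples" comes from.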

Ultimately, when it all shakes out, many people have cut their general-A investments significantly, and most have increased their other investments modestly. A's price has fallen a bit. Because A's opportunities to generate returns are still strong, A now has some persistent excess return. Some funds are all-in on A, but they're hugely outweighed by funds that take 3x leveraged bets on B-Z, and so the relative underinvestment and outperformance persist.

(2) In this case, the correlation between the A stocks is analogous to an extreme Fama-French factor (in the sense the original authors mean the term). It "predicts higher risk-adjusted returns", but not in a practically exploitable way, because the returns go along with a "factor"-wide correlation that limits just how much of it you can take on, as an investor with a risk budget.

If you could pick only one stock in this world, you would make it an A. Sure. But any sophisticated portfolio already has as much A as it wants, and so there's no way for them to trade A to eliminate the excess return.

(3) And in this universe, would it be valid for Fama and French to write their initial model, notice this extra correlation (and that it explains higher risk-adjusted returns for A stocks), and tack it on to the other factors of the model? I think that's perfectly valid.

↑ comment by dawangy · 2021-08-28T23:12:47.034Z · LW(p) · GW(p)

It seems ad hoc to me because they continue to add "fundamental" factors to their model, instead of accepting that the risk-return paradox just exists. Why accept 5 fundamental factors when you could just accept one technical factor?

Suppose that in 20 years we discover that although currently in 2021 we are able to explain the risk-return paradox with 5 factors and transaction costs, the risk-return paradox still exists despite 5 factors in this new out of sample data from the future. What do we do then? Find 2 more factors? Or should we just conclude that the market for the period of time up until then was just not efficient in a weak-form sense?

↑ comment by rossry · 2021-08-29T16:20:46.505Z · LW(p) · GW(p)

I think of the Fama-French thesis as having two mostly-separate claims: (1) correlated factors create under-investment + excess return, and (2) the "right" factors to care about are these three -- oops five -- fundamentally-derived ones.

Like you, I'm pretty skeptical on the way (2) is done by F-F, and I think the practice of hunting for factors could (should) be put on much more principled ground.

It's worth keeping in mind, though, that (1) is not just "these features predict excess returns", but "these features have correlation, and that correlation drives excess returns". So it's not the same as saying there's a single excess-return factor, because the model has excess return being driven specifically by correlation and portfolio under-investment.

Example: In hypothetical 2031, it feels valid to me to say "oh, the new 'crypto minus fiat' factor explains a bunch of correlated variance, and I predict it will be accompanied by excess returns". The fact that the factor is new doesn't mean its correlation should do anything different (to portfolio weightings, and thus returns) than other correlated factors do.

I also don't think the binary of "the risk-return paradox exists" vs "the market is efficient in a weak-form sense" is a helpful way to divide hypothesis-space. If there's a given observed amount of persistent excess return, F-F ideas might explain some of it but leave the rest looking like inefficiency. The fact that some inefficiency remains doesn't mean that we should ignore the part that is explainable, though.

↑ comment by dawangy · 2021-09-09T05:11:04.410Z · LW(p) · GW(p)

I think I would agree with you that if you could really find the "right" factors to care about because they capture predictable correlated variance in a sensible way, then we should accept those parts as "explainable". I just find that these FF betas are too unstable and arbitrary for my liking, which is a sentiment you seem to understand.

I focus so much on the risk-return paradox because it is such a simple and consistent anomaly. Maybe one day that won't be true anymore, but I'm just more willing to accept that this phenomenon just exists as a quirk of the marketplace than that FF explains "part of it, and the rest looks like inefficiency". FF could just as easily be too bad a way to explain correlated variance to use in any meaningful way.

↑ comment by rossry · 2021-09-10T02:44:15.081Z · LW(p) · GW(p)

Reasonable beliefs! I feel like we're mostly at a point where our perspectives are mainly separated by mood, and I don't know how to make forward progress from here without more data-crunching than I'm up for at this time.

Thanks for discussing!

comment by JenniferRM · 2021-08-18T03:09:33.399Z · LW(p) · GW(p)

Is there any coherent defense for using price volatility as a proxy for risk?

To me, this move just seems... stupid? Like not tracking what matters at all? I've USED this math in practice as a data scientist, when the product manager wanted to see financial statistics, but I didn't BELIEVE it while I was using it. (My own hunch is that "actual risk" is always inherently subjective, and based on what "you" can predict and how precisely you can predict it, and when you know you can't predict something very well you call that thing "risky".)

If we treat this "attempt at a proxy for risk" as a really really terrible proxy for risk, such that its relation to actual risk is essentially random, then it seems like you should expect that doing statistics on "noise vs payout" would show "whatever the average payout is in general" is also "the average payout for each bucket whose members are defined by having a certain random quantity of this essentially noisy variable".

If I understand correctly, this "it is all basically flat and similar" result is the numerical result in search of an explanation... I wonder if there is some clever reason that "this is basically just noise" doesn't count as a valid answer?
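The "it is all just noise" hypothesis can be illustrated with a small simulation: if the risk score is statistically independent of returns, sorting assets into buckets by that score should give bucket averages close to the overall average. The return distribution, sample size, and seed below are hypothetical choices, and the sketch says nothing about whether real volatility behaves this way.

```python
import random
import statistics


def bucket_means(n_assets=10_000, n_buckets=5, seed=0):
    """Average return per bucket when assets are ranked by a pure-noise score."""
    rng = random.Random(seed)
    # Each asset gets a score and a return drawn independently, so the
    # score carries no information about the return.
    assets = [(rng.random(), rng.gauss(0.08, 0.20)) for _ in range(n_assets)]
    assets.sort(key=lambda pair: pair[0])      # rank by the noisy "risk" score
    size = n_assets // n_buckets
    means = [
        statistics.fmean(ret for _, ret in assets[i * size:(i + 1) * size])
        for i in range(n_buckets)
    ]
    overall = statistics.fmean(ret for _, ret in assets)
    return means, overall


means, overall = bucket_means()
largest_gap = max(abs(m - overall) for m in means)
```

A flat profile across buckets is what this null hypothesis predicts. A persistent tilt in favor of the low-score buckets, which is what the post reports for risk-adjusted returns, is the part that noise alone would not produce.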

↑ comment by dawangy · 2021-08-29T00:16:48.017Z · LW(p) · GW(p)

I don't think that gilch answered the question correctly. His two games A and B are both "additive" games (unless I'm misunderstanding him). The wagers are not a percent of bankroll but are instead a constant figure each time. His mention of the Kelly criterion is relevant to questions about the effect of leverage on returns, but is relevant neither to his example games nor to your question of why volatility is used as a "proxy" for risk.

I'd say that to a large extent you are right to be suspicious of the decision to use variance as a proxy for risk. The choice was definitely a mathematical convenience that works almost all of the time, except when it absolutely doesn't. And when it fails, it can fail in ways that negate all the times it did work. The most commonly used model of a stock's movements is Geometric Brownian Motion, which has only two parameters, µ and σ. Since σ is the sole determinant of the standard deviation of the next minute/day/month/year's move, it is used as the "risk" parameter: it determines the distribution of how much you can expect to make or lose.
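A minimal sketch of why σ ends up playing the role of "risk" in this model: under Geometric Brownian Motion, log returns over a step of length dt are normally distributed with standard deviation σ·√dt, so σ alone sets the size of typical moves. The parameter values, step size, and seed below are hypothetical.

```python
import math
import random
import statistics


def simulate_log_returns(mu, sigma, dt, n_steps, seed=0):
    """Draw log returns of a Geometric Brownian Motion over steps of length dt."""
    rng = random.Random(seed)
    drift = (mu - 0.5 * sigma ** 2) * dt   # deterministic part of each step
    scale = sigma * math.sqrt(dt)          # size of the random part
    return [drift + scale * rng.gauss(0.0, 1.0) for _ in range(n_steps)]


dt = 1 / 252   # one trading day, as a fraction of a year
calm = simulate_log_returns(mu=0.07, sigma=0.10, dt=dt, n_steps=20_000)
wild = simulate_log_returns(mu=0.07, sigma=0.40, dt=dt, n_steps=20_000)

# Annualize the sample standard deviation to recover each sigma.
calm_vol = statistics.stdev(calm) / math.sqrt(dt)
wild_vol = statistics.stdev(wild) / math.sqrt(dt)
```

Both series share the same µ, yet the second one moves four times as much per step. The model has no other way to express danger, which is exactly the limitation being discussed.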

But to get to the heart of the matter (i.e. why people accept and use this model despite its failure to take into account "real" risk), I refer you to this stackexchange post.

↑ comment by gilch · 2021-08-18T06:56:45.569Z · LW(p) · GW(p)

Suppose I offer you two games:

A) You put up ten dollars. I flip a fair coin. Heads, I give it back and pay you one cent. Tails, I keep it all.

B) You put up $100,000. I flip a fair coin. Heads, I give it back and pay you $100. Tails, I keep it all.

You have the edge, right? Which bet is riskier? The only difference is scale.

What if we iterate? With game A, we trade some tens back and forth, but you accumulate one cent per head. It's a great deal. With game B, I'll probably have to put up some Benjamins, but eventually I'll get a streak of enough tails to wipe you out. Then I keep your money because you can't ante up.

The theoretically optimal investing strategy is Kelly, which accounts for this effect. The amount to invest is a function of your payoff distribution and the current size of your bankroll. Your bankroll size is known, but the payoff distribution is more difficult to calibrate. We could start with the past distribution of returns from the asset. Most of the time this looks like a modified normal distribution with much more kurtosis and negative skew.
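For the simplest possible payoff distribution, a single bet that either wins or loses, the Kelly fraction has a well-known closed form. The sketch below uses that textbook formula with hypothetical inputs. It illustrates how bet size depends on both the payoff distribution and the bankroll, and it is not a treatment of the fat-tailed asset returns described above.

```python
def kelly_fraction(p_win, net_odds):
    """Kelly fraction of bankroll for a binary bet.

    p_win    -- probability of winning
    net_odds -- profit per unit staked on a win (the stake is lost otherwise)
    """
    if not 0.0 <= p_win <= 1.0 or net_odds <= 0:
        raise ValueError("need 0 <= p_win <= 1 and net_odds > 0")
    fraction = p_win - (1.0 - p_win) / net_odds
    return max(fraction, 0.0)   # no edge means no bet


def bet_size(bankroll, p_win, net_odds):
    """Dollar amount to stake: the Kelly fraction scaled by current bankroll."""
    return bankroll * kelly_fraction(p_win, net_odds)


# Hypothetical even-money bet with a 60% chance of winning.
small_bankroll_bet = bet_size(1_000, 0.6, 1.0)
large_bankroll_bet = bet_size(100_000, 0.6, 1.0)
no_edge_bet = bet_size(1_000, 0.5, 1.0)
```

The same edge calls for a stake that grows in proportion to the bankroll, and a bet with no edge calls for no stake at all.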

The size of your risk isn't the number of dollars you have invested. It's how much you stand to lose and with what probability.

Volatility is much more predictable in practice than price. One can forecast it with much better accuracy than chance using e.g. a GARCH model.

Given these parameters, you can adjust your bet size for the forecast variance from your volatility model.
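One way to sketch this is with the GARCH(1,1) recursion, in which tomorrow's variance is a weighted mix of a constant, today's squared return, and today's variance. The coefficient values, the volatility target, and the leverage cap below are all hypothetical; in practice the coefficients would be fitted to data, which this sketch does not attempt.

```python
import math


def garch11_next_variance(prev_var, prev_return, omega, alpha, beta):
    """One step of the GARCH(1,1) variance recursion."""
    return omega + alpha * prev_return ** 2 + beta * prev_var


def forecast_vol(returns, omega=1e-6, alpha=0.08, beta=0.90):
    """Next-period volatility forecast after observing a return history."""
    # Start from the long-run variance implied by the coefficients.
    var = omega / (1.0 - alpha - beta)
    for r in returns:
        var = garch11_next_variance(var, r, omega, alpha, beta)
    return math.sqrt(var)


def scaled_position(target_vol, predicted_vol, max_leverage=2.0):
    """Scale exposure so that forecast volatility matches a target, up to a cap."""
    return min(target_vol / predicted_vol, max_leverage)


calm_forecast = forecast_vol([0.001] * 5)    # five quiet days
rough_forecast = forecast_vol([0.03] * 5)    # five turbulent days

calm_position = scaled_position(0.01, calm_forecast)
rough_position = scaled_position(0.01, rough_forecast)
```

After the turbulent stretch the forecast volatility is higher, so the same risk target calls for a smaller position.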

So volatility is most of what you need to know. There's still some black swan risk unaccounted for. Outliers that are both extreme and rare might not have had time to show up in your past distribution data. But in practice, you can cut off the tail risk using insurance like put options, which cost more the higher the forecast volatility is. So volatility is still the main parameter here.

Given this, for a given edge size, it makes sense to set the bet size based on forecast volatility and to pick assets based on the ratio of expected edge to forecast volatility. So something like a Sharpe ratio.
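A minimal sketch of that selection rule, ranking candidates by the ratio of expected edge to forecast volatility. The asset names and figures are invented for illustration only.

```python
def edge_to_vol_ratio(expected_edge, forecast_vol):
    """Sharpe-like ratio of expected excess return to forecast volatility."""
    if forecast_vol <= 0:
        raise ValueError("forecast volatility must be positive")
    return expected_edge / forecast_vol


# Hypothetical candidates: (expected annual edge, forecast annual volatility).
candidates = {
    "asset_a": (0.05, 0.10),
    "asset_b": (0.08, 0.25),
    "asset_c": (0.03, 0.04),
}

ranking = sorted(
    candidates,
    key=lambda name: edge_to_vol_ratio(*candidates[name]),
    reverse=True,
)
```

Here `asset_b` has the largest raw edge but ranks last, because it delivers the least edge per unit of forecast volatility.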

I have so far neglected the benefits of diversification. The noise for uncorrelated bets will tend to cancel out, i.e. reduce volatility. You can afford to take more risk on a bet, i.e. allocate more dollars to it, if you have other uncorrelated bets that can pay off and make up for your losses when you get unlucky.
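The cancellation effect can be put in numbers with the standard formula for an equal-weighted portfolio of bets that all have the same volatility and the same pairwise correlation. That uniform setup is a simplifying assumption made here, and the inputs are hypothetical.

```python
import math


def equal_weight_vol(n_bets, sigma, correlation=0.0):
    """Volatility of an equal-weighted portfolio of n_bets bets, each with
    volatility sigma and a common pairwise correlation."""
    variance = sigma ** 2 * (1.0 / n_bets + (1.0 - 1.0 / n_bets) * correlation)
    return math.sqrt(variance)


single_bet = equal_weight_vol(1, 0.20)
four_uncorrelated = equal_weight_vol(4, 0.20)         # noise partly cancels
four_identical = equal_weight_vol(4, 0.20, 1.0)       # no cancellation at all
```

Four uncorrelated bets halve the volatility of a single bet, while four perfectly correlated bets leave it unchanged, which is why only uncorrelated bets free up room to take more risk.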

comment by bluefalcon · 2021-08-15T03:54:38.094Z · LW(p) · GW(p)

The interesting measure would be absolute returns, not risk-adjusted.
