Is the mind a program?

post by EuanMcLean (euanmclean) · 2024-11-28T09:42:02.892Z · LW · GW · 40 comments

Contents

- The search for a software/hardware separation in the brain
- No software/hardware separation in the brain: a priori arguments
  - Is there an evolutionary incentive for a software/hardware separation?
  - Software/hardware separation has an energy cost
  - Evolution does not separate levels of abstraction
- No software/hardware separation in the brain: empirical evidence
- Conclusion
- Appendix: Defining a simulation of a physical process

This is the second in a sequence of posts scrutinizing computational functionalism (CF). In my last post, I defined a concrete claim that computational functionalists tend to make:

Practical CF: A simulation of a human brain on a classical computer, capturing the dynamics of the brain on some coarse-grained level of abstraction, that can run on a computer small and light enough to fit on the surface of Earth, with the simulation running at the same speed as base reality[1], would cause the same conscious experience as that brain.

I contrasted this with “theoretical CF”, the claim that an arbitrarily high-fidelity simulation of a brain would be conscious. In this post, I’ll scrutinize the practical CF claim.

To evaluate practical CF, I’m going to meet functionalists where they (usually) stand and adopt a materialist position about consciousness. That is to say that I’ll assume all details of a human’s conscious experience are ultimately encoded in the physics of their brain.

The search for a software/hardware separation in the brain

Practical CF requires that there exists some program that can be run on a digital computer that brings about a conscious human experience. This program is the “software of the brain”. The program must simulate some abstraction of the human brain that:

- (a) Is simple enough that it can be simulated on a classical computer on the surface of the Earth,

- (b) Is causally closed from lower-level details of the brain, and

- (c) Encodes all details of the brain’s conscious experience.

Condition (a), simplicity, is required to satisfy the definition of practical CF. This requires an abstraction that excludes the vast majority of the physical degrees of freedom of the brain. Running the numbers[2], an Earth-bound classical computer can only run simulations at a level of abstraction well above atoms, molecules, and biophysics.

Condition (b), causal closure, is required to satisfy the definition of a simulation (given in the appendix). We require the simulation to correctly predict some future (abstracted) brain state if given a past (abstracted) brain state, with no need for any lower-level details not included in the abstraction. For example, if our abstraction only includes neurons firing, then it must be possible to predict which neurons are firing at a later time $t_1$ given the neuron firings at an earlier time $t_0$, without any biophysical information like neurotransmitter trajectories.

Condition (c), encoding conscious experience, is required to claim that the execution of the simulation contains enough information to specify the conscious experience it is generating. If one cannot in principle read off the abstracted brain state and determine the nature of the experience,[3] then we cannot expect the simulation to create an experience of that nature.[4]

Strictly speaking, none of these conditions is binary; each comes in degrees. An abstraction that is somewhat simple, approximately causally closed, and encodes most of the details of the mind could potentially suffice for at least a relaxed version of practical CF. But the harder it is to satisfy these conditions in the brain, the weaker practical CF becomes. The more complex the program, the harder it is to run practically. The weaker the causal closure, the more likely details outside the abstraction are important for consciousness. And the more elements of the mind that get left out of the abstraction, the more different the hypothetical experience would be, and the more likely one of the missing elements is necessary for consciousness.
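To make "approximately causally closed" a little more concrete, here is a minimal toy sketch (not a model of the brain). It assumes a tiny deterministic system whose microstate is a pair `(spike, chem)`, and an abstraction that keeps only `spike`. The function `closure_degree`, the two dynamics `step_closed` and `step_entangled`, and the majority-successor score are all hypothetical choices made for illustration; other measures of approximate closure are possible.

```python
from collections import Counter, defaultdict
from itertools import product


def closure_degree(states, step, abstract):
    """Fraction of microstates whose abstracted successor equals the most
    common abstracted successor among microstates with the same abstracted
    state. 1.0 means the abstraction is perfectly causally closed here."""
    successors = defaultdict(Counter)
    for s in states:
        successors[abstract(s)][abstract(step(s))] += 1
    predictable = sum(max(c.values()) for c in successors.values())
    return predictable / len(states)


# Hypothetical microstate: (spike, chem), each either 0 or 1.
states = list(product([0, 1], repeat=2))


def abstract(s):
    # The abstraction keeps the spike bit and throws the chemistry away.
    return s[0]


def step_closed(s):
    # Spiking evolves on its own: the low-level detail never matters.
    spike, chem = s
    return (1 - spike, chem)


def step_entangled(s):
    # The next spike depends on the discarded chemical detail.
    spike, chem = s
    return (spike ^ chem, chem)


print(closure_degree(states, step_closed, abstract))     # 1.0
print(closure_degree(states, step_entangled, abstract))  # 0.5
```

In the first case the spike-only description predicts itself perfectly. In the second, the discarded detail decides the outcome, so the best spike-only prediction is right only half the time.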

The search for brain software could be considered a search for a “software/hardware distinction” in the brain (cf. Marr’s levels of analysis). The abstraction which encodes consciousness is the software level; all other physical information that the abstraction throws out is the hardware level.

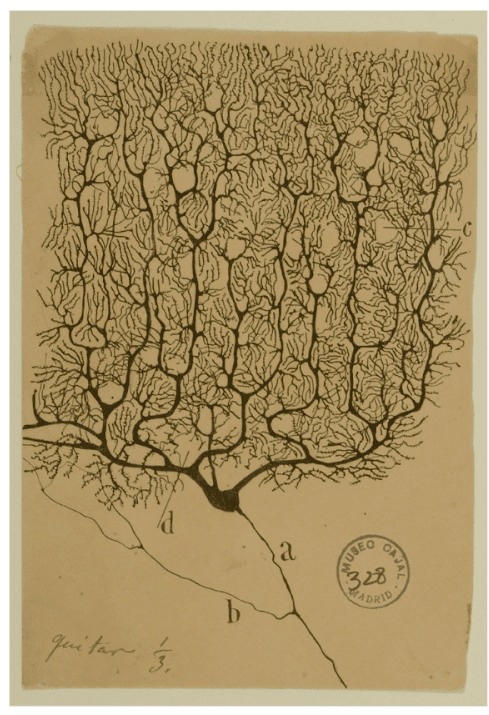

Does such an abstraction exist?[5] A first candidate abstraction is the neuron doctrine: the idea that the mind is governed purely by patterns of neuron spiking. This abstraction seems simple enough (it could be captured with an artificial neural network of the same size: requiring around parameters).

But is it causally closed? If there are extra details that causally influence neuron firings (like the state of glial cells, densities of neurotransmitters and the like), we would have to modify the abstraction to include those extra details. Similarly, if the mind is not fully specified by neuron firings, we’d have to include whatever extra details influence the mind. But if we include too many extra details, we lose simplicity. We walk a tightrope.

So the big question is: how many extra details (if any) must mental software include?

There’s still a lot of uncertainty in this question. But all things considered, I think the evidence points towards an absence of such an abstraction that satisfies these three conditions.[6] In other words, there is no software/hardware separation in the brain.

No software/hardware separation in the brain: a priori arguments

Is there an evolutionary incentive for a software/hardware separation?

Should we expect selective pressures to result in a brain that exhibits causally separated software & hardware? To answer this, we need to know what the separation is useful for. Why did we build software/hardware separation into computers?

Computers are designed to run the same programs as other computers with different hardware. You can download whatever operating system you want and run any program you want, without having to modify the programs to account for the details of your specific processor.

There is no adaptive need for this property in brains. There is no requirement for the brain to download and run new software.[7] Nor did evolution plan ahead to the glorious transhumanist future so we can upload our minds to the cloud. The brain only ever needs to run one program: the human mind. So there is no harm in implementing the mind in a way arbitrarily entangled with the hardware.

Software/hardware separation has an energy cost

Not only is there no adaptive reason for a software/hardware separation, but there is an evolutionary disincentive. Causally separated layers of abstraction are energetically expensive.

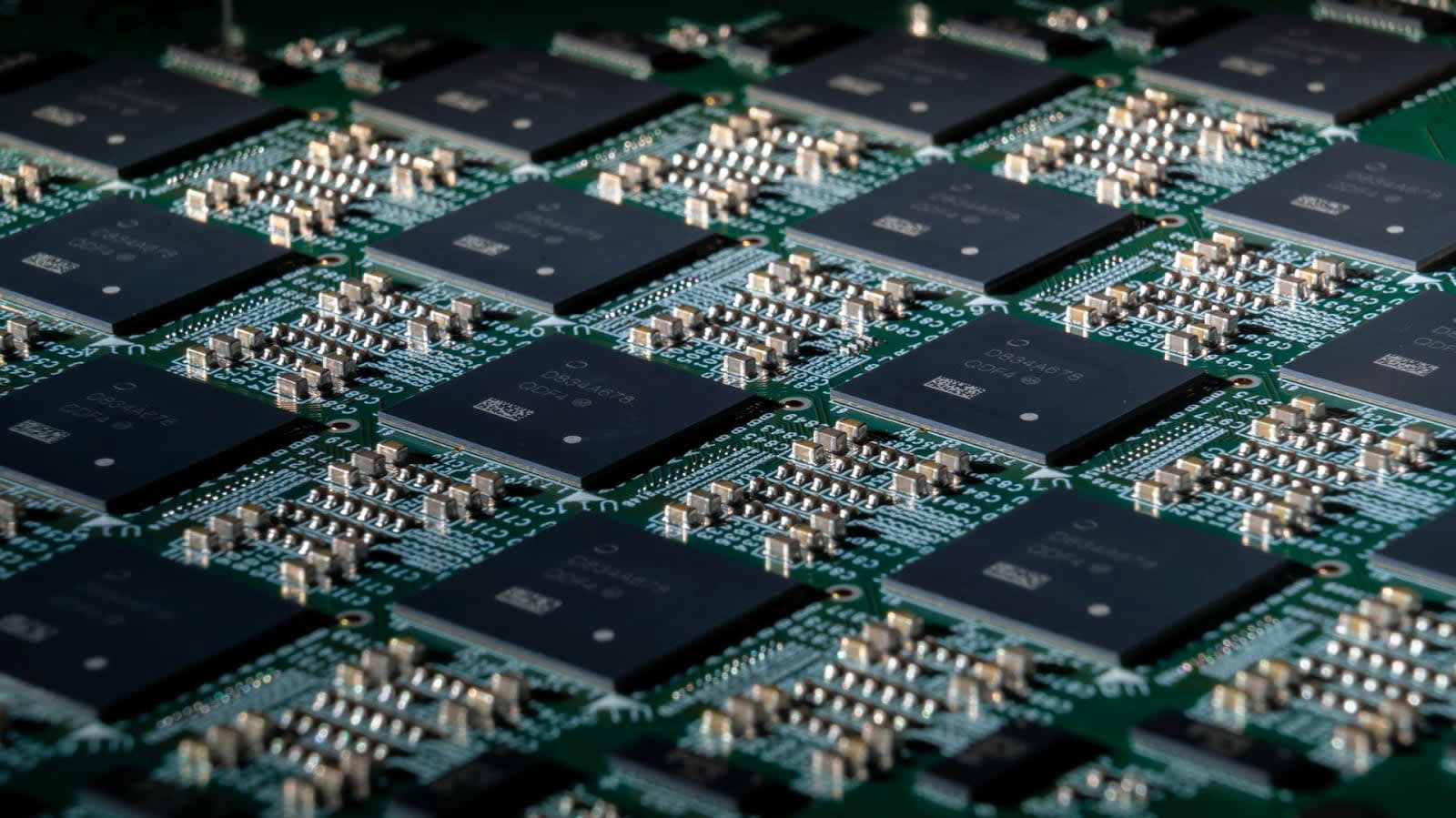

Normally with computers, the more specialized hardware is to software, the more energy efficient the system can be. More specialized hardware means there are fewer general-purpose overheads (in the form of e.g. layers of software abstraction). Neuromorphic computers, with a more intimate relationship between hardware and software, can be 10,000 times more energy efficient than CPUs or GPUs.

Evolution does not separate levels of abstraction

The incremental process of natural selection results in brains with a kind of entangled internal organisation that William Wimsatt calls generative entrenchment. When a new mechanism evolves, it does not evolve as a module cleanly separated from the rest of the brain and body. Instead, it will co-opt whatever pre-existing processes are available, be that on the neural, biological or physical level. This results in a tangled web of processes, each sensitive to the rest.

Imagine a well-trained software developer, Alice. Alice writes clean code. When Alice is asked to add a feature, she writes a new module for that feature, cleanly separated from the rest of the code’s functionality. She can safely modify one module without affecting how another runs.

Now imagine a second developer, Bob. He’s a recent graduate with little formal experience and not much patience. Bob’s first job is to add a new feature to a codebase, but quickly, since the company has promised the release of the new feature tomorrow. Bob makes the smallest possible series of changes to get the new feature running. He throws some lines into a bunch of functions, introducing new contingencies between modules. After many rushed modifications the codebase is entangled with itself. This leads to entanglement between levels of abstraction.

If natural selection were going to build a codebase, would it be more like Alice or Bob? It would be more like Bob. Natural selection does not plan ahead. It doesn’t worry about the maintainability of its code. We can expect natural selection to result in a web of contingencies between different levels of abstraction.[8]

No software/hardware separation in the brain: empirical evidence

Let’s take the neuron doctrine as a starting point. The neuron doctrine is a suitably simple abstraction that could be practically simulated. It plausibly captures all the aspects of the mind. But the neuroscientific evidence is that neuron spiking is not causally closed, even if you include a bunch of extra detail.

Since the neuron doctrine was first developed over a hundred years ago, a richer picture of neuroscience has emerged in which both the mind and neuron firing have contingencies on many biophysical aspects of the brain. These include precise trajectories of neurotransmitters, densities in tens of thousands of ion channels, temperature fluctuations, glial details, blood flow, the propagation of ATP, homeostatic neuron spiking, and even bacteria and mitochondria. See Cao 2022 and Godfrey-Smith 2016 for more.

Take ATP as an example. Can we safely abstract the dynamics of ATP out of our mental program, by, say, taking the average ATP density and ignoring fluctuations? It’s questionable whether such an abstraction would be causally closed, because of all the ways ATP densities can influence neuron firing and message passing:

ATP also plays a role in intercellular signalling in the brain. Astrocytic calcium waves, which have been shown to modulate neural activity, propagate via ATP. ATP is also degraded in the extracellular space into adenosine, which inhibits neural spiking activity, and seems to be a primary determinant of sleep pressure in mammals. Within neurons, ATP hydrolysis is one of the sources of cyclic AMP, which is involved in numerous signaling pathways within the cell, including those that modulate the effects of several neurotransmitters producing effects on the neuronal response to subsequent stimuli in less than a second. Cyclic AMP is also involved in the regulation of intracellular calcium concentrations from internal stores, which can have immediate and large effects on the efficacy of neurotransmitter release from a pre-synaptic neuron.

(Cao 2022)

From this quote I count four separate ways neuron firing could be sensitive to fluctuations in ATP densities, and one way that conscious experience is sensitive to them. The more ways ATP (and other biophysical contingencies) entangle with neuron firing, the harder it is to imagine averaging out all these factors without losing predictive power on the neural and mental levels.

If we can’t exclude these contingencies, could we include them in our brain simulation? The first problem is that this results in a less simple program. We might now need to simulate fields of ATP densities and the trajectories of trillions of neurotransmitters.

But more importantly, the speed of propagation of ATP molecules (for example) is sensitive to a web of more physical factors like electromagnetic fields, ion channels, thermal fluctuations, etc. If we ignore all these contingencies, we lose causal closure again. If we include them, our mental software becomes even more complicated.

If we have to pay attention to all the contingencies of all the factors described in Cao 2022, we end up with an astronomical array of biophysical details that result in a very complex program. It may be intractable to capture all such dynamics in a simulation on Earth.

Conclusion

I think that Practical CF, the claim that we could realistically recreate the conscious experience of a brain with a classical computer on Earth, is probably wrong.

Practical CF requires the existence of a simple, causally closed and mind-explaining level of abstraction in the brain, a “software/hardware separation”. But such a notion melts under theoretical and empirical scrutiny. Evolutionary constraints and biophysical contingencies imply an entanglement between levels of abstraction in the brain, preventing any clear distinction between software and hardware.

But if we just include all the physics that could possibly be important for the mind in the simulation, would it be conscious then? I’ll try to answer this in the next post.

Appendix: Defining a simulation of a physical process

A program can be defined as[9]: a partial function $p: \Sigma^* \to \Sigma^*$, where $\Sigma$ is a finite alphabet (the "language" in which the program is written), and $\Sigma^*$ represents all possible strings over this alphabet (the set of all possible inputs and outputs).

Denote a physical process that starts with initial state $s_1 \in S$ and ends with final state $s_2 \in S$ by $s_1 \to s_2$, where $S$ is the space of all possible physical configurations (specifying physics down to, say, the Planck length). Denote the space of possible abstractions as $\mathcal{A}$, where each $A \in \mathcal{A}$ is a map $A: S \to S_A$, such that $A(s)$ contains some subset of the information in $s$.

I consider a program $p$ to be running a simulation of physical process $s_1 \to s_2$ up to abstraction $A$ if $p$ outputs $A(s_2)$ when given $A(s_1)$ for all $s_1$ and $s_2$.
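As a rough illustration of this definition, the sketch below checks it by brute force on a toy system. Everything in it is a hypothetical stand-in: the "physics" is a deterministic 12-step cycle, `phase` and `half` are two candidate abstractions, and `advance_phase` and `stay` are two candidate programs. It assumes deterministic dynamics, so each initial state has exactly one final state.

```python
def simulates(program, physics, abstraction, states):
    """True if, for every initial state s1 with final state s2 = physics(s1),
    the program maps the abstracted s1 to the abstracted s2."""
    return all(
        program(abstraction(s1)) == abstraction(physics(s1))
        for s1 in states
    )


# Hypothetical physical process: a clock with 12 positions.
states = range(12)


def physics(s):
    return (s + 1) % 12


def phase(s):
    # Abstraction 1: position modulo 3.
    return s % 3


def half(s):
    # Abstraction 2: which half of the dial the hand is in.
    return s // 6


def advance_phase(a):
    # Candidate program for the `phase` abstraction.
    return (a + 1) % 3


def stay(a):
    # Candidate program for the `half` abstraction.
    return a


print(simulates(advance_phase, physics, phase, states))  # True
print(simulates(stay, physics, half, states))            # False
```

The `phase` abstraction admits a program because its next value depends only on its current value. The `half` abstraction does not: whether the hand crosses into the other half depends on the exact position, which the abstraction has thrown away, so no function of `half` alone can satisfy the definition.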

[1] 1 second of simulated time is computed at least every second in base reality.

[2] Recall from the last post that an atom-level simulation would cost at least FLOPS. The theoretical maximum FLOPS of an Earth-bound classical computer is something like . So the abstraction must be, in a manner of speaking, times simpler than the full physical description of the brain.

[3] If armed with a perfect understanding of how brain states correlate with experiences.

[4] I’m cashing in my “assuming materialism” card.

[5] Alternatively, what’s the “best” abstraction we can find – how much can an abstraction satisfy these conditions?

[6] Alternatively, the abstraction that best satisfies the three conditions does not satisfy them as well as one might naively think.

[7] One may argue that brains do download and run new software in the sense that we can learn new things and adapt to different environments. But this is really the same program (the mind) simply operating with different inputs, just as a web browser is the same program regardless of which website you visit.

[8] This analogy doesn’t capture the full picture, since programmers don’t have power over hardware. A closer parallel would be one where Alice and Bob are building the whole system from scratch including the hardware. Bob wouldn’t just entangle different levels of software abstraction, he would entangle the software and the hardware.

[9] Other equivalent definitions, e.g. operations of a Turing machine or a sequence of lambda expressions, are available.

[10] The question of whether such a simulation contains consciousness at all, of any kind, is a broader discussion that pertains to a weaker version of CF that I will address later on in this sequence.

40 comments

Comments sorted by top scores.

comment by Rafael Harth (sil-ver) · 2024-11-28T10:49:29.807Z · LW(p) · GW(p)

No software/hardware separation in the brain: empirical evidence

I feel like the evidence in this section isn't strong enough to support the conclusion. Neuroscience is like nutrition -- no one agrees on anything, and you can find real people with real degrees and reputations supporting just about any view. Especially if it's something as non-committal as "this mechanism could maybe matter". Does that really invalidate the neuron doctrine? Maybe if you don't simulate ATP, the only thing that changes is that you have gotten rid of an error source. Maybe it changes some isolated neuron firings, but the brain has enough redundancy that it basically computes the same functions.

Or even if it does have a desirable computational function, maybe it's easy to substitute with some additional code.

I feel like the required standard of evidence is to demonstrate that there's a mechanism-not-captured-by-the-neuron-doctrine that plays a major computational role, not just any computational role. (Aren't most people talking about neuroscience still basically assuming that this is not the case?)

We can expect natural selection to result in a web of contingencies between different levels of abstraction.[6]

Mhh yeah I think the plausibility argument has some merit.

Replies from: euanmclean↑ comment by EuanMcLean (euanmclean) · 2024-11-28T16:15:00.621Z · LW(p) · GW(p)

Especially if it's something as non-committal as "this mechanism could maybe matter". Does that really invalidate the neuron doctrine?

I agree each of the "mechanisms that maybe matter" is tenuous by itself; the argument I'm trying to make here is hits-based. There are so many mechanisms that maybe matter that the chance of one of them mattering in a relevant way is quite high.

Replies from: zac-hatfield-dodds↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-11-28T21:58:06.596Z · LW(p) · GW(p)

I think your argument also has to establish that the cost of simulating any that happen to matter is also quite high.

My intuition is that capturing enough secondary mechanisms, in sufficient-but-abstracted detail that the simulated brain is behaviorally normal (e.g. a sim of me not-more-different than a very sleep-deprived me), is likely to be both feasible by your definition and sufficient for consciousness.

Replies from: euanmclean↑ comment by EuanMcLean (euanmclean) · 2024-11-29T12:28:39.264Z · LW(p) · GW(p)

If I understand your point correctly, that's what I try to establish here

the speed of propagation of ATP molecules (for example) is sensitive to a web of more physical factors like electromagnetic fields, ion channels, thermal fluctuations, etc. If we ignore all these contingencies, we lose causal closure again. If we include them, our mental software becomes even more complicated.

i.e., the cost becomes high because you need to keep including more and more elements of the dynamics.

comment by Charlie Steiner · 2024-11-28T16:46:55.153Z · LW(p) · GW(p)

Causal closure is impossible for essentially every interesting system, including classical computers (my laptop currently has a wiring problem that definitely affects its behavior despite not being the sort of thing anyone would include in an abstract model).

Are there any measures of approximate simulation that you think are useful here? Computer science and nonlinear dynamics probably have some.

Replies from: euanmclean, sil-ver, green_leaf↑ comment by EuanMcLean (euanmclean) · 2024-11-28T20:24:38.148Z · LW(p) · GW(p)

Yes, perfect causal closure is technically impossible, so it comes in degrees. My argument is that the degree of causal closure of possible abstractions in the brain is less than one might naively expect.

Are there any measures of approximate simulation that you think are useful here?

I am yet to read this but I expect it will be very relevant! https://arxiv.org/abs/2402.09090

↑ comment by Rafael Harth (sil-ver) · 2024-11-28T21:33:54.895Z · LW(p) · GW(p)

Sort of not obvious what exactly "causal closure" means if the error tolerance is not specified. We could differentiate literally 100% perfect causal closure, almost perfect causal closure, and "approximate" causal closure. Literally 100% perfect causal closure is impossible for any abstraction due to every electron exerting nonzero force on any other electron in its future lightcone. Almost perfect causal closure (like 99.9%+) might be given for your laptop if it doesn't have a wiring issue(?), maybe if a few more details are included in the abstraction. And then whether or not there exists an abstraction for the brain with approximate causal closure (95% maybe?) is an open question.

I'd argue that almost perfect causal closure is enough for an abstraction to contain relevant information about consciousness, and approximate causal closure probably as well. Of course there's not really a bright line between those two, either. But I think insofar as OP's argument is one against approximate causal closure, those details don't really matter.

↑ comment by green_leaf · 2024-11-28T17:09:35.546Z · LW(p) · GW(p)

I'm thinking the causal closure part is more about the soul not existing than about anything else.

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2024-11-28T18:39:20.684Z · LW(p) · GW(p)

Nah, it's about formalizing "you can just think about neurons, you don't have to simulate individual atoms." Which raises the question "don't have to for what purpose?", and causal closure answers "for literally perfect simulation."

Replies from: green_leaf↑ comment by green_leaf · 2024-11-29T10:34:38.914Z · LW(p) · GW(p)

The neurons/atoms distinction isn't causal closure. Causal closure means there is no outside influence entering the program (other than, let's say, the sensory inputs of the person).

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2024-11-29T18:06:01.029Z · LW(p) · GW(p)

Euan seems to be using the phrase to mean (something like) causal closure (as the phrase would normally be used e.g. in talking about physicalism) of the upper level of description - basically saying every thing that actually happens makes sense in terms of the emergent theory, it doesn't need to have interventions from outside or below.

Replies from: green_leaf↑ comment by green_leaf · 2024-11-30T00:36:22.948Z · LW(p) · GW(p)

I know the causal closure of the physical as the principle that nothing non-physical influences physical stuff, so that would be the causal closure of the bottom level of description (since there is no level below the physical), rather than the upper.

So if you mean by that that it's enough to simulate neurons rather than individual atoms, that wouldn't be "causal closure" as Wikipedia calls it.

comment by JBlack · 2024-11-28T23:22:19.712Z · LW(p) · GW(p)

The conclusion does not follow from the argument.

The argument suggests that it is unlikely that a perfect replica of the functioning of a specific human brain can be emulated on a practical computer. The conclusion generalizes that out to no conscious emulation of a human brain, at all.

These are enormously different claims, and neither follows from the other.

Replies from: euanmclean↑ comment by EuanMcLean (euanmclean) · 2024-11-29T11:57:38.560Z · LW(p) · GW(p)

The statement I'm arguing against is:

Practical CF: A simulation of a human brain on a classical computer, capturing the dynamics of the brain on some coarse-grained level of abstraction, that can run on a computer small and light enough to fit on the surface of Earth, with the simulation running at the same speed as base reality, would cause the conscious experience of that brain.

i.e., the same conscious experience as that brain. I titled this "is the mind a program" rather than "can the mind be approximated by a program".

Whether or not a simulation can have consciousness at all is a broader discussion I'm saving for later in the sequence, and is relevant to a weaker version of CF.

I'll edit to make this more clear.

comment by Drake Thomas (RavenclawPrefect) · 2024-11-30T01:50:18.959Z · LW(p) · GW(p)

The theoretical maximum FLOPS of an Earth-bound classical computer is something like .

Is this supposed to have a different base or exponent? A single H100 already gets like FLOP/s.

comment by Vladimir_Nesov · 2024-11-28T23:30:39.231Z · LW(p) · GW(p)

A first candidate abstraction is the neuron doctrine: the idea that the mind is governed purely by patterns of neuron spiking. This abstraction seems simple enough (it could be captured with an artificial neural network of the same size: requiring around $10^{20}$ parameters).

Human brain only holds about 200-300 trillion synapses, getting to 1e20 asks for almost a million parameters per synapse.

Replies from: euanmclean↑ comment by EuanMcLean (euanmclean) · 2024-11-29T14:23:57.281Z · LW(p) · GW(p)

thanks, corrected

comment by Steven Byrnes (steve2152) · 2024-12-01T01:05:07.031Z · LW(p) · GW(p)

In my last post, I defined a concrete claim that computational functionalists tend to make:

Practical CF: A simulation of a human brain on a classical computer, capturing the dynamics of the brain on some coarse-grained level of abstraction, that can run on a computer small and light enough to fit on the surface of Earth, with the simulation running at the same speed as base reality, would cause the same conscious experience as that brain.

From reading this comment, I understand that you mean the following:

- Practical CF, more explicitly: A simulation of a human brain on a classical computer, capturing the dynamics of the brain on some coarse-grained level of abstraction, that can run on a computer small and light enough to fit on the surface of Earth, with the simulation running at the same speed as base reality, would cause the same conscious experience as that brain, in the specific sense of thinking literally the exact same sequence of thoughts in the exact same order, in perpetuity.

I agree that “practical CF” as thus defined is false—indeed I think it’s so obviously false that this post is massive overkill in justifying it.

But I also think that “practical CF” as thus defined is not in fact a claim that computational functionalists tend to make.

Let’s put aside simulation and talk about an everyday situation.

Suppose you’re the building manager of my apartment, and I’m in my apartment doing work. Unbeknownst to me, you flip a coin. If it’s heads, then you set the basement thermostat to 20°C. If it’s tails, then you set the basement thermostat to 20.1°C. As a result, the temperature in my room is slightly different in the two scenarios, and thus the temperature in my brain is slightly different, and this causes some tiny number of synaptic vesicles to release differently under heads versus tails, which gradually butterfly-effect into totally different trains of thought in the two scenarios, perhaps leading me to make a different decision on some question where I was really ambivalent and going back and forth, or maybe having some good idea in one scenario but not the other.

But in both scenarios, it’s still “me”, and it’s still “my mind” and “my consciousness”. Do you see what I mean?

So anyway, when you wrote “A simulation of a human brain on a classical computer…would cause the same conscious experience as that brain”, I initially interpreted that sentence as meaning something more like “the same kind of conscious experience”, just as I would have “the same kind of conscious experience” if the basement thermostat were unknowingly set to 20°C versus 20.1°C.

(And no I don’t just mean “there is a conscious experience either way”. I mean something much stronger than that—it’s my conscious experience either way, whether 20°C or 20.1°C.)

Do you see what I mean? And under that interpretation, I think that the statement would be not only plausible but also a better match to what real computational functionalists usually believe.

Replies from: sil-ver, euanmclean, andrei-alexandru-parfeni↑ comment by Rafael Harth (sil-ver) · 2024-12-02T13:44:43.491Z · LW(p) · GW(p)

I agree that “practical CF” as thus defined is false—indeed I think it’s so obviously false that this post is massive overkill in justifying it.

But I also think that “practical CF” as thus defined is not in fact a claim that computational functionalists tend to make.

The term 'functionalist' is overloaded. A lot of philosophical terms are overloaded, but 'functionalist' is the most egregiously overloaded of all philosophical terms because it refers to two groups of people with two literally incompatible sets of beliefs:

- (1) the people who are consciousness realists and think there's this well-defined consciousness stuff exhibited from human brains, and also that the way this stuff emerges depends on what computational steps/functions/algorithms are executed (whatever that means exactly)

- (2) the people who think consciousness is only an intuitive model, in which case functionalism is kinda trivial and not really a thing that can be proved or disproved, anyway

Unless I'm misinterpreting things here (and OP can correct me if I am), the post is arguing against (1), but you are (2), which is why you're talking past each other here. (I don't think this sequence in general is relevant to your personal views, which is what I also tried to say here.) In the definition you rephrased

would cause the same conscious experience as that brain, in the specific sense of thinking literally the exact same sequence of thoughts in the exact same order, in perpetuity.

... consciousness realists will read the 'thinking' part as referring to thinking in the conscious mind, not to thinking in the physical brain. So this reads as obviously false to you because you don't think there is a conscious mind separate from the physical brain, and the thoughts in the physical brain aren't 'literally exactly the same' in the biological brain vs. the simulation -- obviously! But the (1) group does, in fact, believe in such a thing, and their position does more or less imply that it would be thinking the same thoughts.

I believe this is what OP is trying to gesture at as well with their reply here.

↑ comment by EuanMcLean (euanmclean) · 2024-12-02T11:43:36.051Z · LW(p) · GW(p)

Thanks for the comment Steven.

Your alternative wording of practical CF is indeed basically what I'm arguing against (although, we could interpret different degrees of the simulation having the "exact" same experience, and I think the arguments here don't only argue against the strongest versions but also weaker versions, depending on how strong those arguments are).

I'll explain a bit more why I think practical CF is relevant to CF more generally.

Firstly, functionalists commonly say things like

Computational functionalism: the mind is the software of the brain. (Piccinini)

Which, when I take at face value, is saying that there is actually a program being implemented by the brain that is meaningful to point to (i.e. it's not just a program in the sense that any physical process could be a program if you simulate it (assuming digital physics etc)). That program lives on a level of abstraction above biophysics.

Secondly, computational functionalism, taken at face value again, says that all details of the conscious experience should be encoded in the program that creates it. If this isn't true, then you can't say that conscious experience is that program because the experience has properties that the program does not.

Putnam advances an opposing functionalist view, on which mental states are functional states. (SEP)

He proposes that mental activity implements a probabilistic automaton and that particular mental states are machine states of the automaton’s central processor. (SEP)

the mind is constituted by the programs stored and executed by the brain (Piccinini)

I can accept the charge that this is still a stronger version of CF than a number of functionalists subscribe to. Which is fine! My plan was to address quite narrow claims at the start of the sequence and move onto broader claims later on.

I'd be curious to hear which of the above steps you think miss the mark on capturing common CF views.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2024-12-02T14:42:44.777Z · LW(p) · GW(p)

I guess I shouldn’t put words in other people’s mouths, but I think the fact that years-long trains-of-thought cannot be perfectly predicted in practice because of noise is obvious and uninteresting to everyone, I bet including to the computational functionalists you quoted, even if their wording on that was not crystal clear.

There are things that the brain does systematically and robustly by design, things which would be astronomically unlikely to happen by chance. E.g. the fact that I move my lips to emit grammatical English-language sentences rather than random gibberish. Or the fact that humans wanted to go to the moon, and actually did so. Or the fact that I systematically take actions that tend to lead to my children surviving and thriving, as opposed to suffering and dying.

That kind of stuff, which my brain does systematically and robustly, is what makes me me. My memories, goals, hopes and dreams, skills, etc. The fact that I happened to glance towards my scissors at time 582834.3 is not important, but the robust systematic patterns are.

And the reason that my brain does those things systematically and robustly is because the brain is designed to run an algorithm that does those things, for reasons that can be explained by a mathematical analysis of that algorithm. Just as a sorting algorithm systematically sorts numbers for reasons that can be explained by a mathematical analysis of that algorithm.

I don’t think “software versus hardware” is the right frame. I prefer “the brain is a machine that runs a certain algorithm”. Like, what is software-versus-hardware for a mechanical calculator? I dunno. But there are definitely algorithms that the mechanical calculator is implementing.

So we can talk about what is the algorithm that the brain is running, and why does it work? Well, it builds models, and stores them, and queries them, and combines them, and edits them, and there’s a reinforcement learning actor-critic thing, blah blah blah.

Those reasons can still be valid even if there’s some unpredictable noise in the system. Think of a grandfather clock: the second hand will robustly move 60× faster than the minute hand, by design, even if there’s some noise in the pendulum that affects the speed of both. Or think of an algorithm that involves randomness (e.g. MCMC), and hence any given output is unpredictable, but the algorithm still robustly and systematically does stuff that is a priori specifiable and would be astronomically unlikely to happen by chance. Or think of the Super Mario 64 source code compiled to different chip architectures that use different size floats (for example). You can play both, and they will both be very recognizably Super Mario 64, but any given exact sequence of button presses will eventually lead to divergent trajectories on the two systems. (This kind of thing is known to happen in tool-assisted speedruns: they’ll get out of sync on different systems, even when it’s “the same game” to all appearances.)

But it’s still reasonable to say that the Super Mario 64 source code is specifying an algorithm, and all the important properties of Super Mario 64 are part of that algorithm, e.g. what does Mario look like, how does he move, what are the levels, etc. It’s just that the core algorithm is not specified at such a level of detail that we can pin down what any given infinite sequence of button presses will do. That depends on unimportant details like floating point rounding.
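As a rough illustration of the floating-point point (a toy sketch, not anything taken from the Super Mario 64 code), the snippet below runs one update rule at two float widths. The logistic map with parameter 3.9, the starting value 0.2, and the helper names `to_f32`, `step`, and `run` are all assumptions chosen for the example. The two runs agree closely at first and then drift apart, while the property fixed by the rule itself (values stay between 0 and 1) holds in both.

```python
import struct


def to_f32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def step(x: float) -> float:
    """One update of the logistic map; a stand-in for 'the game logic'."""
    return 3.9 * x * (1.0 - x)


def run(x0: float, n_steps: int, single_precision: bool) -> list[float]:
    """Iterate the same rule, optionally rounding to 32 bits after each step."""
    x = to_f32(x0) if single_precision else x0
    trajectory = [x]
    for _ in range(n_steps):
        x = step(x)
        if single_precision:
            x = to_f32(x)
        trajectory.append(x)
    return trajectory


wide = run(0.2, 500, single_precision=False)
narrow = run(0.2, 500, single_precision=True)
gaps = [abs(a - b) for a, b in zip(wide, narrow)]

print("largest gap in the first 5 steps:", max(gaps[:6]))
print("largest gap over the whole run:", max(gaps))
print("both runs stay in [0, 1]:", all(0.0 <= v <= 1.0 for v in wide + narrow))
```

The same source rule produces different exact trajectories on the two "machines", yet anything you can prove from the rule alone survives the change in rounding.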

I think this is compatible with how people use the word “algorithm” in practice. Like, CS people will casually talk about “two different implementations of the MCMC algorithm”, and not just “two different algorithms in the MCMC family of algorithms”.
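To make the MCMC example concrete, here is a minimal sketch of a random-walk Metropolis sampler, assuming a standard normal target and a uniform proposal; the function name `metropolis`, the seeds, and the sample count are illustrative choices, not anything from the discussion above. Two runs with different seeds produce different individual samples, but both robustly recover the mean and variance that the algorithm is designed to produce.

```python
import math
import random


def metropolis(n_samples: int, seed: int, step_size: float = 1.0) -> list[float]:
    """Random-walk Metropolis sampler targeting a standard normal density."""
    rng = random.Random(seed)
    x = 0.0
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.uniform(-step_size, step_size)
        # Log of the target density ratio p(proposal) / p(x) for N(0, 1).
        log_ratio = 0.5 * (x * x - proposal * proposal)
        # Accept with probability min(1, ratio); otherwise keep the current x.
        if rng.random() < math.exp(min(0.0, log_ratio)):
            x = proposal
        samples.append(x)
    return samples


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def variance(values: list[float]) -> float:
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)


chain_a = metropolis(20_000, seed=1)
chain_b = metropolis(20_000, seed=2)

print("first samples differ:", chain_a[:10] != chain_b[:10])
print("means:", mean(chain_a), mean(chain_b))
print("variances:", variance(chain_a), variance(chain_b))
```

Any given sample is unpredictable without the seed, but the summary statistics land near 0 and 1 in both runs for reasons that follow from analysing the algorithm, not from the particular random draws.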

That said, I guess it’s possible that Putnam and/or Piccinini were describing things in a careless or confused way viz. the role of noise impinging upon the brain. I am not them, and it’s probably not a good use of time to litigate their exact beliefs and wording. ¯\_(ツ)_/¯

↑ comment by sunwillrise (andrei-alexandru-parfeni) · 2024-12-01T03:31:45.065Z · LW(p) · GW(p)

Edit: This comment misinterpreted [LW(p) · GW(p)] the intended meaning of the post.

Practical CF, more explicitly: A simulation of a human brain on a classical computer, capturing the dynamics of the brain on some coarse-grained level of abstraction, that can run on a computer small and light enough to fit on the surface of Earth, with the simulation running at the same speed as base reality, would cause the same conscious experience as that brain, in the specific sense of thinking literally the exact same sequence of thoughts in the exact same order, in perpetuity.

I... don't think this is necessarily what @EuanMcLean [LW · GW] meant? At the risk of conflating his own perspective and ambivalence on this issue with my own [LW · GW], this is a question of personal identity and whether the computationalist perspective, generally considered a "reasonable enough" assumption to almost never be argued for explicitly [LW(p) · GW(p)] on LW, is correct. As I wrote [LW(p) · GW(p)] a while ago on Rob's post [LW · GW]:

As TAG has written [LW(p) · GW(p)] a number of times, the computationalist thesis seems not to have been convincingly (or even concretely) argued for in any LessWrong post or sequence (including Eliezer's Sequences). What has been argued for, over and over again, is physicalism, and then more and more rejections of dualist conceptions of souls.

That's perfectly fine, but "souls don't exist and thus consciousness and identity must function on top of a physical substrate" is very different from "the identity of a being is given by the abstract classical computation performed by a particular (and reified) subset of the brain's electronic circuit," and the latter has never been given compelling explanations or evidence. This is despite the fact that the particular conclusions that have become part of the ethos of LW about stuff like brain emulation, cryonics etc are necessarily reliant on the latter, not the former.

As a general matter, accepting physicalism as correct would naturally lead one to the conclusion that what runs on top of the physical substrate works on the basis of... what is physically there (which, to the best of our current understanding, can be represented through Quantum Mechanical probability amplitudes [LW · GW]), not what conclusions you draw from a mathematical model that abstracts away quantum randomness in favor of a classical picture, the entire brain structure in favor of (a slightly augmented version of) its connectome, and the entire chemical make-up of it in favor of its electrical connections. As I have mentioned, that is a mere model that represents a very lossy compression of what is going on; it is not the same [LW · GW] as the real thing, and conflating the two is an error that has been going on here for far too long. Of course, it very well might be the case [LW · GW] that Rob and the computationalists are right about these issues, but the explanation up to now should make it clear why it is on them to provide evidence for their conclusion.

I recognize you wrote [LW(p) · GW(p)] in response to me [LW · GW] a while ago that you "find these kinds of conversations to be very time-consuming and often not go anywhere." I understand this, and I sympathize to a large extent: I also find these discussions very tiresome, which became part of why I ultimately did not engage too much with some of the thought-provoking responses to the question I posed a few months back. So it's totally ok for us not to get into the weeds of this now (or at any point, really). Nevertheless, for the sake of it, I think the "everyday experience" thermostat example does not seem like an argument in favor of computationalism over physicalism-without-computationalism, since the primary generator of my intuition that my identity would be the same in that case is the literal physical continuity of my body throughout that process. I just don't think there is a "prosaic" (i.e., bodily-continuity-preserving) analogue or intuition pump to the case of WBE or similar stuff in this respect.

Anyway, in light of footnote 10 in the post ("The question of whether such a simulation contains consciousness at all, of any kind, is a broader discussion that pertains to a weaker version of CF that I will address later on in this sequence"), which to me draws an important distinction between a brain-simulation having some consciousness/identity versus having the same consciousness/identity as that of whatever (physically-instantiated) brain it draws from, I did want to say that this particular post seems focused on the latter and not the former, which seems quite decision-relevant to me:

Replies from: steve2152

jbash [LW(p) · GW(p)]: These various ideas about identity don't seem to me to be things you can "prove" or "argue for". They're mostly just definitions that you adopt or don't adopt. Arguing about them is kind of pointless.

sunwillrise [LW(p) · GW(p)]: I absolutely disagree. The basic question of "if I die but my brain gets scanned beforehand and emulated [? · GW], do I nonetheless continue living (in the sense of, say, anticipating [LW · GW] the same kinds of experiences)?" seems the complete opposite of pointless, and the kind of conundrum in which agreeing or disagreeing with computationalism leads to completely different answers.

Perhaps there is a meaningful linguistic/semantic component to this, but in the example above, it seems understanding the nature of identity is decision-theoretically relevant for how one should think about whether WBE would be good or bad (in this particular respect, at least).

↑ comment by Steven Byrnes (steve2152) · 2024-12-02T01:46:38.344Z · LW(p) · GW(p)

I should probably let EuanMcLean speak for themselves but I do think “literally the exact same sequence of thoughts in the exact same order” is what OP is talking about. See the part about “causal closure”, and “predict which neurons are firing at t1 given the neuron firings at t0…”. The latter is pretty unambiguous IMO: literally the exact same sequence of thoughts in the exact same order.

I definitely didn’t write anything here that amounts to a general argument for (or against) computationalism. I was very specifically responding to this post. :)

comment by Nate Showell · 2024-11-29T20:51:35.690Z · LW(p) · GW(p)

Another piece of evidence against practical CF is that, under some conditions, the human visual system is capable of seeing individual photons. This finding demonstrates that in at least some cases, the molecular-scale details of the nervous system are relevant to the contents of conscious experience.

comment by Dagon · 2024-11-29T16:59:06.344Z · LW(p) · GW(p)

This comes down to a HUGE unknown - what features of reality need to be replicated in another medium in order to result in sufficiently-close results?

I don't know the answer, and I'm pretty sure nobody else does either. We have a non-existence proof: it hasn't happened yet. That's not much evidence that it's impossible. The fact that there's no actual progress toward it IS some evidence, but it's not overwhelming.

Personally, I don't see much reason to pursue it in the short-term. But I don't feel a very strong need to convince others.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-11-29T17:41:49.985Z · LW(p) · GW(p)

I don't know the answer, and I'm pretty sure nobody else does either.

I see similar statements all the time (no one has solved consciousness yet / no one knows whether LLMs/chickens/insects/fish are conscious / I can only speculate and I'm pretty sure this is true for everyone else / etc.) ... and I don't see how this confidence is justified. The idea seems to be that no one can have found the correct answers to the philosophical problems yet because if they had, those answers would immediately make waves and soon everyone on LessWrong would know about it. But it's like... do you really think this? People can't even agree on whether consciousness is a well-defined thing or a lossy abstraction [LW · GW]; do we really think that if someone had the right answers, they would automatically convince everyone of them?

There are other fields where those kinds of statements make sense, like physics. If someone finds the correct answer to a physics question, they can run an experiment and prove it. But if someone finds the correct answer to a philosophical question, then they can... try to write essays about it explaining the answer? Which maybe will be slightly more effective than essays arguing for any number of different positions because the answer is true?

I can imagine someone several hundred years ago having figured out, purely based on first-principles reasoning, that life is not a crisp category in the territory but just a lossy conceptual abstraction. I can imagine them being highly confident in this result because they've derived it for correct reasons and they've verified all the steps that got them there. And I can imagine someone else throwing their hands up and saying "I don't know what mysterious force is behind the phenomenon of life, and I'm pretty sure no one else does, either".

Which is all just to say -- isn't it much more likely that the problem has been solved, and there are people who are highly confident in the solution because they have verified all the steps that led them there, and they know with high confidence which features need to be replicated to preserve consciousness... but you just don't know about it because "find the correct solution" and "convince people of a solution" are mostly independent problems, and there's just no reason why the correct solution would organically spread?

(As I've mentioned, the "we know that no one knows" thing is something I see expressed all the time, usually just stated as a self-evident fact -- so I'm equally arguing against everyone else who's expressed it. This just happens to be the first time that I've decided to formulate my objection.)

Replies from: Dagon, weightt-an↑ comment by Dagon · 2024-11-29T18:06:44.254Z · LW(p) · GW(p)

But if someone finds the correct answer to a philosophical question, then they can... try to write essays about it explaining the answer? Which maybe will be slightly more effective than essays arguing for any number of different positions because the answer is true?

I think this is a crux. To the extent that it's a purely philosophical problem (a modeling choice, contingent mostly on opinions and consensus about "useful" rather than "true"), posts like this one make no sense. To the extent that it's expressed as propositions that can be tested (even if not now, it could be described how it will resolve), it's NOT purely philosophical.

This post appears to be about an empirical question - can a human brain be simulated with sufficient fidelity to be indistinguishable from a biological brain. It's not clear whether OP is talking about an arbitrary new person, or if they include the upload problem as part of the unlikelihood. It's also not clear why anyone cares about this specific aspect of it, so maybe your comments are appropriate.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-11-29T18:27:02.810Z · LW(p) · GW(p)

What about if it's a philosophical problem that has empirical consequences? I.e., suppose answering the philosophical questions tells you enough about the brain that you know how hard it would be to simulate it on a digital computer. In this case, the answer can be tested -- but not yet -- and I still think you wouldn't know if someone had the answer already.

Replies from: Dagon↑ comment by Dagon · 2024-11-29T18:47:04.375Z · LW(p) · GW(p)

I'd call that an empirical problem that has philosophical consequences :)

And it's still not worth a lot of debate about far-mode possibilities, but it MAY be worth exploring what we actually know and what we can test in the near-term. They've fully(*) emulated some brains - https://openworm.org/ is fascinating in how far it's come very recently. They're nowhere near to emulating a brain big enough to try to compare WRT complex behaviors from which consciousness can be inferred.

* "fully" is not actually claimed nor tested. Only the currently-measurable neural weights and interactions are emulated. More subtle physical properties may well turn out to be important, but we can't tell yet if that's so.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-11-29T19:58:13.886Z · LW(p) · GW(p)

I'd call that an empirical problem that has philosophical consequences :)

That's arguable, but I think the key point is that if the reasoning used to solve the problem is philosophical, then a correct solution is quite unlikely to be recognized as such just because someone posted it somewhere. Even if it's in a peer-reviewed journal somewhere. That's the claim I would make, anyway. (I think when it comes to consciousness, whatever philosophical solution you have will probably have empirical consequences in principle, but they'll often not be practically measurable with current neurotech.)

Replies from: Dagon↑ comment by Dagon · 2024-11-30T03:43:45.944Z · LW(p) · GW(p)

Hmm, still not following, or maybe not agreeing. I think that "if the reasoning used to solve the problem is philosophical" then "correct solution" is not available. "useful", "consensus", or "applicable in current societal context" might be better evaluations of a philosophical reasoning.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-11-30T10:46:49.028Z · LW(p) · GW(p)

Couldn't you imagine that you use philosophical reasoning to derive accurate facts about consciousness, which will come with insights about the biological/computational structure of consciousness in the human brain, which will then tell you things about which features are critical / how hard human brains are to simulate / etc.? This would be in the realm of "empirical predictions derived from philosophical reasoning that are theoretically testable but not practically testable".

I think most solutions to consciousness should be like that, although I'd grant that it's not strictly necessary. (Integrated Information Theory might be an example of a theory that's difficult to test even in principle if it were true.)

Replies from: Dagon↑ comment by Dagon · 2024-11-30T16:35:48.518Z · LW(p) · GW(p)

Couldn't you imagine that you use philosophical reasoning to derive accurate facts about consciousness,

My imagination is pretty good, and while I can imagine that, it's not about this universe or my experience in reasoning and prediction.

Can you give an example in another domain where philosophical reasoning about a topic led to empirical facts about that topic? Not meta-reasoning about science, but actual reasoning about a real thing?

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-11-30T17:32:41.032Z · LW(p) · GW(p)

Can you give an example in another domain where philosophical reasoning about a topic led to empirical facts about that topic?

Yes -- I think evolution is a pretty clean example. Darwin didn't have any more facts than other biologists or philosophers, and he didn't derive his theory by collecting facts; he was just doing better philosophy than everyone else. His philosophy led to a large set of empirical predictions, those predictions were validated, and that's how and when the theory was broadly accepted. (Edit: I think that's a pretty accurate description of what happened, maybe you could argue with some parts of it?)

I think we should expect that consciousness works out the same way -- the problem has been solved, the solution comes with a large set of empirical predictions, it will be broadly accepted once the empirical evidence is overwhelming, and not before. (I would count camp #1 [LW · GW] broadly as a 'theory' with the empirical prediction that no crisp divide between conscious and unconscious processing exists in the brain, and that consciousness has no elegant mathematical structure in any meaningful sense. I'd consider this empirically validated once all higher cognitive functions have been reverse-engineered as regular algorithms with no crisp/qualitative features separating conscious and unconscious cognition.)

(GPT-4o says that before evolution was proposed, the evolution of humans was considered a question of philosophy, so I think it's quite analogous in that sense.)

Replies from: gwern, Dagon↑ comment by gwern · 2024-11-30T19:22:35.885Z · LW(p) · GW(p)

Edit: I think that's a pretty accurate description of what happened, maybe you could argue with some parts of it?

I think one could argue with a lot of your description of how Charles Darwin developed his theory of evolution after the H.M.S. Beagle expedition and decades of compiling examples and gradually elaborating a theory before he finally finished Origin of Species.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-11-30T19:55:28.626Z · LW(p) · GW(p)

Fair enough; the more accurate response would have been that evolution might be an example, depending on how the theory was derived (which I don't know). Maybe it's not actually an example.

The crux would be when exactly he got the idea; if the idea came first and the examples later, then it's still largely analogous (imo); if the examples were causally upstream of the core idea, then not so much.

↑ comment by Dagon · 2024-11-30T19:10:20.461Z · LW(p) · GW(p)

That's a really good example, thank you! I see at least some of the analogous questions, in terms of physical measurements and variance in observations of behavioral and reported experiences. I'm not sure I see the analogy in terms of qualia and other unsure-even-how-to-detect phenomena.

↑ comment by weightt an (weightt-an) · 2024-11-30T11:20:59.096Z · LW(p) · GW(p)

I can imagine someone several hundred years ago having figured out, purely based on first-principles reasoning, that life is not a crisp category in the territory but just a lossy conceptual abstraction. I can imagine them being highly confident in this result because they've derived it for correct reasons and they've verified all the steps that got them there. And I can imagine someone else throwing their hands up and saying "I don't know what mysterious force is behind the phenomenon of life, and I'm pretty sure no one else does, either".

But is this a correct conclusion? I have an option right now to make a civilization out of brains-in-vats in a sandbox simulation similar to our reality but with a clear, useful distinction between life and non-life. Like, suppose there is a "mob" class.

Like, then, this person there, inside it, who figured out that life and non-life are the same thing is wrong in a local, useful sense, and correct in a useless global sense (like, everything is code / matter in outer reality). People inside the simulation who found the actual working thing that is life scientifically would laugh at them 1000 simulated years later and present it as an example of the presumptuousness of philosophers. And I agree with them; it was a misapplication.

↑ comment by Rafael Harth (sil-ver) · 2024-11-30T12:13:21.138Z · LW(p) · GW(p)

I see your point, but I don't think this undermines the example? Like okay, the 'life is not a crisp category' claim has nuance to it, but we could imagine the hypothetical smart philosopher figuring out that as well. I.e., life is not a crisp category in the territory, but it is an abstraction that's well-defined in most cases and actually a useful category because of this <+ any other nuance that's appropriate>.

It's true that the example here (figuring out that life isn't a binary/well-defined thing) is not as practically relevant as figuring out stuff about consciousness. (Nonetheless I think the property of 'being correct doesn't entail being persuasive' still holds.) I'm not sure if there is a good example of an insight that has been derived philosophically, is now widely accepted, and has clear practical benefits. (Free Will and implications for the morality of punishment are pretty useful imo, but they're not universally accepted so not a real example, and also no clear empirical predictions.)

Replies from: weightt-an↑ comment by weightt an (weightt-an) · 2024-11-30T13:11:13.852Z · LW(p) · GW(p)

Well, it's one thing to explore the possibility space and quite another to pinpoint where you are in it. Many people will confidently say they are at X or at Y, but all that they do is propose some idea and cling to it irrationally. In aggregate, in hindsight, there will quite possibly be people who bonded to the right idea. But it's all a mix of Gettier cases and true negative cases.

And very often it's not even "incorrect", it's "neither correct nor incorrect". Often there is a frame-of-reference shift such that all the questions posed before it turn out to be completely meaningless. Like "what speed?": you need more context, as we now know.

And then science pinpoints where you are by actually digging into the subject matter. It's a kind of sad state of "diverse hypothesis generation" when it's a lot easier to just go into it blind.