Requisite Variety

post by Stephen Fowler (LosPolloFowler) · 2023-04-21T08:07:28.751Z


Epistemics:

The first two sections cover ideas from early control theory that are over half a century old. The last section, on alignment, is speculative.

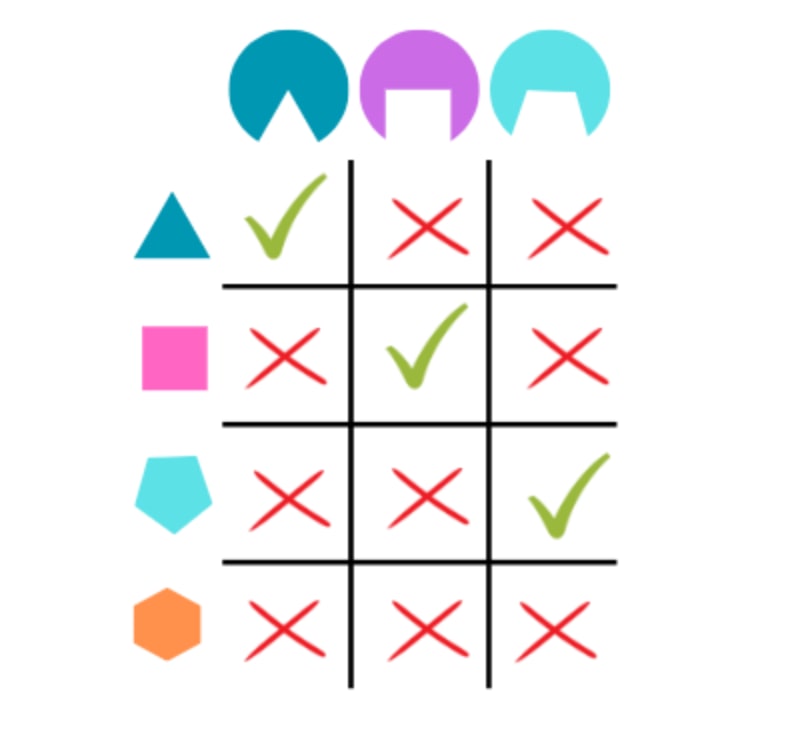

Illustration inspired by Norman and Bar-Yam, 2018

TL;DR:

Requisite Variety concerns the straightforward relationship between the number of actions we can take and the number of different actions required to mitigate problems that could arise. I use it to argue that maintaining control of an increasingly complex system of AIs will require humans to dramatically improve their cognitive "throughput" or ultimately surrender control.

“Both of these qualities of the complex system—heterogeneity in the parts, and richness of interaction between them—have the same implication: the quantities of information that flow, either from system to observer or from part to part, are much larger than those that flow when the scientist is physicist or chemist. And it is because the quantities are large that the limitation is likely to become dominant in the selection of the appropriate scientific strategy.”

- W. Ross Ashby, “Requisite variety and its implications for the control of complex systems”

"Can the pace of technological progress continue to speed up indefinitely? Is there not a point where humans are unable to think fast enough to keep up with it?"

- Ray Kurzweil, "The Law of Accelerating Returns"

Requisite Variety is a very fancy sounding name for a very pedestrian concept.

It's so simple that as the idea clicks you might be tempted to think "oh hang on, this post began with 2 separate quotes, there must be some deeper nuance at play". But no! It is really that simple!

In plain English:

‘Requisite’ = Required

‘Variety’ = Number of elements.

"Requisite Variety" concerns the "required number of options" you need to control or mitigate the impact of some complex system. Let's say there are 3 different ways a system could behave badly and each requires a separate response. If you are stuck with only 2 responses, we can immediately conclude that this is insufficient. It doesn't matter what the responses are.

It's almost tautological.
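As a minimal sketch of the counting argument (the behaviour and response labels are hypothetical placeholders, not anything from Ashby), the snippet below enumerates every way of giving each bad behaviour its own distinct response. With 3 behaviours and only 2 responses, no such assignment exists, whatever the responses happen to be.

```python
from itertools import permutations


def one_to_one_assignments(bad_behaviours, responses):
    """List every way to give each bad behaviour its own distinct response."""
    return [
        dict(zip(bad_behaviours, chosen))
        for chosen in permutations(responses, len(bad_behaviours))
    ]


# Hypothetical labels, chosen only for illustration.
bad_behaviours = ["behaviour_1", "behaviour_2", "behaviour_3"]
two_responses = ["response_1", "response_2"]
three_responses = two_responses + ["response_3"]

print(len(one_to_one_assignments(bad_behaviours, two_responses)))    # 0
print(len(one_to_one_assignments(bad_behaviours, three_responses)))  # 6
```

The function never inspects what a response does; only the counts matter, which is the sense in which the idea is almost tautological.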

Here's a cute story about a Roomba.

Suppose you’ve just purchased a new automated vacuum cleaner, designed to clean your room. You set it down in the living room. You whip out the remote and notice it only has one button. You click the button. “CLEANING LIVING ROOM”. Fantastic.

20 minutes later, you set the Roomba down in your bedroom. You click the button. “CLEANING LIVING ROOM” it says, and rolls away toward the living room. After a few more tests, you work out pretty quickly that this single button appears to tell it to do exactly one thing, and that’s cleaning the living room. For illustrative purposes, your apartment has a bedroom, a living room, a bathroom and a kitchen.

The replacement arrives a week or so later. Its remote has 2 buttons. “CLEANING LIVING ROOM” squeaks the Roomba. 20 minutes later you try the second button. “CLEANING KITCHEN”. You hope in vain that there is some way to remap the buttons, but there is not.

A few weeks and many frustrated calls with support later, another replacement arrives. This time, when you open the box, you let out a deep sigh.

You already know you’ll need to return it. There are only 3 buttons.

Why is it that you knew the remote wasn't a suitable replacement?

Simple. You had more rooms than buttons on the remote. Assuming the standard of each button being assigned to a single room remained, you couldn't possibly signal the Roomba to clean each unique room in your house.[1]

The amount of control over the outcome is bounded by the number of possible commands.
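The Roomba story can be sketched in a few lines. This is an illustrative toy, assuming each distinct command selects exactly one room. The function names and the `max_presses` parameter are hypothetical. `max_presses` extends the count to sequences of button presses, the workaround raised in footnote [1].

```python
def distinct_commands(num_buttons: int, max_presses: int = 1) -> int:
    """Count distinct messages made of 1..max_presses button presses."""
    return sum(num_buttons ** length for length in range(1, max_presses + 1))


def can_address_every_room(num_rooms: int, num_buttons: int, max_presses: int = 1) -> bool:
    """True if each room can be given its own command."""
    return distinct_commands(num_buttons, max_presses) >= num_rooms


ROOMS = ["bedroom", "living room", "bathroom", "kitchen"]

for buttons in (1, 2, 3, 4):
    print(buttons, can_address_every_room(len(ROOMS), buttons))
# 1 False
# 2 False
# 3 False
# 4 True

# Allowing sequences of up to two presses: 3 + 3**2 = 12 distinct messages.
print(distinct_commands(3, max_presses=2))  # 12
```

Longer messages raise the bound, but for any fixed maximum length the number of addressable rooms is still finite.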

Suppose we have a set $D$ of all possible disturbances and a set $A$ of all possible actions.

We have a function $f: D \times A \to U$, where $U$ is the set of utility outcomes.

We will simplify the discussion by taking $U$ to be the set $\{0, 1\}$: either things are ok ($1$) or they are not ($0$). This is the outcome for the properties that we care about; it is not intended to keep track of the full state of the system after applying our action to a disturbance.

$R(d)$ is the "set of appropriate responses to $d$".

In other words,

$$R(d) = \{a \in A : f(d, a) = 1\}$$

Given a finite set of disturbances $D' \subseteq D$ and a set of actions $B \subseteq A$, we say that "$B$ controls $D'$" iff

$$\forall d \in D', \ \exists b \in B \text{ such that } b \in R(d)$$

The "Requisite Variety" of a set of disturbances is the minimum cardinality of a set of responses that controls it.
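The definitions above can be checked by brute force on a small example. The sketch below is one possible reading, not a canonical implementation. It assumes an outcome function `f(d, a)` that returns `True` when action `a` leaves things ok under disturbance `d`. The function names and the toy table `ok_pairs` are hypothetical.

```python
from itertools import combinations


def appropriate_responses(f, d, actions):
    """The actions whose outcome on disturbance d is acceptable."""
    return {a for a in actions if f(d, a)}


def controls(f, B, disturbances):
    """True if every disturbance has at least one appropriate response in B."""
    return all(any(f(d, b) for b in B) for d in disturbances)


def requisite_variety(f, disturbances, actions):
    """Smallest size of a subset of `actions` that controls `disturbances`.

    Brute force over subsets by increasing size. Returns None if even the
    full action set fails to control the disturbances.
    """
    actions = list(actions)
    for size in range(len(actions) + 1):
        for B in combinations(actions, size):
            if controls(f, B, disturbances):
                return size
    return None


# Hypothetical toy table of (disturbance, action) pairs that turn out ok.
ok_pairs = {("d1", "a1"), ("d2", "a1"), ("d2", "a2"), ("d3", "a3")}
f = lambda d, a: (d, a) in ok_pairs
disturbances = ["d1", "d2", "d3"]
actions = ["a1", "a2", "a3"]

print(sorted(appropriate_responses(f, "d2", actions)))  # ['a1', 'a2']
print(controls(f, ["a1", "a2"], disturbances))          # False
print(requisite_variety(f, disturbances, actions))      # 2
```

Here one action handles two disturbances, so the requisite variety (2) is smaller than the number of disturbances (3). When every disturbance needs its own response, as in the Roomba story, the two numbers coincide. The search is exponential in the number of actions, so it is only suitable for toy cases.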

Implication For Alignment

"The reward signals that researchers send into this datacenter would then increasingly be based on crude high-level observations like “Do humans seem to be better off?” and “Are profits going up?”, since humans would be increasingly unable to accurately judge more granular decisions like “How many widgets should our Tulsa, OK factory manufacture this fiscal quarter?” in isolation."

- Ajeya Cotra, "Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover [LW · GW]"

Imagine a scenario in which we have a group of humans monitoring a deployed AGI system and providing real-time feedback. Let's be very nice to the humans and have the AGI be completely truthful in its reporting. We will also assume that the AGI is perfect in its ability to identify potential problems.

When it encounters a decision that it is unsure of, the AGI will request a response from the humans. The "disturbance" here is the current state of the AGI system. Unfortunately, a team of humans can only think so fast, which means that, as the system continues to grow in both size and complexity, the humans may be unable to respond to every request in time.

One of the following might then occur:

- As the variety of the disturbances continues to increase, human cognitive capacity is insufficient to keep pace.

  Not a very fun scenario. Unable to steer the AGI with enough precision, control falls out of our hands. Even with the AGI system wanting us to be in control, we simply think too slowly.

- The variety of disturbances continues to increase. Humans are able to enhance their cognitive capacity to keep up.

  Cyborgs baby! [LW · GW] Humans are able to increase the speed at which they can process information, managing to keep up with the ever-growing AGI.

- The variety of disturbances for each unit of time remains constant, despite the system continuing to increase in complexity.

  Requisite Variety doesn't concern itself with fully controlling the system, just with ensuring we have a system that is satisfactory. Here we might imagine a scenario in which the number of "important decisions" that need to be made by the humans remains roughly constant in time, despite the system continuing to grow in complexity.

  We would be extremely lucky for this to be the case.

- The variety of disturbances is capped. We communicate alignment information for a finite period of time and this is sufficient.

  In this scenario, alignment forever doesn't really require monitoring. We just need to communicate a finite string of information pointing to alignment, and we can sit back and let the AGI do the rest. Broadly, I see this possibility as being divisible into two subcategories:

  - In the worlds in which life is good, the amount of information required to describe the alignment target is relatively small. Our response might involve us rewiring the AGI's internals, as described by John Wentworth here [LW · GW]. Riffing off Wentworth, the closer the abstractions used by the AGI are to our own, the less data we would need to communicate, something I wrote about recently here [LW · GW].

  - In worlds in which life is less good, the amount of information required to describe a satisfactory human alignment target is very, very high. This will possibly be the case if the Natural Abstraction Hypothesis doesn't hold. In this scenario the amount of data you need is in the same ballpark as the amount of data required to describe an emulation of a human brain.

    In this somewhat bad (but hopefully unlikely) outcome, one or many humans end up needing to be consulted by the AGI for major decisions. As the AGI system continues to increase in complexity, it simulates more and more virtual brains to consult.
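The first three scenarios above can be illustrated with a toy backlog model. This is only a sketch under strong assumptions: time is discrete, each step the AGI refers some number of decisions to the humans, and the humans resolve at most a fixed number per step. All function names and rates are made up for illustration and are not estimates of anything.

```python
def simulate_backlog(steps, requests_at, capacity_at):
    """Track unanswered requests when demand and human capacity vary over time.

    requests_at(t): new decisions the AGI refers to humans at step t.
    capacity_at(t): decisions the humans can resolve at step t.
    Returns the backlog after each step.
    """
    backlog = 0
    history = []
    for t in range(steps):
        backlog = max(0, backlog + requests_at(t) - capacity_at(t))
        history.append(backlog)
    return history


STEPS = 10
# Made-up rates, chosen only for illustration.
growing = lambda t: 5 + 2 * t   # grows as the system grows
constant = lambda t: 5          # stays fixed

scenarios = {
    "capacity cannot keep pace": simulate_backlog(STEPS, growing, constant),
    "enhanced humans keep up": simulate_backlog(STEPS, growing, growing),
    "important decisions stay constant": simulate_backlog(STEPS, constant, constant),
}
for name, history in scenarios.items():
    print(f"{name}: final backlog = {history[-1]}")
# capacity cannot keep pace: final backlog = 90
# enhanced humans keep up: final backlog = 0
# important decisions stay constant: final backlog = 0
```

Only the first case accumulates unanswered requests. The model says nothing about which case is likely; it just makes the throughput framing concrete.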

Acknowledgements

Requisite Variety was given its name in a 1956 paper by W. Ross Ashby. An idea very similar to requisite variety is also discussed by Yudkowsky in The Sequences [LW · GW]; thanks to David Udell for pointing this out. It is directly related to The Good Regulator Theorem, which John Wentworth has discussed here [LW · GW].

Peter Park, Nicholas Pochinkov and I discussed this idea extensively during the MATS 2.0 program. Thanks to Nicholas Pochinkov, David Udell and Peter Park for feedback.

[1] You could get around this problem by using a series of button presses to signal the Roomba. But notice that this too can only signal a finite number of rooms, assuming there is a maximum message length allowed. The punchline is ultimately the same.
