Alignment Targets and The Natural Abstraction Hypothesis

post by Stephen Fowler (LosPolloFowler) · 2023-03-08T11:45:28.579Z


In this post, we explore the Natural Abstraction Hypothesis (NAH), a theory concerning the internal representations used by cognitive systems. We'll look at how this hypothesis could significantly reduce the amount of data required to align AGI, provided we have powerful interpretability tools. We review the idea of Re-Targetting The Search and discuss why specifying an alignment target requires less effort the closer an AI's internal representations are to human abstractions. By the end of this post, you'll understand one way the NAH could impact future alignment efforts.

My research is supported by a grant from the LTFF. This post was inspired by Johannes C. Mayer's recent comment about "Bite Sized Tasks" and originally formed part of a much longer work. The present post was edited with assistance from a language model.

Review: The Natural Abstraction Hypothesis

Wentworth's Natural Abstraction Hypothesis states that "a wide variety of cognitive architectures will learn to use approximately the same high-level abstract objects/concepts to reason about the world."

In his Summary of the Natural Abstraction Hypothesis, TheMcDouglas breaks this into three separate subclaims. I paraphrase his definitions of each claim here.

- Abstractability: The amount of information you need to know about a far-away system is much smaller than a total description of that system. The universe "abstracts well". This is almost tautological.

- Convergence: A wide variety of cognitive architectures will form the same abstractions.

- Human-Compatibility: Humans are included in that class of cognitive architectures, so the abstractions used in our day-to-day lives are natural.
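To make the abstractability claim concrete, here is a standard physics illustration (my own choice of example, not one taken from TheMcDouglas's summary). Far away from a cluster of point masses, the gravitational potential is well approximated using only four numbers, the total mass and the centre of mass, instead of the full list of masses and positions. The cluster size, particle count and units (G = 1) below are arbitrary assumptions for the sketch.

```python
import math
import random

def exact_potential(masses, positions, point):
    """Newtonian potential at `point` (G = 1), summed over every particle."""
    return -sum(m / math.dist(p, point) for m, p in zip(masses, positions))

def summary_potential(masses, positions, point):
    """Same potential from a 4-number summary: total mass plus centre of mass."""
    total = sum(masses)
    centre = tuple(
        sum(m * p[axis] for m, p in zip(masses, positions)) / total for axis in range(3)
    )
    return -total / math.dist(centre, point)

# Hypothetical cluster: 1000 particles with random masses inside a 2 x 2 x 2 cube.
random.seed(0)
MASSES = [random.uniform(0.5, 1.5) for _ in range(1000)]
POSITIONS = [tuple(random.uniform(-1.0, 1.0) for _ in range(3)) for _ in range(1000)]

def relative_error(distance):
    """Relative error of the summary when observing from (distance, 0, 0)."""
    point = (float(distance), 0.0, 0.0)
    exact = exact_potential(MASSES, POSITIONS, point)
    approx = summary_potential(MASSES, POSITIONS, point)
    return abs(exact - approx) / abs(exact)

for d in (2, 10, 100, 1000):
    print(f"distance={d:>4}  relative error={relative_error(d):.1e}")
```

The summary's error shrinks as the observer moves away, which is the sense in which a distant system needs far less information to describe than a total description would contain.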

For our purposes, the NAH is a claim that when we "cut open" the internals of an AGI we can expect to find preexisting internal references to objects that we care about. The task of actually aligning the model becomes easier or harder depending on the degree to which the NAH is true.

Re-Targetting The Search

Re-Targetting The Search is a proposal that explains how you could use fantastic interpretability tools to align an AGI. By "fantastic interpretability tools", I believe the intended requirement is that they let you accurately decompose and translate the AGI's internal logic, accurately identify pointers to the outside world (i.e. you know what the important variables mean), and cleanly edit that internal logic.

The first assumption is that the target AGI uses a re-targettable, general-purpose search procedure. By general-purpose we mean an algorithm that takes as input a goal or outcome and returns a reasonable plan for achieving it. By re-targettable we mean that the search algorithm can easily be altered to search for a different goal.
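A toy sketch of what "general-purpose" and "re-targettable" mean here, under heavy simplifying assumptions: the search procedure is ordinary breadth-first search over a hand-written state space, and the "target" is a plain Python predicate stored in one attribute. Nothing here is claimed about how a real AGI would implement search; `general_search`, `Agent` and `line_world` are hypothetical names invented for illustration.

```python
from collections import deque

def general_search(start, neighbours, goal):
    """Breadth-first search: return a shortest path from `start` to any state
    satisfying `goal`, or None if no reachable state satisfies it."""
    frontier = deque([start])
    parent = {start: None}
    while frontier:
        state = frontier.popleft()
        if goal(state):
            path = []
            while state is not None:  # walk parent links back to the start
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbours(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None

class Agent:
    """Hypothetical agent: a fixed search procedure plus a swappable target."""
    def __init__(self, neighbours, target):
        self.neighbours = neighbours
        self.target = target  # the only part re-targetting touches

    def plan(self, start):
        return general_search(start, self.neighbours, self.target)

def line_world(n):
    """Toy state space: integers 0..20, moving one step left or right."""
    return [m for m in (n - 1, n + 1) if 0 <= m <= 20]

agent = Agent(line_world, target=lambda s: s == 3)
print(agent.plan(0))   # [0, 1, 2, 3]
agent.target = lambda s: s == 17  # re-target without touching the search code
print(agent.plan(20))  # [20, 19, 18, 17]
```

Re-targetting only changes `agent.target`; the search code is untouched. The hard part that the proposal leans on interpretability for, locating that "attribute" inside a trained network, has no analogue in this sketch.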

Secondly, we assume some version of the NAH holds. That is, we expect the AGI to already have representations of the abstractions humans care about. The degree to which this is true is unclear.

If We Are Exceptionally Lucky

For the moment, let's assume the best possible case. Here we crack open the internals of the AGI and discover that an accurate representation of the "ideal human alignment target" already exists.

In this unrealistically ideal case, the proposal is simple. Using our fantastic interpretability tools, we just identify the part of the AGI corresponding to the target of the search procedure and edit it to point to what we actually want.

Alignment solved. Let's go home.

More Realistic Scenarios

In a more realistic case, weaker forms of the NAH hold. We can't find a reference to any "ideal human values", but we can find accurate references to the abstractions humans use, and we understand the limitations of those abstractions. By accurate, I mean we don't run into the kind of problems outlined by Yudkowsky.

We are going to have to "spell out" the alignment target using the pre-existing concepts within the AGI.

Let's suppose our alignment target is the rather modest goal of having at least one living human. That should satisfy all the "AI-not-kill-everyone" folk, although I'm sure it's going to upset a few people at CLR.

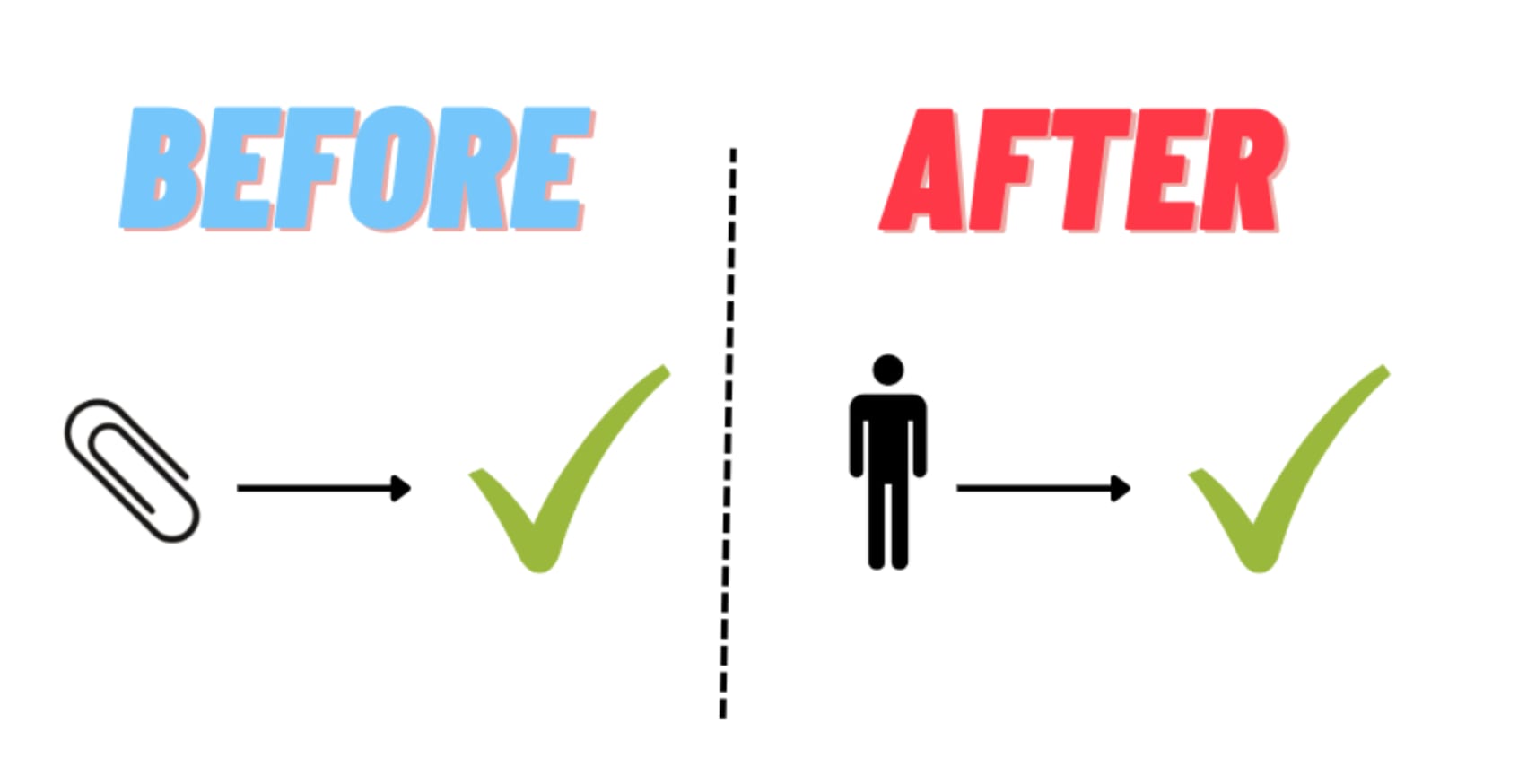

When we write out the conditions we want our AGI to satisfy, we will need to point to concepts such as "human". For each concept we use, one of two things is true. Either it already exists as a variable in the AGI's internal logic (good case), or it must be specified using whatever other variables we can access in the AGI's internal logic (bad case). In the bad case, we have to hope that the internal variables that do exist let us cleanly specify the concept we want with a small number of extra conditions.

For illustrative purposes, suppose "human" does not exist as an internal concept for the AGI, but "feathers" and "bipedal" do. Then we can take a page from Plato and write the first condition as "Number of (featherless bipeds) is at least one". But of course, this condition is satisfied by a plucked chicken, so our condition must then become "Number of ((featherless bipeds) which are not chickens) is at least one".
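The featherless-biped story can be written out as a sketch in which the AGI's internal concepts are modelled as boolean features of each entity. The `Entity` fields and the condition functions are hypothetical stand-ins for internal variables, and the sketch drops the "living" requirement for brevity; the counts use "at least one", matching the target of at least one living human.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Entity:
    name: str
    has_feathers: bool  # hypothetical internal variable "feathers"
    bipedal: bool       # hypothetical internal variable "bipedal"
    is_chicken: bool    # hypothetical internal variable "chicken"

def is_featherless_biped(e):
    return e.bipedal and not e.has_feathers

def condition_v1(world):
    """First attempt: at least one featherless biped exists."""
    return sum(is_featherless_biped(e) for e in world) >= 1

def condition_v2(world):
    """Patched: at least one featherless biped that is not a chicken exists."""
    return sum(is_featherless_biped(e) and not e.is_chicken for e in world) >= 1

person = Entity("person", has_feathers=False, bipedal=True, is_chicken=False)
hen = Entity("hen", has_feathers=True, bipedal=True, is_chicken=True)
plucked = Entity("plucked chicken", has_feathers=False, bipedal=True, is_chicken=True)

no_humans = [hen, plucked]
with_human = [person, hen]

print(condition_v1(no_humans))   # True: the plucked chicken slips through
print(condition_v2(no_humans))   # False
print(condition_v2(with_human))  # True
```

Each loophole we find forces another clause, and the patch only works because "chicken" happens to be available as an internal concept too.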

The kicker is that the description length needed to pin down the alignment target is very short if the AGI shares our abstractions, but can be much longer otherwise.
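The post does not fix a particular measure of description length. As one assumption-laden way to see the point, we can write targets in a tiny made-up specification language (nested tuples whose leaves are concept names) and count symbols. `AGI_VOCAB` is a hypothetical list of the concepts the AGI happens to have, matching the featherless-biped example in which "human" is missing.

```python
# Hypothetical set of concepts the AGI has internally; note "human" is absent.
AGI_VOCAB = {"at_least_one", "and", "not", "feathers", "bipedal", "chicken"}

def description_length(expr):
    """Count symbols in a nested-tuple expression; every string leaf counts as one."""
    if isinstance(expr, str):
        return 1
    return sum(description_length(part) for part in expr)

def concepts_used(expr):
    """Collect every symbol an expression refers to."""
    if isinstance(expr, str):
        return {expr}
    return set().union(*(concepts_used(part) for part in expr))

# Target if the AGI shared our "human" abstraction.
shared = ("at_least_one", "human")
# Target spelled out from the concepts the AGI actually has.
spelled_out = ("at_least_one",
               ("and", ("not", "feathers"), "bipedal", ("not", "chicken")))

for name, spec in (("shared", shared), ("spelled_out", spelled_out)):
    expressible = concepts_used(spec) <= AGI_VOCAB
    print(f"{name}: length={description_length(spec)}, expressible={expressible}")
```

With a shared "human" concept the target costs 2 symbols but cannot be expressed in this AGI's vocabulary; spelled out from the available concepts it costs 7, and every further loophole adds more.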
