How Often Does Taking Away Options Help?

post by niplav · 2024-09-21T21:52:40.822Z · 7 comments

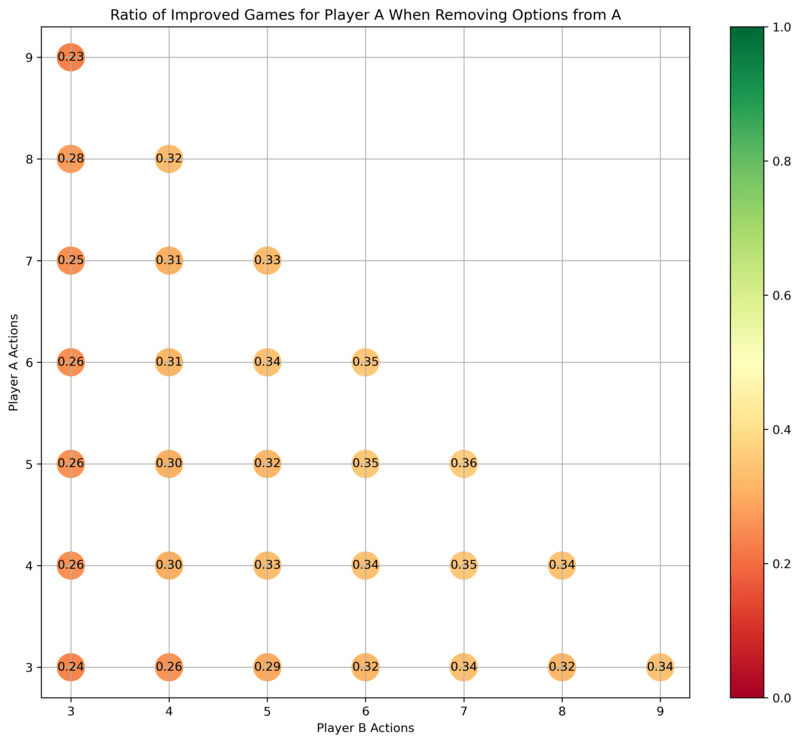

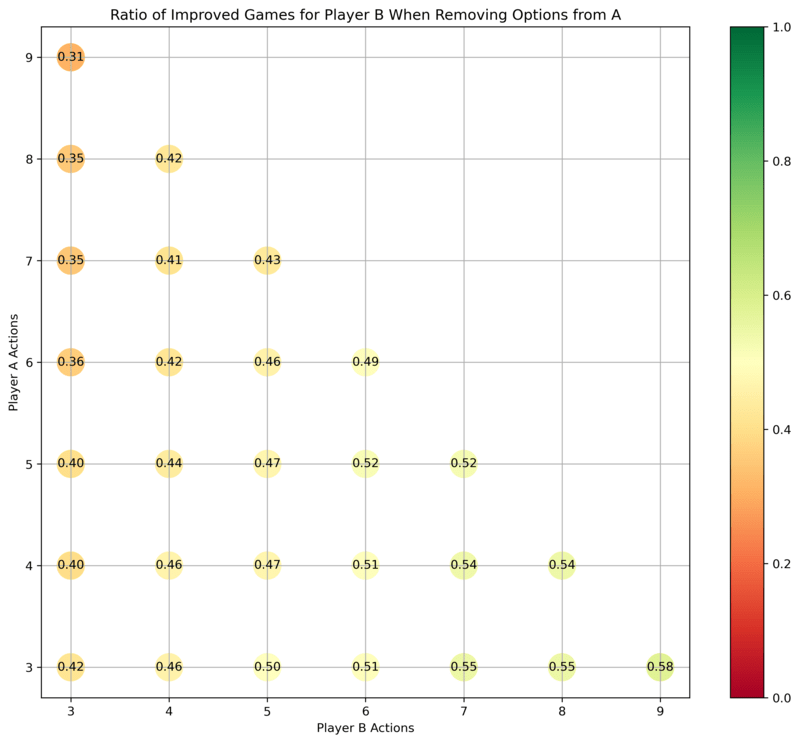


In small normal-form games, taking away an option from a player improves the payoff of that player usually <⅓ of the time, but improves the payoffs for the other player ½ of the time. The numbers depend on the size of the game; plotted here.

There's been some discussion about the origins of paternalism.

I believe that there's another possible justification for paternalism: Intervening in situations between different actors to bring about Pareto-improved games.

Let's take the game of chicken between Abdullah and Benjamin. If a paternalist Petra favors Abdullah, and Petra has access to Abdullah's car before the game, Petra can (visibly) remove the steering wheel, making Abdullah's commitment for him by taking an option away. This improves Abdullah's situation by forcing Benjamin to swerve and guaranteeing Abdullah's victory: once Abdullah can no longer swerve, swerving is a strictly dominant strategy for Benjamin.
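A minimal sketch of the steering-wheel example. The payoffs are illustrative assumptions, not from the post: 0 each if both swerve, -1 for the driver who swerves and 1 for the one who goes straight, and -10 each if both go straight. The sketch only checks pure-strategy equilibria, so the game's mixed equilibrium is ignored here.

```python
from itertools import product

# Assumed chicken payoffs; entries are (Abdullah, Benjamin).
PAYOFFS = {
    ("swerve", "swerve"): (0, 0),
    ("swerve", "straight"): (-1, 1),
    ("straight", "swerve"): (1, -1),
    ("straight", "straight"): (-10, -10),
}


def pure_nash_equilibria(rows, cols, payoffs):
    """Return all pure-strategy profiles where neither player gains by deviating."""
    equilibria = []
    for r, c in product(rows, cols):
        a_pay, b_pay = payoffs[(r, c)]
        a_best = all(payoffs[(r2, c)][0] <= a_pay for r2 in rows)
        b_best = all(payoffs[(r, c2)][1] <= b_pay for c2 in cols)
        if a_best and b_best:
            equilibria.append((r, c))
    return equilibria


moves = ["swerve", "straight"]
full_game = pure_nash_equilibria(moves, moves, PAYOFFS)
# Petra removes the steering wheel: Abdullah can only go straight.
no_wheel = pure_nash_equilibria(["straight"], moves, PAYOFFS)

print(full_game)  # [('swerve', 'straight'), ('straight', 'swerve')]
print(no_wheel)   # [('straight', 'swerve')]
```

In the full game either driver might end up swerving. With Abdullah's row reduced to "straight", the only equilibrium has Benjamin swerve.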

In a less artificial context, one could see minimum wage laws as an example of this. Disregarding potential effects from increased unemployment, a higher minimum wage removes workers' temptation to accept lower wages. Braess's paradox is another case where taking options away from people helps: closing a road in a congested network can shorten everyone's travel time.
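To make the Braess case concrete, here is the standard textbook network worked through in a short sketch. All numbers are illustrative assumptions. There are 4000 drivers. The roads Start→A and B→End take (cars on the road)/100 minutes. The roads A→End and Start→B take a fixed 45 minutes. An optional A→B shortcut takes 0 minutes.

```python
DRIVERS = 4000  # assumed number of drivers


def congested(cars):
    """Travel time on a congestible road (assumed: cars / 100 minutes)."""
    return cars / 100


def route_times(via_a, via_b, via_shortcut):
    """Travel time on each route, given how many drivers use each route."""
    start_a = via_a + via_shortcut  # cars on the congestible Start->A road
    b_end = via_b + via_shortcut    # cars on the congestible B->End road
    return {
        "via_a": congested(start_a) + 45,
        "via_b": 45 + congested(b_end),
        "via_shortcut": congested(start_a) + 0 + congested(b_end),
    }


# Without the shortcut, drivers split evenly (the equilibrium, by symmetry).
without = route_times(DRIVERS // 2, DRIVERS // 2, 0)
# With the shortcut, everyone taking it is an equilibrium: the other
# routes are slower, so no driver wants to switch.
with_shortcut = route_times(0, 0, DRIVERS)

print(without["via_a"], without["via_b"])  # 65.0 65.0
print(with_shortcut)  # {'via_a': 85.0, 'via_b': 85.0, 'via_shortcut': 80.0}
```

Removing the shortcut, which takes an option away from every driver, lowers everyone's equilibrium travel time from 80 to 65 minutes.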

Frequency

We can estimate how often this happens by running a Monte-Carlo simulation.

First, generate random normal-form games with payoffs in . Then compute the Nash equilibria of each game via vertex enumeration of the best-response polytope (using nashpy); the Lemke-Howson algorithm was giving me duplicate results. Finally, compute the payoffs for both Abdullah and Benjamin.
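The post used nashpy's vertex enumeration. As a self-contained stand-in, here is a sketch that uses support enumeration written directly in NumPy. This is a different algorithm, but for nondegenerate games it finds the same set of equilibria. The payoff range is missing from the text, so uniform payoffs in [0, 1) are an assumption here, and the helper names are hypothetical.

```python
import itertools

import numpy as np


def _indifferent_mix(M):
    """Find a probability vector y with M @ y equal to the same value u in every row.

    Solves [M, -1; 1^T, 0] [y; u] = [0; 1]. Returns (y, u), or None if singular.
    """
    k = M.shape[0]
    system = np.zeros((k + 1, k + 1))
    system[:k, :k] = M
    system[:k, k] = -1.0
    system[k, :k] = 1.0
    rhs = np.zeros(k + 1)
    rhs[k] = 1.0
    try:
        sol = np.linalg.solve(system, rhs)
    except np.linalg.LinAlgError:
        return None
    return sol[:k], sol[k]


def support_enumeration(A, B, tol=1e-9):
    """All Nash equilibria (x, y) of a nondegenerate bimatrix game.

    A holds Abdullah's (row player) payoffs, B Benjamin's (column player).
    Only equal-size supports are checked, which suffices for nondegenerate
    games (random continuous payoffs are nondegenerate with probability 1).
    """
    m, n = A.shape
    equilibria = []
    for k in range(1, min(m, n) + 1):
        for rows in itertools.combinations(range(m), k):
            for cols in itertools.combinations(range(n), k):
                # Benjamin's mix on `cols` must make Abdullah indifferent over `rows`.
                col_part = _indifferent_mix(A[np.ix_(rows, cols)])
                # Abdullah's mix on `rows` must make Benjamin indifferent over `cols`.
                row_part = _indifferent_mix(B[np.ix_(rows, cols)].T)
                if col_part is None or row_part is None:
                    continue
                (y_s, u), (x_s, v) = col_part, row_part
                if (y_s < -tol).any() or (x_s < -tol).any():
                    continue
                x = np.zeros(m)
                x[list(rows)] = x_s
                y = np.zeros(n)
                y[list(cols)] = y_s
                # No pure strategy outside the supports may do strictly better.
                if (A @ y).max() <= u + tol and (x @ B).max() <= v + tol:
                    equilibria.append((x, y))
    return equilibria


def equilibrium_payoffs(A, B, equilibria):
    """Expected (Abdullah, Benjamin) payoff in each equilibrium."""
    return [(x @ A @ y, x @ B @ y) for x, y in equilibria]


rng = np.random.default_rng(0)
A = rng.uniform(0, 1, size=(3, 3))  # assumption: payoffs uniform in [0, 1)
B = rng.uniform(0, 1, size=(3, 3))
eqs = support_enumeration(A, B)
print(equilibrium_payoffs(A, B, eqs))
```

Because each equilibrium of a nondegenerate game has a unique support, this enumeration lists every equilibrium exactly once. That sidesteps the duplicate-results problem mentioned above.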

Then, remove one option from Abdullah (which translates to deleting a row from both players' payoff matrices).
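A small sketch of this step. The same row has to go from both matrices, because Abdullah's strategy indexes the rows of his payoffs and of Benjamin's. The post doesn't say which option gets removed, so picking it uniformly at random is an assumption, and `remove_option` is a hypothetical helper.

```python
import numpy as np


def remove_option(A, B, row):
    """Delete one of Abdullah's (the row player's) strategies from both payoff matrices."""
    return np.delete(A, row, axis=0), np.delete(B, row, axis=0)


rng = np.random.default_rng(1)
A = rng.uniform(0, 1, size=(4, 3))
B = rng.uniform(0, 1, size=(4, 3))
row = rng.integers(A.shape[0])  # assumption: the removed option is chosen at random
A_small, B_small = remove_option(A, B, row)
print(A_small.shape, B_small.shape)  # (3, 3) (3, 3)
```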

Calculate the Nash equilibria and payoffs again.

We assume that all Nash equilibria are equally likely, so for each player we take the mean payoff across Nash equilibria.
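Under this equal-weight assumption, each player's value for a game is the plain average of their expected payoff x·A·y over the equilibria (x, y). The sketch below applies this to chicken. It uses assumed payoffs of 0 each if both swerve, -1 and 1 if one swerves, and -10 each for a crash. The game's three equilibria are written out by hand.

```python
import numpy as np


def mean_equilibrium_payoffs(A, B, equilibria):
    """Average each player's expected payoff over a list of equilibria.

    `equilibria` is a list of (x, y) mixed strategies, x for Abdullah (rows)
    and y for Benjamin (columns); each equilibrium gets equal weight.
    """
    abdullah = np.mean([x @ A @ y for x, y in equilibria])
    benjamin = np.mean([x @ B @ y for x, y in equilibria])
    return abdullah, benjamin


# Rows/columns: (swerve, straight). Assumed chicken payoffs.
A = np.array([[0.0, -1.0], [1.0, -10.0]])
B = np.array([[0.0, 1.0], [-1.0, -10.0]])
chicken_equilibria = [
    (np.array([1.0, 0.0]), np.array([0.0, 1.0])),  # Abdullah swerves
    (np.array([0.0, 1.0]), np.array([1.0, 0.0])),  # Benjamin swerves
    (np.array([0.9, 0.1]), np.array([0.9, 0.1])),  # mixed: each swerves w.p. 0.9
]
print(mean_equilibrium_payoffs(A, B, chicken_equilibria))
```

The pure equilibria pay -1 and 1, and the mixed one pays about -0.1, so both players average about -0.033.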

For a player, taking away one of Abdullah's options is considered an improvement iff that player's mean payoff in the original game is strictly lower than their mean payoff in the game with one option removed. Thus, taking away one of Abdullah's options can improve the game for Abdullah, for Benjamin, for both, or for neither.
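The criterion and the Monte-Carlo tally can be sketched as follows. Each sample is a pair of mean payoffs, one for the original game and one for the reduced game. The chicken numbers rest on assumed payoffs: 0 each if both swerve, -1 for the swerver and 1 for the other, and -10 each for a crash. The function names are hypothetical.

```python
def improvement(before, after):
    """Return (abdullah_improved, benjamin_improved) given mean payoffs.

    `before` and `after` are (abdullah_mean, benjamin_mean) pairs for the
    original game and the game with one of Abdullah's options removed.
    Improvement is strict, so ties count as "not improved".
    """
    return after[0] > before[0], after[1] > before[1]


def improvement_rates(samples):
    """Fraction of sampled games improved for Abdullah and for Benjamin."""
    flags = [improvement(before, after) for before, after in samples]
    n = len(flags)
    return sum(f[0] for f in flags) / n, sum(f[1] for f in flags) / n


# Chicken: the original equilibria pay Abdullah -1, 1 and -0.1 (mean about
# -0.033), and symmetrically for Benjamin. Without the steering wheel the
# only equilibrium pays (1, -1).
before = ((-1 + 1 - 0.1) / 3, (1 - 1 - 0.1) / 3)
after = (1.0, -1.0)
print(improvement(before, after))  # (True, False)
```

Because one removal can help both players, or neither, the two rates are counted separately and need not sum to 1.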

Plots

For games originally of size , how often is it the case that taking an option away from Abdullah improves the payoffs for Abdullah?

For games originally of size , how often is it the case that taking an option away from Abdullah improves the payoffs for Benjamin?

Interpretation

Abdullah is most helped by taking an option away from him when both he and Benjamin have a lot of options to choose from, e.g. in the case where both have six options. If Abdullah has many options and Benjamin has few, then taking an option away from Abdullah usually doesn't help him.

Benjamin is much more likely to be in an improved position if one takes away an option from Abdullah, especially if Benjamin had many options available already. This suggests that in political situations, powerful players are incentivized to advocate for paternalism over weaker players.

Postscript

One can imagine a paternalist government as a mechanism designer with a bulldozer, then.

Code here, largely written by Claude 3.5 Sonnet.

7 comments


comment by Dagon · 2024-09-22T02:25:38.364Z

This seems really dependent on the distribution of games and choices faced by participants. It also depends on the specifics of why external limits are possible but normal commitments aren’t.

Edit: this is interesting work that I have never seen explored before. My concern is about applying it too directly to the real world, not about the underlying exploration.

comment by Emrik (Emrik North) · 2024-12-24T02:27:57.297Z

In nature, you can imagine species undergoing selection on several levels / time-horizons. If long-term fitness-considerations for genes differ from short-term considerations, long-term selection (let's call this "longscopic") may imply a net fitness-advantage for genes which remove options wrt climbing the shortscopic gradient.

Meiosis as a "veil of cooperation"

Holly suggests this explains the origin of meiosis itself. Recombination randomizes which alleles you end up with in the next generation so it's harder for you to collude with a subset of them. And this forces you (as an allele hypothetically planning ahead) to optimize/cooperate for the benefit of all the other alleles in your DNA.[1] I call it a "veil of cooperation"[2], because it works by preventing you from "knowing" which situation you end up in (ie, it destroys options wrt which correlations you can "act on" / adapt to).

Compare that to, say, postsegregational killing mechanisms rampant[3] in prokaryotes. Genes on a single plasmid ensure that when the host organism copies itself, any host-copy that doesn't also include a copy of the plasmid is killed by internal toxins. This has the effect of increasing the plasmid's relative proportion in the host species, so without mechanisms preventing internal misalignment like that, the adaptation remains stable.

There's constant fighting in local vs global & shortscopic vs longscopic gradients all across everything, and cohesive organisms enforce global/long selection-scopes by restricting the options subcomponents have to propagate themselves.

Generalization in the brain as an alignment mechanism against shortscopic dimensions of its reward functions (ie prevents overfitting)

Another example: REM-sleep & episodic daydreaming provide constant generalization-pressure for neuremic adaptations (learned behaviors) to remain beneficial across all the imagined situations (and chaotic noise) your brain puts them through. Again an example of a shortscopic gradient constantly aligning to a longscopic gradient.

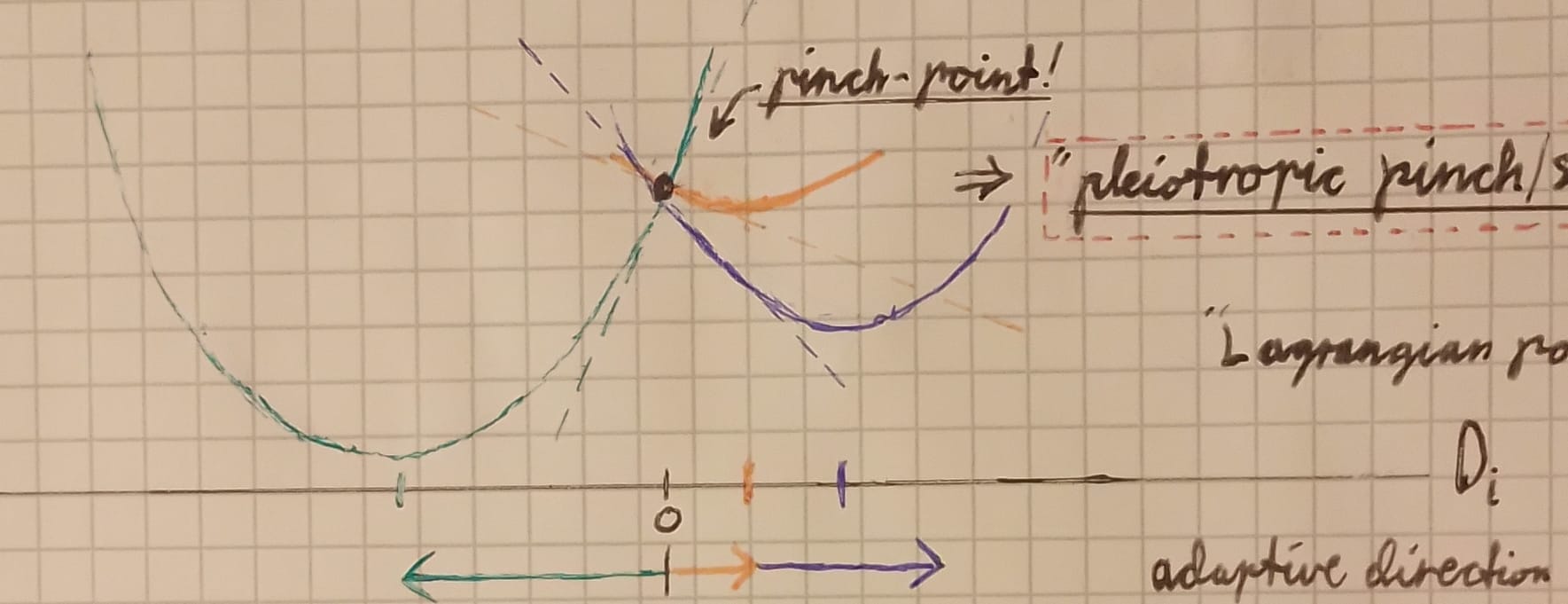

Some abstractions for thinking about internal competition between subdimensions of a global gradient

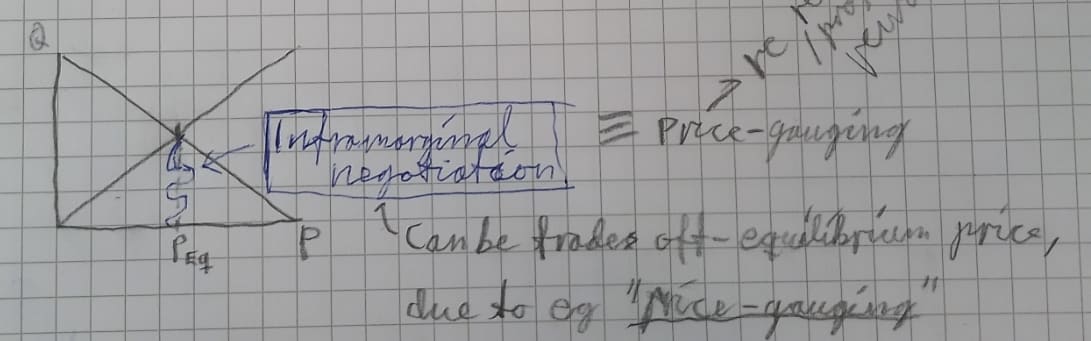

For example, you can imagine each set of considerations as a loss gradient over genetic-possibility-space, and the gradients diverging from each other on specific dimensions. Points where they intersect from different directions are "pleiotropic/polytelic pinch-points", and represent the best compromise geneset for both gradients, sorta like an equilibrium price in a supply-&-demand framework.

To take the economics-perspective further: if a system (an economy, a gene pool, a brain, whatever) is at equilibrium price wrt the many dimensions of its adaptation-landscape[4] (whether the dimensions be primary rewards or acquired proxies), then globally-misaligned local collusions can be viewed as inframarginal trade[5]. Thus I find a #succinct-statement from my notes:

"mesaoptimizers (selfish emes) evolve in the inframarginal rent (~slack) wrt the global loss-function."

(Thanks for prompting me to rediscover it!)

So, take a brain-example again: My brain has both shortscopic and longscopic reward-proxies & behavioral heuristics. When I postpone bedtime in order to, say, get some extra work done because I feel behind, the neuremes representing my desire to get work done now are bidding for decision-weight at some price[6], and decision-weight-producers will fulfill the trades & provide up to equilibrium. But unfortunately, those neuremes have cheated the market by isolating the bidding-war to shortscopic bidders (ie enforced a particularly narrow perspective), because if they hadn't, then the neuremes representing longscopic concerns would fairly outbid them.[7]

(Note: The economicsy thing is a very incomplete metaphor, and I'm probably messing things up, but this is theory, so communicating promising-seeming mistakes is often as helpfwl as being correct-but-slightly-less-bold.)

- ^

ie, it marginally flattens the intra-genomic competition-gradient, thereby making cooperative fitness-dimensions relatively steeper.

- ^

from "veil of ignorance".

- ^

or at least that's the word they used... I haven't observed this rampancy directly.

- ^

aka "loss-function"

- ^

Inframarginal trade: Trade in which producers & consumers match at off-equilibrium price, and which requires the worse-off party to not have the option of getting their thing cheaper at the global equilibrium-price. Thus it reflects a local-global disparity in which trades things are willing to make (ie which interactions are incentivized).

- ^

The "price" in this case may be that any assembly of neurons which "bids" for relevancy to current activity takes on some risk of depotentiation if it then fails to synchronize. That is, if its firing rate slips off the harmonics of the dominant oscillations going on at present, and starts firing into the STDP-window for depotentiation.

- ^

If they weren't excluded from the market, bedtime-maintenance-neuremes would outbid working-late-neuremes, with bids reflecting the brain's expectation that maintaining bedtime has higher utility long-term compared to what can be greedily grabbed right now. (Because BEDTIME IS IMPORTANT!) :p

comment by Sune · 2024-09-22T07:18:04.299Z

Why does the plot start at 3x3 instead of 2x2? Of course, it is not common to have games with only one choice, but for Chicken that is what you end up with when removing one option. You could even start the investigation at 2x1 options.

comment by df fd (df-fd) · 2024-09-21T23:11:25.027Z

In the context of minimum wage.

I assume Abdullah having many options means he has many job offers/alternatives to jobs.

What does it mean for Benjamin to have many options?