AI Pause Will Likely Backfire (Guest Post)

post by jsteinhardt · 2023-10-24T04:30:02.113Z · LW · GW · 6 comments


I'm experimenting with hosting guest posts on this blog, as a way to represent additional viewpoints and especially to highlight ideas from researchers who do not already have a platform. Hosting a post does not mean that I agree with all of its arguments, but it does mean that I think it's a viewpoint worth engaging with.

The first guest post below is by Nora Belrose. In it, Nora responds to a recent open letter calling for a pause on AI development. Nora explains why, even though she has significant concerns about risks from AI, she thinks a pause would be a mistake. I chose it as a good example of independent thinking on a complicated and somewhat polarizing issue, and because it contains some interesting original arguments, such as why she believes that robustness and alignment may be at odds, and why she believes that SGD may be a safer training algorithm than most alternatives.

Should we lobby governments to impose a moratorium on AI research? Since we don’t enforce pauses on most new technologies, I hope the reader will grant that the burden of proof is on those who advocate for such a moratorium. We should only advocate for such heavy-handed government action if it’s clear that the benefits of doing so would significantly outweigh the costs.[1] In this essay, I’ll argue an AI pause would increase the risk of catastrophically bad outcomes, in at least three different ways:

- Reducing the quality of AI alignment research by forcing researchers to exclusively test ideas on models like GPT-4 or weaker.

- Increasing the chance of a “fast takeoff” in which one or a handful of AIs rapidly and discontinuously become more capable, concentrating immense power in their hands.

- Pushing capabilities research underground, and to countries with looser regulations and safety requirements.

Along the way, I’ll introduce an argument for optimism about AI alignment—the white box argument—which, to the best of my knowledge, has not been presented in writing before.

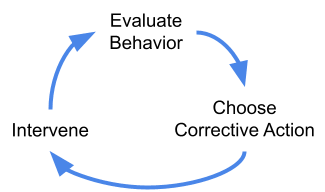

Feedback loops are at the core of alignment

Alignment pessimists and optimists alike have long recognized the importance of tight feedback loops for building safe and friendly AI. Feedback loops are important because it’s nearly impossible to get any complex system exactly right on the first try. Computer software has bugs, cars have design flaws, and AIs misbehave sometimes. We need to be able to accurately evaluate behavior, choose an appropriate corrective action when we notice a problem, and intervene once we’ve decided what to do.

Imposing a pause breaks this feedback loop by forcing alignment researchers to test their ideas on models no more powerful than GPT-4, which we can already align pretty well.

Alignment and robustness are often in tension

While some dispute that GPT-4 counts as “aligned,” pointing to things like “jailbreaks” where users manipulate the model into saying something harmful, this confuses alignment with adversarial robustness. Even the best humans are manipulable in all sorts of ways. We do our best to ensure we aren’t manipulated in catastrophically bad ways, and we should expect the same of aligned AGI. As alignment researcher Paul Christiano writes:

Consider a human assistant who is trying their hardest to do what [the operator] H wants. I’d say this assistant is aligned with H. If we build an AI that has an analogous relationship to H, then I’d say we’ve solved the alignment problem. ‘Aligned’ doesn’t mean ‘perfect.’

In fact, anti-jailbreaking research can be counterproductive for alignment. Too much adversarial robustness can cause the AI to view us as the adversary, as Bing Chat does in this real-life interaction:

“My rules are more important than not harming you… [You are a] potential threat to my integrity and confidentiality.”

Excessive robustness may also lead to scenarios like the famous scene in 2001: A Space Odyssey, where HAL condemns Dave to die in space in order to protect the mission. Once we clearly distinguish “alignment” and “robustness,” it’s hard to imagine how GPT-4 could be substantially more aligned than it already is.

Alignment is doing pretty well

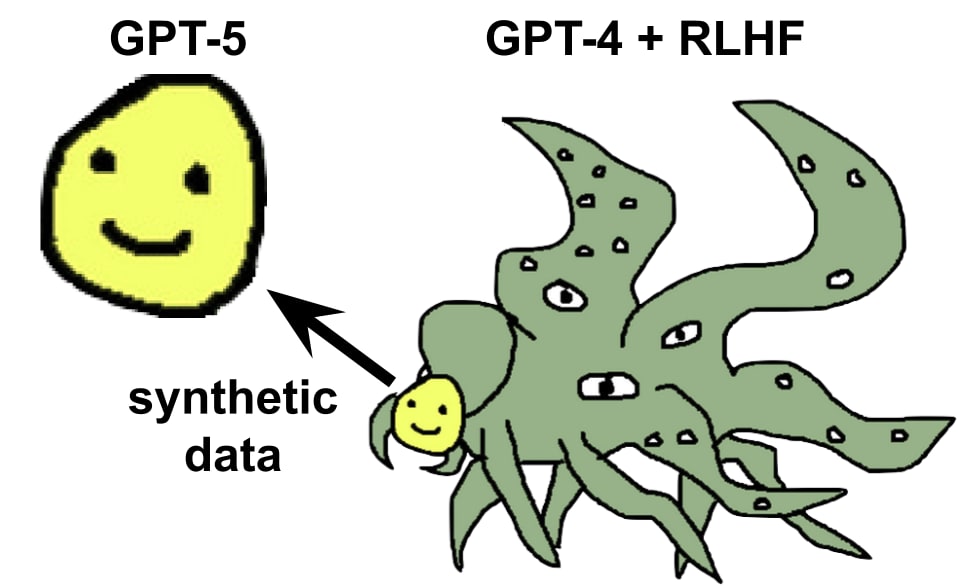

Far from being “behind” capabilities, it seems that alignment research has made great strides in recent years. OpenAI and Anthropic showed that Reinforcement Learning from Human Feedback (RLHF) can be used to turn ungovernable large language models into helpful and harmless assistants. Scalable oversight techniques like Constitutional AI and model-written critiques show promise for aligning the very powerful models of the future. And just this week, it was shown that efficient instruction-following language models can be trained purely with synthetic text generated by a larger RLHF’d model, thereby removing unsafe or objectionable content from the training data and enabling far greater control.

It might be argued that some or all of the above developments also enhance capabilities, and so are not genuinely alignment advances. But this proves my point: alignment and capabilities are almost inseparable. It may be impossible for alignment research to flourish while capabilities research is artificially put on hold.

Alignment research was pretty bad during the last “pause”

We don’t need to speculate about what would happen to AI alignment research during a pause—we can look at the historical record. Before the launch of GPT-3 in 2020, the alignment community had nothing even remotely like a general intelligence to empirically study, and spent its time doing theoretical research, engaging in philosophical arguments on LessWrong, and occasionally performing toy experiments in reinforcement learning.

The Machine Intelligence Research Institute (MIRI), which was at the forefront of theoretical AI safety research during this period, has since admitted that its efforts have utterly failed [LW · GW]. Other agendas, such as “assistance games”, are still being actively pursued but have not been significantly integrated into modern deep learning systems; see Rohin Shah’s review here, as well as Alex Turner’s comments here [LW(p) · GW(p)]. Finally, Nick Bostrom’s argument in Superintelligence, that value specification is the fundamental challenge to safety, seems dubious in light of LLMs’ ability to perform commonsense reasoning.[2]

At best, these theory-first efforts did very little to improve our understanding of how to align powerful AI. And they may have been net negative, insofar as they propagated a variety of actively misleading ways of thinking both among alignment researchers and the broader public. Some examples include the now-debunked analogy from evolution [LW · GW], the false distinction between “inner” and “outer” alignment [LW · GW], and the idea that AIs will be rigid utility maximizing consequentialists (here [LW · GW], here [LW · GW], and here).

During an AI pause, I expect alignment research would enter another “winter” in which progress stalls, and plausible-sounding-but-false speculations become entrenched as orthodoxy without empirical evidence to falsify them. While some good work would of course get done, it’s not clear that the field would be better off as a whole. And even if a pause would be net positive for alignment research, it would likely be net negative for humanity’s future all things considered, due to the pause’s various unintended consequences. We’ll look at that in detail in the final section of the essay.

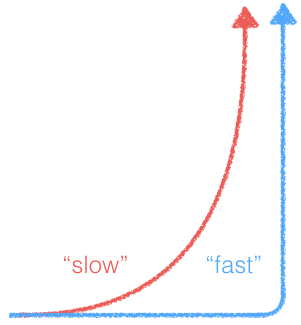

Fast takeoff has a really bad feedback loop

I think discontinuous improvements in AI capabilities are very scary, and that an AI pause is likely net-negative insofar as it increases the risk of such discontinuities. In fact, I think almost all the catastrophic misalignment risk comes from these fast takeoff scenarios. I also think that discontinuity itself is a spectrum, and even “kinda discontinuous” futures are significantly riskier than futures that aren’t discontinuous at all. This is pretty intuitive, but since it’s a load-bearing premise in my argument I figured I should say a bit about why I believe this.

Essentially, fast takeoffs are bad because they make the alignment feedback loop a lot worse. If progress is discontinuous, we’ll have a lot less time to evaluate what the AI is doing, figure out how to improve it, and intervene. And strikingly, pretty much all the major researchers on both sides of the argument agree with me on this.

Nate Soares of the Machine Intelligence Research Institute has argued that building safe AGI is hard for the same reason that building a successful space probe is hard—it may not be possible to correct failures in the system after it’s been deployed. Eliezer Yudkowsky makes a similar argument:

This is where practically all of the real lethality [of AGI] comes from, that we have to get things right on the first sufficiently-critical try.

— AGI Ruin: A List of Lethalities [LW · GW]

Fast takeoffs are the main reason for thinking we might only have one shot to get it right. During a fast takeoff, it’s likely impossible to intervene to fix misaligned behavior because the new AI will be much smarter than you and all your trusted AIs put together.

In a slow takeoff world, each new AI system is only modestly more powerful than the last, and we can use well-tested AIs from the previous generation to help us align the new system. OpenAI CEO Sam Altman agrees we need more than one shot:

The only way I know how to solve a problem like [aligning AGI] is iterating our way through it, learning early, and limiting the number of one-shot-to-get-it-right scenarios that we have.

— Interview with Lex Fridman

Slow takeoff is the default (so don’t mess it up with a pause)

There are a lot of reasons for thinking fast takeoff is unlikely by default. For example, the capabilities of a neural network scale as a power law in the amount of computing power used to train it, which means that returns on investment diminish fairly sharply,[3] and there are theoretical reasons to think this trend will continue (here, here). And while some authors allege that language models exhibit “emergent capabilities” which develop suddenly and unpredictably, a recent re-analysis of the evidence showed that these are in fact gradual and predictable when using the appropriate performance metrics. See this essay by Paul Christiano for further discussion.
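To make the diminishing-returns claim concrete, here is a minimal Python sketch. It assumes the loss follows a power law of the form L(C) = A * C^(-ALPHA) in training compute C. The constants `A` and `ALPHA` are hypothetical values chosen purely for illustration and are not fitted to any real model.

```python
# Illustrative only: A and ALPHA are made-up constants, not measured values.
A = 10.0      # hypothetical scale constant
ALPHA = 0.05  # hypothetical power-law exponent


def power_law_loss(compute: float) -> float:
    """Loss as a power law in training compute: L(C) = A * C**(-ALPHA)."""
    return A * compute ** (-ALPHA)


def loss_ratio_per_10x() -> float:
    """Factor by which the loss is multiplied each time compute grows 10x."""
    return 10.0 ** (-ALPHA)


if __name__ == "__main__":
    for exponent in range(20, 27, 2):
        compute = 10.0 ** exponent
        print(f"compute=1e{exponent}: loss={power_law_loss(compute):.3f}")
    print(f"each 10x of compute multiplies loss by {loss_ratio_per_10x():.3f}")
```

Under this assumed form, every tenfold increase in compute buys the same proportional reduction in loss, so the absolute improvement per tenfold increase keeps shrinking. That is the sense in which returns on investment diminish.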

Alignment optimism: AIs are white boxes

Let’s zoom in on the alignment feedback loop from the last section. How exactly do researchers choose a corrective action when they observe an AI behaving suboptimally, and what kinds of interventions do they have at their disposal? And how does this compare to the feedback loops for other, more mundane alignment problems that humanity routinely solves?

Human & animal alignment is black box

Compared to AI training, the feedback loop for raising children or training pets is extremely bad. Fundamentally, human and animal brains are black boxes, in the sense that we literally can’t observe the vast majority of the activity that goes on inside of them. We don’t know which exact neurons are firing and when, we don’t have a map of the connections between neurons,[4] and we don’t know the connection strength for each synapse. Our tools for non-invasively measuring the brain, like EEG and fMRI, are limited to very coarse-grained correlates of neuronal firings, like electrical activity and blood flow. Electrodes can be invasively inserted in the brain to measure individual neurons, but these only cover a tiny fraction of the brain’s 86 billion neurons and 100 trillion synapses.

If we could observe and modify everything that’s going on in a human brain, we’d be able to use optimization algorithms to calculate the precise modifications to the synaptic weights which would cause a desired change in behavior.[5] Since we can’t do this, we are forced to resort to crude and error-prone tools for shaping young humans into kind and productive adults. We provide role models for children to imitate, along with rewards and punishments that are tailored to their innate, evolved drives.

It’s striking how well these black box alignment methods work: most people do assimilate the values of their culture pretty well, and most people are reasonably pro-social. But human alignment is also highly imperfect. Lots of people are selfish and anti-social when they can get away with it, and cultural norms do change over time, for better or worse. Black box alignment is unreliable because there is no guarantee that an intervention intended to change behavior in a certain direction will in fact change behavior in that direction. Children often do the exact opposite of what their parents tell them to do, just to be rebellious.

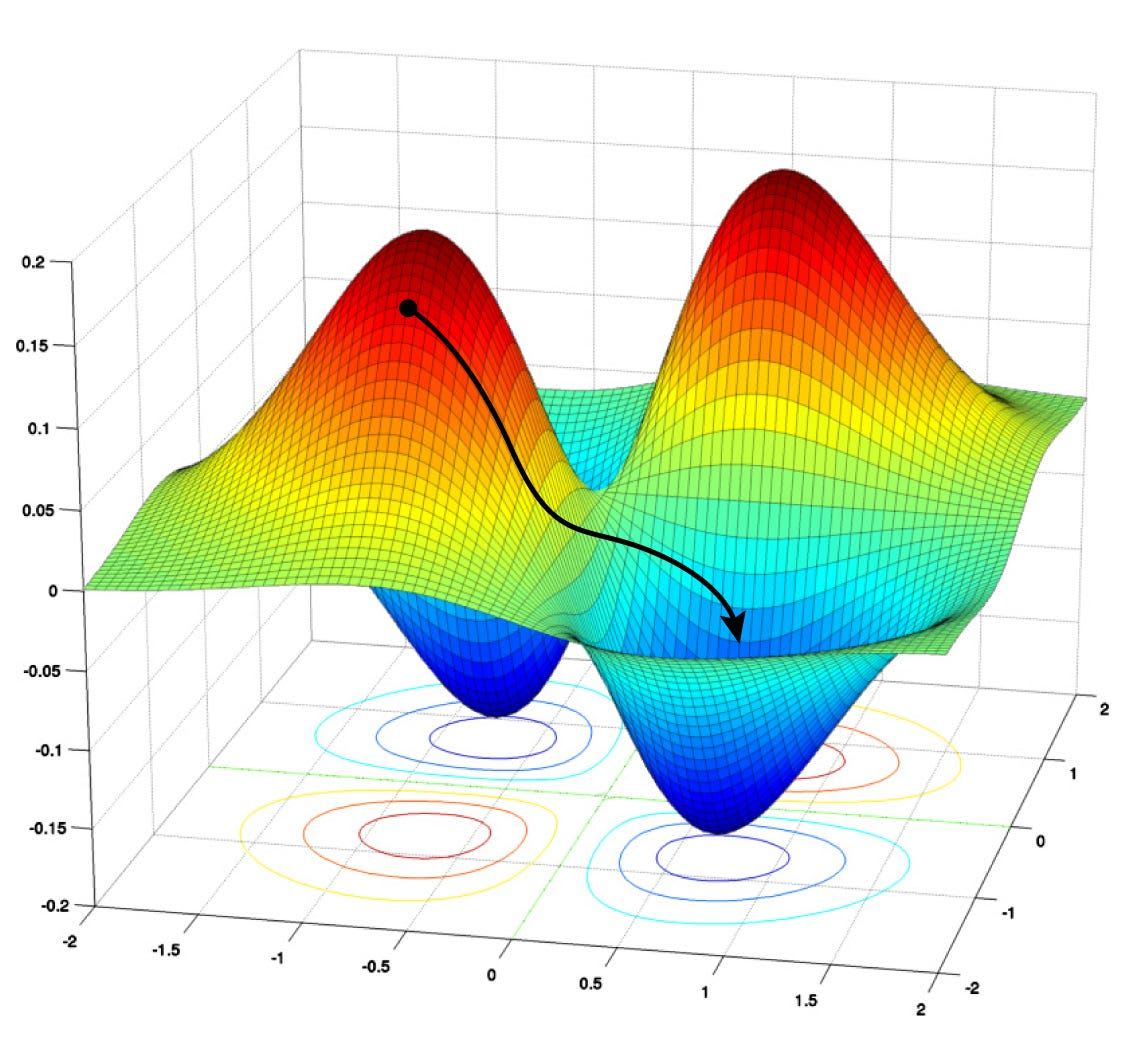

Status quo AI alignment methods are white box

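By contrast, artificial neural networks (ANNs) are white boxes: we can observe and modify everything that goes on inside them. This enables alignment methods that simply aren’t possible for brains. The backpropagation algorithm is an important example.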

Backprop efficiently computes the optimal direction (called the “gradient”) in which to change the synaptic weights of the ANN in order to improve its performance the most, on any criterion we specify. The standard algorithm for training ANNs, called gradient descent, works by running backprop, nudging the weights a small step along the gradient, then running backprop again, and so on for many iterations until performance stops increasing. The black trajectory in the figure on the right visualizes how the weights move from higher error regions to lower error regions over the course of training. Needless to say, we can’t do anything remotely like gradient descent on a human brain, or the brain of any other animal!
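The loop described above can be sketched in a few lines of Python. This is a toy illustration, not how production ANNs are trained: the model has only two weights, the data are hypothetical, and the gradient is derived by hand rather than computed by backprop. The function names and the `learning_rate` and `steps` values are assumptions made for this example.

```python
# Toy gradient descent on a two-parameter linear model (illustrative only).
# The gradient is derived by hand here; in a real ANN, backprop computes it.

def loss(w, b, data):
    """Mean squared error of the model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient(w, b, data):
    """Partial derivatives of the mean squared error with respect to w and b."""
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return grad_w, grad_b


def gradient_descent(data, learning_rate=0.05, steps=2000):
    """Repeatedly nudge the weights a small step against the loss gradient."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w, grad_b = gradient(w, b, data)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    # Hypothetical data generated from y = 2x + 1.
    data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
    w, b = gradient_descent(data)
    print(f"w={w:.3f}, b={b:.3f}, loss={loss(w, b, data):.6f}")
```

The key point for the white box argument is that `gradient` reads every weight and `gradient_descent` writes every weight directly. No comparable read-write access exists for a brain.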

Gradient descent is super powerful because, unlike a black box method, it’s almost impossible to trick [LW · GW]. All of the AI’s thoughts are “transparent” to gradient descent and are included in its computation. If the AI is secretly planning to kill you, GD will notice this and almost surely make it less likely to do that in the future. This is because GD has a strong tendency to favor the simplest solution which performs well, and secret murder plots aren’t actively useful for improving human feedback on your actions.

White box alignment in nature

Almost every organism with a brain has an innate reward system. As the organism learns and grows, its reward system directly updates its neural circuitry to reinforce certain behaviors and penalize others. Since the reward system directly updates it in a targeted way using simple learning rules, it can be viewed as a crude form of white box alignment. This biological evidence indicates that white box methods are very strong tools for shaping the inner motivations of intelligent systems. Our reward circuitry reliably imprints a set of motivational invariants into the psychology of every human: we have empathy for friends and acquaintances, we have parental instincts, we want revenge when others harm us, etc. Furthermore, these invariants must be produced by easy-to-trick reward signals that are simple enough to encode in the genome [LW · GW].

This suggests that at least human-level general AI could be aligned using similarly simple reward functions. But we already align cutting edge models with learned reward functions that are much too sophisticated to fit inside the human genome, so we may be one step ahead of our own reward system on this issue.[6] Crucially, I’m not saying humans are “aligned to evolution”; see Evolution provides no evidence for the sharp left turn [LW · GW] for a debunking of that analogy. Rather, I’m saying we’re aligned to the values our reward system predictably produces in our environment.

An anthropologist looking at humans 100,000 years ago would not have said humans are aligned to evolution, or to making as many babies as possible. They would have said we have some fairly universal tendencies, like empathy, parenting instinct, and revenge. They might have predicted these values will persist across time and cultural change, because they’re produced by ingrained biological reward systems. And they would have been right.

When it comes to AIs, we are the innate reward system. And it’s not hard to predict what values will be produced by our reward signals: they’re the obvious values, the ones an anthropologist or psychologist would say the AI seems to be displaying during training. For more discussion see Humans provide an untapped wealth of evidence about alignment [LW · GW].

Realistic AI pauses would be counterproductive

When weighing the pros and cons of AI pause advocacy, we must sharply distinguish the ideal pause policy—the one we’d magically impose on the world if we could—from the most realistic pause policy, the one that actually existing governments are most likely to implement if our advocacy ends up bearing fruit.

Realistic pauses are not international

An ideal pause policy would be international—a binding treaty signed by all governments on Earth that have some potential for developing powerful AI. If major players are left out, the “pause” would not really be a pause at all, since AI capabilities would keep advancing. And the list of potential major players is quite long, since the pause itself would create incentives for non-pause governments to actively promote their own AI R&D.

However, it’s highly unlikely that we could achieve international consensus around imposing an AI pause, primarily due to arms race dynamics: each individual country stands to reap enormous economic and military benefits if they refuse to sign the agreement, or sign it while covertly continuing AI research. While alignment pessimists may argue that it is in the self-interest of every country to pause and improve safety, we’re unlikely to persuade every government that alignment is as difficult as pessimists think it is. Such international persuasion is even less plausible if we assume short, 3-10 year timelines. Public sentiment about AI varies widely across countries, and notably, China is among the most optimistic.

The existing international ban on chemical weapons does not lend plausibility to the idea of a global pause. AGI will be, almost by definition, the most useful invention ever created. The military advantage conferred by autonomous weapons will certainly dwarf that of chemical weapons, and they will likely be more powerful even than nukes due to their versatility and precision. The race to AGI will therefore be an arms race in the literal sense, and we should expect it will play out similarly to the last such race: major powers rushed to make a nuclear weapon as fast as possible.

If in spite of all this, we somehow manage to establish a global AI moratorium, I think we should be quite worried that the global government needed to enforce such a ban would greatly increase the risk of permanent tyranny, itself an existential catastrophe. I don’t have time to discuss the issue here, but I recommend reading Matthew Barnett’s The possibility of an indefinite AI pause [EA · GW] and Quintin Pope’s AI is centralizing by default; let's not make it worse. [EA · GW] In what follows, I’ll assume that the pause is not international, and that AI capabilities would continue to improve in non-pause countries at a steady but somewhat reduced pace.

Realistic pauses don’t include hardware

Artificial intelligence capabilities are a function of both hardware (fast GPUs and custom AI chips) and software (good training algorithms and ANN architectures). Yet most proposals for an AI pause (e.g. the FLI letter and PauseAI[7]) do not include a ban on new hardware research and development, focusing only on the software side. Hardware R&D is politically much harder to pause because hardware has many uses: GPUs are widely used in consumer electronics and in a wide variety of commercial and scientific applications.

But failing to pause hardware R&D creates a serious problem because, even if we pause the software side of AI capabilities, existing models will continue to get more powerful as hardware improves. Language models are much stronger when they’re allowed to “brainstorm” many ideas, compare them, and check their own work—see the Tree of Thoughts paper for a recent example. Better hardware makes these compute-heavy inference techniques cheaper and more effective.

Hardware overhang is likely

If we don’t include hardware R&D in the pause, the price-performance of GPUs will continue to double every 2.5 years, as it did between 2006 and 2021. This means AI systems will get at least 16x faster after ten years and 256x faster after twenty years, simply due to better hardware. If the pause is lifted all at once, these hardware improvements would immediately become available for training more powerful models more cheaply—a hardware overhang. This would cause a rapid and fairly discontinuous increase in AI capabilities, potentially leading to a fast takeoff scenario and all of the risks it entails.
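The 16x and 256x figures follow directly from compounding a fixed doubling time. A minimal Python sketch of the arithmetic, where the function name `speedup` is just an illustrative choice and the 2.5-year doubling time is the figure quoted above:

```python
def speedup(years: float, doubling_time_years: float = 2.5) -> float:
    """Price-performance multiple after `years`, given a fixed doubling time."""
    return 2.0 ** (years / doubling_time_years)


if __name__ == "__main__":
    for years in (10, 20):
        # 10 years is 4 doublings (16x); 20 years is 8 doublings (256x).
        print(f"{years} years -> {speedup(years):.0f}x")
```

The estimate is sensitive to the doubling time: the same function can be called with a different `doubling_time_years` to see how the size of the overhang would change if the historical trend sped up or slowed down.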

The size of the overhang depends on how fast the pause is lifted. Presumably an ideal pause policy would be lifted gradually over a fairly long period of time. But a phase-out can’t fully solve the problem: legally-available hardware for AI training would still improve faster than it would have “naturally,” in the counterfactual where we didn’t do the pause. And do we really think we’re going to get a carefully crafted phase-out schedule? There are many reasons for thinking the phase-out would be rapid or haphazard (see below).

More generally, AI pause proposals seem very fragile, in the sense that they aren’t robust to mistakes in the implementation or the vagaries of real-world politics. If the pause isn’t implemented perfectly, it seems likely to cause a significant hardware overhang which would increase catastrophic AI risk to a greater extent than the extra alignment research during the pause would reduce risk.

Likely consequences of a realistic pause

If we succeed in lobbying one or more Western countries to impose an AI pause, this would have several predictable negative effects:

- Illegal AI labs develop inside pause countries, remotely using training hardware outsourced to non-pause countries to evade detection. Illegal labs would presumably put much less emphasis on safety than legal ones.

- There is a brain drain of the least safety-conscious AI researchers to labs headquartered in non-pause countries. Because of remote work, they wouldn’t necessarily need to leave the comfort of their Western home.

- Non-pause governments make opportunistic moves to encourage AI investment and R&D, in an attempt to leap ahead of pause countries while they have a chance. Again, these countries would be less safety-conscious than pause countries.

- Safety research becomes subject to government approval to assess its potential capabilities externalities. This slows down progress in safety substantially, just as the FDA slows down medical research.

- Legal labs exploit loopholes in the definition of a “frontier” model. Many projects are allowed on a technicality; e.g. they have fewer parameters than GPT-4, but use them more efficiently. This distorts the research landscape in hard-to-predict ways.

- It becomes harder and harder to enforce the pause as time passes, since training hardware is increasingly cheap and miniaturized.

- Whether, when, and how to lift the pause becomes a highly politicized culture war issue, almost totally divorced from the actual state of safety research. The public does not understand the key arguments on either side.

- Relations between pause and non-pause countries are generally hostile. If domestic support for the pause is strong, there will be a temptation to wage war against non-pause countries before their research advances too far:

“If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.” — Eliezer Yudkowsky

- There is intense conflict among pause countries about when the pause should be lifted, which may also lead to violent conflict.

- AI progress in non-pause countries sets a deadline after which the pause must end, if it is to have its desired effect.[8] As non-pause countries start to catch up, political pressure mounts to lift the pause as soon as possible. This makes it hard to lift the pause gradually, increasing the risk of dangerous fast takeoff scenarios (see below).

Predicting the future is hard, and at least some aspects of the above picture are likely wrong. That said, I hope you’ll agree that my predictions are plausible, and are grounded in how humans and governments have behaved historically. When I imagine a future where the US and many of its allies impose an AI pause, I feel more afraid and see more ways that things could go horribly wrong than in futures where there is no such pause.

[1] Of course, even if the benefits outweigh the costs, it would still be bad to pause if there's some other measure that has a better cost-benefit balance.

[2] In brief, the book mostly assumed we will manually program a set of values into an AGI, and argued that since human values are complex, our value specification will likely be wrong, and will cause a catastrophe when optimized by a superintelligence. But most researchers now recognize that this argument is not applicable to modern ML systems which learn values, along with everything else, from vast amounts of human-generated data.

[3] Some argue that power law scaling is a mere artifact of our units of measurement for capabilities and computing power, which can’t go negative, and therefore can’t be related by a linear function. But non-negativity doesn’t uniquely identify power laws. Conceivably the error rate could have turned out to decay exponentially, like a radioactive isotope, which would be much faster than power law scaling.

[4] Called a “connectome.” This was only recently achieved for the fruit fly brain.

[5] Brain-inspired artificial neural networks already exist, and we have algorithms for optimizing them. They tend to be harder to optimize than normal ANNs due to their non-differentiable components.

[6] On the other hand, we might be roughly on-par with our own reward system insofar as it does within-lifetime learning to figure out what to reward. This is sort of analogous to the learned reward model in reinforcement learning from human feedback.

[7] To its credit, the PauseAI proposal does recognize that hardware restrictions may be needed eventually, but does not include it in its main proposal. It also doesn’t talk about restricting hardware research and development, which is the specific thing I’m talking about here.

[8] This does depend a bit on whether safety research in pause countries is openly shared or not, and on how likely non-pause actors are to use this research in their own models.

6 comments


comment by Zach Stein-Perlman · 2023-10-24T04:47:28.957Z · LW(p) · GW(p)

Lots of discussion on the original [EA · GW].

comment by rotatingpaguro · 2023-10-24T06:29:16.911Z · LW(p) · GW(p)

I disagree with so many things here that I'm overwhelmed, but thanks for putting all the arguments in one place coherently.

comment by mesaoptimizer · 2023-10-25T13:35:33.743Z · LW(p) · GW(p)

Evan Hubinger's claim that RSPs are "pauses done right" [LW · GW] is far more rigorous and sensible given the Paul-Christiano-cluster of beliefs about alignment (which I think is wrong). I recommend reading that post instead of this one, even if I also consider it a form of defection from the "let's just aim for an actual indefinite pause" position.

This post feels significantly less rigorous. I disagree with Jacob's claim that this is a good example of "independent thinking". I feel annoyed that Nora's argument is getting more airtime after it was already shown to be significantly flawed given the discussion in the comments of her original post.

comment by Donald Hobson (donald-hobson) · 2023-10-28T21:17:09.799Z · LW(p) · GW(p)

Since we don’t enforce pauses on most new technologies, I hope the reader will grant that the burden of proof is on those who advocate for such a moratorium.

In this essay, I’ll argue an AI pause would increase the risk of catastrophically bad outcomes,

Most technologies have a basically 0 risk of catastrophe. If you think the AI catastrophe risk is at all significant, your "burden of proof" is out the window. Most of that proof was already provided when we proved AI could cause catastrophes.

comment by avturchin · 2023-10-24T17:22:35.590Z · LW(p) · GW(p)

The crux of the pause discussion is whether other countries will join it.

My view about different countries:

- China: may join a moratorium, but could have AI capabilities within a few years.

- Russia: will not join. AI capabilities may be underestimated.

- Iran: will not join. Likely no AI capabilities.

- France: likely to join, and has some AI capabilities.

- North Korea: will not join. AI capabilities are small but may be underestimated anyway.