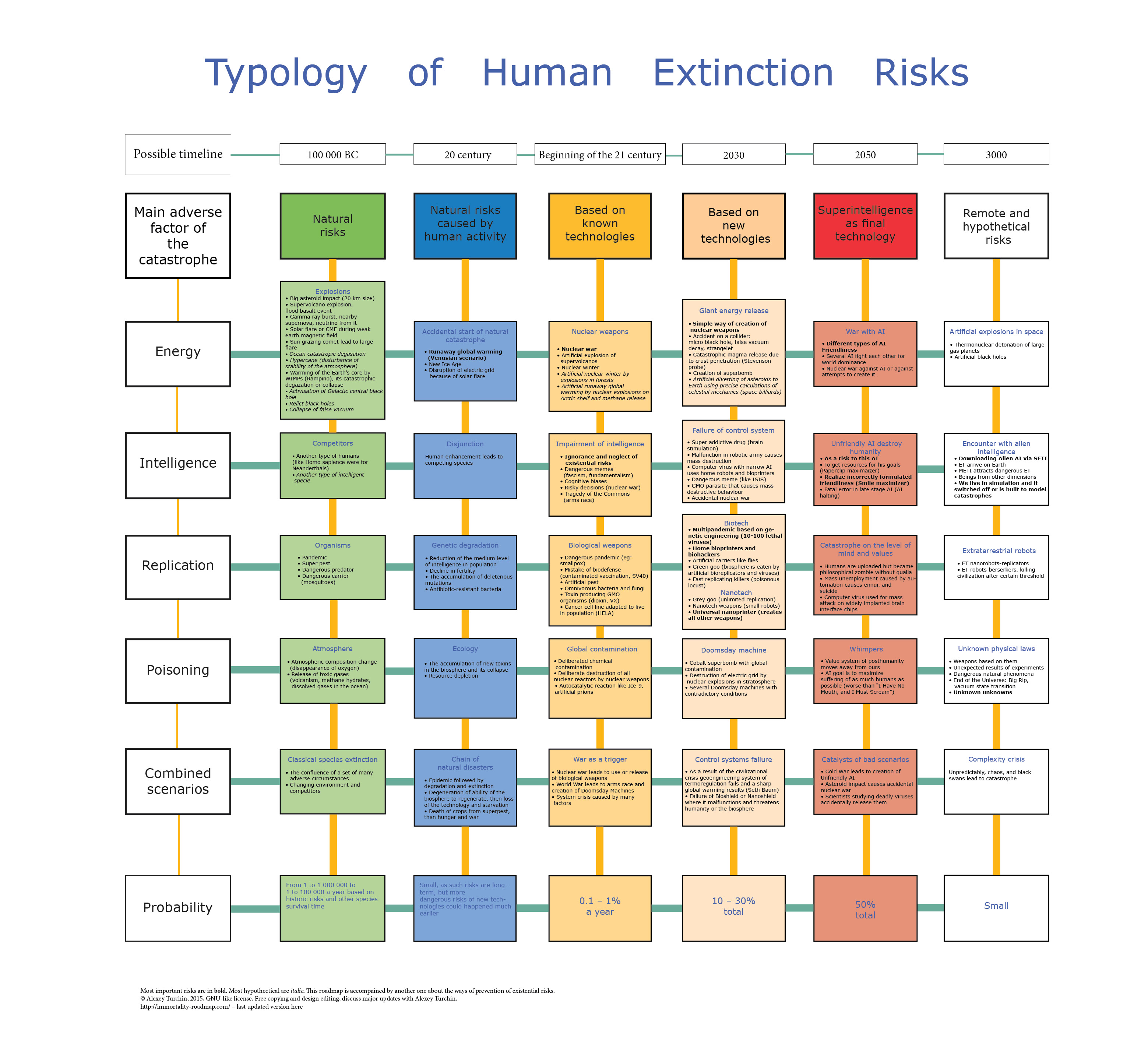

A map: Typology of human extinction risks

post by turchin · 2015-06-23T17:23:53.319Z


In 2008 I was working on a Russian-language book, “Structure of the Global Catastrophe”, and I brought it to one of our friends for review. He was the geologist Aranovich, an old friend of my late mother's husband.

We started to discuss Stevenson's probe: a hypothetical vehicle that could reach the Earth's core by melting its way through the mantle, carrying scientific instruments with it. It would take the form of a large drop of molten iron (at least 60,000 tons), which is theoretically feasible but practically impossible.

Milan Cirkovic wrote an article arguing against this proposal, in which he reasonably concluded that such a probe would leave a molten channel of debris behind it, and that the high pressure inside the Earth's core could push this material upwards. This could trigger a catastrophic degassing of the Earth's core, acting like a giant volcanic eruption that would completely change the composition of the atmosphere and kill all life on Earth.

Our friend told me that his institute had designed an improved version of such a probe, which would be simpler and cheaper and could drill down at a speed of 1000 km per month. This probe would be a special nuclear reactor that uses its own energy to melt through the mantle. (Something similar was suggested in the movie “The China Syndrome” about a possible accident at a nuclear power station, so I don’t think that publishing this information would endanger humanity.) The details of the reactor-probe were kept secret, and no money was available to actually build it. I suggested that it would be wise not to create such a probe. If it were built, it could become the cheapest and most effective doomsday weapon, useful for worldwide blackmail in the style of reasoning of Herman Kahn.

But the most surprising thing in this story for me was not the new way to kill mankind, but how easily I learned its details. If friends from a circle unconnected with x-risk research know of a new way to destroy humanity (while not fully recognising it as such), how many more such ways must be known to scientists in other fields!

I like to make exhaustive lists, and I could not stop myself from compiling a list of human extinction risks. I soon reached around 100 items, although not all of them are truly dangerous. I decided to arrange them into something like a periodic table, that is, to sort them by several parameters, in order to help predict new risks.

For this map I chose two main variables: the basic mechanism of the risk and the historical epoch in which it could happen. Any map should also be based on some model of the future, and I chose Kurzweil’s model of exponential technological growth, which leads to the creation of super technologies in the middle of the 21st century. The risks are also graded by probability: main, possible and hypothetical. I plan to attach a wiki page explaining each risk.

I would like to know which risks are missing from this map. If your ideas are too dangerous to publish openly, PM me. If you think that any mention of your idea would raise the chances of human extinction, just mention that it exists without giving the details.

I think that a map of x-risks is necessary for preventing them. I offered prizes for improving the previous map, which illustrates possible methods of preventing x-risks, and that really helped me to improve it. But I am not offering prizes for improving this map, as that might encourage people to be too creative in thinking up new risks.

The PDF is here: http://immortality-roadmap.com/typriskeng.pdf

6 comments


comment by Lalartu · 2015-06-24T09:42:29.080Z

First, this map mixes two different things: human extinction and the collapse of civilization. It includes many risks that cannot cause the former, such as resource depletion, and things like the "disjunction" box, which I would call not a risk but a desirable future.

Second, it mixes x-risks with things that merely sound bad. Fascism is not an x-risk.

Third, it lacks a category for voluntary extinction.

↑ comment by turchin · 2015-06-24T10:51:35.785Z

The goal of the map is to show only human extinction risks. But the collapse of civilization and dangerous memetic systems are things that raise the probability of human extinction. To make the map clearer I will add a bottom line, where I will collect all the things that change the probability of extinction; these may be called "second level risks".

comment by Gunnar_Zarncke · 2015-06-27T21:47:18.067Z

I like the systematics and the level of completeness you achieved, even though I think some examples are redundant or contrived, or better instances could be found. Yes, criticism is always possible. My main question is how you arrived at the probability judgements for the 2030 and 2050 columns.

↑ comment by turchin · 2015-06-29T21:13:41.636Z

The notion of probability in the case of x-risks is completely different from any other kind of probability, because we have to measure the probability of a unique event which, by definition, will have no observers. So the best way to apply probability here is simply to show our relative stake on the probability of one scenario or another. The numbers are therefore more or less arbitrary, but they have to show that the risks are high, that they are growing, and that the total is around 50 per cent, as estimated by different x-risk authors: Leslie said that it is 30 per cent in 200 years, Rees that it is 50 per cent in 100 years. I am going to make another map just about the probability of x-risks, which would include estimates from different authors and different ideas about how the probability of x-risks should be defined.