Toy Problem: Detective Story Alignment

post by johnswentworth · 2020-10-13T21:02:51.664Z · LW · GW · 5 commentsContents

Why Is This Interesting? None 5 comments

Suppose I train some simple unsupervised topic model (e.g. LDA) on a bunch of books. I look through the topics it learns, and find one corresponding to detective stories. The problem: I would like to use the identified detective-story cluster to generate detective stories from GPT.

The hard part: I would like to do this in such a way that the precision of the notion of detective-stories used by the final system is not limited by the original simple model.

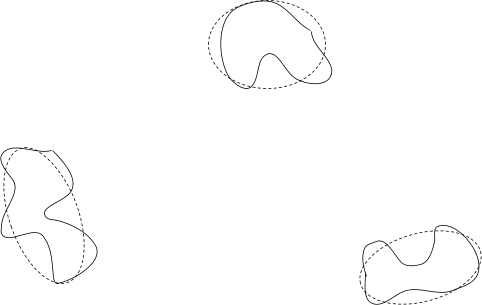

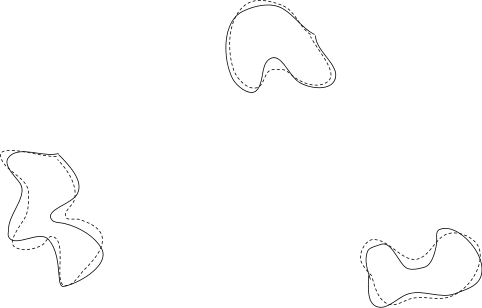

Here’s what that means, visually. The space of real-world books has some clusters in it:

One of those clusters is the detective-story cluster. The simple model approximates those clusters using something simple - for the sake of visualization, ellipses:

The more complex model (e.g. GPT) presumably has a much more precise approximation of the shape of the clusters:

So, we’d like to use the simple model to identify one of the clusters, but then still use the full power of the complex model to sample from that cluster.

Of course, GPT may not contain a single variable corresponding to a cluster-id, which is largely what makes the problem interesting. GPT may not internally use a notion of “cluster” at all. However, the GPT model should still contain something (approximately) isomorphic to the original cluster, since that real pattern is still in the data/environment: since there is a real cluster of "detective stories" in the data/environment itself, the GPT model should also contain that cluster, to the extent that the GPT model matches the data/environment.

In particular, the “precision not limited by original model” requirement rules out the obvious strategy of generating random samples from GPT and selecting those which the simple model labels as detective-stories. If we do that, then we’ll end up with some non-detective-stories in the output, because of shortcomings in the simple model’s notion of detective-stories. Visually, we’d be filtering based on the ellipse approximation of the cluster, which is exactly what we want to avoid.

(Note: I am intentionally not giving a full mathematical formalization of the problem. Figuring out the right formalization is part of the problem - arguably the hard part.)

Why Is This Interesting?

This is a toy model for problems like:

- Representing stable pointers to values [LW · GW]

- Producing an aligned successor AI from an aligned initial AI

- Producing an AI which can improve its notion of human values over time

Human values are conceptually tricky, so rather than aligning to human values, this toy problem aligns to detective novels. The toy problem involves things like:

- Representing stable pointers to the concept of detective-stories

- Producing a successor detective-story-model from an initial detective-story-model

- Producing a model which can improve its notion of “detective-stories” over time

Ideally, a solution to this problem would allow us to build a detective-story-generator with a basin of attraction: given a good-enough initial notion of detective-stories, its notion of detective-stories would improve over time and eventually converge to the "real" notion. Likewise with human values: ideally, we could build a system which converges to "perfect" alignment over time as its world-model improves, as long as the initial notion of human values is good enough.

5 comments

Comments sorted by top scores.

comment by TurnTrout · 2023-05-16T03:23:03.199Z · LW(p) · GW(p)

Here are very quick thoughts on a candidate approach, copied from a Discord conversation:

Possibly you could take a weaker/shallower transformer (like gpt2small) and then use model-stitching to learn a linear map from layer 3/12 of gpt2small to layer 12/48 of gpt2-xl, and then take a "detective story vector" [LW · GW] in gpt2small, and then pass it through the learned linear transform to get a detective story vector in gpt2xl?

The main reason youd do this is if you were worried that a normal steering vector in the powerful model, wouldn't do the real thing you wanted for eg deception reasons / something else messing up the ability to do normal gpt2xl activation additions.

Insofar as the weak model (gpt2small) has a "detective" direction, then you should be able to translate it into the corresponding direction in the powerful model (gpt2xl)

And insofar as you verify that:

- The detective-story vector works in gpt2small (as evidenced by increased generation of detective stories)

- The linearly translated vector seems to work in gpt2xl (as evidenced by increased generation of detective stories)

This seems like good evidence of having "actually steered" the more powerful model (gpt2xl, in this example). This is because neither gpt2small's steering vector, nor the stitching itself, were optimizing directly over the outputs of the translated vector working. Instead, it's more of an "emergent structure" you'd expect if you really had found a direction-in-story-space implied by the weaker model.

(I think gpt2small is too weak for this experiment to actually work; I'd expect you'd need more modern models, like gpt2xl -> Vicuna-13B.)

comment by adamShimi · 2020-10-18T11:29:39.668Z · LW(p) · GW(p)

That looks like a fun problem.

It also makes me think of getting from maps (in your sense of abstraction) to the original variable. Because the simple model has a basic abstraction, a map built from throwing a lot of information; and you want to build a better map. So you're asking how to decide what to add to the map to improve it, without having access to the initial variable. Or maybe differently, you have a bunch of simple abstractions (from the simple models), a bunch of more complex abstractions (from the complex model), and given a simple abstraction, you want to find the best complex abstraction that "improve" the simple abstraction. Is that correct?

For a solution, here's a possible idea if the complex model is also a cluster model, and both models are trained on the same data: look for the learned cluster in the complex model with the bigger intersection with the detective story cluster in the simple model. Obvious difficulties are "how do you compute that intersection?", and for more general complex models (like GPT-N), "how to even find the clusters?" Still, I would say that this idea satisfies your requirement of improving the concept if the initial one is good enough (most of the "true" cluster lies in the simple model's cluster/most of the simple model's cluster lies in the "true" cluster).

Replies from: johnswentworth↑ comment by johnswentworth · 2020-10-18T17:50:42.445Z · LW(p) · GW(p)

Your thoughts on abstraction here are exactly on the mark.

For the cluster-intersection thing, I think the biggest barrier is that "biggest cluster intersection" implicitly assumes some space in which the cluster intersection size is measured - some features, or some distance metric, or something along those lines. A more complex model will likely be using a different feature space, a different implicit metric, etc.

comment by mtaran · 2020-10-17T17:43:41.325Z · LW(p) · GW(p)

The problem definition talks about clusters in the space of books, but to me it’s cleaner to look at regions of token-space, and token-sequences as trajectories through that space.

GPT is a generative model, so it can provide a probability distribution over the next token given some previous tokens. I assume that the basic model of a cluster can also provide a probability distribution over the next token.

With these two distribution generators in hand, you could generate books by multiplying the two distributions when generating each new token. This will bias the story towards the desired cluster, while still letting GPT guide the overall dynamic. Some hyperparameter tuning for weighting these two contributions will be necessary.

You could then fine-tune GPT using the generated books to break the dependency on the original model.

Seems like a fun project to try, with GPT-3, though probably even GPT-2 would give some interesting results.

Replies from: johnswentworth↑ comment by johnswentworth · 2020-10-18T18:02:03.902Z · LW(p) · GW(p)

If anyone tries this, I'd be interested to hear about the results. I'd be surprised if something that simple worked reliably, and it would likely update my thinking on the topic.