Analyzing how SAE features evolve across a forward pass

post by bensenberner, danibalcells, Michael Oesterle, Ediz Ucar (ediz-ucar), StefanHex (Stefan42) · 2024-11-07T22:07:02.827Z

This is a link post for https://arxiv.org/abs/2410.08869

This research was completed during the summer 2024 iteration of the Supervised Program for Alignment Research (SPAR). The team was supervised by @Stefan Heimersheim (Apollo Research). Find out more about the program and upcoming iterations here.

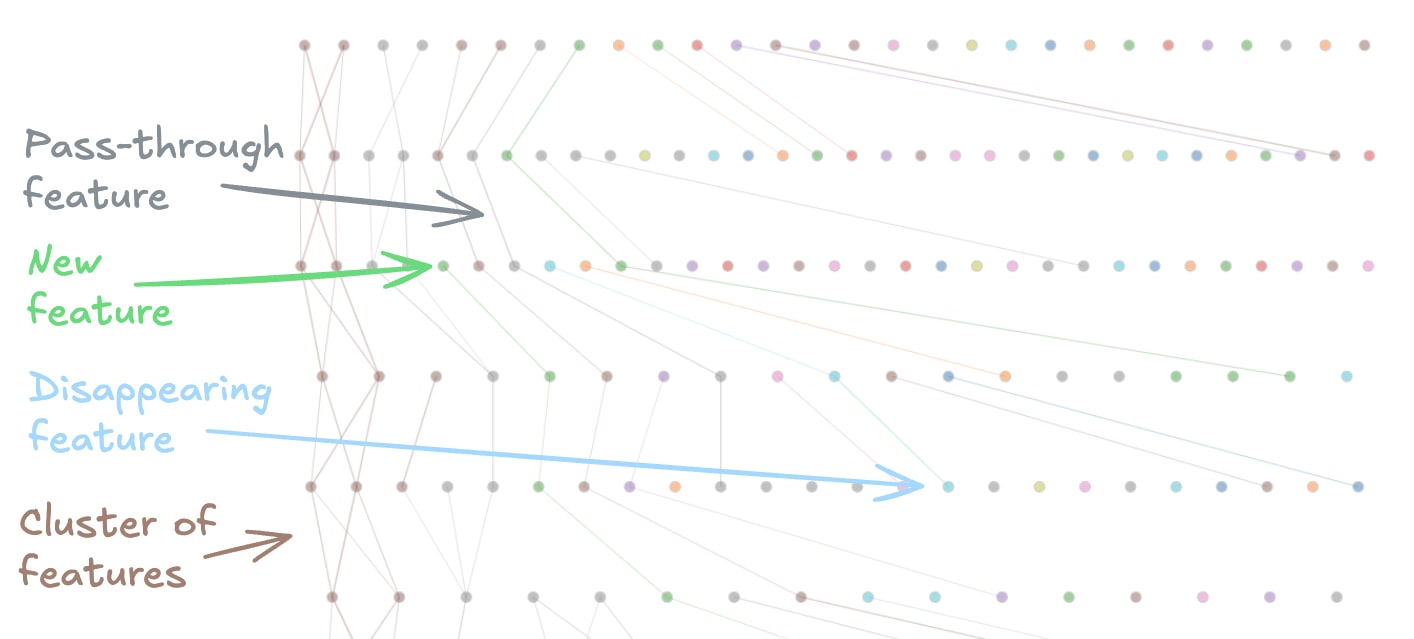

TL;DR: We look for related SAE features based purely on statistical correlations. We consider this a cheap way to estimate, for example, how many new features appear in a layer and how many are passed through from previous layers (similar to the feature lifecycle in Anthropic’s Crosscoders). We find communities of related features, as well as features that appear to be quasi-boolean combinations of previous features.
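To make the "passed through versus new" estimate concrete, here is a minimal sketch of one way such a count could be computed from activation statistics alone. It assumes SAE activations are available as `(n_tokens, n_features)` arrays for two adjacent layers; the function names, the use of Pearson correlation, and the 0.9 threshold are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def cross_layer_correlation(acts_prev, acts_next, eps=1e-8):
    """Pearson correlation between every feature pair across two layers.

    acts_prev: (n_tokens, n_prev) SAE activations at the earlier layer
    acts_next: (n_tokens, n_next) SAE activations at the later layer
    Returns an (n_prev, n_next) correlation matrix.
    """
    a = acts_prev - acts_prev.mean(axis=0)
    b = acts_next - acts_next.mean(axis=0)
    a_norm = np.linalg.norm(a, axis=0)
    b_norm = np.linalg.norm(b, axis=0)
    # Features that never vary get correlation 0 instead of NaN.
    denom = np.maximum(a_norm[:, None] * b_norm[None, :], eps)
    return (a.T @ b) / denom


def count_passed_through(corr, threshold=0.9):
    """Split later-layer features into 'passed through' and 'new'.

    A later-layer feature counts as passed through if some earlier-layer
    feature correlates with it at or above `threshold` (a hypothetical cutoff).
    """
    best_match = corr.max(axis=0)
    passed = int((best_match >= threshold).sum())
    return passed, corr.shape[1] - passed
```

The threshold trades off precision against recall: a lower value labels more features as passed through, so any count obtained this way is best read as a rough estimate.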

Here’s a web interface showcasing our feature graphs.

We ran many prompts through GPT-2 residual stream SAEs and measured which features fired together, and then created connected graphs of frequently co-firing features (“communities”) that spanned multiple layers (inspired by work from Marks 2024). As expected, features within communities fire on similar input tokens. In some circumstances features appeared to “specialize” in later layers.
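The community construction described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: firing patterns are taken to be boolean `(n_tokens, n_features)` arrays per layer, co-firing is scored with Jaccard similarity between adjacent layers only, and the 0.5 threshold and all function names are hypothetical.

```python
import numpy as np


def jaccard_cofiring(fired_a, fired_b):
    """Jaccard similarity between boolean firing patterns of two layers.

    fired_a: (n_tokens, n_a) bool, fired_b: (n_tokens, n_b) bool
    Returns an (n_a, n_b) float matrix.
    """
    a = fired_a.astype(np.int64)
    b = fired_b.astype(np.int64)
    both = a.T @ b  # tokens on which both features fire
    either = a.sum(axis=0)[:, None] + b.sum(axis=0)[None, :] - both
    out = np.zeros(both.shape, dtype=float)
    return np.divide(both, either, out=out, where=either > 0)


def communities(fired_by_layer, threshold=0.5):
    """Connected components of the cross-layer co-firing graph.

    fired_by_layer: list of (n_tokens, n_features) bool arrays, one per layer.
    Nodes are (layer, feature) pairs; an edge joins features in adjacent
    layers whose Jaccard similarity is at least `threshold`.
    Features without any edge are omitted from the result.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(x, y):
        parent[find(x)] = find(y)

    for layer in range(len(fired_by_layer) - 1):
        sim = jaccard_cofiring(fired_by_layer[layer], fired_by_layer[layer + 1])
        for i, j in zip(*np.nonzero(sim >= threshold)):
            union((layer, int(i)), (layer + 1, int(j)))

    groups = {}
    for node in list(parent):
        groups.setdefault(find(node), []).append(node)
    return sorted(sorted(group) for group in groups.values())
```

Each returned community is a sorted list of `(layer, feature)` pairs, so a feature that persists across the forward pass shows up as a chain spanning several layers.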

You can read the paper on arXiv, or find us at the ATTRIB workshop at NeurIPS!
