Scaling laws for dominant assurance contracts

post by jessicata (jessica.liu.taylor) · 2023-11-28T23:11:07.631Z · 5 comments

This is a link post for https://unstableontology.com/2023/11/28/scaling-laws-for-dominant-assurance-contracts/


(note: this post is high in economics math, probably of narrow interest)

Dominant assurance contracts are a mechanism proposed by Alex Tabarrok for funding public goods. The following summarizes a 2012 class paper of mine on dominant assurance contracts. Mainly, I will be determining how much money a dominant assurance contract can raise as a function of how much value is created for how many parties, under uncertainty about how much different parties value the public good. Briefly, the conclusion is that, while Tabarrok asserts that the entrepreneur's profit is proportional to the number of consumers under some assumptions, I find it is proportional to the square root of the number of consumers under these same assumptions.

The basic idea of assurance contracts is easy to explain. Suppose there are N people ("consumers") who would each benefit by more than $S > 0 from a given public good being created (say, a piece of public domain music, or a park). Note that we are assuming utility is linear in money, which is approximately true on the margin but can't be true at the limits. An entrepreneur who is considering creating the public good can then make an offer to these consumers. They announce that everyone has the option of signing a contract. This contract states that, if every consumer signs the contract, then every consumer pays $S, and the entrepreneur creates the public good. The good presumably costs no more than $NS to build, so the entrepreneur does not take a loss.

Under these assumptions, there is a Nash equilibrium of the game, in which each consumer signs the contract. To show this is a Nash equilibrium, consider whether a single consumer would benefit by unilaterally deciding not to sign the contract in a case where everyone else signs it. They would save $S by not signing the contract. However, since they don't sign the contract, the public good will not be created, and so they will lose over $S of value. Therefore, everyone signing is a Nash equilibrium. Everyone can rationally believe themselves to be pivotal: the good is created if and only if they sign the contract, creating a strong incentive to sign.

Tabarrok seeks to solve the problem that, while this is a Nash equilibrium, signing the contract is not a dominant strategy. A dominant strategy is one that is best for a player (here, signing or not signing) regardless of what strategies everyone else chooses. Even if it would be best for everyone if everyone signed, signing won't make a difference if at least one other person doesn't sign. Tabarrok solves this by setting a failure payment $F > 0, and modifying the contract so that if the public good is not created, the entrepreneur pays $F to every consumer who signed the contract. This requires the entrepreneur to take on risk, although that risk may be small if consumers have a sufficient incentive for signing the contract.

Here's the argument that signing the contract is a dominant strategy for each consumer. Pick out a single consumer and suppose everyone else signs the contract. Then the remaining consumer benefits by signing, by the previous logic (the failure payment is irrelevant, since the public good is created whenever the remaining consumer signs the contract).

Now consider a case where not everyone else signs the contract. Then the public good is not created regardless of what the remaining consumer does. By signing the contract, they receive the failure payment $F. By not signing, they get nothing. So signing is still better for them. Therefore, signing the contract is a dominant strategy.
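The case analysis above can be sketched as a small payoff table. This is a minimal illustration, assuming the all-or-nothing contract (the good is built only if all N consumers sign) and a single consumer whose value v exceeds S. The function names and parameter values are hypothetical and do not come from Tabarrok's paper. The code enumerates the two possibilities for the other consumers and checks that signing pays strictly more in each.

```python
def payoff(signs: bool, all_others_sign: bool, v: float, s: float, f: float) -> float:
    """Payoff to one consumer under the all-or-nothing dominant assurance contract."""
    if signs and all_others_sign:
        return v - s   # good is built; the consumer pays S and enjoys value v
    if signs:
        return f       # good is not built; signers receive the failure payment F
    return 0.0         # without this consumer's signature the good is never built


def signing_dominates(v: float, s: float, f: float) -> bool:
    """True if signing is strictly better whatever the other consumers do."""
    return all(
        payoff(True, others, v, s, f) > payoff(False, others, v, s, f)
        for others in (True, False)
    )


print(signing_dominates(v=1.5, s=1.0, f=0.1))  # True: v > S and F > 0
print(signing_dominates(v=1.5, s=1.0, f=0.0))  # False: without F, the second case is a tie
```

With f = 0 the "not everyone else signs" case is a tie rather than a strict gain. This is why the failure payment is what turns a Nash equilibrium into a dominant strategy.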

What if there is uncertainty about how much the different consumers value the public good? This can be modeled as a Bayesian game, where agents (consumers and the entrepreneur) have uncertainty over each other's utility functions. The previous analysis only needed a lower bound $T on everyone's benefit from the public good, with S set below T. So it still applies under some uncertainty, as long as there is such a lower bound. For example, suppose each consumer's value for the good is uniformly distributed in [$1, $2], so that T = $1. Then S can be set to $0.999, and the argument still goes through, generating about $N of revenue (N × $0.999) to fund the public good.

However, things are more complicated when the lower bound is 0. Suppose each consumer's benefit from the public good is uniformly distributed in [$0, $1] (Tabarrok writes "G" for the CDF of this distribution). Then, how can the entrepreneur set S so as to ensure that the good is created and they receive enough revenue to create it? There is no non-zero T value that is a lower bound, so none of the previous arguments apply.

Let's modify the setup somewhat so as to analyze this situation. In addition to setting S and F, the entrepreneur will set K, the threshold number of people who have to sign the contract for the public good to be built. If at least K of the N consumers sign the contract, then they each pay $S and the public good is built. If fewer than K do, then the public good is not created, and each who did sign gets $F.

How much value can a consumer expect to gain by signing or not signing the contract? Let X be a random variable equal to the number of other consumers who sign the contract, and let V be the consumer's value of the public good. If the consumer doesn't sign the contract, the public good is produced with probability \(P(X \ge K)\), producing expected value \(V\,P(X \ge K)\) for the consumer.

Alternatively, if the consumer does sign the contract, the probability that the public good is produced is \(P(X \ge K-1)\). In that case, they get value \(V - S\); otherwise, they get value \(F\). Their expected value can then be written as \((V - S)\,P(X \ge K-1) + F\,P(X < K-1)\).

The consumer will sign the contract if the second quantity is greater than the first, or equivalently, if the difference between the second and the first is positive. Since \(P(X \ge K-1) - P(X \ge K) = P(X = K-1)\), this difference can be written as:

$$V\,P(X = K-1) - S\,P(X \ge K-1) + F\,P(X < K-1).$$

Intuitively, the first term is the expected value the agent gains from signing by being pivotal, while the remaining terms express the agent's expected value from the success and failure payments.
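The decomposition can be checked numerically. Below is a sketch in Python, assuming each other consumer signs independently with probability q, so that X ~ Binomial(N - 1, q). The function names and sample parameters are hypothetical. `sign_gain` uses the three-term form above, while `sign_gain_direct` subtracts the two expected values from the previous paragraphs, so the two should agree up to floating-point rounding.

```python
from math import comb


def binom_pmf(n: int, p: float, k: int) -> float:
    """P(B = k) for B ~ Binomial(n, p); zero outside 0..n."""
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def prob_at_least(n: int, p: float, k: int) -> float:
    """P(B >= k) for B ~ Binomial(n, p)."""
    return sum(binom_pmf(n, p, i) for i in range(max(k, 0), n + 1))


def sign_gain(v, s, f, k, n, q):
    """V P(X = K-1) - S P(X >= K-1) + F P(X < K-1), with X ~ Binomial(N-1, q)."""
    others = n - 1
    p_pivot = binom_pmf(others, q, k - 1)
    p_success = prob_at_least(others, q, k - 1)
    return v * p_pivot - s * p_success + f * (1 - p_success)


def sign_gain_direct(v, s, f, k, n, q):
    """Expected value of signing minus expected value of not signing."""
    others = n - 1
    p_built_if_sign = prob_at_least(others, q, k - 1)
    ev_sign = (v - s) * p_built_if_sign + f * (1 - p_built_if_sign)
    ev_abstain = v * prob_at_least(others, q, k)
    return ev_sign - ev_abstain


print(sign_gain(0.7, 0.5, 0.5, k=50, n=100, q=0.5))
print(sign_gain_direct(0.7, 0.5, 0.5, k=50, n=100, q=0.5))
```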

This difference is nondecreasing in V, since its coefficient on V, \(P(X = K-1)\), is a probability. Therefore, each consumer has an optimal strategy consisting of picking a threshold value W and signing the contract when V > W (note: I write W instead of Tabarrok's V*, for readability). By symmetry, this W value is the same for each consumer, assuming that each consumer's prior distribution over V is the same and these values are independent.

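The distribution over X is, now, binomial with \(N-1\) trials and success probability \(1 - G(W)\), the probability that any given other consumer signs. The probabilities in the difference can therefore be written as:

- \(P(X = K-1) = \binom{N-1}{K-1}\,(1 - G(W))^{K-1}\,G(W)^{N-K}\)
- \(P(X \ge K-1) = \sum_{i=K-1}^{N-1} \binom{N-1}{i}\,(1 - G(W))^{i}\,G(W)^{N-1-i}\)
- \(P(X < K-1) = 1 - P(X \ge K-1)\)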

By substituting these expressions into the utility difference, replacing V with W, and solving for this expression equaling 0 (which is true at the optimal W threshold), it is possible to solve for W:

$$W = \frac{S\,P(X \ge K-1) - F\,P(X < K-1)}{P(X = K-1)}.$$

Since the probabilities on the right-hand side themselves depend on W, this defines W implicitly as a function of F, S, and K. Tabarrok works out the profit-maximizing solution for the case where C = 0 and V is uniform in [0, 1], so that \(G(W) = W\). (I will not go into detail on this point, as it is already justified by Tabarrok.)
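Because the binomial probabilities depend on W, the threshold is a fixed point rather than a closed-form expression. Below is a minimal sketch that finds it by bisection, assuming V uniform on [0, 1], so G(W) = W and each other consumer signs with probability 1 - W. The parameter values N = 100, K = 50, F = S = 0.5 are illustrative assumptions, not Tabarrok's optimized solution. At W = 0 the signing gain equals -S (for K < N), and at W = 1 it equals F (for K ≥ 2). So when S and F are positive the gain changes sign, which guarantees that a root exists.

```python
from math import comb


def binom_pmf(n, p, k):
    """P(B = k) for B ~ Binomial(n, p); zero outside 0..n."""
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def threshold_gap(w, s, f, k, n):
    """Signing gain for a consumer with value exactly w, when others sign with prob 1 - w."""
    q = 1 - w                      # uniform V on [0, 1]: P(V > w) = 1 - w
    m = n - 1                      # number of other consumers
    p_pivot = binom_pmf(m, q, k - 1)
    p_success = sum(binom_pmf(m, q, i) for i in range(max(k - 1, 0), m + 1))
    return w * p_pivot - s * p_success + f * (1 - p_success)


def solve_threshold(s, f, k, n, iters=60):
    """Bisection on [0, 1]; assumes gap(0) = -S < 0 and gap(1) = F > 0."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if threshold_gap(mid, s, f, k, n) < 0:
            lo = mid               # consumer at mid would not sign: threshold is higher
        else:
            hi = mid
    return (lo + hi) / 2


w = solve_threshold(s=0.5, f=0.5, k=50, n=100)
print(round(w, 3))
```

With F = S and K close to N/2, the solved threshold lands near 1/2. This is consistent with the approximate values quoted for Tabarrok's uniform case, though the sketch does not optimize F, S, and K.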

The entrepreneur will set F, S, and K so as to maximize expected profit. Let Y be a binomially distributed random variable with N trials and a success probability of \(1 - G(W)\), which represents how many consumers sign the contract. Let C be the cost the entrepreneur must pay to provide the public good. The entrepreneur's expected profit is then

$$\mathbb{E}[\pi] = \sum_{y=K}^{N} P(Y = y)\,(yS - C) - \sum_{y=0}^{K-1} P(Y = y)\,yF,$$

which (as shown in the paper) can be simplified to

$$\mathbb{E}[\pi] = K\,W\,P(Y = K) - C\,P(Y \ge K).$$
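One way to see where the compact form comes from is sketched below; the presentation may differ from the paper's. Write the profit with indicator functions. Apply the identity \(y\binom{N}{y} = N\binom{N-1}{y-1}\) to move from \(Y \sim \text{Binomial}(N, 1-G(W))\) to \(X \sim \text{Binomial}(N-1, 1-G(W))\). Then use the indifference condition at the threshold W, which says \(S\,P(X \ge K-1) - F\,P(X < K-1) = W\,P(X = K-1)\):

$$
\begin{aligned}
\mathbb{E}[\pi]
&= S\,\mathbb{E}\left[Y\,\mathbf{1}\{Y \ge K\}\right] - F\,\mathbb{E}\left[Y\,\mathbf{1}\{Y < K\}\right] - C\,P(Y \ge K) \\
&= N\,(1 - G(W))\left[S\,P(X \ge K-1) - F\,P(X < K-1)\right] - C\,P(Y \ge K) \\
&= N\,(1 - G(W))\,W\,P(X = K-1) - C\,P(Y \ge K) \\
&= K\,W\,P(Y = K) - C\,P(Y \ge K).
\end{aligned}
$$

The last step uses \(N\binom{N-1}{K-1} = K\binom{N}{K}\).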

Note that probability terms involving Y depend on W, which itself depends on F, S, and K. Tabarrok analyzes the case where C = 0 and V is uniform in [0, 1], finding that the good is produced with probability approximately 0.5, K is approximately equal to N/2, W is approximately equal to 1/2, and F is approximately equal to S.

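To calculate profit, we plug these numbers into the profit equation, yielding:

$$\mathbb{E}[\pi] \approx \frac{N}{2}\cdot\frac{1}{2}\cdot P\!\left(Y = \tfrac{N}{2}\right) = \frac{N}{4}\,P\!\left(Y = \tfrac{N}{2}\right).$$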

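Using the normal approximation of a binomial (with W = 1/2, Y has mean N/2 and standard deviation \(\sqrt{N}/2\)), we can estimate the term \(P(Y = N/2)\) to be \(\frac{2}{\sqrt{N}}\,\varphi(0)\), where \(\varphi(x)\) is the probability density of the standard normal distribution at x. Note that this term is the probability of every consumer being pivotal, \(P(Y = K)\); intuitively, the entrepreneur's profit is coming from the incentive consumers have to contribute due to possibly being pivotal. The expected profit is then \(\frac{N}{4}\cdot\frac{2}{\sqrt{N}}\,\varphi(0) = \frac{\sqrt{N}}{2}\,\varphi(0) \approx 0.2\sqrt{N}\), which is proportional to \(\sqrt{N}\).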
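Here is a quick numerical check of the square-root law. It assumes the compact profit form \(K\,W\,P(Y = K)\) with K = N/2, W = 1/2, and C = 0. The sketch compares the exact binomial value with the normal approximation \(\frac{\sqrt{N}}{2}\varphi(0)\); the helper names are hypothetical. Exact big-integer division is used so that large N does not overflow floating point.

```python
from math import comb, pi, sqrt


def central_pmf(n: int) -> float:
    """Exact P(Y = n/2) for Y ~ Binomial(n, 1/2), n even."""
    return comb(n, n // 2) / 2**n   # int / int true division stays accurate for huge n


def exact_profit(n: int) -> float:
    """K * W * P(Y = K) with the assumed values K = n/2, W = 1/2, C = 0."""
    return (n / 2) * 0.5 * central_pmf(n)


def approx_profit(n: int) -> float:
    """Normal approximation sqrt(n)/2 * phi(0)."""
    phi0 = 1 / sqrt(2 * pi)          # standard normal density at 0
    return sqrt(n) / 2 * phi0


for n in (100, 400, 1600, 6400):
    print(n, round(exact_profit(n), 3), round(approx_profit(n), 3), round(exact_profit(n) / sqrt(n), 4))
```

The last column, profit divided by \(\sqrt{N}\), stays close to \(\varphi(0)/2 \approx 0.2\) as N grows. Each quadrupling of N only doubles the profit.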

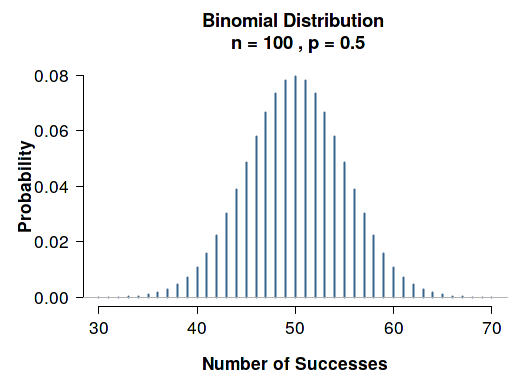

The plot above shows the distribution of Y in the N=100 case; every consumer is pivotal at Y=50, which has approximately 0.08 probability.

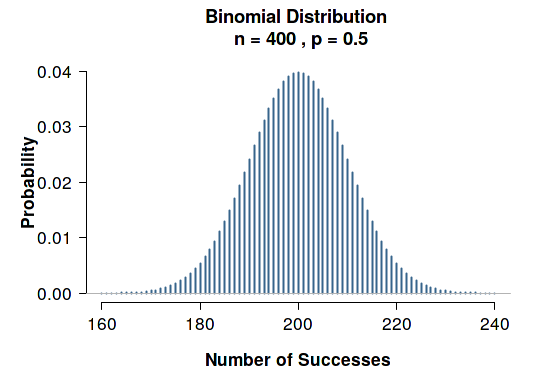

This second plot shows N=400; the probability of everyone being pivotal is about 0.04, half of the probability in the N=100 case. Quadrupling N halves the probability of being pivotal, consistent with a \(1/\sqrt{N}\) scaling law.
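The two probabilities quoted for the plots can also be reproduced by simulation. Below is a minimal Monte Carlo sketch, assuming each consumer signs independently with probability 1/2 (the W = 1/2 case). The sample size and seed are arbitrary choices, and the function name is hypothetical. Each draw counts the set bits of a random N-bit integer, which is a sample from Binomial(N, 1/2).

```python
import random


def estimate_pivot_prob(n: int, samples: int = 50_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(Y = n/2) for Y ~ Binomial(n, 1/2)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        y = bin(rng.getrandbits(n)).count("1")   # number of signers among n consumers
        if y == n // 2:
            hits += 1
    return hits / samples


p100 = estimate_pivot_prob(100)
p400 = estimate_pivot_prob(400)
print(p100, p400, p400 / p100)
```

The estimates should land near 0.08 and 0.04, with a ratio near 1/2, up to sampling noise.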

Tabarrok, however, claims that the expected profit in this case is proportional to N/2:

> Setting V* to 1/2 and K to N/2 it is easy to check that expected profit is proportional to N/2 which is increasing in N.

This claim in the paper simply seems to be a mathematical error, although it's possible I am missing something. In my 2012 essay, I derived that profit was proportional to \(\sqrt{N}\), but didn't point out that this differed from Tabarrok's estimate, perhaps due to an intuition against openly disagreeing with authoritative papers.

We analyzed the case when V is uniform in [0, 1]; what if instead V is uniform in [0, Z]? This leads to simple scaling: W becomes Z/2 instead of 1/2, and expected profit is proportional to \(Z\sqrt{N}\). This yields a scaling law for the profit that can be expected from a dominant assurance contract.
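Here is the scaling step spelled out as a sketch. It uses the same assumptions as the uniform [0, 1] case: K = N/2, C = 0, the compact profit form \(K\,W\,P(Y = K)\), and the normal approximation. With V uniform in [0, Z], the threshold W = Z/2 still leaves each consumer signing with probability 1/2, so only the factor W changes:

$$
\mathbb{E}[\pi] \approx \frac{N}{2}\cdot\frac{Z}{2}\cdot P\!\left(Y = \tfrac{N}{2}\right) \approx \frac{NZ}{4}\cdot\frac{2}{\sqrt{N}}\,\varphi(0) = \frac{Z\sqrt{N}}{2}\,\varphi(0) \approx 0.2\,Z\sqrt{N}.
$$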

For some intuition on why profit is proportional to \(\sqrt{N}\), consider that the main reason for someone to sign the contract (other than the success and failure payments, which don't depend on V) is that they may be the pivotal person who produces the good. If you randomly answer N true-or-false questions, your mean score will be N/2. The probability that a given question is pivotal (in the sense that your score is above 50% only because you answered that question correctly) will be proportional to \(1/\sqrt{N}\) by the normal approximation to a binomial. Multiplying a pivot probability of order \(1/\sqrt{N}\) by N consumers gives revenue of order \(\sqrt{N}\). Introducing uncertainty into whether others sign the contract will, in general, put an upper bound on how pivotal any person can believe themselves to be, because they can expect some others to sign and some others not to sign the contract. By contrast, in the case where there was a positive lower bound on every consumer's valuation, it was possible for a consumer to be 100% confident that the good would be produced if and only if they signed the contract, implying a 100% chance of being pivotal.

The fact that profit in the uncertain-valuation case scales with \(\sqrt{N}\) is a major problem for raising large amounts of money from many people with dominant assurance contracts. It is less of a problem when raising money from a smaller number of people, since \(\sqrt{N}\) is a larger fraction of N in those cases.

Excludable goods (such as copyrighted content) can in general raise revenue proportional to the total value created, even under uncertainty about consumer valuations. Dominant assurance contracts can work with non-excludable goods, but at the cost of reducing the expected revenue that can be raised.

[ED NOTE: since writing this post, I have found a corresponding impossibility result (example 8) in the literature, showing that revenue raised can only grow with \(\sqrt{N}\), where \(N\) is the number of consumers, under some assumptions.]

5 comments


comment by TimL · 2024-01-14T13:08:40.730Z

Hi, great post. I've also noted the square-root rule and think it should be more widely known. I've also co-authored a paper showing that the dominant assurance contract described by Tabarrok does not lead to larger profit than a standard contract with the same parameters and have some other work that I'm writing up on the same thing for large N with fixed and variable payments.

I've come up with the upper limit of for the fixed-payment, and something similar for variable payment.

Is there a lot of interest in this at the moment? I agree, it's of narrow interest, so I'm curious why you've posted this now.

(In case you're wondering how I got here, I followed Tabarrok's recent post about EnsureDone on MarginalRevolution. I'd heard of LessWrong before then but hadn't joined).

↑ comment by jessicata (jessica.liu.taylor) · 2024-01-15T02:50:39.079Z

Nice job with the bound! I've heard a number of people in my social sphere say very positive things about DACs so this is mainly my response to them.

comment by JenniferRM · 2023-11-30T18:42:59.873Z

I think the utility function and probability framework from VNM rationality is a very important kernel of math that constrains "any possible agent that can act coherently (as a limiting case)".

((I don't think of the VNM stuff as the end of the story at all, but it is an onramp to a larger theory that you can motivate and teach in a lecture or three to a classroom. There's no time in the VNM framework. Kelly doesn't show up, and the tensions and pragmatic complexities of trying to apply either VNM or Kelly to the same human behavioral choices in real life and have that cause your life to really go better are non-trivial!))

With that "theory which relates to an important agentic process" as a background, I have a strong hunch that Dominant Assurance Contracts (DACs) are really really conceptually important, in a similarly deep way.

I think that "theoretical DACs" probably constrain all possible governance systems that "collect money to provide public services" where the governance system is bounded by some operational constraint like "freedom" or "non-tyranny" or "the appearance of non-tyranny" or maybe "being limited to organizational behavior that is deontically acceptable behavior for a governance system" or something like that.

In the case of DACs, the math is much less widely known than VNM rationality. Lesswrong has a VNM tag that comes up a lot, but the DAC tag has less love. And in general, the applications of DACs to "what an ideal tax-collecting service-providing governance system would or could look like" isn't usually drawn out explicitly.

However, to me, there is a clear sense in which "the Singularity might produce a single AI that is mentally and axiologically unified as a sort of 'single thing' that is 'person-shaped', and yet it might also be vast, and (if humans still exist after the Singularity) would probably provide endpoint computing services to humans, kinda like the internet or kinda like the government does".

And so in a sense, if a Singleton comes along who can credibly say "The State: it is me" then the math of DACs will be a potential boundary case on how ideal such Singletons could possibly work (similarly to how VNM rationality puts constraints on how any agent could work) if such Singletons constrained themselves to preference elicitation regimes that had a UI that was formal, legible, honest, "non-tyrannical", etc.

That is to say, I think this post is important, and since it has been posted here for 2 days and only has 26 upvotes at the time I'm writing this comment, I think the importance of the post is not intelligible to most of the potential audience!

↑ comment by jessicata (jessica.liu.taylor) · 2023-11-30T22:10:12.762Z

Thanks for the comment! I do think DACs are an important economics idea. This post details the main reason why I don't think they can raise a lot of money (compared with copyright etc) under most realistic conditions, where it's hard to identify lots of people who value the good at above some floor. AGI might have an easier time with this sort of thing through better predictions of agents' utility functions, and open-source agent code.