The AI Driver's Licence - A Policy Proposal

Post by Joshua W (sooney), Tessa Malan (tessamalan) · 2024-07-21


TL;DR: In response to the escalating capabilities and associated risks of advanced AI systems, we advocate for the implementation of an “AI Driver’s Licence” policy. Our proposal is informed by existing licencing frameworks and existing AI legislation. This initiative mandates that users of advanced AI systems obtain a licence, ensuring they have undergone minimal technical and ethical training. The licence requirements would be defined by an international regulatory body, such as ISO, to maintain consistent and up-to-date standards globally. Independent organisations would issue the licences, while local governments would enforce compliance through audits and penalties. By focusing on the usage stage of the AI lifecycle, this policy aims to mitigate misuse risks, contributing to a safer AI landscape. Our proposal complements existing regulations and emphasises the need for international cooperation to effectively manage the deployment and usage of advanced AI technologies.

Introduction

Sam Altman, the CEO of OpenAI, claims we need “a new agency that licences any effort above a certain scale of capabilities and could take that licence away and ensure compliance with safety standards” (Wheeler, 2023).

Licencing has a long history, with key success stories in protecting public safety by controlling access to potentially harmful activities and items. In some cases, however, it has also had adverse effects, such as driving large parts of an industry onto the black market, an entirely unregulated space.

Today, we face an uncertain and rapidly changing AI landscape, with AI tools and models capable of increasingly broad and autonomous actions. Many have written about the catastrophic scenarios that may unfold if AI is not appropriately regulated, and experts increasingly assign higher probabilities to these scenarios playing out.

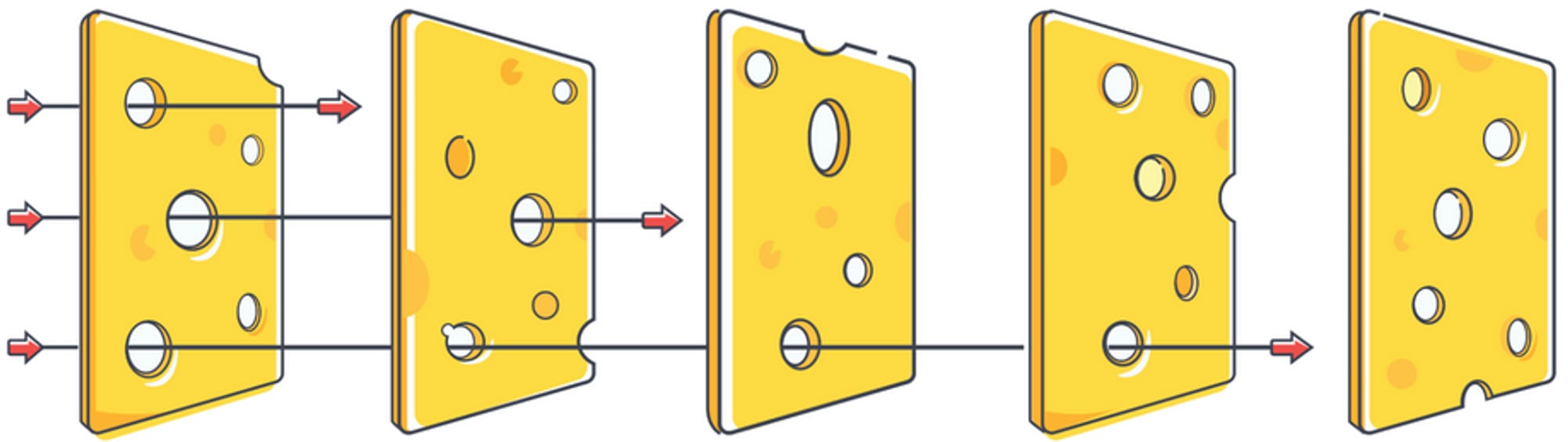

A safe AI landscape necessarily involves various legally enforceable regulations, applied at all stages of the AI life cycle. We can think of this body of cooperating regulations as a Swiss cheese model: multiple layers of regulation, each acting on a different aspect of the problem, are individually imperfect but highly effective in combination. By using different policy levers, this approach can guard against a range of risks and adverse effects.

We want to explore one slice of the Swiss cheese model that could reduce the risk of misuse of advanced AI systems. Our proposal: requiring users of advanced AI to hold an “AI driver’s licence”.

In this piece, we walk through our research and argument, and outline at a high level what our proposal would entail.

Moving Forward - Scenarios

Going forward, AI regulation could take several different paths:

- Do nothing: everything continues as is, people have immediate access to advanced AI without clear consequences for malicious use.

- Regulating development of AI: rigorous regulations on the development of advanced AI models may ensure that what makes it into the public sphere for usage is already safe enough.

- Ban/limit advanced AI from the get go: nobody is (legally) allowed to produce advanced AI models.

- International standards for AI model life cycles: a reputable, independent body draws up licencing standards, governments can reference these standards and use them in their regulations.

This list of scenarios is not exhaustive, and the scenarios are not equally likely or easy to implement. Here, we focus on the fourth option, in which regulation is not done entirely by governmental bodies but also draws on the work of international organisations.

Background

Defining Advanced AI Models

Our policy proposal does not aim to regulate the usage of all AI systems, only those with sufficient capabilities to pose a substantial threat to society. The exact definition of such an advanced AI system would depend on the scope and implementation of a driver’s licence policy for AI systems. In some cases it may be beneficial to remain vague, in others to provide a sharp definition. It could follow the notion of transformative AI in Gruetzemacher and Whittlestone, an AI system “short of achieving superintelligence”, or resemble Google DeepMind’s definition of AGI, an “artificial intelligence that matches (or outmatches) humans on a range of tasks”. To make the definition more tangible for policymakers, however, we propose that users of any AI model falling under the high-risk category of the EU AI Act be required to hold an AI driver’s licence. We use the term advanced AI systems for models in this risk category.

When we mention the “current AI landscape”, we refer to models with capabilities that do not exceed those of OpenAI’s GPT-4o or Anthropic’s Claude Opus. These are all models below computing powers of FLOPS according to Epoch.

Risks of unregulated AIs

Many experts and stakeholders in the AI field have discussed what they perceive to be the likely dangerous results of advanced AI left unregulated.

In An Overview of Catastrophic AI Risks, four categories are identified:

- Malicious use (‘individuals or groups intentionally use AIs to cause harm’)

- AI race (‘competitive environments compel actors to deploy unsafe AIs or cede control to AIs’)

- Organisational risks (‘human factors and complex systems can increase the chances of catastrophic accidents’)

- Rogue AIs (‘inherent difficulty in controlling agents far more intelligent than humans’)

The two risks that a driver’s licence for AI systems would address most directly are malicious use and organisational risks. We believe our proposal should be part of a larger system of regulations, one layer amongst others, which together provide checks and balances at each phase of the AI life cycle.

The definition of a licence

Legally, a licence is defined by the Law Dictionary as follows:

“A permission, accorded by a competent authority, conferring the right to do some act which without such authorisation would be illegal, or would be a trespass or a tort”.

A certificate, or certification, is defined by the Law Dictionary as:

“A written assurance, or official representation, that some act has or has not been done, or some event occurred, or some legal formality been complied with”.

A certificate implies a certain level of knowledge or expertise in a subject. Certificates are often used in specific artisan fields, for example hairdressing.

The definitions aren’t clear-cut: some regulations require specific certifications before someone may open an occupational practice or business. Moving forward, however, we frame our proposal as a licence, since the formal definition of a licence aligns more closely with it.

Licencing as a tool

Licencing has historically been used as a tool, with two broad outcomes:

- Adherence to standards for the safety of citizens (e.g. physician and trade licences),

- Anti-competitive occupational licencing - where safety is not a major concern - as a tool to restrict access and drive up prices (e.g. licences for florists)

Early licences were introduced in good faith, ensuring that physicians in the 16th century had the necessary education and qualifications to administer medical care to the general public. Today, we licence legal access to a wide range of occupations, activities, technologies and dangerous goods. With harm reduction being the main motivation of our policy proposal, we view licencing as a tool to introduce standards for the safety of citizens.

Safety-oriented licencing requirements can also enjoy broad public support: according to a 2019 survey, 84% of Americans “supported requiring first-time gun purchasers to take a safety course”, which is part of obtaining a gun licence.

Self / industrial vs governmental regulation

Chang Ma proposes an Externality view to assess whether an industry should be self or government regulated. This framework uses three main benchmarks to propose the optimal regulatory setup - which in most cases tends to be a hybrid self & government model - and determines which of the two bodies should have more regulatory power.

The benchmarks:

- Externalities to society (costs or benefits that affect a third party) and externalities within the industry (factors that affect all producers),

- Monopoly distortions,

- The degree of asymmetric information (higher when the industry holds more expertise that the government inherently cannot access, impeding its ability to regulate effectively, e.g. in the securities market).

Ma’s conclusion is that

“[…] self-regulation is more desirable than government regulation if the degree of asymmetric information is larger than the size of monopoly distortion and externalities to society”.

In his paper, Ma briefly analyses the tech industry. He advocates a hybrid regulatory approach with more government control, since market concentration (monopoly or oligopoly) in the tech sector is high and the externalities to society are significant (for example, consumer concerns about their personal information). Together, these outweigh the government’s lack of expert knowledge.
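Ma’s decision rule can be made concrete as a simple comparison. The sketch below is our own illustrative reading, not Ma’s formal model: it assumes each factor can be scored on a common non-negative scale, and it interprets “larger than the size of monopoly distortion and externalities to society” as larger than their combined size. The function name and the example scores are hypothetical.

```python
def prefers_self_regulation(asymmetric_info: float,
                            monopoly_distortion: float,
                            societal_externalities: float) -> bool:
    """Return True if self-regulation is preferred under our reading of Ma's rule.

    Assumption (ours, not Ma's): all three factors are scored on the same
    non-negative scale, and asymmetric information is compared with the
    *sum* of monopoly distortion and externalities to society.
    """
    scores = (asymmetric_info, monopoly_distortion, societal_externalities)
    if any(score < 0 for score in scores):
        raise ValueError("scores must be non-negative")
    return asymmetric_info > monopoly_distortion + societal_externalities


# Hypothetical scores for an industry where government expertise is scarce.
securities = prefers_self_regulation(asymmetric_info=8, monopoly_distortion=2,
                                     societal_externalities=3)
# Hypothetical scores for the tech sector: high concentration and large
# externalities outweigh the information gap, as in Ma's tech-sector analysis.
tech = prefers_self_regulation(asymmetric_info=5, monopoly_distortion=4,
                               societal_externalities=4)
print(securities, tech)  # True False
```

Since most real industries land near the boundary, Ma’s framework tends to recommend a hybrid model; the boolean here only indicates which side should hold more regulatory power.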

A mix in between: ISO standards

ISO (International Organization for Standardization) standards are international standards for a large range of fields - from a standardised cup of tea to energy management systems. These standards help international compatibility and promote co-operation (Fitzpatrick, 2023).

In the ISO system,

“Organisations receive certification if a Certification Body verifies that they have followed the specifications of a management system standard”

and,

“Certification Bodies gain accreditation if they are formally authorised by an Accreditation Body to perform certification assessments”.

Some of these standards are referenced by the legislature of countries, making them legally binding. For example, ISO 13485:2016 sets out standards for the manufacturing of medical devices. Several countries, including Australia, Canada, the EU, Japan, Malaysia, Singapore, the UK and the USA, have written this standard into their legislation (Kristina Zvonar Brkic, 2023).

Three Avenues for Regulation

We have discussed three avenues for regulation. With all three, the government is necessarily involved - but the extent of its role varies.

- Self: Industries are responsible for setting standards and administering licences, for example lawyers are governed by the standards set out by bar associations. These are still subject to constitutional legislation and government audits.

- Government: The government sets regulations and local governmental authorities enforce them, for example consumer protection standards in the pharmaceutical industry.

- Independent, objective organisations: Independent organisations are set up as a regulatory authority, ideally with checks and balances ensuring the continued objectivity. They are independent of governments and should not have any conflicts of interest. The academic peer review system is one example of an independent “organisation” being the enforcing authority, whereas in the case of ISO, the organisation acts as the regulating authority.

Development vs Usage Regulation

The regulation of AI looks different at each lifecycle stage. The Centre for Emerging Technology and Security (CETAS) defines three stages:

- Design, testing and training

- Immediate deployment and usage

- Longer-term deployment and diffusion

Each stage has different associated risk pathways. The scope of our proposal focuses on stages 2 and 3. CETAS suggests policy interventions aimed at achieving three goals: creating visibility and understanding; promoting best practices; and establishing incentives and enforcement.

Specifically, they suggest that policymakers should:

“Explore how different regulatory tools, including licencing, registration and liability can be used to hold developers accountable and responsible for mitigating the risks of increasingly capable AI systems”.

While the need for legal liability at the deployment and usage stage is highlighted, there is no mention of licencing the usage of AI in this comprehensive framework.

Related and Existing Work

Existing work on creating formal “barriers to entry” for AI technology

Much work has been done on regulating what is allowed to be developed. Here we summarise the legislation of two governments: two contrasting examples that we revisit later.

- The EU AI Act takes a risk-based approach. It classifies AI systems based on their risk level, imposing stricter rules on high-risk applications like those used in healthcare, transportation, and law enforcement. Developers are mandated to document their systems clearly, including a summary of the data used to train models.

- Chinese AI regulations focus more on content control and target recommendation algorithms, deep synthesis, and generative AI. Specific requirements include algorithm registration, data security assessments, content traceability, and restrictions on discriminatory algorithms.

Some certifications have emerged that give those who obtain them more credibility. For example, there is an IAPP certificate for Artificial Intelligence Governance Professionals, and Stanford University School of Engineering offers an Artificial Intelligence Graduate Certificate. Such certificates mostly seem to improve salary prospects for those working in the field of AI, but they are not requirements to access any AI technology.

Existing licencing examples and comparison

Before advancing with user licencing for AI systems we first look at existing licencing and certification schemes. We compare four different licencing examples in terms of how they are issued, regulated and enforced as well as what the requirements are in order to obtain such a licence.

Huggingface🤗 - Terms of Service

Huggingface is a popular platform that promotes open-source access to datasets, pre-trained AI models and computational power. Its pre-trained models range from small, low-performing models to large models with state-of-the-art performance. To use Huggingface’s services, users need to read and accept its Terms of Service. No governmental authority is involved.

CITI Certificate - Data or Specimens Only Research

The certificate for CITI’s Data or Specimens Only Research course is required by many organisations that perform research with human data. For example, Physionet from the Massachusetts Institute of Technology (MIT) only shares its recorded patient data with researchers who have passed this course. CITI designs the course and also issues the certificate once the student has passed an online examination. This is meant to ensure adherence to ethical standards when processing and researching the data. No governmental authority is involved.

🇬🇧 Driver’s Licence

Citizens are commonly required to hold a driver’s licence in order to drive a car. While a car can be used as a utility, it can also pose a serious threat when misused or abused. In the UK, the government is responsible for both regulating the requirements and issuing licences. It also enforces that citizens do not drive without a licence and imposes hefty fines if the rules are broken. The requirements are much more extensive than in the previous two examples, and the driver’s licence is arguably the most expensive of the examples provided. There are different licence classes for various types of vehicles, but in any case, once obtained, a licence remains valid indefinitely.

🇨🇭 Firearm Licence

Firearms are a technology designed to kill and more dangerous than cars. Switzerland has a homicide rate 20 times lower than the US despite ranking in the top 8% worldwide for firearms per capita. While Switzerland also defines different classes for different types of firearms, the regulating, issuing and enforcing authorities are all governmental. Safety training is not required, but background checks are performed and the requirements are stricter. Firearm licences are mostly not permanent and need to be renewed after five years.

Comparing Licencing Examples - Table

The following table provides an overview of the different licencing schemes and how they compare across several dimensions. The right-most column describes our policy proposal and is discussed further in the next section.

| | Huggingface - Terms of Service | CITI Certificate (ethical research) | Driver’s licence (UK) | Firearm Licence (Switzerland) | AI User Licence (proposal) |
|---|---|---|---|---|---|
| Regulating Authority | Huggingface (internal policies) | CITI | Governmental organisation (DVLA) | Swiss federal government | International org approved by government (e.g. UN, ISO) |
| Issuing Authority | Huggingface | CITI | Governmental organisation (Driver and Vehicle Licensing Agency (DVLA)) | Police authority (governmental organisation) | Non-governmental organisation |
| Enforcing Authority | Huggingface (internal review teams) | Organisation that requires the certificate | Police, governmental organisation (Driver and Vehicle Standards Agency) | Local police forces (governmental organisation) | Local government |
| Government regulated | ❌ | ❌ | ✅ | ✅ | ✅ / ❌ |
| Requirements | Reading and accepting, above 13 years | Studying course contents and passing a test, above 13 years | Studying theory, practical driving hours, passing theoretical and practical exams, passing a vision examination, above 15 years 9 months, valid ID | No criminal record, no perceived threats, legitimate need, above 18 years | Study course, pass a test (all online), valid ID |
| Background Checks | ❌ | ❌ | ❌ | ✅ | ❌ |
| Time to acquire | ~42 min if the text is read, else ~1 min | ~5 hours | 3-6 months | Weeks to months | 5-12 hours |
| Costs | Free | Free (sponsored by university) | ~$2000 (incl. lessons, examination and processing fees) | ~$56 (processing fees) | Low costs, universally obtainable |
| Validity | Permanent | 5 years | Permanent | Mostly 5 years, some registrations are permanent | Permanent, unless new AI models require reassessment of risks and training |
| Legal consequences for disobeying | Account suspension, legal action for violations | Without certificate there is no access | Fines, revocation, imprisonment | Fines, revocation, imprisonment | Without a licence there is no access; AI deployers & licence issuers face penalties for violations |

Proposal - The AI Driver’s Licence

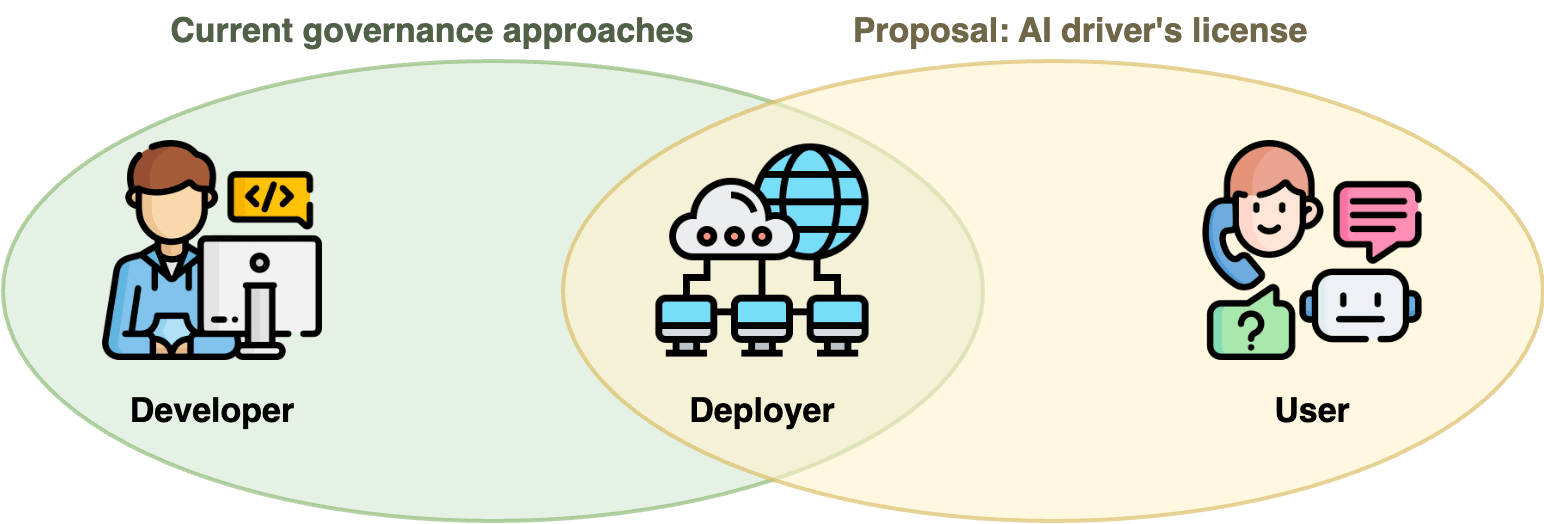

We propose that users of an advanced AI system be obliged to obtain a licence in order to use it. This licence permits the holder to use any model that falls under the definition of advanced AI systems. Unlike most existing policy proposals (see the figure above), this policy would not regulate the developers of these models; instead, it targets the users and deployers of these AI systems.

Users are under the obligation to have an AI driver’s licence before using advanced AI systems. At the same time deployers of these systems are obligated to check that their customers (or users) possess such a licence. If users or deployers fail to comply with these rules they face penalties.
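The two obligations above can be sketched from a deployer’s point of view. This is a minimal illustration under our own assumptions: a licence is a record naming its holder and issuing organisation, and the enforcing authority publishes a list of accredited issuing organisations. The `Licence` type, the `may_grant_access` function and the issuer name are all hypothetical; the proposal does not prescribe any data format or verification protocol.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Licence:
    """Hypothetical AI driver's licence record presented by a user."""
    holder_id: str  # identity of the user, verified against a valid ID
    issuer: str     # independent organisation that issued the licence


def may_grant_access(user_id: str, licence: Optional[Licence],
                     accredited_issuers: set[str]) -> bool:
    """Deployer-side check before serving an advanced AI system to a user."""
    if licence is None:
        return False  # no licence presented: access must be refused
    if licence.holder_id != user_id:
        return False  # licence belongs to someone else
    # Only licences from issuers accredited by the enforcing authority count.
    return licence.issuer in accredited_issuers


accredited = {"ExampleLicensingOrg"}  # hypothetical accredited issuer
alice = Licence(holder_id="alice", issuer="ExampleLicensingOrg")
print(may_grant_access("alice", alice, accredited))  # True
print(may_grant_access("bob", alice, accredited))    # False
print(may_grant_access("carol", None, accredited))   # False
```

Checking the issuer against an accredited list reflects the split of roles we favour below: independent organisations issue licences, while a governmental authority audits which issuers may do so.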

In the following, we analyse different options for designing the regulations around the policy and state which options seem most promising to us. The right-most column in the table above summarises our preferences.

Issuing, Regulating and Enforcing Authority

Central to this regulatory approach is defining which authorities have power over issuing, regulating and enforcing the licences.

Regulating Authority

The regulating authority is responsible for defining the requirements for obtaining the AI driver’s licence. In the example of Huggingface, the company itself defines the contents of its Terms of Service. Governmental agencies determine the requirements for licences such as the driver’s licence in the UK or the firearm licence in Switzerland. For the AI driver’s licence, some organisation needs to be appointed to define the requirements for obtaining the licence and keep them updated. If this is done by a governmental body, that body would either need to be created or integrated into an existing body with similar tasks, and the corresponding task force would have to consult domain experts. If many governments decide to implement a licencing scheme and assess the requirements independently, this would lead to a lot of redundant work and possibly very heterogeneous results. An alternative that would mitigate this problem is to have an international organisation define the requirements centrally. This would save costs for individual governments and help keep the requirements up to date. It would also produce a more homogeneous set of requirements, making them easier to follow for both users and deployers of advanced AI models. Current examples of such organisations are ISO, the trusted organisation behind many international standards, and the USB Implementers Forum, which defined the standards for USB-C connectors that the EU then required for a common charger for mobile devices. In any case, whether a governmental or non-governmental organisation defines the requirements, a governmental body needs to implement them into law and set the penalties for breaking the rules.

Issuing Authority

The issuing authority distributes licences and has to make sure that users comply with the requirements set by the regulating authority. For Terms of Service, this is the company itself. Once again, governmental bodies issue driver’s licences in the UK and firearm licences in Switzerland. Having governmental bodies distribute licences is more expensive to run and potentially slow, but it guarantees higher compliance. On the other hand, if independent organisations distribute licences, the process could open up a new business sector with quick adoption rates; not all governments are known to be proficient at navigating the digital world. If independent organisations are allowed to issue licences, the most convenient and cheapest licences will likely be the most successful. A simple online course and examination, as in the CITI example, seems promising.

Enforcing Authority

Having discussed the regulating authority, which defines licence requirements, and the issuing authority, which distributes licences, we turn to the enforcing authority, which complements the two by making sure that all rules are adhered to. As with the previous authorities, the enforcing authority could be governmental or non-governmental. A governmental inspectorate could either sporadically check deployers of advanced AI systems (and issuing organisations, if the issuing authority is not a governmental body) or require regular reports. Alternatively, deployers (and issuing organisations) could be required to undergo audits by non-governmental institutions, as is common in the financial industry.

💡 Ideally, we would like to see independent organisations issuing licences to users of AI systems. An international organisation would formalise the requirements a user must meet to be granted a licence, while governmental institutions audit whether the issuing organisations adhere to the requirements and regulations of the international committee. The governmental institutions would also investigate whether deployers of advanced AI systems follow the regulations.

Costs, Time to Acquire, Validity and Background Checks

To keep the overhead introduced by an AI driver’s licence low while remaining effective, the costs, validity and length of the course need to be considered.

The costs should be kept low so that the licence is obtainable regardless of income. If the AI driver’s licence teaching content is provided online, the costs would mainly be administrative. Ideally, the courses would be sponsored, as the CITI courses are. To reduce competitive pressures, a government implementing the AI driver’s licence could initially cover the cost of obtaining licences. This would further lower the barrier for users to obtain the licence and reduce the incentive to avoid it and use illegal methods instead.

What about the time to acquire the licence? The time it takes to study the course content and take the exam should be sufficient to gain the knowledge needed to use advanced AI systems ethically and safely. We roughly estimate this at five to twelve hours in total, depending on the student’s prior knowledge and learning speed. However, the actual syllabus, which in turn determines the length of the course, still needs more careful definition. If the course is provided online and proof of identity can also be verified online, the licence could be obtained immediately after passing the exam.

A driver’s licence and acceptance of the Terms of Service are both permanent, but not all licences are. The Swiss firearm licence needs to be renewed every five years, as does the CITI certificate on ethical research. Whether this is due to economic interests or to updates in the syllabus remains unknown. We suggest that the AI driver’s licence be permanent, as there is no specific need to renew it frequently. One objection to permanent validity is that new AI models and their capabilities may require a reassessment of the licence requirements. In that case, given the tremendous speed of development in the AI landscape, it might be wise to cap the validity.
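The validity options discussed above (permanent by default, invalidated when new models force a reassessment, optionally capped) can be combined into a single rule. This is a sketch under our own assumptions: the regulating authority publishes the date of its latest syllabus revision that requires retraining, plus an optional cap in years. The function names and parameters are hypothetical.

```python
from datetime import date
from typing import Optional


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years, mapping 29 February to 28 February if needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:  # 29 February in a non-leap target year
        return d.replace(year=d.year + years, day=28)


def licence_is_valid(issued: date, today: date,
                     last_reassessment: Optional[date] = None,
                     cap_years: Optional[int] = None) -> bool:
    """Permanent by default; invalid if issued before a mandatory reassessment
    or, when a cap is set, once cap_years have passed since issue."""
    if last_reassessment is not None and issued < last_reassessment:
        return False  # requirements were reassessed for new models: retrain
    if cap_years is not None and today >= add_years(issued, cap_years):
        return False  # capped validity has expired
    return True


issued = date(2024, 7, 21)
print(licence_is_valid(issued, date(2034, 1, 1)))               # True
print(licence_is_valid(issued, date(2030, 1, 1), cap_years=5))  # False
print(licence_is_valid(issued, date(2026, 1, 1),
                       last_reassessment=date(2025, 6, 1)))     # False
```

With both optional parameters left unset, this reproduces the permanent validity we prefer; the cap and the reassessment date are the two levers a regulator could pull if development speed demands it.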

Among the previous examples, background checks are only required for individuals obtaining a firearm licence. This is a reasonable requirement, as the government wants to make sure that an individual poses a low threat before granting access to lethal technology. In the US, background checks appear to be effective and are correlated with a 15% drop in homicide rates. Similar arguments can be made for the AI driver’s licence. However, background checks would make this licence a much greater obstacle, as costs, time to acquire, time to implement and public acceptance of the regulation would all be less favourable. Hence, in the current AI landscape, we would prefer to avoid background checks in favour of a faster implementation of the policy.

Single licence vs. Multi-Class licence

Introducing an AI driver’s licence can be done in two ways: 1) a single licence that allows users to interact with any advanced AI system, or 2) a hierarchy of classes, each of which allows users to access AI models at a corresponding level.

Driver’s licences and firearm licences both have different classes for different vehicle and weapon types, respectively. Different vehicle and weapon types have different manuals and can require different training. Does this also extend to AI models?

Over the past few years, a multitude of different types of AI models have been developed, and not all of them keep mainstream attention for long. Currently, generative model types such as Transformers for natural language processing and diffusion models for image generation, as well as Vision Transformers for computer vision, are among the most notable. The AI landscape is moving swiftly, and any regulation similar to a ‘licence for trucks’ might not hold for long. The risk-based approach of the EU AI Act seems better suited to covering all relevant models.

Studies on gun policies come to a similar conclusion. Siegel et al. concluded that the

“[…] most effective gun-control measures are those that regulate who has legal access to guns as opposed to what kinds of guns they have access to”.

For the sake of simplicity and faster adoption rates we propose a single licence scheme rather than a multi-class licence scheme.

Policy implications: Integrating user licencing into existing frameworks

We need to reflect, at a high level (the details quickly get complicated), on how our proposal would affect existing related policies.

The EU’s AI Act of 2024 places most of the responsibility on the developers of AI systems. Users of these systems can of course be held liable for GDPR violations and for harm they cause, but the AI Act does not specifically tackle scenarios of intentional malicious use by bad actors.

Chinese regulations have several restrictions on users and developers, including:

- AI-generated content must align with the Chinese Communist Party ideology (adherence to core socialist values),

- anything harmful, hateful, containing misinformation or that may undermine national security is prohibited (content restrictions).

In contrast to the EU AI Act the Chinese regulation also addresses users by requiring them to

- remain within the bounds of the intended uses, or face penalties (purpose limitations),

- register with their real names in order to access AI systems (real-name registration).

In both cases, introducing an AI driver’s licence requirement for access to advanced AI models would not contradict existing regulations but rather complement them. Under the EU AI Act, developers and deployers have to submit reports on their models so that the risk category they fall into can be assessed. When deploying a model, deployers could add their strategy for meeting the AI driver’s licence requirements to this report, which would add an acceptable amount of work to complying with EU regulations. Under Chinese regulations, deployers are already required to verify users’ real names, so also checking for an AI driver’s licence would be a minor addition.

We believe that we should be working towards a regulatory ecosystem that dynamically addresses the evolving risks that AI technology poses. Others have already referenced the Swiss cheese model as a metaphor for effectively mitigating different risks through the various regulatory measures we put in place, at all stages of AI’s lifecycle.

Pros and Cons

In the table below, we have summarised some of the most relevant consequences to consider and whether we think they would be positive or negative outcomes, should this licence be implemented.

| Pros (+) | Cons (-) |
|---|---|
| Increased safety and risk reduction, as a result of intentional and educated usage | Administrative and financial strain on educational institutions wanting to provide licences for research |
| More public awareness of AI safety concerns | Pushback from companies if they predict lower profits |
| Introduces no major conflicts with existing legislation | Individual access limitation, if licence cost is significant |
| Could create a registry of those with access to advanced AI, helpful for investigations following non-compliance | If regulations are too expensive or cumbersome to implement, industry could shift to the black market or operate in unregulated countries (competitive pressures) |
| Training and testing administration is a new business opportunity | Relies heavily on international adoption |
| Increased trust in those working with advanced AI systems | |
| Incentivises the development of lower-risk models that aren’t classified as advanced | |

Conclusion & Limitations

We conclude that introducing AI driver’s licences for advanced AI systems is a feasible and helpful tool for reducing misuse of capable and dangerous AI systems. The AI driver’s licence requires users to obtain a licence before interacting with advanced AI systems. The requirements include studying material on the ethical and safety aspects of using these systems and passing an associated exam. The success of the policy depends on several implementation details.

How quickly the licence can be implemented depends largely on its scope: for example, whether the requirements are defined by a new governmental body or by an international organisation, and whether background checks are required.

We have argued that having an international organisation define the standards and requirements for the AI driver’s licence is preferable to every government devising its own. The licences themselves could be issued by independent organisations. Governments would then only need to ensure that the issuing organisations follow the rules for granting licences, and that deployers of advanced AI models check whether their customers and/or users hold a licence.

Further, regulators will have to watch carefully for competitive pressures, since users could easily switch to AI systems provided abroad and so circumvent the licence requirements. To implement an AI driver’s licence effectively, as with many other AI regulations, international collaboration is essential.

Because the licence regulates the usage stage of an AI model, it effectively forces developers to consider how their use of each component of the AI Triad (data, compute, algorithm) will affect whether their product is classified as an advanced AI model.

There are many details to iron out and policymakers to involve. If the AI driver’s licence were to be implemented, a much deeper feasibility study would be required.

We believe we have shown enough potential in this licencing scheme to warrant a deeper investigation. We invite readers to engage with us through feedback and comments.

Limitations

While it has the potential to reduce harmful misuse of advanced AI systems, this proposal has some limitations. Firstly, it does not address the existential risks posed by advanced AI systems. This is, to some extent, a consequence of the fact that this regulatory approach only affects the deployment and user side: it does not guarantee that AI models behave safely even when users adhere to ethical and safe conduct. However, we do believe the policy proposal can and should be part of a broader regulatory system. Before it could be implemented, policy experts would need to analyse its impact in much more depth, especially whether it could deepen existing monopolies or have adverse effects due to competitive pressures.

The next steps would need to include designing the scope and content of the course users would need to complete. This goes hand in hand with defining which models count as advanced AI models and re-evaluating whether the high-risk category of the EU AI Act is the right scope. Finally, appropriate penalties should be defined for issuing licences falsely or illegally, as well as for AI model deployers who fail to exercise due diligence by not requiring AI driver’s licences from their customers.

Acknowledgements

We would like to thank James Bryant for his mentorship, for taking the time to answer our questions and for providing feedback. James also proved to be a great motivator. Special thanks to our AI Safety Fundamentals cohorts, who provided feedback on our ideas. Another big thank you to Amelia Agranovich, who proofread and edited our draft.

Icons used in this project were designed by Eucalyp and are available on Flaticon.


comment by Jiro · 2024-07-27T09:14:06.666Z

Any time you demand a license for something, that amounts to "if you don't do as demanded, men with guns will come and if you continue to disobey, will kill you or lock you in a cage". That should be enough to oppose this proposal. We've been through this before with cryptography rules, and even those were only about export.

Not to mention that even that assumes that the people handing out the licenses will be good at their job. If you actually implement this, you're not going to get licenses that require expertise; you're going to get licenses that require that you follow whatever arbitrary rule some committee and pressure groups have cooked up. The AI license is far more likely to be used to prevent business competition and to sound good in a political speech than to promote AI safety.

And using an international organization is even worse. The last thing I want is China and Russia getting a vote on what software I'm allowed to have.