Most likely is not likely

post by Malmesbury (Elmer of Malmesbury) · 2022-02-12T17:27:27.120Z · LW · GW · 2 comments

Cross-posted from Telescopic Turnip.

“And I stop at every mirror just to stare at my own posterior.” – Megan Thee Stallion

I love Bayesian statistics just as much as you do. However, there is a trap that people keep falling into when interpreting Bayesian statistics. Here is a quick explainer, just so I can link to it in the future.

You roll a 120-faced die. If it lands on one, you stop. If it gives any other number, you re-roll until you get a one. How many times do you think you will have to roll the die? Let’s do the math: you have a 1/120 chance of rolling the die only once, a 119/120 × 1/120 chance of rolling it exactly twice, and so on. So a single roll is the most likely outcome; any other number of rolls will happen less often. Yet a 1/120 chance is not even 1%. In this case, “most likely” is actually pretty unlikely.
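To make the arithmetic concrete, here is a minimal Python sketch of the die game. The function names (`prob_exactly`, `prob_within`, `median_rolls`) are made up for this illustration; the only input taken from the text is the 1/120 chance of rolling a one.

```python
from fractions import Fraction

# Chance of rolling a one on a single throw of the 120-faced die.
P_ONE = Fraction(1, 120)


def prob_exactly(k):
    """Probability that the game stops on exactly the k-th roll."""
    # k - 1 failures (anything but a one), followed by one success.
    return (1 - P_ONE) ** (k - 1) * P_ONE


def prob_within(k):
    """Probability that the game has stopped by the k-th roll."""
    return 1 - (1 - P_ONE) ** k


def median_rolls():
    """Smallest number of rolls by which at least half of all games are over."""
    k = 1
    while prob_within(k) < Fraction(1, 2):
        k += 1
    return k


# The single most likely outcome (one roll) is still rare.
print("P(exactly 1 roll)  =", float(prob_exactly(1)))
print("P(exactly 2 rolls) =", float(prob_exactly(2)))
print("P(done within 10)  =", float(prob_within(10)))
print("median number of rolls:", median_rolls())
```

The probabilities shrink with every extra roll, so one roll stays the most likely outcome, even though the average game lasts 120 rolls (the mean of a geometric distribution is 1/p).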

While this problem is pretty obvious in a simple die-roll game, it can become very confusing for more complicated questions. One of them, a classic Internet flamewar topic, is the doomsday argument. It’s a calculation of the year humanity is the most likely to go extinct, using Bayesian statistics. Briefly, it goes like this:

- We have no idea when or how humanity can possibly go extinct, so we start with no strong a priori beliefs about that, aside from the fact that it’s in the future. However, we have one observation: we know we are living in the 21st century.

- Bayes’ theorem says that the probability of a hypothesis is proportional to your prior belief in it times the likelihood (that is, the probability of observing what we observe under the hypothesis).

- If humanity goes extinct in 3 billion years, it means you were born at the very, very beginning. If we pick a human life at random, it’s quite unlikely to fall in the 21st century.

- If humanity goes extinct in a few centuries, then you were born somewhere around the middle of the crowd, and living in the 21st century was pretty likely. The prior is roughly the same, but the likelihood is much higher.

The exact parameters can change, but the conclusion is usually that humanity will most likely go extinct within a few millennia.
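The update described above can be sketched in a few lines of Python. This is a toy version under loud assumptions that are not in the argument as stated here: hypotheses are counted in total number of humans `N` rather than in years, the prior is flat over a finite range, the likelihood of holding a given birth rank is `1/N`, and the numbers (`rank=100`, `max_total=100_000`) are deliberately tiny so that it runs instantly.

```python
def doomsday_posterior(rank, max_total):
    """Toy posterior over N, the total number of humans who will ever live.

    Assumptions for illustration only:
      - flat prior over N in rank..max_total (N < rank is impossible,
        since we already observed someone with birth rank `rank`);
      - likelihood of holding birth rank `rank` given N is 1/N.
    """
    totals = range(rank, max_total + 1)
    # Prior is constant, so the unnormalized posterior is just the likelihood.
    weights = [1.0 / n for n in totals]
    norm = sum(weights)
    return {n: w / norm for n, w in zip(totals, weights)}


posterior = doomsday_posterior(rank=100, max_total=100_000)
mode = max(posterior, key=posterior.get)

print("most likely total:", mode)
print("absolute probability of that total:", posterior[mode])
```

The most likely hypothesis is the earliest possible extinction, because that is where the likelihood is highest. Its absolute probability is still well below 1%, which is the same pattern as the die game.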

And that’s where the flamewars begin: how can you know that such a huge disaster will happen in the next few millennia? I think the problem with this reaction is that it conflates “most likely” with “likely”, just like the example above with the die. The calculation says that the year 9000 AD is the most likely date for human extinction, but the actual, absolute probability of it happening in that particular year is still pretty damn low. Think of it like this:

- What’s more likely: that humanity goes extinct in the year 3000 AD, or next year?

- What’s more likely: that humanity goes extinct in the year 3000 AD, or in the year 666,666,666 AD exactly? What about 666,666,667 AD?

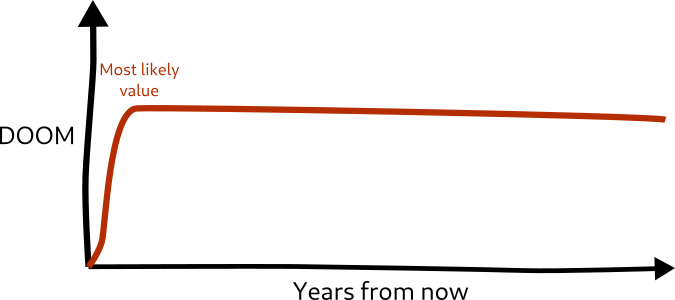

Repeat the same question for every year from today to the infinite. Without even doing the Bayesian dance, we can already expect the distribution to look something like this:

Keep in mind that we are talking about a probability distribution, so it must sum to one. Therefore, it cannot possibly be constant from now to infinity. Unless it’s discontinuous, it has to reach a maximum somewhere and then decrease. The question is only “where is this maximum”. I think, put this way, it’s pretty obvious and intuitive that the most likely date for human extinction is not-so-far-from-now. (It’s also worth pointing out that, under the Doomsday argument’s assumptions, the expected value of this distribution is infinite.) I hope this will permanently solve the flamewars forever.
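A quick numerical check of the parenthetical remark, as a sketch only. It assumes the posterior falls off like `rank / N**2`, which is the shape produced by one common formulation of the argument (a vague `1/N` prior combined with a `1/N` likelihood); the post itself does not spell out a formula, and `partial_sums` and the toy `rank=10` are hypothetical.

```python
def partial_sums(rank, cutoff):
    """Sum the assumed weights rank / N**2 for N in rank..cutoff.

    Returns (mass, mean_part):
      mass      - total weight up to the cutoff;
      mean_part - the same weights multiplied by N, i.e. the contribution
                  to the expected value up to the cutoff.
    """
    mass = 0.0
    mean_part = 0.0
    for n in range(rank, cutoff + 1):
        weight = rank / n**2  # assumed posterior shape, left unnormalized
        mass += weight
        mean_part += n * weight
    return mass, mean_part


for cutoff in (10**2, 10**3, 10**4, 10**5):
    mass, mean_part = partial_sums(10, cutoff)
    print(f"cutoff={cutoff:>6}  mass={mass:.4f}  mean contribution={mean_part:.2f}")
```

The total mass settles quickly, so the weights can be normalized into a proper distribution with a clear maximum. The contribution to the mean keeps growing by roughly the same amount with every tenfold increase of the cutoff, which is what an infinite expected value looks like numerically.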

Unfortunately, this pattern is widespread in academic papers and Internet discussions: someone does some statistics, pulls out a most likely hypothesis, but doesn’t pay much attention to how likely this hypothesis is in absolute terms. When examining such statistical reasoning, it is often worth looking under the hood to see how much uncertainty is hidden around the most likely explanation.

2 comments

Comments sorted by top scores.

comment by Quintin Pope (quintin-pope) · 2022-02-12T22:08:19.178Z · LW(p) · GW(p)

I think the true reference class relevant for the doomsday argument is “humans who think about the doomsday argument”, rather than “humans in general”. Thus, one way in which the doomsday argument might not apply is if future generations stopped thinking about the doomsday argument.

As an illustration, imagine that humanity was replaced by p-zombies/automata without any internal thoughts at all. That seems like it would satisfy the doomsday argument’s prediction. Thus, removing only the internal thoughts related to the doomsday argument should do so as well.

I’d therefore like to suggest the doomsday argument as a possible infohazard, which we should try to avoid discussing when possible. (To be clear, I don’t blame you at all for bringing it up, and I agree with your main point that conflating between “most” vs “very” likely is harmful and happens often. I just think that mentioning the doomsday argument is a bad idea.)

Replies from: meedstrom