Superintelligence 17: Multipolar scenarios

post by KatjaGrace · 2015-01-06T06:44:45.533Z · LW · GW · Legacy · 38 comments

This is part of a weekly reading group on Nick Bostrom's book, Superintelligence. For more information about the group, and an index of posts so far see the announcement post. For the schedule of future topics, see MIRI's reading guide.

Welcome. This week we discuss the seventeenth section in the reading guide: Multipolar scenarios. This corresponds to the first part of Chapter 11.

Apologies for putting this up late. I am traveling, and collecting together the right combination of electricity, wifi, time, space, and permission from an air hostess to take out my computer was more complicated than the usual process.

This post summarizes the section, and offers a few relevant notes, and ideas for further investigation. Some of my own thoughts and questions for discussion are in the comments.

There is no need to proceed in order through this post, or to look at everything. Feel free to jump straight to the discussion. Where applicable and I remember, page numbers indicate the rough part of the chapter that is most related (not necessarily that the chapter is being cited for the specific claim).

Reading: “Of horses and men” from Chapter 11

Summary

- 'Multipolar scenario': a situation where no single agent takes over the world

- A multipolar scenario may arise naturally, or intentionally for reasons of safety. (p159)

- Knowing what would happen in a multipolar scenario involves analyzing an extra kind of information beyond that needed for analyzing singleton scenarios: that about how agents interact (p159)

- In a world characterized by cheap human substitutes, rapidly introduced, in the presence of low regulation, and strong protection of property rights, here are some things that will likely happen: (p160)

- Human labor will earn wages at around the price of the substitutes - perhaps below subsistence level for a human. Note that machines have been complements to human labor for some time, raising wages. One should still expect them to become substitutes at some point and reverse this trend. (p160-61)

- Capital (including AI) will earn all of the income, which will be a lot. Humans who own capital will become very wealthy. Humans who do not own capital may be helped with a small fraction of others' wealth, through charity or redistribution. (p161-3)

- If the humans, brain emulations or other AIs receive resources from a common pool when they are born or created, the population will likely increase until it is constrained by resources. This is because of selection for entities that tend to reproduce more. (p163-6) This will happen anyway eventually, but AI would make it faster, because reproduction is so much faster for programs than for humans. This outcome can be avoided by offspring receiving resources from their parents' purses.
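As a rough illustration of the common-pool point above, here is a toy sketch. It is not a model from the book: the function name, the fixed resource pool, and the doubling rule are all assumptions chosen only to show per-capita income falling to subsistence when new entities draw on a shared pool.

```python
def simulate_common_pool(resources, subsistence, growth_rate, population, steps):
    """Toy model (hypothetical): per-capita income when everyone shares one pool."""
    history = []
    for _ in range(steps):
        income = resources / population
        history.append(income)
        if income > subsistence:
            # Entities with a surplus reproduce; their copies draw on the same pool.
            # Population cannot exceed what the pool supports at subsistence.
            population = min(population * (1 + growth_rate), resources / subsistence)
    return history


incomes = simulate_common_pool(
    resources=1000.0, subsistence=1.0, growth_rate=1.0, population=1.0, steps=15
)
print(incomes[0], incomes[-1])  # starts at 1000.0 per head, ends at 1.0 (subsistence)
```

With fast reproduction (here, doubling each step) the pool is exhausted in about ten steps; a slower `growth_rate` only delays the same endpoint in this sketch.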

Another view

Tyler Cowen expresses a different view (video, some transcript):

The other point I would make is I think smart machines will always be complements and not substitutes, but it will change who they’re complementing. So I was very struck by this woman who was a doctor sitting here a moment ago, and I fully believe that her role will not be replaced by machines. But her role didn’t sound to me like a doctor. It sounded to me like therapist, friend, persuader, motivational coach, placebo effect, all of which are great things. So the more you have these wealthy patients out there, the patients are in essence the people who work with the smart machines and augment their power, those people will be extremely wealthy. Those people will employ in many ways what you might call personal servants. And because those people are so wealthy, those personal servants will also earn a fair amount.

So the gains from trade are always there, there’s still a law of comparative advantage. I think people who are very good at working with the machines will earn much much more. And the others of us will need to find different kinds of jobs. But again if total output goes up, there’s always an optimistic scenario.

Though perhaps his view isn't as different as it sounds.

Notes

1. The small space devoted to multipolar outcomes in Superintelligence probably doesn't reflect a broader consensus that a singleton is more likely or more important. Robin Hanson is perhaps the loudest proponent of the 'multipolar outcomes are more likely' position, e.g. in The Foom Debate and more briefly here. This week is going to be fairly Robin Hanson themed, in fact.

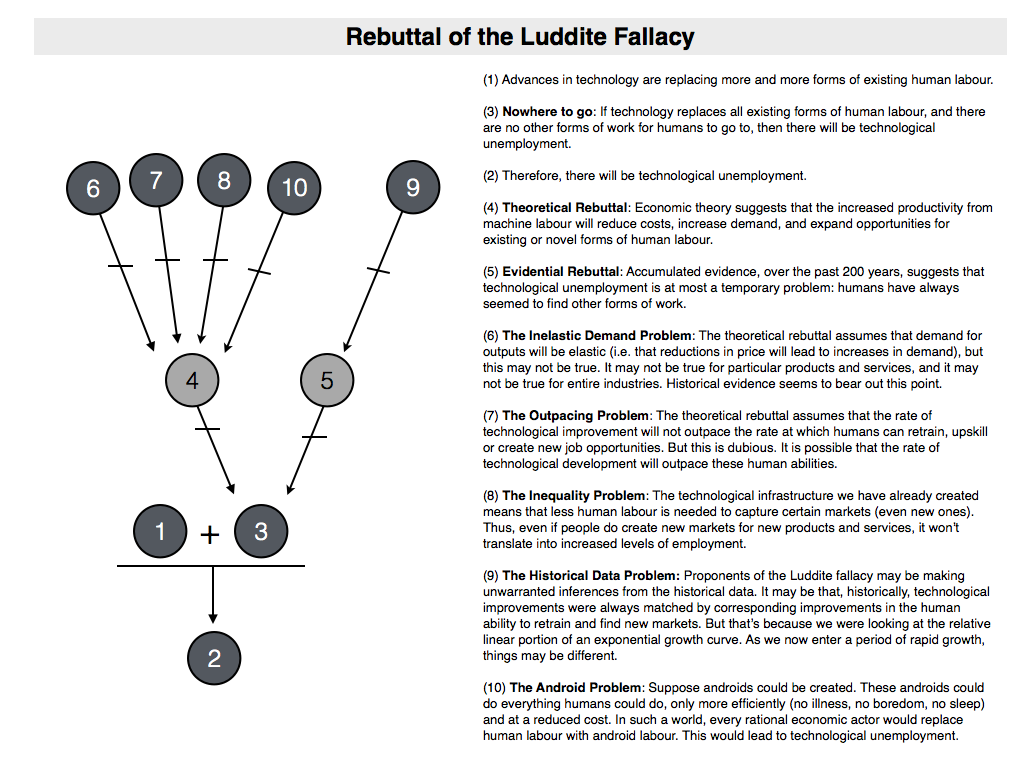

2. Automation can both increase the value produced by a human worker (complementing human labor) and replace the human worker altogether (substituting human labor). Over the long term, it seems complementarity has been the overall effect. However, by the time a machine can do everything a human can do, it is hard to imagine a human earning more than a machine needs to run, i.e. less than they do now. Thus at some point substitution must take over. Some think recent unemployment is due in large part to automation. Some think this time is the beginning of the end, and the jobs will never return to humans. Others disagree, and are making bets. Eliezer Yudkowsky and John Danaher clarify some arguments. Danaher adds a nice diagram:

3. Various policies have been proposed to resolve poverty from widespread permanent technological unemployment. Here is a list, though it seems to miss a straightforward one: investing ahead of time in the capital that will become profitable instead of one's own labor, or having policies that encourage such diversification. Not everyone has resources to invest in capital, but it might still help many people. Mentioned here and here:

And then there are more extreme measures. Everyone is born with an endowment of labor; why not also an endowment of capital? What if, when each citizen turns 18, the government bought him or her a diversified portfolio of equity? Of course, some people would want to sell it immediately, cash out, and party, but this could be prevented with some fairly light paternalism, like temporary "lock-up" provisions. This portfolio of capital ownership would act as an insurance policy for each human worker; if technological improvements reduced the value of that person's labor, he or she would reap compensating benefits through increased dividends and capital gains. This would essentially be like the kind of socialist land reforms proposed in highly unequal Latin American countries, only redistributing stock instead of land.

4. Even if the income implications of total unemployment are sorted out, some are concerned about the psychological and social consequences. According to Voltaire, 'work saves us from three great evils: boredom, vice and need'. Sometimes people argue that even if our work is economically worthless, we should toil away for our own good, lest the vice and boredom overcome us.

I find this unlikely, given for instance the ubiquity of more fun and satisfying things to do than most jobs. And while obsolescence and the resulting loss of purpose may be psychologically harmful, I doubt a purposeless job solves that. Also, people already have a variety of satisfying purposes in life other than earning a living. Note also that people in situations like college and lives of luxury seem to do ok on average. I'd guess that unemployed people and some retirees do less well, but this seems more plausibly from losing a previously significant source of purpose and respect, rather than from lack of entertainment and constraint. And in a world where nobody gets respect from bringing home dollars, and other purposes are common, I doubt either of these costs will persist. But this is all speculation.

On a side note, the kinds of vices that are usually associated with not working tend to be vices of parasitic unproductivity, such as laziness, profligacy, and tendency toward weeklong video game stints. In a world where human labor is worthless, these heuristics for what is virtuous or not might be outdated.

Nils Nilsson discusses this issue more, along with the problem of humans not earning anything.

5. What happens when selection for expansive tendencies goes to space? This.

6. A kind of robot that may change some job markets:

(picture by Steve Jurvetson)

In-depth investigations

If you are particularly interested in these topics, and want to do further research, these are a few plausible directions, some inspired by Luke Muehlhauser's list, which contains many suggestions related to parts of Superintelligence. These projects could be attempted at various levels of depth.

- How likely is one superintelligence, versus many intelligences? What empirical data bears on this question? Bostrom briefly investigated characteristic time lags between large projects for instance, on p80-81.

- Are whole brain emulations likely to come first? This might be best approached by estimating timelines for different technologies (each an ambitious project) and comparing them, or there may be ways to factor out some considerations.

- What are the long term trends in automation replacing workers?

- What else can we know about the effects of automation on employment? (this seems to have a fair literature)

- What levels of population growth would be best in the long run, given machine intelligences? (this sounds like an ethics question, but one could also assume some kind of normal human values and investigate the empirical considerations that would make situations better or worse in their details.)

- Are there good ways to avoid Malthusian outcomes in the kind of scenario discussed in this section, if 'as much as possible' is not the answer to the previous question?

- What policies might help a society deal with permanent, almost complete unemployment caused by AI progress?

How to proceed

This has been a collection of notes on the chapter. The most important part of the reading group though is discussion, which is in the comments section. I pose some questions for you there, and I invite you to add your own. Please remember that this group contains a variety of levels of expertise: if a line of discussion seems too basic or too incomprehensible, look around for one that suits you better!

Next week, we will talk about 'life in an algorithmic economy'. To prepare, read the section of that name in Chapter 11. The discussion will go live at 6pm Pacific time next Monday January 12. Sign up to be notified here.

38 comments

Comments sorted by top scores.

comment by KatjaGrace · 2015-01-06T06:50:03.007Z · LW(p) · GW(p)

Was the analogy to horses good?

Replies from: Alex123, Sebastian_Hagen

↑ comment by Alex123 · 2015-01-07T04:07:59.962Z · LW(p) · GW(p)

It's really good. People are a superintelligence relative to horses, and they (horses) lost 95% of jobs. With SI relative to people, people will lose no smaller a percentage of jobs. We have to take it as something provably coming. It will be a painful but necessary change. So many people spend their lives on such simple jobs (like cleaning, selling etc).

↑ comment by Sebastian_Hagen · 2015-01-08T01:28:36.372Z · LW(p) · GW(p)

I like it. It does a good job of providing a counter-argument to the common position among economists that the past trend of technological progress leading to steadily higher productivity and demand for humans will continue indefinitely. We don't have a lot of similar trends in our history to look at, but the horse example certainly suggests that these kinds of relationships can and do break down.

comment by KatjaGrace · 2015-01-06T06:46:57.262Z · LW(p) · GW(p)

Do you think a multipolar outcome is more or less likely than a singleton scenario?

Replies from: Alex123

↑ comment by Alex123 · 2015-01-06T20:47:46.371Z · LW(p) · GW(p)

Unless somebody specifically pushes for a multipolar scenario, it's unlikely to arise spontaneously. With our military-oriented psychology, any SI will be first considered for military purposes, including prevention of SI achievement by others. However, a smart group of people or organizations might purposefully multiply instances of near-ready SI in order to create competition, which can increase our chances of survival. Creating a social structure of SIs might make them socially aware and tolerant, which might include tolerance of people.

Replies from: Sebastian_Hagen, KatjaGrace

↑ comment by Sebastian_Hagen · 2015-01-08T01:23:40.312Z · LW(p) · GW(p)

Note that multipolar scenarios can arise well before we have the capability to implement a SI.

The standard Hansonian scenario starts with human-level "ems" (emulations). If from-scratch AI development turns out to be difficult, we may develop partial-uploading technology first, and a highly multipolar em scenario would be likely at that point. Of course, AI research would still be on the table in such a scenario, so it wouldn't necessarily be multipolar for very long.

↑ comment by KatjaGrace · 2015-01-09T02:48:41.073Z · LW(p) · GW(p)

Why would military purposes preclude multiple parties having artificial intelligence? It seems you are assuming that if anyone achieves superintelligent machines, they will have a decisive enough advantage to prevent anyone else from having the technology. But if they are achieved incrementally, that need not be so.

comment by KatjaGrace · 2015-01-06T06:46:35.023Z · LW(p) · GW(p)

Are you convinced that absent a singleton or some other powerful forces, human wages will go below subsistence in the long run? (p160-161)

Replies from: Larks, Baughn, timeholmes

↑ comment by Baughn · 2015-01-07T11:09:51.135Z · LW(p) · GW(p)

In the limit as time goes to infinity?

Yes. The evolutionary arguments seem clear enough. That isn't very interesting, though; how soon is it going to happen?

I'm inclined to think "relatively quickly", but I have little evidence for that, either way.

Replies from: Sebastian_Hagen, Capla

↑ comment by Sebastian_Hagen · 2015-01-08T01:19:31.533Z · LW(p) · GW(p)

Yes. The evolutionary arguments seem clear enough. That isn't very interesting, though; how soon is it going to happen?

The only reason it might not be interesting is because it's clear; the limit case is certainly more important than the timeline.

That said, I mostly agree. The only reasonably likely third (not-singleton, not-human-wages-through-the-floor) outcome I see would be a destruction of our economy by a non-singleton existential catastrophe; for instance, the human species could kill itself off through an engineered plague, which would also avoid this scenario.

Replies from: diegocaleiro

↑ comment by diegocaleiro · 2015-02-10T23:28:50.039Z · LW(p) · GW(p)

Not necessarily: there may not be enough economic stability to avoid constant stealing, which would redistribute resources in dynamical ways. The limit case could never be reached if forces are sufficiently dynamic, that is, if the "temperature" is high enough.

↑ comment by Capla · 2015-01-10T17:12:04.382Z · LW(p) · GW(p)

Why? If humans are spreading out through the universe faster than the population is growing, then everyone can stay just ahead of the Malthusian trap.

Replies from: Baughn

↑ comment by Baughn · 2015-01-10T17:54:08.903Z · LW(p) · GW(p)

That's not a realistic outcome. The accessible volume grows as t^3, at most, while population can grow exponentially with a fairly short doubling period. An exponential will always outrun a polynomial.

I could mention other reasons, but this one will do.
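To make the exponential-versus-polynomial comparison above concrete, here is a minimal sketch. The units and the helper name are assumptions for illustration: time is counted in population doubling periods, the population starts at one unit, and the accessible volume supports `capacity_coefficient * t**3` units.

```python
def last_time_capacity_suffices(capacity_coefficient, horizon=1000):
    """Last doubling period t (1..horizon) at which cubic capacity still covers
    an exponentially growing population. Hypothetical helper for illustration."""
    last = None
    for t in range(1, horizon + 1):
        population = 2 ** t                          # doubles every period
        capacity = capacity_coefficient * t ** 3     # volume grows as t^3
        if capacity >= population:
            last = t
    return last


print(last_time_capacity_suffices(1))        # 9
print(last_time_capacity_suffices(10 ** 6))  # 35
```

In this sketch, making the accessible volume a million times more generous buys only 26 more doubling periods before the population outruns it.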

↑ comment by timeholmes · 2015-01-09T01:26:19.299Z · LW(p) · GW(p)

We too readily extrapolate our past into our future. Bostrom talks a lot about the vast wealth AI will bring, turning even the poor into trillionaires. But he doesn't connect this with the natural world, which, however much it once seemed to, does not expand no matter how much money is made. Wealth only comes from two sources: nature and human creativity. Wealth will do little to squeeze more resources out of a limited planet. Even so, maybe you bring home an asteroid of pure diamond. Wealth is not the same as life well-lived! Looks to me like without a rapid social maturation the wealthy will employ a few peasants at slave wages (yes, trillionaires perhaps, but in a world where a cup of clean water costs a million), snap up most of the resources, and the rest of humanity will be rendered for glue. The quality of our future will be a direct reflection of our moral maturity and sophistication.

comment by KatjaGrace · 2015-01-06T06:49:14.144Z · LW(p) · GW(p)

Do you think we should do anything ahead of time to prepare for these pressures toward very low wages or very high populations?

comment by KatjaGrace · 2015-01-06T06:47:26.167Z · LW(p) · GW(p)

Do you think there will be times of Malthusian destitution in the future? (p163-6)

Replies from: diegocaleiro

↑ comment by diegocaleiro · 2015-02-10T23:26:23.306Z · LW(p) · GW(p)

Many wild populations of non-island animals already have been through long selective pressures to the point of Malthusian conditions being reached by the worse off members of a population. Human societies, even the most primitive ones, live in cultures of perceived abundance, where much less than what we consider minimal is seen as enough to spend 6 hours a day chatting, gossiping, waging war or courting.

Human preferences seem to be logarithmic in the amount of resources for many humans. I don't think I'd worry much about not owning the Moon once you gave me the Earth. One could envision a world where abundance far surpasses the needs of all after redistribution. People like Thiel seem to defend this as highly likely.

But then time keeps running, and whoever is still multiplying will take more resources, as will whoever owns a lot and spends little.

comment by Larks · 2015-01-08T04:42:03.995Z · LW(p) · GW(p)

Bostrom argues that much of human art, etc. is actually just signalling wealth, and could be eventually replaced with auditing. But that seems possible at the moment - why don't men trying to attract women just show off the Ernst & Young app on their phone, which would vouch for their wealth, fitness, social skills etc.?

Replies from: KatjaGrace, timeholmes, skeptical_lurker

↑ comment by KatjaGrace · 2015-01-09T02:58:08.212Z · LW(p) · GW(p)

Good question. One answer sometimes given to why men don't woo women with their bank statement or the like is that it is just hard to move from one equilibrium to another, because being the first man to try to woo a woman with his bank statement looks weird, and looking weird sends a bad signal.

Another thing to notice - which I'm not sure we have a good explanation for - is that people often do what I think is sometimes called 'multimodal signaling' - they signal the same thing in lots of different ways. For instance, instead of spending all their money on the most expensive car they can get, they spend some on a moderately expensive suit, and some on a nice house, etc.

Replies from: diegocaleiro

↑ comment by diegocaleiro · 2015-02-10T23:17:43.567Z · LW(p) · GW(p)

The idea that what a man is trying to signal is wealth is false for reasons similar to Homo Economicus being a false analogy. A view a little closer to the truth (but still far from the socioendocrinological awesomeness involved) is here.

For some reason these discussions are always emotionally abhorrent to me. I've been among rich people all my life, so it's not related to being rich or poor. I just find it disgusting and morally outrageous that people even consider saying that what a man has to offer a woman is the extension of his bank account. It's a deflationary account of the beauty of courtship, love, romance and even parenting beyond my wildest dreams. I don't understand to what extent people in internet discussions like this really mean it when they say that what is being signaled could be substituted by auditing, but if that is truly what people mean, it is a sad mindstate to be in, to think that the World works that way.

↑ comment by timeholmes · 2015-01-09T00:30:42.996Z · LW(p) · GW(p)

Glad you mentioned this. I find Bostrom's reduction of art to the practical quite chilling! This sounds like a view of art from the perspective of a machine, or one who cannot feel. In fact it's the first time I've ever heard art described this way. Yes, such an entity (I wouldn't call them a person unless they are perhaps autistic) could only see UTILITY in art. According to my best definition of art [https://sites.google.com/site/relationalart/Home] –refined over a lifetime as a professional artist–art is necessarily anti-utilitarian. Perhaps I can't see "utility" in art because that aspect is so thoroughly dwarfed by art's monumental gifts of wonder, humor, pathos, depth, meaning, transformative alchemy, emotional uplift, spiritual renewal, etc. This entire catalog of wonders would be totally worthless to AI, which would prefer an endless grey jungle of straight lines.

Replies from: gjm, KatjaGrace

↑ comment by gjm · 2015-01-09T13:28:06.317Z · LW(p) · GW(p)

such an entity [...] could only see UTILITY in art

I think you may be interpreting "utility" more narrowly than is customary here. The usual usage here is that "utility" is a catch-all term for everything one values. So if art provides me with wonder and humour and pathos and I value those (which, as it happens, it does and I do) then that's positive utility for me. If art provides other people with wonder and humour and pathos and they like that and I want them to be happy (which, as it happens, it does and they do and I do) then that too is positive utility. If it provides other people with those things and it makes them better people and I care about that (which it does, and maybe it does, and I do) then that too is positive utility.

would be totally worthless to AI

To an AI that doesn't care about those things, yes. To an AI that cares about those things, no. There's no reason why an AI shouldn't care about them. Of course at the moment we don't understand them, or our reactions to them, well enough to make an AI that cares about them. But then, we can't make an AI that recognizes ducks very well either.

↑ comment by KatjaGrace · 2015-01-09T02:58:48.706Z · LW(p) · GW(p)

Why do you suppose an AI would tend to prefer grey straight lines?

Replies from: diegocaleiro

↑ comment by diegocaleiro · 2015-02-10T23:20:46.497Z · LW(p) · GW(p)

An AI that could just play with its own reward circuitry might decide to prefer things it will frequently encounter without effort. Not necessarily grey straight lines, which are absent in my field of vision at the moment, but easygoing, laidback stuff.

↑ comment by skeptical_lurker · 2015-01-13T13:31:57.024Z · LW(p) · GW(p)

Because appreciating art/expensive wine/whatever not only signals money but also culture. Saying "I have lots of money, here's my bank statement" isn't very subtle, and so signals low social skills.

comment by KatjaGrace · 2015-01-06T06:49:38.405Z · LW(p) · GW(p)

What was the most interesting thing in this section?

Replies from: Larks

↑ comment by Larks · 2015-01-08T04:32:08.761Z · LW(p) · GW(p)

Yay, I finally caught up!

I think the section on resource allocation and Malthusian limits was interesting. Many people seem to think that

- people with no capital should be given some

- the repugnant conclusion is bad

Yet by continually 'redistributing' away from groups who restrict their fertility, we actually ensure the latter.

Replies from: KatjaGrace, Larks

↑ comment by KatjaGrace · 2015-01-09T03:02:22.473Z · LW(p) · GW(p)

Interesting framing.

It reminds me of this problem -

Many people feel that:

- everyone should have the right to vote

- almost everyone should have the right to reproduce

- people should not be able to buy elections

Yet when virtual minds can reproduce cheaply and quickly by spending money, it will be hard to maintain all of these.

Replies from: William_S

↑ comment by William_S · 2015-01-10T02:48:40.338Z · LW(p) · GW(p)

I think the way out of this that people would take is to avoid assigning personhood status to virtual minds. There are already considerations that would weigh against virtual minds getting personhood status in the opinions of many people today, and if there are cases like this where assigning personhood creates an obvious and immediate problem, it might tip the balance of motivated cognition.

comment by Capla · 2015-01-10T17:29:12.351Z · LW(p) · GW(p)

If you had the option of having your brain sliced up and emulated today, would you take it? You would be among the first EMs.

On the one hand, "you" might have a decisive strategic advantage to shape the future. You will not have this advantage if you delay. On the other hand, it is unclear what complications the emulation process would present. The emulation might not be conscious or "you" in a meaningful sense. I have a general heuristic against getting my brain sliced up into tiny pieces.

I also want to know, what if you knew with certainty that the Emulation wouldn't be conscious, but just a clockwork mechanism that would act according to your values and thought-habits (at digital speeds and with the other advantages to being a digital mind). Accepting would mean your death, but you'd be replaced with a more effective version of you that can shape the future.

comment by Larks · 2015-01-08T04:36:06.303Z · LW(p) · GW(p)

Bostrom makes an interesting point that multipolar scenarios are likely to be extremely high variance: either very good or (assuming you believe in additivity of value) very bad. Unfortunately it seems unlikely that any oversight could remain in such a scenario that could enable us to exercise or not this option in a utility-maximizing way.

Replies from: KatjaGrace

↑ comment by KatjaGrace · 2015-01-09T03:03:16.294Z · LW(p) · GW(p)

Which 'option' do you mean?

Replies from: Larks

↑ comment by Larks · 2015-01-12T04:44:24.455Z · LW(p) · GW(p)

'option' in the sense of a financial derivative - the right to buy an underlying security for a certain strike price in the future. In this case, it would be the chance to continue humanity if the future was bright, or commit racial-suicide if the future was dim. In general the asymmetrical payoff function means that options become more valuable the more volatile the underlying is. However, it seems that in a bad multipolar future we would not actually be able to (choose not to buy the security because it was below the strike price / choose to destroy the world) so we don't benefit from the option value.
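The claim that an option is worth more when the underlying is more volatile can be checked with a small worked example. This is only a sketch: the two-outcome distributions, the zero strike, and the function name are assumptions made for illustration, not a pricing model.

```python
def expected_value(outcomes, can_walk_away, strike=0.0):
    """Average payoff over equally likely outcomes (hypothetical toy setup).

    With the option, the holder declines whenever the outcome is below the strike.
    Without it, the holder is stuck with the outcome either way.
    """
    payoffs = [
        max(x - strike, 0.0) if can_walk_away else x - strike
        for x in outcomes
    ]
    return sum(payoffs) / len(payoffs)


calm = [-1.0, 1.0]        # low-variance future, mean 0
volatile = [-10.0, 10.0]  # high-variance future, same mean

print(expected_value(calm, can_walk_away=True))       # 0.5
print(expected_value(volatile, can_walk_away=True))   # 5.0
print(expected_value(volatile, can_walk_away=False))  # 0.0
```

The last line corresponds to the worry in the comment: if we cannot actually decline the bad outcome, the extra variance buys nothing.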

comment by Capla · 2015-01-10T17:22:42.112Z · LW(p) · GW(p)

If we achieved a multipolar society of digital superintelligent minds, and the economy balloons overnight, I would expect that all the barriers to space travel would be removed. Could superrich, non-digital humans, simply hop on a star-ship and go to some other corner of the universe to set up culture? We may not be able to compete with digital minds, but do we have to? Is space big enough that biological humans can maintain their own culture and economy in isolation somewhere?

How long before the EM's catch up and we are in contact again? What happens then? Do you think we'd have enough mutual egalitarian instinct that we wouldn't just take what the other guy has (or more likely, the EM's just take what we have)? What would the state of the idea of "human rights" be at this time? Could a supercompetitive economy maintain a prime directive, such that they'd leave us alone?

comment by Capla · 2015-01-10T17:09:09.615Z · LW(p) · GW(p)

What would an EM economy be based on? These days the economy is largely driven by luxury goods. In the past it was largely driven by agricultural production. In a Malthusian, digital world is (almost) everything dedicated to electrical generation? What would the average superintelligent mind do for employment?

There's the perverse irony that as a super competitive economy tends toward perfect efficiency, all the things that the economy exists to serve (the ability to experience pleasure or love, the desire to continue to live, perhaps even consciousness itself) may be edited out, ground away by sheer competitive pressures. Could the universe become a dead and "mindless" set of processes that move energy around with near perfect efficiency, but for no reason?

comment by almostvoid · 2015-01-07T09:18:48.893Z · LW(p) · GW(p)

Automation et al., aka AI scenarios, and populations per se: less is more. We don't need or require 7 billion+. The only reason for this ideology of more people is for capitalism to create more docile consumers for rapacious shareholders, nothing else. Capital should be invested in science of course, space exploration big time, building underwater cities for fun, and getting rid of planet- and city-destroying cars: e.g. Australia. It might be urbanized but really is sub-urbanized: an eco nightmare, disturbia through wrong technology [the car] deciding how people live. This has to stop even if we get down to 1 billion humans [one can dream]. However, if AI really takes off and goes beyond the Event Horizon [falsely known as the singularity, whatever that is], then all ISMS are defunct, be it socialism/capitalism/mercantilism/religionism; hopefully they all vanish as humans don't work anymore on Earth. Let the AI run riot. There is always an off button.