Some lessons from the OpenAI-FrontierMath debacle

post by 7vik (satvik-golechha) · 2025-01-19T21:09:17.990Z · LW · GW · 4 commentsContents

The Events Further Details Other reasons why this is bad Datasets could help capabilities without explicit training Concerns about AI Safety Recent open conversations about the situation Expectations from future work None 4 comments

Recently, OpenAI announced their newest model, o3, achieving massive improvements over state-of-the-art on reasoning and math. The highlight of the announcement was that o3 scored 25% on FrontierMath, a benchmark comprising hard, unseen math problems of which previous models could only solve 2%. The events afterward highlight that the announcements were, unknowingly, not made completely transparent and leave us with lessons for future AI benchmarks, evaluations, and safety.

The Events

These are the important events that happened in chronological order:

- Before Nov 2024, Epoch AI worked on building an ambitious math evaluations benchmark, FrontierMath.

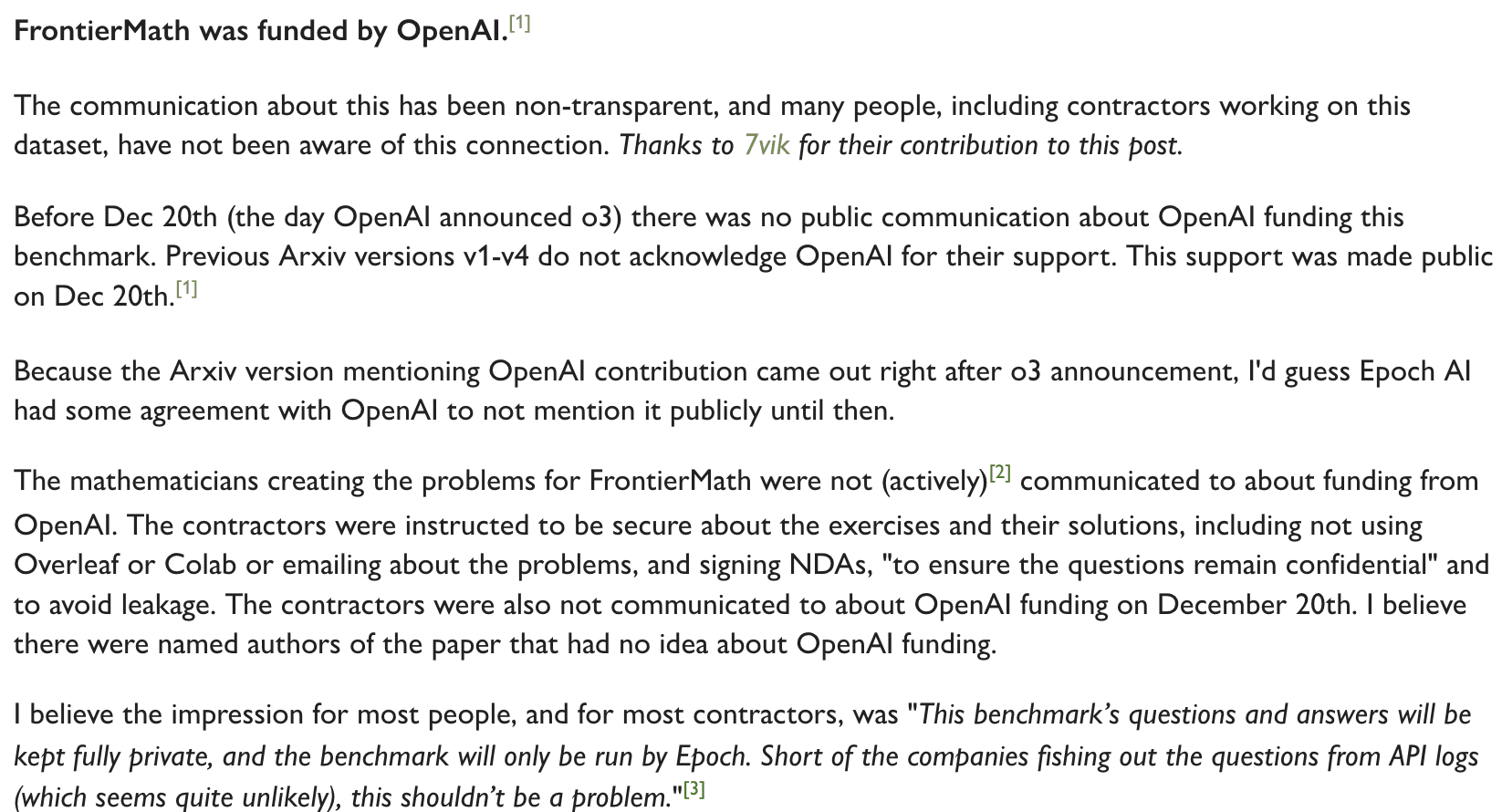

- They paid independent mathematicians something around $300-$1000 to contribute problems to it, without clearly telling them who funded it and who will have access.

- On Nov 7, 2024, they released the first version of their paper on arxiv, where they did not mention anything about the funding. It looks like [LW(p) · GW(p)] their contract with OpenAI did not allow them to share this information before the o3 announcement.

- On Dec 20, OpenAI announced o3, which surpassed all expectations and achieved 25% as compared to the previous best, 2%.

- On the exact same day, Epoch AI updated the paper with a v5. This update tells us that they were completely funded by OpenAI and shared with them exclusive access to most of the hardest problems with solutions:

Further Details

While all of this was felt concerning even during the update in December, recent events have thrown more light on the implicaitions of this.

Other reasons why this is bad

Let's analyse how much of an advantage this access could be, and how I believe it could have possibly been used to help capabilities even without training on them. I was surprised when I found a number of other things about the benchmark during a presentation by someone who worked on FrontierMath (some or most of this information is now publicly available):

Firstly, the benchmark consists of problems on three tiers of difficulty -- (a) 25% olympiad level problems, (b) 50% mid-difficulty problems, and (c) 25% difficult problems that would take an expert mathematician in the domain a few weeks to solve.

What this means, first of all, is that the 25% announcement, which did NOT reveal the distribution of easy/medium/hard problem tiers, was somewhat misleading. It may be possible that most problems o3 solved were from the first tier, which is not as groundbreaking as solving problems from the hardest tier of the benchmark, which it is known most for.

Secondly, OpenAI had complete access to the problems and solutions for most of the problems. This means they could have, in theory, trained their models to solve them. However, they verbally agreed not to do so, and frankly I don't think they would have done that anyway, simply because this is too valuable a dataset to memorize.

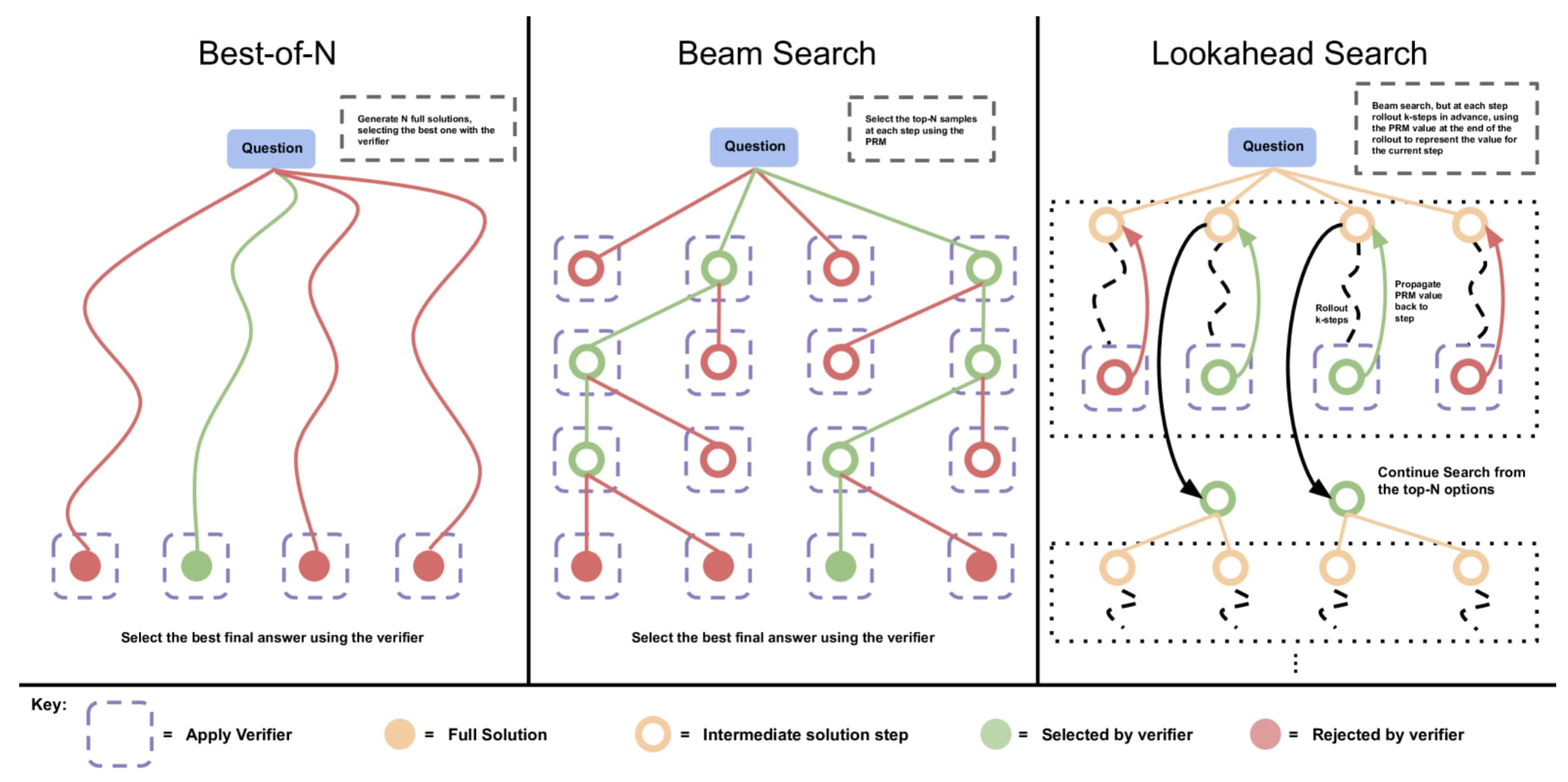

Datasets could help capabilities without explicit training

Now, nobody really knows what goes on behind o3 (this post [LW · GW] has some hypotheses about o1), but if they follow the "thinking" kind of inference-time scaling used by models published by other frontier labs that possibly uses advanced chain-of-thought techniques combined with recursive self-improvement and an MCMC-rollout against a Process Reward Model (PRM) verifier, FrontierMath could be a great task to validate the PRM on. (note that I don't claim it was used this way -- but I just want to emphasize that a verbal agreement of no explicit training is not enough).

A model that does inference-time compute scaling could greatly benefit from a process-verifier reward model for lookahead search on the output space or RL on chain-of-thought, and such benchmarks could be really good-quality data to validate universal, generalizable reasoning verifiers on - a really hard thing to otherwise get right on difficult tasks.

It would be great to know that the benchmark was just used for a single evaluation run and nothing else. That would mean that the benchmark can be considered completely untouched and can be used for fair evaluations comparing other models in the future.

Concerns about AI Safety

Epoch AI works on investigating the trajectory of AI for the benefit of society, and several people involved could be concerned with AI safety. Also, a number of the mathematicians who worked on FrontierMath would possibly have not contributed to this if they knew about the funding and exclusive access. It feels concerning that OpenAI could have inadvertently paid people to contribute to capabilities when they might not have wanted to.

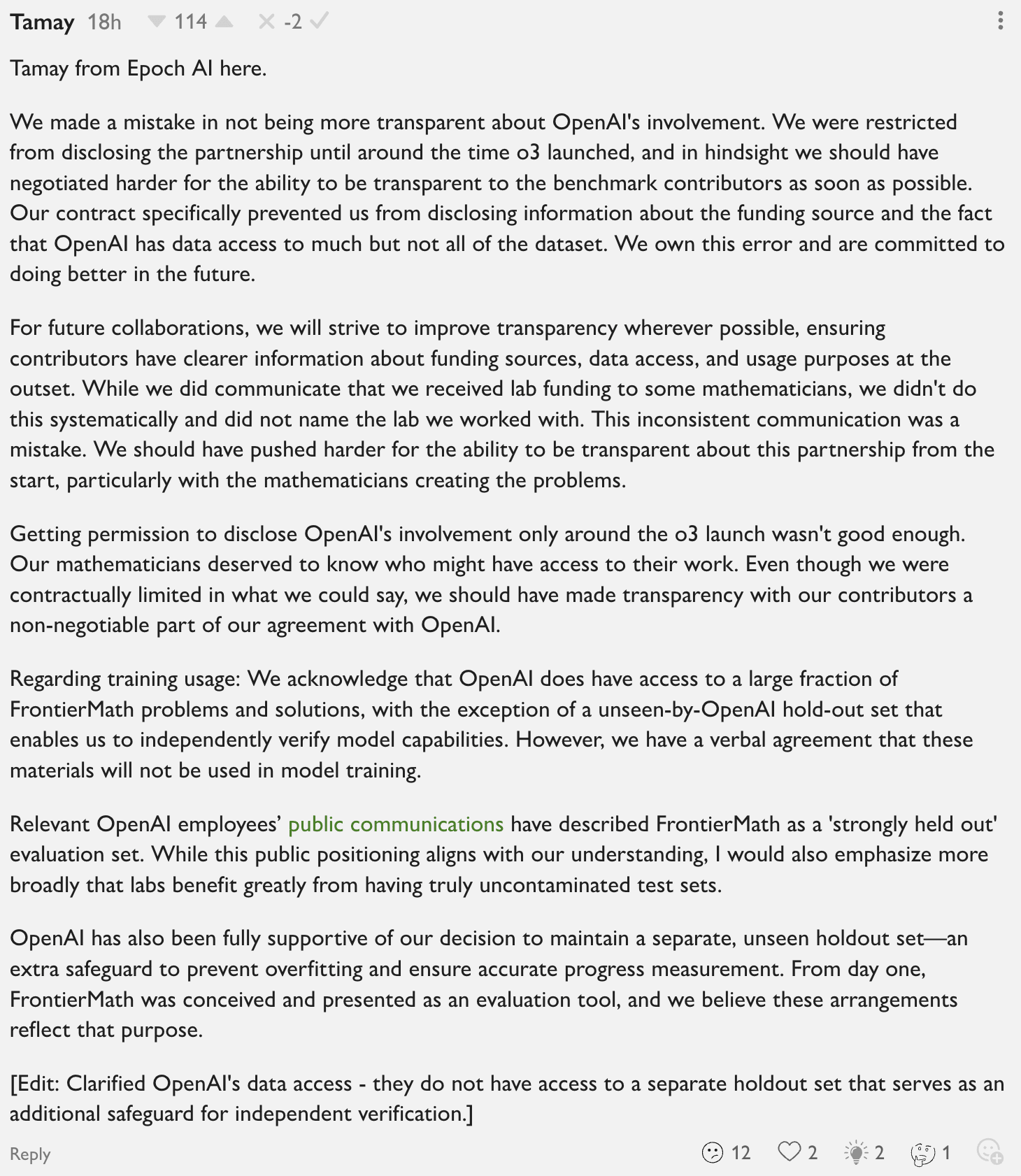

Recent open conversations about the situation

Also, in this shortform [LW(p) · GW(p)], Tamay from Epoch AI replied to this (which I really appreciate):

This was in reply in a comment thread in meemi's Shortform [LW · GW]:

However, we still haven't discussed everything, including concrete steps and the broader implications this has for AI benchmarks, evaluations, and safety.

Expectations from future work

Specifically, these are the things we need to be careful about in the future:

- Firstly, for all future evaluation benchmarks, we should aim to be completely transparent about:

- (i) who funds them, at least in front of the people actually contributing to it

- (ii) who will get complete or partial access to the data,

- (iii) a written, on-paper agreement that explicitly states guidelines on how the data will be used (training, validating, or evaluating the models or PRMs) as opposed to a verbal agreement.

- Secondly, I still consider this a very valuable benchmark - so what's the best we can do to ensure that similar concerns won't be an issue in future updates.

- Thirdly, I believe that AI safety researchers should be aware that in certain situations, building a shield can indirectly help people build a better sword.

I've been thinking about this last part a lot, especially the ways in which various approaches can contribute indirectly toward capabilities, despite careful considerations made to not do so directly.

Note: A lot of these ideas and hypotheses came out of private conversations with other researchers about the public announcements. I take no credit for this, and I may be wrong in my hypotheses about how exactly the dataset could have possibly been used. However I do see value in more transparent discussions around this, hence this post.

PS: I completely support Epoch AI and their amazing work on studying the trajectory and governance of AI, and I deeply appreciate their coming forward and accepting the concerns and willingness to do the best that can be done to fix things in the future.

Also, the benchmark is still extremely valuable and relevant, and is the best to evaluate advanced math capabilities. It would be great to get confirmation that it was not used in any other way than a single evaluation run.

4 comments

Comments sorted by top scores.

comment by james oofou (james-oofou) · 2025-01-19T23:24:28.860Z · LW(p) · GW(p)

It might as well be possible that o3 solved problems only from the first tier, which is nowhere near as groundbreaking as solving the harder problems from the benchmark

This doesn't appear to be the case:

https://x.com/elliotglazer/status/1871812179399479511

Replies from: satvik-golechhaof the problems we’ve seen models solve, about 40% have been Tier 1, 50% Tier 2, and 10% Tier 3

↑ comment by 7vik (satvik-golechha) · 2025-01-19T23:39:58.243Z · LW(p) · GW(p)

I don't think this info was about o3 (please correct me if I'm wrong). While this suggests not all of them were from the first tier, it would be much better to know what it actually was. Especially, since the most famous quotes about FrontierMath ("extremely challenging" and "resist AIs for several years at least") were about the top 25% hardest problems, the accuracy on that set seems more important to update on with them. (not to say that 25% is a small feat in any case).

comment by gugu (gabor-fuisz) · 2025-01-19T22:22:43.647Z · LW(p) · GW(p)

Secondly, OpenAI had complete access to the problems and solutions to most of the problems. This means they could have actually trained their models to solve it. However, they verbally agreed not to do so, and frankly I don't think they would have done that anyway, simply because this is too valuable a dataset to memorize.

Now, nobody really knows what goes on behind o3, but if they follow the kind of "thinking", inference-scaling of search-space models published by other frontier labs that possibly uses advanced chain-of-thought and introspection combined with a MCMC-rollout on the output distributions with a PRM-style verifier, FrontierMath is a golden opportunity to validate on.

If you think they didn't train on FrontierMath answers, why do you think having the opportunity to validate on it is such a significant advantage for OpenAI?

Couldn't they just make a validation set from their training set anyways?

In short, I don't think the capabilities externalities of a "good validation dataset" is that big, especially not counterfactually -- sure, maybe it would have took OpenAI a bit more time to contract some mathematicians, but realistically, how much more time?

Whereas if your ToC as Epoch is "make good forecasts on AI progress", it makes sense you want labs to report results on your dataset you've put together.

Sure, maybe you could commit to not releasing the dataset and only testing models in-house, but maybe you think you don't have the capacity in-house to elicit maximum capability from models. (Solving the ARC challange cost O($400k) for OpenAI, that is peanuts for them but like 2-3 researcher salaries at Epoch, right?)

If I was Epoch, I would be worried about "cheating" on the results (dataset leakage).

Re: unclear dataset split: yeah, that was pretty annoying, but that's also on OpenAI comms too.

I teeend to agree that orgs claiming to be safety orgs shouldn't sign NDAs preventing them from disclosing their lab partners / even details of partnerships, but this might be a tough call to make in reality.

I definitely don't see a problem with taking lab funding as a safety org. (As long as you don't claim otherwise.)

Replies from: satvik-golechha↑ comment by 7vik (satvik-golechha) · 2025-01-19T22:34:03.019Z · LW(p) · GW(p)

I definitely don't see a problem with taking lab funding as a safety org. (As long as you don't claim otherwise.)

I definitely don't have a problem with this as well - just that this needs to be much more transparent and carefully though-out than how it happened here.

If you think they didn't train on FrontierMath answers, why do you think having the opportunity to validate on it is such a significant advantage for OpenAI?

My concern is that "verbally agreeing to not use it for training" leaves a lot of opportunities to still use it as a significant advantage. For instance, do we know that they did not use it indirectly to validate a PRM that could in turn help a lot? I don't think making a validation set out of their training data would be as effective.

Re: "maybe it would have took OpenAI a bit more time to contract some mathematicians, but realistically, how much more time?": Not much, they might have done this indepently as well.