Rationalization Maximizes Expected Value

post by Kevin Dorst · 2023-07-30T20:11:26.377Z · LW · GW · 10 comments

This is a link post for https://kevindorst.substack.com/p/rationalization-maximizes-expected


TLDR: After making a choice, we often change how desirable we think the options were to better align with that choice. This is perfectly rational. How much you’ll enjoy an outcome depends on both (1) what you get, and (2) how you feel about it. Once what you get is settled, if you can get yourself to enjoy it more, that will make you happier.

Pomona. That was the college for me. California sun. Small classes. West-coast freedom.

There were others on the list, of course. Claremont McKenna. Northwestern. WashU. A couple backups. But Pomona was where my heart was.

Early April, admissions letters started arriving:

Northwestern? Waitlisted.

Claremont? Rejected.

Pomona? Rejected.

WashU? Accepted.

Phew.

…

Great.

…

Fantastic, in fact.

Now that I thought about it more, WashU was the perfect school. My brother was there. It was only 2 hours from home. And it made it much easier to buy that motorcycle I’d been dreaming about.

Like clockwork, within a few weeks I was convinced that this was the best possible outcome—“Thank goodness I didn’t get into Pomona; I might’ve made the wrong choice!”

Feel familiar? This is an instance of rationalization—or, if you like, the psychological immune system. When we end up with an option we initially don’t want, we often come to appreciate new things about it. Getting fired becomes an opportunity for growth. A breakup becomes a chance to find yourself. A rejection becomes an inspiration to work harder. These are normal, healthy responses to disappointment.

Even so, rationalization has a bad rap. It is often thought to be a paradigm case of irrationality: with hard-nosed realism, we’d see our failures for what they are—failures—and be wiser (if not happier) for that.

I think this is wrong. Generically, rationalization maximizes expected value. And it’s usually epistemically above-board as well.

The empirical findings

The classic study on rationalization is from 1956. Subjects were shown some household appliances (a coffee-maker, a toaster, etc.) and rated each for desirability on a scale from 1 to 8.

They were then allowed to choose from two “randomly selected” objects (which were in fact selected so that one item was desirable and the other varied in how far behind it was). After choosing, they were allowed time to think more about each item, and were again asked to rate them for their desirability.

The result? People’s second desirability ratings were higher for their chosen object and lower for the unchosen one that was an option. This held whether or not they were given new information about the items. Merely choosing seemed to shift how they perceived the options.

This basic finding has been replicated many times, using various experimental setups and measures. For example, it’s been found that people are more interested in information that supports their decisions than information which tells against them—especially when the decision is not reversible.

These results are old. Usually that means we should be worried about their replicability. But I think we should trust them. Unlike more surprising “findings” of the behavioral sciences—for example, that standing in a “power pose” can increase your testosterone—this pattern of rationalization is familiar from our everyday lives. My experience with colleges was perfectly ordinary.

Why it’s rational

Philosophers distinguish two types of rationality. Practical rationality is about getting what you want; epistemic rationality is about getting to the truth.

I think it’s pretty easy to show that rationalization is often practically rational. Very often, how desirable an outcome is depends on how much we’ll value it at the time we get it. Often this is (in part) under our control. So often it’s in our interest to come to appreciate the option more than we otherwise would.

Setting rationalization to one side, this point is obvious.

Recently a friend wanted to go to a musical, one that I wasn’t particularly excited about and wouldn’t have listened to otherwise. I knew that I tend to enjoy musical performances more if I listen to the soundtrack beforehand: I’m better able to get into it, follow along, etc.

So I had two separate choices:

Go to the musical, or not?

Listen to the soundtrack, or not?

On its own, I wouldn’t enjoy listening—that had negative value. But doing so would allow me to enjoy the performance more if I did go. So the possible values look something like:

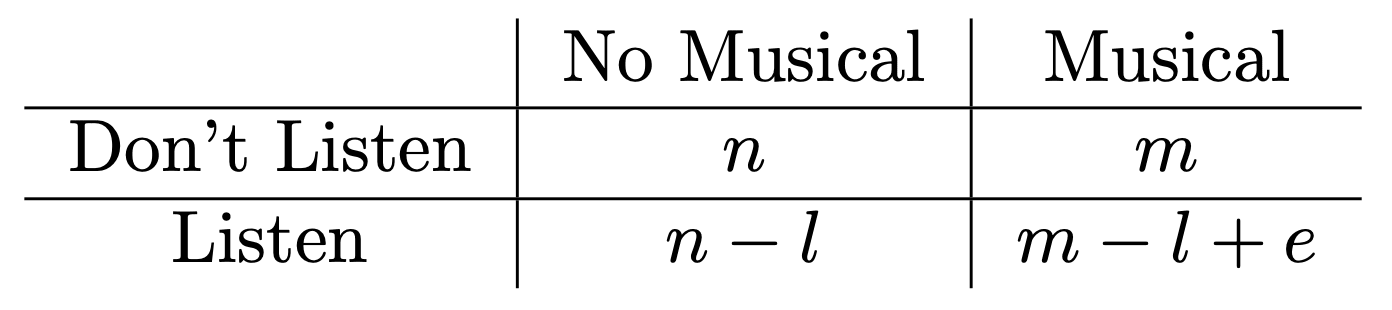

m is the value of going to the musical, n is the value of not going, l is the cost of listening, and e is the added enjoyment I’d get at the performance if I listened beforehand. Assuming this enjoyment outweighs the cost of listening (e > l), the only two potentially rational options are Musical+Listen (bottom right) or No Musical+Don’t Listen (top left): given that I don’t go to the musical, listening lowers the value from n to n − l; given that I do, listening raises the value from m to m − l + e.

In other words: I should either avoid the musical and take steps to make that choice as enjoyable as possible (don’t waste time listening to it); or go to the musical and take steps to make that choice as enjoyable as possible (listen to it beforehand).
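The comparison above can be sketched in a few lines of Python. This is only an illustration: the function names and the numbers are hypothetical, and the one assumption carried over from the text is that the extra enjoyment exceeds the cost of listening (e > l).

```python
from itertools import product


def evening_value(go, listen, m, n, l, e):
    """Value of the evening for one combination of the two choices."""
    base = m if go else n                   # m: value of going; n: value of not going
    cost = l if listen else 0.0             # l: cost of listening to the soundtrack
    bonus = e if (go and listen) else 0.0   # e: extra enjoyment, only if I listen AND go
    return base - cost + bonus


def payoff_table(m, n, l, e):
    """Map each (go, listen) combination to its value."""
    return {
        (go, listen): evening_value(go, listen, m, n, l, e)
        for go, listen in product([False, True], repeat=2)
    }


def best_plan(m, n, l, e):
    """Return the (go, listen) pair with the highest value."""
    table = payoff_table(m, n, l, e)
    return max(table, key=table.get)


# Hypothetical numbers, chosen only so that e > l.
print(payoff_table(m=5.0, n=4.0, l=1.0, e=3.0))
print(best_plan(m=5.0, n=4.0, l=1.0, e=3.0))  # going and listening wins here
print(best_plan(m=1.0, n=4.0, l=1.0, e=3.0))  # staying home and not listening wins here
```

Whichever way m and n compare, the winner is one of the two "matched" plans; the mixed plans (go without listening, or listen without going) are never best when e > l.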

Notice that this has the same structure as rationalization. When you make a choice—whether between musicals, appliances, or colleges—there are two factors that determine how happy you’ll be with it:

1. What option you choose; and

2. How much you desire that option.

Most work on the rationality of decision-making focuses on (1), assuming that your values (from (2)) are fixed beforehand. As discussed in the previous post, standard economic models assume stable and precise value functions. But for real people, what we value is not stable or fixed—it’s a fact about ourselves that we can deliberately alter.

So suppose you find yourself with your choice (i.e. (1)) fixed: you’re definitely getting a toaster; you’re definitely going to WashU. At this point, you still have agency, for you can modulate what you value, or even change who you are, to come to appreciate it more.

If what you care about is maximizing expected value, this is perfectly rational. In fact, it’d be irrational not to rationalize—you’d be leaving happiness on the table!

Wait, why’d we ever think it was irrational?

At this point this might seem a bit too obvious. Why did anyone ever think that rationalization was irrational? I think there are two potential reasons:

1) Objective facts about desirability?

One reason to think it’s irrational is likely to come from philosophers (not economists). Perhaps there are objective facts about how desirable (for a particular person at a particular time) the relevant options are. If so, then it seems like post-decision changes in desirability ratings must be a way of distorting your beliefs about those objective facts.

In other words, this line of thought says that rationalization may be practically useful, but it’s epistemically irrational.

This is probably what’s going on in some cases. But two points.

First, as this recent BBS article argues at length, there is often an informational signal in learning what you choose: your choice reveals implicit knowledge had by some parts of your mind that your conscious self didn’t have access to.

Here’s an example. Many years ago I met someone through a mutual friend—let’s call him Charlie. Charlie seemed extremely nice, gracious, and interested in others. Every time I hung out with him, I could only list or remember positive things about the interaction. Still, I found myself disliking him more and more, not excited about spending one-on-one time together. Eventually I started avoiding him altogether. If you asked me why, I’d have had little idea—“we just don’t get along”, I’d say. But that didn’t quite capture it—we got along perfectly fine. Still, something felt off.

Years later, I learned from others that Charlie had turned out to be a bad friend. He was unreliable, insincere, and treated some people (especially women) very badly.

The reason I was avoiding him, in hindsight, is that some part of me picked up on this. Some part of my subconscious (one for reading people and picking up on their patterns and intentions) had realized Charlie was not a great guy. My conscious mind had no direct access to this information. Still, the fact that I started avoiding him was a signal to that effect. Upon noticing those choices, it made perfectly good sense for me to try to think of reasons to rationalize my avoidance: there were reasons.

Upshot: sometimes rationalization is epistemically rational because it’s a way of picking up on information that’s unconsciously represented in your mind.

Still, there are reasons to think this mechanism doesn’t explain all patterns of rationalization.

But there’s a second reason why rationalization needn’t be epistemically irrational. Even if there are objective facts about desirability—and even if we’re not getting any information from the choices that were made—post-decision changes in our desirability ratings only indicate distortions if those objective facts aren’t under our control.

The case of me going to the musical shows why: there are objective facts about how enjoyable my evening will be, but I can affect them not only with my choice about whether to go to the musical, but also with my choice about whether to listen to it beforehand.

More generally, there’s ample evidence that mindset has genuine effects on objective metrics—even hormone levels or body weight and blood pressure—that contribute to how enjoyable (or good for you) an experience is.

If people rate the desirability of items based on how much they want them now—rather than how much they’ll come to want them in the future if they choose them—then the above empirical findings needn’t involve any distortion at all.

Instead, what’s happening is that after the choice is made—you’re getting the toaster—you come to change your mindset so that now the toaster is more valuable for you. (You resolve to eat more toast, say.) No distortion needed.

2) Money pumps?

A different objection to the rationality of rationalization comes from the economists. There’s a reason why standard economic models require stable preferences, after all: if your preferences (predictably) change over time, you can be subject to money pumps! That is: a series of trades which is guaranteed to result in giving away something you value.

To which the obvious reply is: So what?

Granted, ideal agents are not subject to money pumps. But we are not ideal agents—we didn’t need any clever psychological experiments to show that.

So here you are, having chosen the toaster. You can either rationalize your choice—coming to appreciate it more than the coffee-maker, and being happier with the outcome—or not.

If you do, you’ll be subject to a theoretically-possible money pump. But you’re extremely confident that no economist will jump out of the woodwork to money-pump you.

Meanwhile, if you don’t rationalize, you’ll be leaving happiness on the table. Given the rarity of money-pumps and the certainty of the incoming toaster, the option that actually maximizes expected value is to rationalize.[1]
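To make the comparison concrete, here is a toy expected-value calculation. Everything in it is an assumption for illustration: the function names, the dollar-like units, and especially the numbers, which are not taken from any study.

```python
def expected_value(base, boost, p_pump, pump_loss, rationalize):
    """Expected value of keeping the chosen item, with or without rationalizing."""
    if not rationalize:
        return base  # no boost, and no exposure to the pump in this toy model
    return base + boost - p_pump * pump_loss


def break_even_pump_probability(boost, pump_loss):
    """Pump probability above which rationalizing stops paying off."""
    return boost / pump_loss


# Hypothetical numbers: the toaster is worth 10, rationalizing adds 2,
# and a money pump would cost 5 but shows up with probability 0.001.
ev_yes = expected_value(10.0, 2.0, 0.001, 5.0, rationalize=True)
ev_no = expected_value(10.0, 2.0, 0.001, 5.0, rationalize=False)
print(ev_yes > ev_no)
print(break_even_pump_probability(2.0, 5.0))
```

On these made-up numbers, the pump would have to be hundreds of times more likely before declining to rationalize came out ahead.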

Upshot: rationalization is not only healthy and normal; it’s (arguably) healthy and normal because it’s rational. It helps you to get what you value—or, rather, to value what you get—and does so without necessarily being epistemically distorting.

So—as Sister Hazel tells us—next time you get something you don’t want, the solution is simple: Change your mind.

What next?

- For a great recent discussion of rationalization, see this BBS article on why “Rationalization is Rational” by Fiery Cushman, and the ensuing commentaries.

- For a less rosy picture of rationalization, see this recent paper by Jake Quilty-Dunn. For the gist, see this back-and-forth between us on (the previous version of) this blog.

[1] There are other responses to the money-pump concern. Notice that the table I drew for going to the musical involved no actual changes in values. Instead of a change in values, we can always fine-grain the options so that “getting a toaster with a negative mindset” and “getting a toaster with a positive mindset” are different options, meaning the “change” in desirability could simply reflect a change in which particular option you’re likely to get.

10 comments

Comments sorted by top scores.

comment by samshap · 2023-07-30T22:22:25.349Z · LW(p) · GW(p)

I agree that the type of rationalization you've described is often practically rational. And it's at most a minor crime against epistemic rationality. If anything, the epistemic crime here is not anticipating that your preferences will change after you've made a choice.

However, I don't think this case is what people have in mind when they critique rationalization.

The more central case is when we rationalize decisions that affect other people; for example, Alice might make a decision that maximizes her preferences and disregards Bob's, but after the fact she'll invent reasons that make her decision appear less callous: "I thought Bob would want me to do it!"

While this behavior might be practically rational from Alice's selfish perspective, she's being epistemically unvirtuous by lying to Bob, degrading his ability to predict her future behavior.

Maybe you can use specific terminology to differentiate your case from the more central one, maybe "preference rationalization"?

Replies from: Kevin Dorst, Avnix

↑ comment by Kevin Dorst · 2023-08-01T20:47:19.046Z · LW(p) · GW(p)

Nice point. Yeah, that sounds right to me—I definitely think there are things in the vicinity and types of "rationalization" that are NOT rational. The class of cases you're pointing to seems like a common type, and I think you're right that I should just restrict attention. "Preference rationalization" sounds like it might get the scope right.

Sometimes people use "rationalization" to mean something that is irrational by definition, as in "that's not a real reason, that's just a rationalization". And it sounds like the cases you have in mind fit that mold.

I hadn't thought as much about the cross of this with the ethical version of the case. Of course, something can be (practically or epistemically) rational without being moral, so there are some versions of those cases that I'd still insist ARE rational even if we don't like how the agent acts.

↑ comment by Sweetgum (Avnix) · 2023-07-31T20:04:01.514Z · LW(p) · GW(p)

Yes, and another meaning of "rationalization" that people often talk about is inventing fake reasons for your own beliefs, which may also be practically rational in certain situations (certain false beliefs could be helpful to you) but it's obviously a major crime against epistemic rationality.

I'm also not sure rationalizing your past personal decisions isn't an instance of this; the phrase "I made the right choice" could be interpreted as meaning you believe you would have been less satisfied now if you chose differently, and if this isn't true but you are trying to convince yourself it is to be happier then that is also a major crime against epistemic rationality.

comment by Richard_Kennaway · 2023-07-31T07:33:56.583Z · LW(p) · GW(p)

Once what you get is settled, if you can get yourself to enjoy it more, that will make you happier.

Why go the long way round, though? Rewrite your utility function already and you can have unlimited happiness without ever doing anything else.

And while I intend this as a reductio, "want what you have" is seriously touted as a recipe for happiness. (I am tickled by the fact that the "Related search" that Google shows me at the foot of that page is "i want what they have".)

In contrast:

Keltham holds forth to warn Pilar of the mistake called 'rationalization' -

- wait, it's called what in Taldane? That word shouldn't even exist! You can't 'rationalize' anything that wasn't Lawful to start with! That's like having the word for lying being 'truthization'!

— planecrash. Also this [LW · GW].

comment by Sweetgum (Avnix) · 2023-07-31T20:14:52.730Z · LW(p) · GW(p)

Wild speculation ahead: Perhaps the aversion to this sort of rationalization is not wholly caused by the suboptimality of rationalization, but also by certain individualistic attitudes prevalent here. Maybe I, or Eliezer Yudkowsky, or others, just don't want to be the sort of person whose preferences the world can bend to its will.

comment by Sweetgum (Avnix) · 2023-07-31T19:46:38.775Z · LW(p) · GW(p)

I wish you had gone more into the specific money pump you would be vulnerable to if you rationalize your past choices in this post. I can't picture what money pump would be possible in this situation (but I believe you that one exists.) Also, you not describing the specific money pump reduces the salience of the concern (improperly, in my opinion.) It's one thing to talk abstractly about money pumps, and another to see right in front of you how your decision procedure endorses obviously absurd actions.

Replies from: Kevin Dorst

↑ comment by Kevin Dorst · 2023-08-01T21:02:35.468Z · LW(p) · GW(p)

Fair! I didn't work out the details of the particular case, partly for space and partly from my own limited bandwidth in writing the post. I'm actually having more trouble writing it out now that I sit down with it, in part because of the choice-dependent nature of how your values change.

Here's how we'd normally money-pump you when you have a predictable change in values. Suppose at t1 you value X at $1 and at t2 you predictably will come to value it at $2. Suppose at t1 you have X; since you value it at $1, you'll trade it to me for $1, so now you're at +$1; then I wait for t2 to come around, and now you value X more, so I offer to trade you X for $2, so you happily trade and end up with X − $1, which is worse than where you started.
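As a rough sketch, the two trades in this standard pump can be traced in Python. The function name is hypothetical, and the default prices are simply the $1 and $2 valuations from the example above.

```python
def standard_money_pump(value_t1=1.0, value_t2=2.0):
    """Return (has_x, cash) for the agent after both trades."""
    has_x, cash = True, 0.0

    # t1: the agent values X at value_t1, so it accepts that price for X.
    has_x, cash = False, cash + value_t1

    # t2: the agent now values X at value_t2, so it pays that price to get X back.
    has_x, cash = True, cash - value_t2

    return has_x, cash


print(standard_money_pump())  # (True, -1.0)
```

The agent ends up holding X again but is $1 poorer, having accepted each trade willingly at the time.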

The trouble is that this seems like precisely a case where you WOULDN'T rationalize, since you traded X away. I think there will still be some way to do it, but haven't figured it out yet. It'd be interesting if not (it's MAYBE possible that the money-pump argument for fixed preferences I had in mind presupposed that how they change wouldn't be sensitive to which trades you make. But I kinda doubt it).

Let me know if you have thoughts! I'll write back if I have the chance to sit down and figure it out properly.

Replies from: Avnix

↑ comment by Sweetgum (Avnix) · 2023-08-03T20:29:29.817Z · LW(p) · GW(p)

I think I got it. Right after the person buys X for $1, you offer to buy it off them for $2, but with a delay, so they keep X for another month before the sale goes through. After the month passes, they now value X at $3 so they are willing to pay $3 to buy it back from you, and you end up with +$1.
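The delayed-sale version can be traced the same way. This is a hedged sketch: the function and variable names are made up, and it assumes (as the comment does) that the agent's valuation rises to $3 only because it keeps holding X during the delay.

```python
def delayed_sale_pump(sale_price=2.0, value_after_month=3.0):
    """Return (agent_cash, pumper_cash, agent_has_x) after the sale and buy-back."""
    agent_cash, pumper_cash = 0.0, 0.0
    agent_has_x = True  # the agent has just bought X, valuing it at $1

    # Today: the agent agrees to sell X for sale_price, effective in one month.
    # It keeps X in the meantime, so its valuation climbs to value_after_month.

    # One month later: the agreed sale goes through.
    agent_cash += sale_price
    pumper_cash -= sale_price
    agent_has_x = False

    # The agent now values X at value_after_month and pays that to buy it back.
    agent_cash -= value_after_month
    pumper_cash += value_after_month
    agent_has_x = True

    return agent_cash, pumper_cash, agent_has_x


print(delayed_sale_pump())  # (-1.0, 1.0, True)
```

The delay is what lets the valuation keep rising even though a sale has been agreed, which is the step the standard pump was missing.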

Replies from: samshap, Kevin Dorst

↑ comment by Kevin Dorst · 2023-08-13T18:47:05.344Z · LW(p) · GW(p)

Yeah, that looks right! Nice. Thanks!