Do incoherent entities have stronger reason to become more coherent than less?

post by KatjaGrace · 2021-06-30T05:50:10.842Z · LW · GW · 5 comments


My understanding is that various ‘coherence’ arguments exist of the form:

- If your preferences diverged from being representable by a utility function in some way, then you would do strictly worse in some way than by having some kind of preferences that were representable by a utility function. For instance, you will lose money, for nothing.

- You have good reason not to do that / don’t do that / you should predict that reasonable creatures will stop doing that if they notice that they are doing it.

For example, from Arbital:

Well, but suppose I declare to you that I simultaneously:

* Prefer onions to pineapple on my pizza.

* Prefer pineapple to mushrooms on my pizza.

* Prefer mushrooms to onions on my pizza.

...

Suppose I tell you that I prefer pineapple to mushrooms on my pizza. Suppose you're about to give me a slice of mushroom pizza; but by paying one penny ($0.01) I can instead get a slice of pineapple pizza (which is just as fresh from the oven). It seems realistic to say that most people with a pineapple pizza preference would probably pay the penny, if they happened to have a penny in their pocket.[1]

After I pay the penny, though, and just before I'm about to get the pineapple pizza, you offer me a slice of onion pizza instead--no charge for the change! If I was telling the truth about preferring onion pizza to pineapple, I should certainly accept the substitution if it's free.

And then to round out the day, you offer me a mushroom pizza instead of the onion pizza, and again, since I prefer mushrooms to onions, I accept the swap.

I end up with exactly the same slice of mushroom pizza I started with... and one penny poorer, because I previously paid $0.01 to swap mushrooms for pineapple.

This seems like a qualitatively bad behavior on my part. By virtue of my incoherent preferences which cannot be given a consistent ordering, I have shot myself in the foot, done something self-defeating. We haven't said how I ought to sort out my inconsistent preferences. But no matter how it shakes out, it seems like there must be some better alternative--some better way I could reason that wouldn't spend a penny to go in circles. That is, I could at least have kept my original pizza slice and not spent the penny.

In a phrase you're going to keep hearing, I have executed a 'dominated strategy': there exists some other strategy that does strictly better.
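The loop in the quoted passage can be replayed mechanically. Below is a minimal sketch, assuming the three stated preferences are the agent's only decision rule and that it accepts any affordable swap toward a slice it prefers. The names (`PREFERS`, `accepts`, `run_offers`) and the ten-cent starting wallet are made up for illustration and do not come from Arbital.

```python
# Hypothetical encoding of the quoted example, not code from Arbital.
# Each pair is (preferred slice, less preferred slice).
PREFERS = {
    ("onion", "pineapple"),
    ("pineapple", "mushroom"),
    ("mushroom", "onion"),
}

def accepts(current, offered, price_cents, wallet_cents):
    """Accept a swap if the offered slice is preferred and the price is affordable."""
    return (offered, current) in PREFERS and price_cents <= wallet_cents

def run_offers(start, wallet_cents, offers):
    """Apply a sequence of (offered_slice, price_cents) offers in order."""
    slice_, wallet = start, wallet_cents
    for offered, price in offers:
        if accepts(slice_, offered, price, wallet):
            slice_, wallet = offered, wallet - price
    return slice_, wallet

# The three offers from the quote: one paid swap, then two free ones.
offers = [("pineapple", 1), ("onion", 0), ("mushroom", 0)]
final_slice, final_wallet = run_offers("mushroom", 10, offers)
print(final_slice, final_wallet)  # mushroom 9
```

Every individual swap is one the agent endorses by its own lights, yet it ends up holding the slice it started with and one cent poorer.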

On the face of it, this seems wrong to me. Losing money for no reason is bad if you have a coherent utility function. But from the perspective of the creature actually in this situation, losing money isn’t obviously bad, or reason to change. (In a sense, the only reason you are losing money is that you consider it to be good to do so.)

It’s true that losing money is equivalent to losing pizza that you like. But losing money is also equivalent to a series of pizza improvements that you like (as just shown), so why do you want to reform based on one, while ignoring the other?

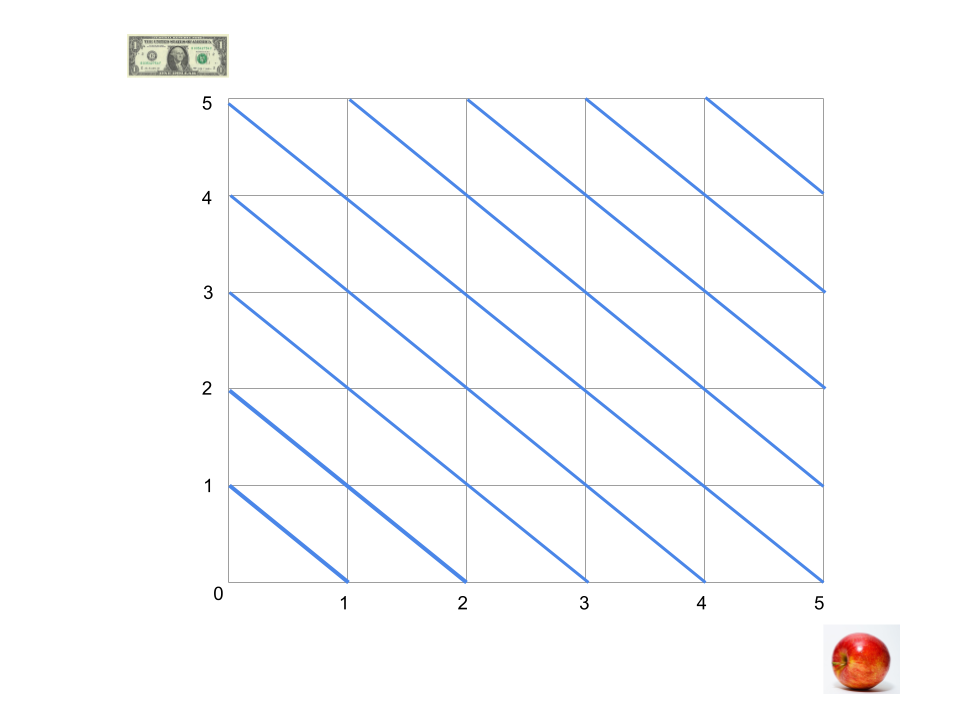

If you are even a tiny bit incoherent, then I think often a large class of things are actually implicitly worth the same amount. To see this, consider the following diagram of an entity’s preferences over money and apples. Lines are indifference curves in the space of items. The blue lines shown mean that you are indifferent between $1 and an apple on the margin across a range of financial and apple possession situations. (Not necessary for rationality.) Further out lines are better, and you can’t reach a further out line by traveling along whatever line you are on, because you are not indifferent between better and worse things.

Trades that you are fine with making can move your situation from anywhere on a line to anywhere on the same line or above it (e.g. you will trade an apple for $1 or anything more than that).

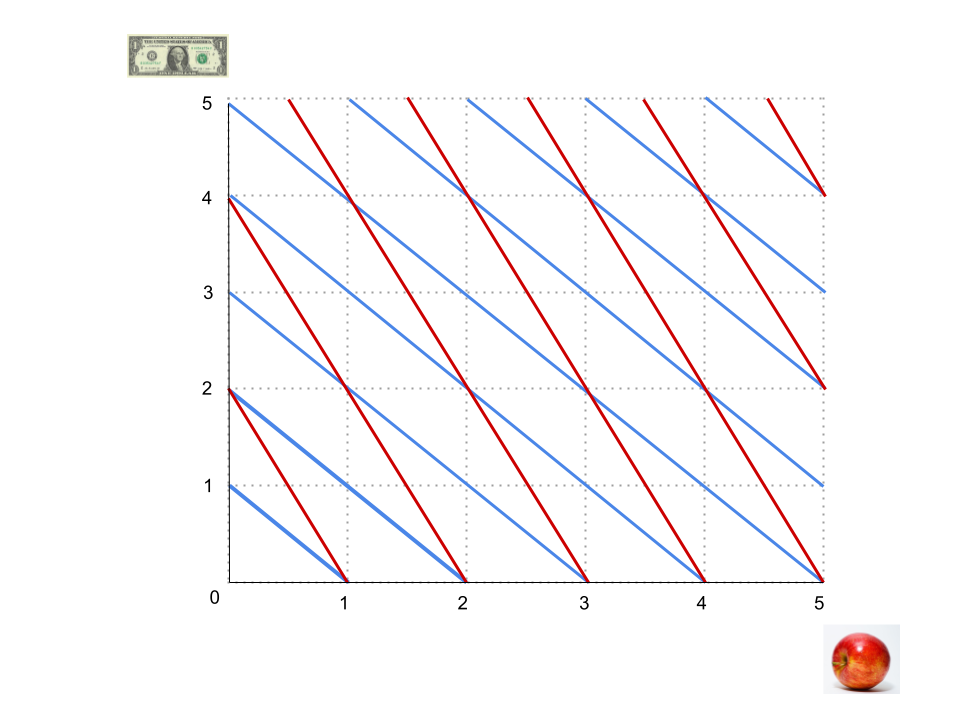

Now let’s say that you are also indifferent between an apple and $2, in general:

With two incoherent sets of preferences over the items in question, there are two overlapping sets of curves.

If indifference curves criss-cross, then you can move anywhere among them while remaining indifferent - as long as you follow the lines, you are indifferent, and the lines now get you everywhere. Relatedly, you can get anywhere while making trades you are happy with. You are now indifferent to the whole region, at least implicitly. (In this simplest case at least, basically you now have two non-parallel vectors that you can travel along, and can add them together to get any vector in the plane.)
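The parenthetical about two non-parallel vectors can be checked with a little arithmetic. This is a rough sketch, assuming the two indifference rates from the post ($1 per apple and $2 per apple) hold everywhere and that trades can be run in either direction and in any quantity. The names `TRADE_AT_1`, `TRADE_AT_2` and `decompose` are invented for illustration.

```python
from fractions import Fraction

# Each trade is a vector (change in apples, change in dollars).
# Assumed from the post: indifferent at $1 per apple and also at $2 per apple.
TRADE_AT_1 = (Fraction(1), Fraction(-1))  # gain one apple, pay $1
TRADE_AT_2 = (Fraction(1), Fraction(-2))  # gain one apple, pay $2

def decompose(d_apples, d_dollars):
    """Return (a, b) with a*TRADE_AT_1 + b*TRADE_AT_2 == (d_apples, d_dollars).

    A negative amount means running that trade in reverse (selling apples).
    Solved with Cramer's rule; the determinant is nonzero because the two
    trade vectors are not parallel.
    """
    (x1, y1), (x2, y2) = TRADE_AT_1, TRADE_AT_2
    det = x1 * y2 - x2 * y1
    a = (Fraction(d_apples) * y2 - x2 * Fraction(d_dollars)) / det
    b = (x1 * Fraction(d_dollars) - Fraction(d_apples) * y1) / det
    return a, b

# Moving from four apples to two apples with no change in money:
a, b = decompose(-2, 0)
print(int(a), int(b))  # -4 2
```

Read as trades, the result says: sell four apples at $1 each, then buy two back at $2 each. Both steps are ones the entity is indifferent to, and the net effect is two fewer apples for no money. Because `decompose` has a solution for every target, any change in holdings can be reached this way.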


For instance, here, four apples are seen to be equally preferable to two apples (and also to two apples plus two dollars, and to one apple plus two dollars). Not shown because I erred: four apples are also equivalent to $-1 (which is also equivalent to winning the lottery).

If these were not lines, but higher-dimensional planes with lots of outcomes other than apples and dollars, and all of the preferences about other stuff made sense except for this one incoherence, the indifference hyperplanes would intersect and the entity in question would be effectively indifferent between everything. (To see this a different way, if it is indifferent between $1 and an apple, and also between $2 and an apple, and it considers a loaf of bread to be worth $2 in general, then implicitly a loaf of bread is worth both one apple and two apples, and so has also become caught up in the mess.)

(I don’t know if all incoherent creatures value everything the same amount - it seems like it should maybe be possible to just have a restricted region of incoherence in some cases, but I haven’t thought this through.)

This seems related to having inconsistent beliefs in logic. Once you believe a contradiction, everything follows. Once you evaluate incoherently, every evaluation follows.

And from that position of being able to evaluate anything any amount, can you say that it is better to reform your preferences to be more coherent? Yes. But you can also say that it is better to reform them to make them less coherent. Does coherence for some reason win out?

In fact slightly incoherent people don’t seem to think that they are indifferent between everything (and slightly inconsistent people don’t think they believe everything). And my impression is that people do become more coherent with time, rather than less, or a mixture at random.

If you wanted to apply this to alien AI minds though, it would seem nice to have a version of the arguments that goes through, even if just via a clear account of the pragmatic considerations that compel human behavior in one direction. Does someone have an account of this? Do I misunderstand these arguments? (I haven’t actually read them for the most part, so it wouldn’t be shocking.)

5 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2021-06-30T14:15:38.334Z · LW(p) · GW(p)

The starting point is that future states can be better or worse from the perspective of people (or any other evolved creature). Maybe it's not totally ordered, but a future where I'm getting tortured and everyone hates me is definitely worse than a future where I'm feeling great and everyone loves me.

This is important because I think coherence (the way people use the term) only makes sense when there are preferences about future states—not preferences about trajectories, or preferences about actions, or preferences about decisions. Like, maybe I think it's a fun game to make the waiter keep switching my pizza for hours straight, well worth the few dollars that I lose. Or maybe I think it's always deontologically proper to give the answer "Yes I'll switch pizzas" when a waiter asks me if I want to switch pizzas, regardless of the exact pizzas that they're asking me about.

OK, now so far we have people with preferences about future states (possibly among other preferences). Now those people make AIs. Presumably they'll correspondingly build them and train them to actualize those preferences about future states.

So from a certain perspective, we already have our answer:

We shouldn't expect an AI to wind up concluding that all future states are equally good, because the designers don't want that to happen, and they'll presumably design the AI accordingly.

But let's drill down a bit and ask how. The answer of course depends on the AI's algorithms. Let's go with what happens in the human brain (at least, how I think the human brain works), as a possible architecture of a general intelligence.

It's not computationally feasible to conceptualize the whole world in a chunk. Instead our understanding of the world is built up from lots of little compositional pieces—little predictive models, but (like Logical Induction) the models aren't predicting every aspect of the world simultaneously, they pattern-match some aspect of what's going on (in either sensory inputs or thoughts), then activate, then make one or more narrow predictions about some aspect of what's going to happen next. Like there's a pattern "the ball is falling and it's going to hit the floor and then bounce back up", and this pattern is agnostic about color of the ball, how far away it is, how hungry I am, etc.

Then our plans are also built up from these little compositional pieces, and (to a first approximation) we come to have preferences about those pieces: some pieces are good (like "I'm impressing my friends") and some pieces are bad (like "this is painful"). But (again kinda like logical induction), there's no iron law enforcing global consistency of these preferences a priori. Instead there are processes that generally tend to drive preferences towards consistency. What are these processes?

Let's go through an example: a particular plausible human circular preference (based loosely on an example in Thinking Fast And Slow chapter 15). You won a prize! Your three options are:

(A) 5 lovely plates

(B) 5 lovely plates and 10 ugly plates

(C) 5 OK plates

No one has done this exact experiment to my knowledge, but plausibly (based on the book discussion) this is a circular preference in at least many people: When people see just A & B, they'll pick B because "it's more stuff, I can always keep the ugly ones as spares or use them for target practice or whatever". When they see just B & C, they'll pick C because "the average quality is higher". When they see just C & A, they'll likewise pick A because "the average quality is higher".

So what we have is two different preferences ("I want to have a prettier collection of stuff, not an uglier collection", and "I want extra free plates"), and different comparisons / situations make different aspects salient.

Again, what's happening is that it's computationally intractable to hold "an entire future situation" in our mind; we need to attend to certain aspects of it and not others. So we're naturally going to be prone to circular preferences by default.

OK, then what happens if you actually try to set up the money pump? You offer A, then $0.25 to switch to B, then $0.25 to switch to C, etc. I think the person would quickly catch on, because they'll also do the comparison "three steps ago versus now", and they'll notice that (unless switching is inherently fun as discussed above) they're now doing worse in every respect. And thus, they should stop going around in circles.
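Here is a toy version of that money pump, with and without the "three steps ago versus now" comparison. It is only a sketch under assumptions: the pairwise choices are hard-coded as described above, the lookback is modelled as "refuse a switch that would leave me with plates I have already held, but with more money spent", and all names (`PAIRWISE_WINNER`, `run_pump`) are hypothetical.

```python
# Pairwise choices as described in the comment (hypothetical encoding):
# seeing A & B pick B, seeing B & C pick C, seeing C & A pick A.
PAIRWISE_WINNER = {
    frozenset("AB"): "B",
    frozenset("BC"): "C",
    frozenset("CA"): "A",
}

def run_pump(offers, fee_cents, lookback):
    """Start with option A and process switch offers, paying a fee per switch.

    With lookback=True, the agent also compares the would-be outcome with
    the first time it held the offered option and stops if it would hold
    the same plates after paying more.
    """
    holding, paid = "A", 0
    seen = {"A": 0}  # option -> total paid when it was first held
    for offered in offers:
        if PAIRWISE_WINNER[frozenset((holding, offered))] != offered:
            continue  # the pairwise comparison does not favor switching
        if lookback and offered in seen and paid + fee_cents > seen[offered]:
            break  # same plates as before, but poorer: stop
        holding, paid = offered, paid + fee_cents
        seen.setdefault(holding, paid)
    return holding, paid

offers = list("BCABCA")
print(run_pump(offers, 25, lookback=False))  # ('A', 150)
print(run_pump(offers, 25, lookback=True))   # ('C', 50)
```

Without the lookback the agent goes around twice and pays $1.50 to end up where it began. With it, the agent declines the switch that would close the first loop, so the pump stalls after two fees.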

Basically, "other things equal, I prefer to have more money" (i.e. don't get money-pumped) is also a preference about future states, and in fact it's a "default" preference because of instrumental convergence.

Or another way of looking at it is: you can (and naturally do) have a preference "Insofar as my other preferences are self-contradictory, I should try to reduce that aspect of them", because this is roughly a Pareto-improving thing to do. All of my preferences about future states can be better-actualized simultaneously when I adopt the habit of "noticing when two of my preferences are working at cross-purposes, and when I recognize that happening, preventing them from doing so". So you gradually build up a bunch of new habits that look for various types of situations that pattern-match to "I'm working at cross-purposes to myself", and then execute a Pareto improvement—since these habits are by default positively reinforced. It's loosely analogous to how markets become more self-consistent when a bunch of people are scouting out for arbitrage opportunities.

comment by Bucky · 2021-07-04T16:25:30.991Z · LW(p) · GW(p)

I don’t know if all incoherent creatures value everything the same amount - it seems like it should maybe be possible to just have a restricted region of incoherence in some cases, but I haven’t thought this through.

This puts me in mind of sacred vs secular values - one can be coherent and the other incoherent as long as you refuse to trade between the 2. Or, more properly, as long as only 1-way trade is allowed which seems like the way sacred vs secular values work.

comment by tailcalled · 2021-06-30T08:21:16.745Z · LW(p) · GW(p)

"Incoherent entities have stronger reason to become more coherent than less" is very abstract, and if you pass through the abstraction it becomes obvious that it is wrong.

This is about the agent abstraction. The idea is that we can view the behavior of a system as a choice, by considering the situation it is in, the things it "could" do (according to some cartesian frame I guess), and what it then actually does. And then we might want to know if we could say something more compact about the behavior of the system in this way.

In particular, the agent abstraction is interested in whether the system tries to achieve some goal in the environment. It turns out that if it follows certain intuitively goal-directed rules like always taking a decision and not oscillating around, it must have some well-defined goal.

There's then the question of what it means to have a reason. Obviously for an agent, it would make sense to treat the goal and things that derive from it as being a reason. For more general systems, I guess you could consider whatever mechanism it acts by to be a reason.

So will every system have a reason to become an agent? That is, will every system regardless of mechanism of action spontaneously change itself to have a goal? And I was about to say that the answer is no, because a rock doesn't do this. But then there's the standard point about how any system can be seen as an optimizer by putting a utility of 1 on whatever it does and a utility of 0 on everything else. That's a bit trivial though and presumably not what you meant.

So the answer in practice is no. Except, then in your post you changed the question from the general "systems" to the specific "creatures":

you should predict that reasonable creatures will stop doing that if they notice that they are doing it

Creatures are produced by evolution, and those that oscillate endlessly will tend to just go extinct, perhaps by some other creature evolving to exploit them, and perhaps just by wasting energy and getting outcompeted by other creatures that don't do this.

comment by Svyatoslav Usachev (svyatoslav-usachev-1) · 2021-07-02T20:57:09.973Z · LW(p) · GW(p)

My anecdotal experience of being a creature shows that I am very happy when I don't feel like an agent, coherent or not. The need for being an [efficient] agent only arises in the context of an adverse situation, e.g. related to survival, but agency and coherence are costly, in so many aspects. I am truly blessed when I am indifferent enough not to care about my agency or coherence.

comment by [deleted] · 2021-07-02T18:48:49.212Z · LW(p) · GW(p)

To add a simple observation to the more detailed analysis: human brains have real-world noise affecting their computations. So the preference they exhibit when their internal preferences are almost the same is going to be random. This is also the optimal strategy for a game like rock paper scissors: to randomly choose from the 3 classes, because any preference for a class can be exploited, like you found out.

We can certainly make AI systems that exhibit randomness whenever 2 actions being considered are close together according to their value heuristic.
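One way such randomness could look, as a minimal sketch: pick uniformly among all actions whose estimated value is within some tolerance of the best one. The function name `choose`, the `tolerance` parameter and the example values are assumptions made for illustration, not a description of any existing system.

```python
import random

def choose(values, tolerance, rng=random):
    """Pick the best action, randomizing among actions within `tolerance` of the best.

    `values` maps each action name to its estimated value.
    """
    best = max(values.values())
    # Sort so the candidate order does not depend on dict insertion order.
    near_best = sorted(a for a, v in values.items() if best - v <= tolerance)
    return rng.choice(near_best)

# Clear winner: the choice is deterministic.
print(choose({"rock": 1.0, "paper": 0.2, "scissors": 0.1}, tolerance=0.05))  # rock

# Near-ties: the choice varies from call to call.
rng = random.Random(0)
close = {"rock": 1.00, "paper": 0.99, "scissors": 0.98}
picks = {choose(close, tolerance=0.05, rng=rng) for _ in range(200)}
print(sorted(picks))
```

An observer offering pairwise swaps among near-tied options then sees no stable preference to exploit, while clearly better options are still always taken.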