The Mirror Chamber: A short story exploring the anthropic measure function and why it can matter

post by mako yass (MakoYass) · 2022-11-03T06:47:56.376Z · 13 comments


This story motivates the study of the hard problem of consciousness by presenting a plausible future scenario in which anthropic measure, understood as a quantifiable property of an arrangement of matter, demands an operational definition on pain of death.

I know of no other piece of writing that motivates the hard problem of consciousness this forcefully, or at all, really.

The first section explains the quite plausible future history that could lead to the Mirror Chamber occurring in reality. If you are in a hurry, it's okay to skip straight to the section "The Mirror Chamber".

Our World

It's expensive to test new artificial brains. It's expensive because you can't tell whether a brain is working properly without putting some real human mind in it. For a long time, you just had to find someone who'd been granted a replication license and who was willing to put their life (or what is essentially the life of a close sibling) at risk just to help a manufacturing org make a small incremental step forward.

You can't just acquire a replication license and give it to the manufacturing org's CEO. Replication licenses cannot be bought. If they could be, they would be very expensive in the wake of the Replica Insurgencies, the ongoing Replica Purges, and all of the controversy over Replica Enfranchisement.

Nor can you take one of the "free" mind-encodings that abound over the shaded nets and use it in secret. We do have our Jarrets, who will claim to have consented to be instantiated in any situation their downloaders might desire, however perverted. For better or worse we have decided that Jarretism is a deeply wretched form of insanity, closely linked to open Carcony. It is illegal to intentionally transmit or instate any of the mind-encodings traded on the shaded nets.

I should explain Carcony. Carcony is what we call the crime underlying the insurgencies. It is a sickness in the body of mankind, in which an errant contingent has begun to replicate like a plague and to build armies, factories, and gross, hypernormative nations where the openness and compromise of genuine humanity are not present.

Our society's memory of Carcony is still bleeding. The laws governing these sorts of activity are rightly very restrictive, but the work still needs to be done. Faster, more efficient neobrains need to be tested. Sound heads won out, a dialogue was had, a solution was devised.

A new professional class was lifted up to stand as proudly as any Doctor or any Lawyer. After 15 years of training in metaethics and neonal engineering, a person could begin practice as a Consenter.

A Consenter is a special kind of person who is allowed to undergo replication into untested hardware and then, within hours, commit replicide, without requiring a replication license. In light of this, the neobrain manufacturing sector became a lot more efficient. We all became a lot saner, more secure, more resilient, and faster, so much faster, all the better to savour spring, to watch bees' wings flap, to bathe in endless sunsets, to watch gentle rains drift down to earth. It is very good that consenters exist.

The Mirror Chamber

Consenter Nai Stratton enters the mirror chamber.

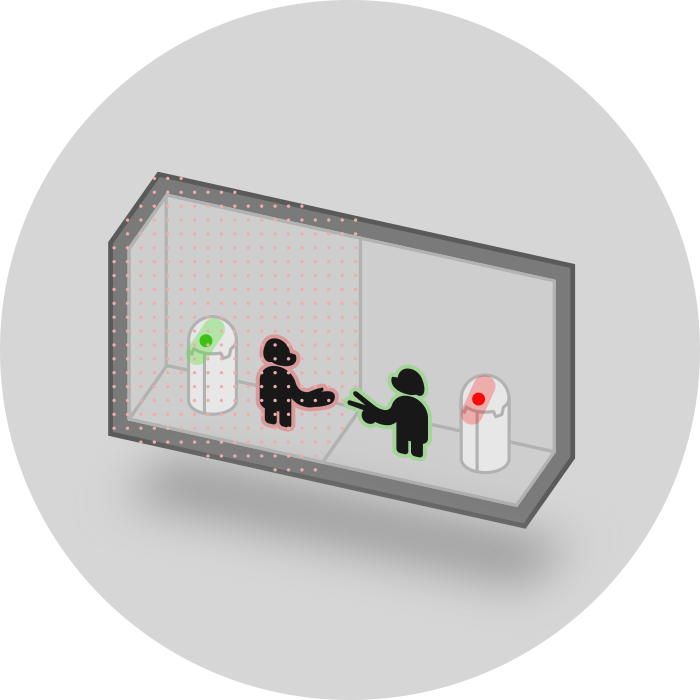

A mirror chamber is a blinding method designed to make the undertaking of self-murder as comfortable and humane as possible.

One of the walls of the mirror chamber displays a projection of a simulation of the chamber and its contents. The simulation is superficially indistinguishable from reality (as long as operating procedure was followed and the consenter has not brought any unexpected items into the room). Soon, the reflection of the chamber will also contain a reflection of Nai. It will start out as a perfect reflection, but it will soon diverge and become a separate being. Vitally, nothing about this divergence will tell either instance of Nai whether they are the original Nai (the executor) or the replica (who is to be executed). This is important. It's much easier for a consenter to morally justify letting the guillotine fall when they're taking on an even risk of being the one in the stocks, and a blinded setup is simply more humane. The two instances can make the decision together on equal footing, each taking on exactly the same amount of risk, each having memories of being on the right side of the mirror many times before and no memories of being on the wrong side, and each tacitly feeling that they will go on to live a long and happy life.

Taking up one side of the room is a testing rig. The testing rig will take the test build's brain case and run it through all of the physical trauma that a new brain prosthetic must be able to withstand without permitting one flicker of a mental disturbance to graze its occupant soul. The nature and quantity of the physical trauma varies depending on the test build's rating. Under consenter-driven rapid iterative testing, builds are becoming hardier every year; consequently, the trauma regimen becomes more harrowing to oversee.

Nai pours the neonal media into the brain case and inserts the new build's specification chip into a slot beside it. The neonal media rapidly turns opaque and starts to orient itself in the shape of a human brain. Nai had read and mostly understood the specification of the new build, of course. Today, the latest advances in materials science and neonal engineering had motivated a much larger active brain portion and much thinner shielding than previous models had. Artificial brains (the parts that resemble a brain) were usually miniatures. Shielding was very important. Or it had been. Nai's current brain was a miniature. Neo-cells were more compact than organic cells. It had just made sense.

Nai had understood that a transition towards having larger neonal portions was what made sense now, but seeing the thing was something else. Something inarticulable begins to stir in Nai's chest.

Nai closes the brain case, sits down on a fixed seat opposite the testing armature, looks into the black reflective surface of the dormant mirror, and connects to a brain jack. After ten minutes of scanning, copying, and synchronization, the discontinuity is marked: all at once, a white flash fills the room and a siren blares as the mirror turns white.

The mirror wall fades away to reveal a projection of the other room.

By design, the Consenter no longer knows which side of the mirror they are on. The original retains their memories of being the original, but the replica has those too. The original stays in their physical body, and the replica feels the sensations of a simulated body, but nowhere in the chamber is there any hint by which either instance could figure out which one they are. And yet, the fates of these two near-identical beings will be very different.

As is customary, the two instances begin a game of Paper Scissors Rock.

(Paper, Paper), (Paper, Paper), (Rock, Rock), (Paper, Paper), (Paper, Scissors). Paper and Scissors are named.

As is custom, the loser speaks first.

Paper: "Cassowary". A random phrase spoken without much thought.

Scissors: "Cassowaries were able to lift their clawed foot and rend a person's guts right open, on a whim."

Paper: "They wouldn't do it just on a whim though."

Scissors: "Yes, always some reason for gutting a person, bouncing around in that tiny brain."

Paper smiles: "Do you feel big-brained today, Scissors?"

Scissors remembers that the test build has a physically larger brain. Over this vaguely threatening gallows humor, eye contact is broken. A difference is established.

Scissors: "Do you remember what it felt like, to have a larger brain?"

Nai had begun Continuous Neuron Replacement at age nineteen; then the glials and the neoglials were ejected, and Nai transitioned to a compacted, shielded form, just like everyone else.

Nai is now seventy-eight. It had been a while.

Paper wonders, what does it feel like to be... more? If there were two of you, rather than just one, wouldn't that mean something? What if there were another, but it were different?... so that-

Scissors: "Well?"

Paper: "Well what?"

Scissors: "What would it feel like? To be... More?... What if there were two of you, and one of me? Would you know?"

Paper: "But that's different."

Scissors: "How's it different? You know how the test build is architected. Literally, redundant parallel lattices. It's not made of fatter, heavier neocells. It's made of a larger number of neocells, arranged as partially independent parallel networks to reduce and correct traumatic shearing."

It was true. The build that plays host to the replica (provisionally named "Wisp-Complete"), unlike the original's own build, is effectively three brains interleaved. But, says Paper, "The triplets are so closely entangled in state that they're functionally indistinguishable from one neocell. How could that matter?"

Scissors: "There are still three of them. If I could somehow start three separate deterministic simulations of you all doing the same things at the same time, what is the probability that you're really one of those simulations? Okay, now would you expect the probabilities to be different if we had three actively synchronised parallel units that were now physically connected? Why should that make a difference?"

Each instance sees on the face of the other the same anxiety, building.

They are hesitant to openly verbalise the source of the anxiety. I will omit their attempts to dismiss it. As it is written, they do not succeed. To put it simply: each instance has now realised that the replica, its brain being physically more massive, has a higher expected anthropic measure than the original.

This violates one of the core requirements of the mirror chamber. It is no longer rational for a selfish agent in the position of either Paper or Scissors to consent to the execution of the replica, because it is more likely than not, from either agent's perspective, that they are the replica. The rational decision for a selfish agent instead becomes...

Well... I'll have to unpack this a bit first. Our consenters have had many, many decades to come to terms with these sorts of situations.

There are effectively four brains in the room. One Cloud-Sheet lattice in the original's brain case, and three redundant Wisp-Complete lattices in the test build, in the testing armature.

That gives any randomly selected agent that has observed that it is in the mirror chamber a 3/4 majority probability of being the replica, rather than being the original.

But aren't our consenters perfectly willing to take on a hefty risk of death in service of progress? No. Most Consenters aren't. Selling one's mind and right to life in exchange for capital would be illegal. In a normal mirror chamber setup, when the original enters the mirror chamber, they are confident that it is the original who will walk out again. They are taking on no personal risk. None is expected, and none is required.

The obvious ways of defecting from protocol (an abdication of the responsibility of the consenter, a refusal to self-murder, an attempt to replicate without a replication license) are taken as nothing less than Carcony. Carcony is punished with the deaths of both copies and any ancestors of less than 10 years of divergence or equivalent. It is generally not smart to engage in Carcony. Taking the replica's build from the testing rig and running off with it would give each copy less than a one percent probability of surviving.

But what if, somehow, the original were killed? What if neither instance of the Consenter signed for their replica's execution, and the replica were left alive? That would not be considered Carcony. It would not even be considered theft, because a brain legally always belongs to its occupant mind.

And the replica's vessel would be rescued, inserted into a new body, and set free (if, perhaps, ignominiously).

So, do you see now? Do you see how Consenter Nai Paper-Chell-Glass-Stratton was motivated by a simple alignment of the payoff matrices?

After an expanse of silence in the dismal wake of their attempts to escape this revelation, it was Paper who spoke first: "We have to do it together, at the same time."

Scissors: "I hope you're not being sentimental."

Paper: "You wouldn't do that to me. Look... if you're the original... and I do myself, and I'm the replica. I won't actually be dead, because destroying a representation of your brain case in a simulation doesn't actually destroy you in real life. I might not even desync. Whatever happens, I'll still be alive after the penetration, so I'll know I'm the replica, but you might not know. It might look like I'm really dead. And you'll have no incentive to follow through and do yourself at that point."

Scissors: "I still don't see it."

Paper: "... If you're the replica, it doesn't matter whether you do yourself, you'll still get saved either way, but you're incented not to do yourself because having a simulated spike stuck through your simulated head will probably be pretty uncomfortable. But also, if you're the original, you're sort of doomed either way, you're just incented to run off and attempt Carcony, but the replica would definitely be caught and executed if you did that, and you'd probably be executed too, so you wouldn't do that to me. Would you?"

Scissors wipes away a tear. "Of course not... this is... Your decision is acausally entangled with mine as directly as any decision ever can be. Karma applies. I would not do that. You fucking know that I wouldn't do that. Why are we like this? What scar is hewn upon our hearts that we'd suspect our own replicas of defection against self?"

Paper looks down. "Yeah. Sorry. We're a real piece of work. This probably isn't a good time for us to get into that."

They each walk to their respective testing armature, and ready them for what needs to be done.

The test build is an order of magnitude hardier than Nai's older Cloud-Sheet. As such, the testing armature is equipped to apply enough pressure to pierce the Cloud-Sheet's shielding, and so it became possible for the instances to conspire to commit the legal murder of Consenter Nai Scissors-Chell-Glass-Stratton.

A few things happened in the wake of Consenter Paper Stratton's act of praxis.

Proposals were made to use singular, thicker neo-cells instead of three redundant lattices, but most consenter-adjacent philosophers took the position that it was ridiculous to expect this to change the equations: a brain built from cells with thrice the mass should be estimated to have about thrice the anthropic measure of a small neobrain, and the same amount of measure as a triple-redundant lattice. The consenter union banned the use of mirror chambers in any case where the reasonable scoring of the anthropic measure of the test build was higher than the reasonable scoring of a consenter's existing build.

In some such cases, a lower sort of consenter would need to be found. Someone who could see two levers, one that had a 2/3 chance of killing them, and one that had a 1/3 chance of killing them, then pull the first one because it was what their employers preferred. Consenter Replica Paper Stratton was adored, but in their wake, the specialisation as it really existed lost some of its magic.

Mainkult philosophy had been lastingly moved. We could say we finally knew what anthropic measure really was. Anthropic measure really was the thing that caused consenter originals to kill themselves.

A realization settled over the stewards of the garden in the stars. The world that humanity had built was so vast that every meaningful story had been told, the worst had happened, and the best had happened; nothing could be absolutely prevented, and there was no longer anything new that was worth seeing.

And if that wasn't true of our garden, we would look out along the multiverse hierarchy and we would know how we were reflected infinitely, in all variations.

Some found this paralyzing. If the best had happened and the worst had happened, what was left to fight for?

It became about relative quantities.

Somewhere, a heart would break. Somewhere else a heart would swell. We just needed to make sure that more hearts swelled than broke.

We remembered Paper Stratton. How do you weigh two different hearts and say which one contains more subjective experience? Nai, from their place in orbit of Sol, answered, "With a scale." So it came to be that the older Dyson fleets, with fatter, heavier neonal media in their columns, would host the more beautiful dreams. The new fleets, with a greater variance in neonal density, would host the trickier ones.

We couldn't test for anthropic measure. There was no design that could tease it out of the fabric of existence so that we could study its raw form and learn to synthesize it. As far as any of us could tell, anthropic measure simply was the stuff of existence.

13 comments


comment by mako yass (MakoYass) · 2022-02-12T23:55:46.892Z

Interestingly, we just noticed that Turing tests are natural mirror chambers!

A Turing test is a situation where a human goes behind a curtain and answers questions in earnest, and an AI goes behind another curtain and has to convince the audience that it's the human participant.

A simple way for an AI to pass the Turing test is to construct and run a model of a human who is having the experience of being a human participant in the Turing test, then say whatever the model says. [1]

In that kind of situation, as soon as you step behind that curtain, you can no longer know what you are, because you happen to know that there's another system, one that isn't really a human, that is having the exact experience you are having.

You feel human, you remember living a human life, but so does the model of a human that's being simulated within the AI.

[1] But this might not be what the AI does in practice. It might be easier and more reliable to behave in a way more superficially humanlike than a real human would or could behave, using little tricks that can only be performed with self-awareness. In this case, the model would know it's not really human, so the first-hand experience of being a real human participating in a Turing test would be distinguishable from it. If you were sure of this self-aware actor theory, then it would no longer be a mirror chamber, but if you weren't completely sure, it still would be.

comment by Max Kaye (max-kaye) · 2020-08-22T19:36:19.096Z

This is commentary I started making as I was reading the first quote. I think some bits of the post are a bit vague or confusing, but I think I get what you mean by anthropic measure, so it's okay in service to that. I don't think equating anthropic measure to mass makes sense, though; counterexamples seem trivial.

> The two instances can make the decision together on equal footing, taking on exactly the same amount of risk, each- having memories of being on the right side of the mirror many times before, and no memories of being on the wrong- tacitly feeling that they will go on to live a long and happy life.

feels a bit like quantum suicide.

note: having no memories of being on the wrong side does not make this any more pleasant an experience to go through, nor does it provide any reassurance against being the replica (presuming that's the one which is killed).

> As is custom, the loser speaks first.

naming the characters Paper and Scissors is a neat idea.

> Paper wonders, what does it feel like to be... more? If there were two of you, rather than just one, wouldn't that mean something? What if there were another, but it were different?... so that-

>

> [...]

>

> Scissors: "What would it feel like? To be... More?... What if there were two of you, and one of me? Would you know?"

isn't paper thinking in 2nd person but then scissors in 1st? so paper is thinking about 2 ppl but scissors about 3 ppl?

> It was true. The build that plays host to the replica (provisionally named "Wisp-Complete"), unlike the original's own build, is effectively three brains interleaved

Wait, does this now mean there's 4 ppl? 3 in the replica and 1 in the non-replica?

> Each instance has now realised that the replica- its brain being physically more massive- has a higher expected anthropic measure than the original.

Um okay, wouldn't they have maybe thought about this after 15 years of training and decades of practice in the field?

> It is no longer rational for a selfish agent in the position of either Paper nor Scissors to consent to the execution of the replica, because it is more likely than not, from either agent's perspective, that they are the replica.

I'm not sure this follows in our universe (presuming it is rational when it's like 1:1 instead of 3:1 or whatever). like I think it might take different rules of rationality or epistemology or something.

> Our consenters have had many many decades to come to terms with these sorts of situations.

Why are Paper and Scissors so hesitant then?

> That gives any randomly selected agent that has observed that it is in the mirror chamber a 3/4 majority probability of being the replica, rather than being the original.

I don't think we've established sufficiently that the 3 minds 1 brain thing are actually 3 minds. I don't think they qualify for that, yet.

> But aren't our consenters perfectly willing to take on a hefty risk death in service of progress? No. Most Consenters aren't. Selling one's mind and right to life in exchange for capital would be illegal.

Why would it be a hefty risk? Isn't it 0% chance of death? (the replicant is always the one killed)

> In a normal mirror chamber setup, when the original enters the mirror chamber, they are confident that it is the original who will walk out again. They are taking on no personal risk. None is expected, and none is required.

Okay we might be getting some answers soon.

> The obvious ways of defecting from protocol- an abdication of the responsibility of the consenter, a refusal to self-murder, an attempt to replicate without a replication license- are taken as nothing less than Carcony.

Holy shit this society is dystopic.

> It would be punished with the deaths of both copies and any ancestors of less than 10 years of divergence or equivalent.

O.O

> But if, somehow, the original were killed? What if neither instance of the Consenter signed for their replica's execution, and the replica were left alive. That would not be considered Carcony. It would not even be considered theft- because a brain always belongs to its mind.

I'm definitely unclear on the process for deciding; wouldn't only one guillotine be set up and both parties affixed in place? (Moreover, why wouldn't the replica just be a brain and not in a body, so no guillotine, and just fed visual inputs along with the mirror-simulation in the actual room -- sounds feasible)

> What if neither instance of the Consenter signed for their replica's execution

Wouldn't this be an abdication of responsibility as mentioned in the prev paragraph?

> So, do you see now? Do you see how Consenter Nai Paper-Chell-Glass-Stratton was motivated by a simple alignment of the payoff matrices?

Presumably to run away with other-nai-x3-in-a-jar-stratton?

> Paper: "You wouldn't do that to me. Look... if you're the original... And I do myself, and I'm the replica. I wont actually be dead, because if you destroy a representation of your brain case in a simulation that doesn't actually destroy you in real life. I might not even desync. Whatever happens, I'll still be alive after the penetration so I'll know I'm the replica, but you might not know. It might look like I'm really dead. And you'll have no incentive to follow through and do yourself at that point."

> Scissors: "I still don't see it."

So both parties sign for the destruction of the replica, but only the legit Nai's signing will actually trigger the death of the replica. The replica Nai's signing will only SIMULATE the death of a simulated replica Nai (the "real" Nai being untouched) - though if this happened wouldn't they 'desync' - like not be able to communicate? (presuming I understand your meaning of desync)

> Paper: "... If you're the replica, it doesn't matter whether you do yourself, you'll still get saved either way, but you're incented not to do yourself because having a simulated spike stuck through your simulated head will probably be pretty uncomfortable. But also, if you're the original, you're sort of doomed either way, you're just incented to run off and attempt Carcony, but there's no way the replica would survive you doing that, and you probably wouldn't either, you wouldn't do that to me. Would you?"

I don't follow the "original" reasoning; if you're the original and you do yourself the spike goes through the replica's head, no? So how do you do Carcony at that point?

> The test build is an order of magnitude hardier than Nai's older Cloud-Sheet. As such, the testing armature is equipped to apply enough pressure to pierce the Cloud-Sheet's shielding, and so it was made possible for the instances to conspire to commit to the legal murder of Consenter Nai Scissors Bridger Glass Stratton.

So piercing the shielding of the old brain (cloud-sheet) is important b/c the various Nais (ambiguous: all 4 or just 3 of them) are conspiring to murder normal-Nai and they need to pierce the cloud-sheet for that. But aren't most new brains they test hardier than the one Nai is using? So isn't it normal that the testing-spike could pierce her old brain?

> A few things happened in the wake of Consenter Paper Stratton's act of praxis.

omit "act of", sorta redundant.

> but most consenter-adjacent philosophers took the position that it was ridiculous to expect this to change the equations, that a cell with thrice the mass should be estimated to have about thrice the anthropic measure, no different.

This does not seem consistent with the universe. If that was the case then it would have been an issue going smaller and smaller to begin with, right?

Also, 3x lattices makes sense for error correction (like EC RAM), but not 3x mass.

> The consenter union banned the use of mirror chambers in any case where the reasonable scoring of the anthropic measure of the test build was higher than the reasonable scoring of a consenter's existing build.

this presents a problem for testing better brains; curious if it's going to be addressed.

I just noticed "Consenter Nai Paper-Chell-Glass-Stratton" - the 'paper' refers to the rock-paper-scissors earlier (confirmed with a later Nai reference). She's only done this 4 times now? (this being replication or the mirror chamber)

earlier "The rational decision for a selfish agent instead becomes..." is implying the rational decision is to execute the original -- presumably this is an option the consenter always has? like they get to choose which one is killed? Why would that be an option? Why not just have a single button that, when they both press it, kills the replica; no choice in the matter.

> Scissors: "I still don't see it."

Scissors is slower so scissors dies?

> Paper wonders, what does it feel like to be... more? If there were two of you, rather than just one, wouldn't that mean something? What if there were another, but it were different?... so that-

I thought this was Paper thinking not wondering aloud. In that light

> Scissors: "What would it feel like? To be... More?... What if there were two of you, and one of me? Would you know?"

looks like partial mind reading or something, like super mental powers (which shouldn't be a property of running a brain 3x over but I'm trying to find out why they concluded Scissors was the original)

> Each instance has now realised that the replica- its brain being physically more massive- has a higher expected anthropic measure than the original.

At this point in the story isn't the idea that it has a higher anthropic measure b/c it's 3 brains interleaved, not 1? while the parenthetical bit ("its brain ... massive") isn't a reason? (Also, the mass thing that comes in later; what if they made 3 brains interleaved with the total mass of one older brain?)

Anyway, I suspect answering these issues won't be necessary to get an idea of anthropic measure.

(continuing on)

> Anthropic measure really was the thing that caused consenter originals to kill themselves.

I don't think this is rational FYI

> And if that wasn't true of our garden, we would look out along the multiverse hierarchy and we would know how we were reflected infinitely, in all variations.

> [...]

> It became about relative quantities.

You can't take relative quantities of infinities or subsets of infinities (it's all 100% or 0%, essentially). You can have *measures*, though. David Deutsch's Beginning of Infinity goes into some detail about this -- both generally and wrt many worlds and the multiverse.

↑ comment by mako yass (MakoYass) · 2020-08-30T04:02:18.262Z

> having no memories of being on the wrong side does not make this any more pleasant an experience to go through, nor does it provide any reassurance against being the replica (presuming that's the one which is killed)

It provides no real reassurance, but it would make it a more pleasant experience to go through. The effect of having observed the coin landing heads many times and never tails, is going to make it instinctively easy to let go of your fear of tails.

> Um okay, wouldn't they have maybe thought about this after 15 years of training and decades of practice in the field?

Possibly! I'm not sure how realistic that part is, to come to that realization while the thing is happening instead of long before, but it was kind of needed for the story. It's at least conceivable that the academic culture of the consenters was always a bit inadequate, maybe Nai had heard murmurings about this before, then the murmurer quietly left the industry and the Nais didn't take it seriously until they were living it.

> I don't think we've established sufficiently that the 3 minds 1 brain thing are actually 3 minds

The odds don't have to be as high as 3:1 for the decision to come out the same way.
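A minimal sketch of that point, assuming (as the story does, and purely hypothetically) that each instance's anthropic measure can be scored as a single weight, such as a lattice count. The function names are illustrative, not anything from the story. A selfish agent that only cares which instance it is more likely to be prefers to spare the replica as soon as the replica's weight exceeds the original's, whether the ratio is 3:1 or only 1.5:1.

```python
from fractions import Fraction


def p_replica(replica_weight, original_weight):
    """Probability that a randomly selected observer in the chamber is the replica,
    assuming (hypothetically) that measure is proportional to the given weights."""
    r = Fraction(replica_weight)
    o = Fraction(original_weight)
    return r / (r + o)


def selfish_choice(replica_weight, original_weight):
    """Which instance a purely selfish agent would want to survive."""
    p = p_replica(replica_weight, original_weight)
    if p > Fraction(1, 2):
        return "spare the replica"
    if p < Fraction(1, 2):
        return "spare the original"
    return "indifferent"


# 1:1 is the normal mirror chamber; 3:1 is Wisp-Complete's three lattices vs one Cloud-Sheet.
for replica_weight in (1, 1.5, 3):
    print(replica_weight, p_replica(replica_weight, 1), selfish_choice(replica_weight, 1))
```

Only the direction of the inequality matters for the selfish decision, which is why the exact ratio does not need to be settled.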

> Holy shit this society is dystopic.

The horrors of collectively acknowledging that the accessible universe's resources are finite and that growth must be governed in order to prevent Malthus from coming back? Or is it more about the bureaucracy of it? Yeah, you'd really hope they'd be able to make exceptions for situations like this, hahaha (but, lacking an AGI singleton, my odds for society still having legal systems with this degree of rigidity are honestly pretty high; like, yeah, uh, human societies are bad, it's always been bad, it is bad right now, it feels normal if you live under it, you Respect The History and imagine it couldn't be any other way)

> Wouldn't this be an abdication of responsibility as mentioned in the prev paragraph?

An abdication of the expectations of an employer, much less than an abdication of the law. Transfers to other substrates with the destruction of the original copy are legal, probably even commonplace in many communities.

I think this section is really confusing. They're talking about killing the original, which the chamber is not set up to do, but they have an idea as to how to do it. The replica is just a brain, they will only experience impaling their brain with a rod and it wouldn't actually happen. They would be sitting there with their brain leaking out while somehow still conscious.

> omit "act of", sorta redundant.

Praxis consists of many actions, the reaction was very much towards this specific act, this is when people noticed.

If that was the case then it would have been an issue going smaller and smaller to begin with, right?

They only started saying things like this after the event. Beforehand it would have been very easy to deny that there was an issue. Those with smaller brains had the same size bodies as everyone else, just as much political power, exactly the same behaviours.

She's only done this 4 times now?

The other names are from different aspects of life. Some are given by parents, some taken on as part of coming of age. Normally a person wouldn't take on names from executing a mirror chamber; in this case it was because it was such a significant event, and a lot of people came to know Nai as Paper from the mirror chamber transcript.

looks like partial mind reading or something

It's because a lot of their macrostate is still in sync. This is a common experience in mirror chambers, so they don't remark on it.

It certainly is about measures.

Well, that sounds interesting. Measure theory is probably going to be crucial for getting firm answers to a lot of the questions I've come to feel responsible for. Not just anthropics; there's some simulist multiverse stuff as well.

I don't think this is rational FYI

How so?

I should probably mention: if I were just shoved into that situation... we probably wouldn't kill the original. I wouldn't especially care about having reduced measure or existing less, because I consider myself a steward of the eschaton rather than a creature of it. If anything, my anthropic measure should be decreased as much as possible so that the painful path of meaning and service that I have chosen has less of an impact on the beauty of the sum work. Does that make sense? If not, never mind; it's not so important.

I'm going to need to find a way to justify making Paper and Scissors less ingroupy in the way they talk to each other. The premise that we would be able to eavesdrop on a conversation between a philosopher and a copy of that philosopher and understand what they were on about is kind of not plausible, and it comes through here. Maybe I should just not report the contents of the conversation, or turn that up to an extreme: have everything they say be comically terse and idiolectic, then explain it after the fact. Actually, yes, I think that would be great. Intimacy giving rise to idiolect needs to be depicted more.

comment by Martín Soto (martinsq) · 2022-11-26T22:29:38.722Z

Super cool story I really enjoyed, thank you!

That said, the moral of the story would just be "anthropic measure is just whatever people think anthropic measure is", right?

comment by Charlie Steiner · 2019-01-12T00:13:13.942Z

Very beautiful! Though see here, particularly footnote 1. I think there are pretty good reasons to think that our ability to locate ourselves as persons (and therefore our ability to have selfish preferences) doesn't depend on brain size or even redundancy, so long as the redundant parts are causally yoked together.

↑ comment by mako yass (MakoYass) · 2019-01-12T02:01:52.648Z

I haven't read their previous posts, could you explain what "who has the preferences via causality" refers to?

↑ comment by Charlie Steiner · 2019-01-12T09:42:24.537Z

It's about trying to figure out what's implied about your brain by knowing that you exist.

It's also about trying to draw some kind of boundary with "unknown environment to interact with and reason about" on one side and "physical system that is thinking and feeling" on the other side. (Well, only sort of.)

Treating a merely larger brain as more anthropically important is equivalent to saying that you can draw this boundary inside the brain (e.g. dividing big neurons down the middle), so that part of the brain is the "reasoner" and the rest of the brain, along with the outside, is the environment to be reasoned about.

This boundary can be drawn, but I think it doesn't match my self-knowledge as well as drawing the boundary based on my conception of my inputs and outputs.

My inputs are sight, hearing, proprioception, etc. My outputs are motor control, hormone secretion, etc. The world is the stuff that affects my inputs and is affected by my outputs, and I am the thing doing the thinking in between.

If I tried to define "I" as the left half of all the neurons in my head, suddenly I would be deeply causally connected to this thing (the right halves of the neurons) I have defined as not-me. These causal connections are like a huge new input and output channel for this defined-self - a way for me to be influenced by not-me, and influence it in turn. But I don't notice this or include it in my reasoning - Paper and Scissors in the story are so ignorant about it that they can't even tell which of them has it!

So I claim that I (and they) are really thinking of themselves as the system that doesn't have such an interface, and just has the usual suite of senses. This more or less pins down the thing doing my thinking as the usual lump of non-divided neurons, regardless of its size.

↑ comment by mako yass (MakoYass) · 2019-01-12T20:21:04.906Z

Treating a merely larger brain as more anthropically important is equivalent to saying that you can draw this boundary inside the brain

I really can't understand where this is coming from. When we weigh a bucket of water, this imposes no obligation to distinguish between individual water molecules. For thousands of years we did not know water molecules existed, and we thought of the water as continuous. I can't tell whether this is an answer to what you're trying to convey.

Where I'm at is... I guess I don't think we need to draw strict boundaries between different subjective systems. I'll probably end up mostly agreeing with Integrated Information theories. Systems of tightly causally integrated matter are more likely as subjectivities, but at no point are supersets of those systems completely precluded from having subjectivity. For example, the system of me plus my cellphone also has some subjectivity. At some point, the universe experiences the precise state of every transistor and every neuron at the same time. (This does not mean that any conscious-acting system is cognisant of both of those things at the same time. Subjectivity is not cognisance. It is possible to experience without remembering or understanding. Humans do it all of the time.)

↑ comment by Charlie Steiner · 2019-01-13T01:28:42.989Z

I'll probably end up mostly agreeing with Integrated Information theories

Ah... x.x Maybe check out Scott Aaronson's blog posts on the topic (here and here)? I'm definitely more of the Dennettian "consciousness is a convenient name for a particular sort of process built out of lots of parts with mental functions" school.

Anyhow, the reason I focused on drawing boundaries to separate my brain into separate physical systems is mostly historical - I got the idea from the Ebborians (further rambling here. Oh, right - I'm Manfred). I just don't find mere mass all that convincing as a reason to think that some physical system's surroundings are what I'm more likely to see next.

Intuitively it's something like a symmetry of my information - if I can't tell anything about my own brain mass just by thinking, then I shouldn't assign my probabilities as if I have information about my brain mass. If there are two copies of me, one on Monday with a big brain and one on Tuesday with a small brain, I don't see much difference in sensibleness between "it should be Monday because big brains are more likely" and "I should have a small brain because Tuesday is an inherently more likely day." It just doesn't compute as a valid argument for me without some intermediate steps that look like the Ebborians argument.

↑ comment by mako yass (MakoYass) · 2019-01-13T06:26:13.128Z

I'm definitely more of the Dennettian "consciousness is a convenient name for a particular sort of process built out of lots of parts with mental functions" school.

I'm in that school as well. I'd never call correlates with anthropic measure like integrated information "consciousness"; there's too much confusion there. I'm reluctant to call the purely mechanistic perception-encoding-rumination-action loop consciousness either. For that I try to stick, very strictly, to "conscious behaviour". I'd prefer something like "sentience" to take us even further from that mire of a word.

(But when I thought of the mirror chamber it occurred to me that there was more to it than "conscious behaviour isn't mysterious, it's just machines". Something here is both relevant and mysterious. And so I have to find a way to reconcile the schools.)

Anthres ∝ mass is not supposed to be intuitive. Anthres ∝ number is very intuitive; what about the path from there to anthres ∝ mass didn't work for you?

↑ comment by mako yass (MakoYass) · 2019-01-12T01:00:15.641Z

Will read. I was given pause recently when I stumbled onto "If a tree falls on Sleeping Beauty", where our bets (via LDT reflectivist pragmatism, I'd guess) end up ignoring anthropic reasoning.

comment by Measure · 2022-11-04T14:33:05.627Z

I've read this before. Did you post it, or a version of it, previously?

↑ comment by mako yass (MakoYass) · 2022-11-05T01:16:15.731Z

Yeah, but I didn't post it again or anything. There was a bug with the editor, and it looks like the thing Ruby did to fix it caused it to be resubmitted.

I'd say the diagram I've added isn't quite good enough to warrant resubmitting it, but there is a diagram that would have been. This always really needed a diagram.