Get Social Sector Leaders to Use Evidence With This 1 Weird Trick

post by Ian David Moss · 2020-09-19T18:07:50.000Z · LW · GW

Okay, it’s more like a dozen not-that-weird tricks, but the point is…

For a while now, I’ve been sounding the alarm about the social sector’s crisis of evidence use. To put it bluntly, the human race expends mind-boggling resources bringing studies, reports, and analyses into the world that are read by few and acted upon by no one. As I argued at the time, “we are either vastly overvaluing or vastly undervaluing the act of building knowledge.” My sincere hope has long been that it’s the latter — that the knowledge we produce really does hold tremendous value, and all we need to do is figure out what works to unlock it. Luckily there is a whole ecosystem of organizations dedicated to answering “what works?” kinds of questions, and four years ago that ecosystem produced a report called “The Science of Using Science” that tackles this question head-on.

“The Science of Using Science” seeks to understand what interventions are effective in increasing the use of research evidence by leaders and practitioners at various levels of civil society. It encompasses two reviews in one: the first examined the literature on evidence-informed decision-making (EIDM) itself, and the second prowled the broader social science literature for interventions that could be relevant to EIDM but haven’t specifically been tested in that context.

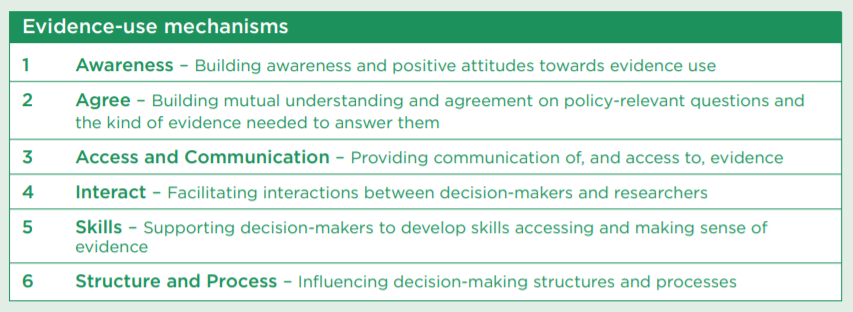

Based on previous theoretical work by themselves and others, the authors devised a conceptual framework encompassing six mechanisms under which to group the interventions discussed in the reviews. The mechanisms are as follows:

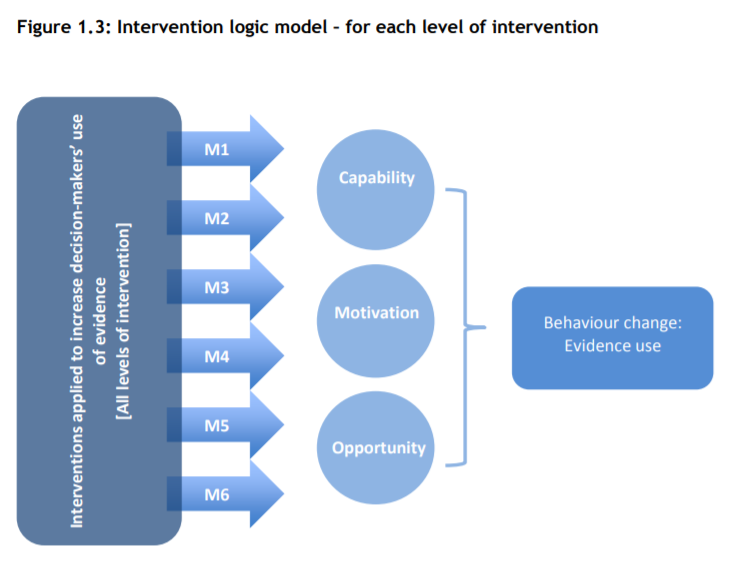

The theory is that these mechanisms motivate behavior change through the three-part Capability, Motivation, and Opportunity model developed by Michie et al. in 2011, as diagrammed below:

So what did they find? The first review, the one focused on studies specifically assessing EIDM interventions, identified 23 existing reviews of moderate to high trustworthiness that were published between 1990 and 2015. Most of these drew from the health sector and featured plenty of variation in the interventions, outcomes, and indicators used. Nevertheless, the aggregated findings offer several useful insights:

Just providing people with evidence doesn’t mean they’ll do anything with it

One of the clearest takeaways from the experimental literature, which backs up the descriptive evidence I’ve previously written about, is that simply putting evidence in front of your audience doesn’t accomplish much on its own. Interventions to facilitate access to evidence were only effective if they were paired with parallel interventions to increase decision-makers’ motivation and opportunity to use the evidence, such as targeted and personalized reminders.

One-off workshops and training programs are (probably) a waste of time

Similarly, the skill-building activities studied only worked if the intervention design simultaneously targeted decision-makers’ capability and motivation to use evidence. Interventions that were passive or applied at low intensity (e.g., a one-off half-day training session) had no effect.

Increasing motivation is critical

As you can see from the above, a common theme running through the report was that many of the interventions only worked if decision-makers had sufficient motivation to use evidence. Yet of the three behavior change prerequisites, motivation was the one least often targeted by interventions included in the study. The authors surmise that the capability/motivation/opportunity framework is like a three-legged stool — you need all three of them in place for the design to work as a whole. So it stands to reason that if motivation is the most neglected of the three elements, interventions to increase motivation are especially valuable.

Making change requires structure and leadership

While not a universal rule, in general it seems that the more casual the nature of the intervention, the less likely it is to work. Of the six mechanisms studied in the first review, the one that came closest to a failing grade across the board was M4 (interaction between researchers and decision-makers), as “a large majority of the…interventions that included an unstructured interaction component did not increase evidence use.” By contrast, the review found that authentically integrating interventions into existing decision-making systems and processes, rather than treating them as something separate or “extra,” appears to enhance their effects. Two specific examples that showed this kind of promise were evidence-on-demand hotlines and supervising the application of EIDM skills.

Learning from the broader literature

Arguably the most impressive contribution of “The Science of Using Science” comes from the second review, which examined the social science literature for more general clues about how to change behavior that could be applied to this context. This part of the project was breathtaking in scope: the authors not only identified some 67 distinct interventions across dozens of fields of study ranging from behavioral economics to user experience design, but were also able to offer a preliminary assessment of the effectiveness of each of those interventions on outcomes related to evidence use. Below are some of the techniques and approaches that stood out as especially promising:

Building social and professional norms

Human beings are social animals, which makes social and professional norms incredibly powerful forces for influencing action. Social/professional norm interventions assessed by the authors included social marketing (the application of which would be marketing evidence use as a social or professional norm), social incentives (building an intrinsic motivation to use evidence), and identity cues and priming (triggering and reinforcing nascent evidence use norms). All three have a strong evidence base for building motivation in the broader social science literature.

Strategic communication

If you’ve ever wondered if “strategic communication” is really a thing or just marketing jargon — it’s real! Tailoring and targeting messages to recipients based on their concerns and interests, framing messaging around gains and losses, and deploying narrative techniques like metaphors and concrete examples all have ample evidence of effectiveness in the social science literature, increasing motivation for behavior change.

Reminders and nudges

It almost sounds too easy, but simple steps to increase the salience of things you want people to do are often enough to spur them into motion. Both reminders and “nudges” (simple changes to the choices people face on an everyday basis, such as what happens if they take no action) have strong evidence behind them when it comes to behavior change.

Learning from adult learning

I mentioned above that low-intensity skill-building workshops were shown to be ineffective in the first review. That finding shouldn’t be a surprise in light of what studies on adult pedagogy have shown regarding what works in this arena. One such review noted that adult learning interventions had sharply diminishing effects if they included fewer than 20 hours of instruction or training or were delivered to more than 40 participants; furthermore, interventions worked best if they took place directly in participants’ work settings. Applying insights like these increased both capability and motivation to change behavior in the studies reviewed. (As someone who is regularly asked to conduct low-intensity skill-building workshops for clients, I’m eager to use these lessons to improve the quality and effectiveness of my own programming!)

Supervised learning

Not surprisingly, bringing the boss into the equation is a pretty effective way to motivate employees to use evidence! As highlighted previously, one of the successful case studies in the first review involved having senior leaders supervise employees attempting to apply new EIDM skills in practice. The second review found that supervision (mainly based on the idea of “clinical supervision” as applied in the healthcare field) moves the needle on all three behavior change outcomes of capability, motivation, and opportunity. In addition, a practice called “audit and feedback,” which is basically a kind of performance monitoring, had a small but significant impact on behavior change.

A call to action

I’ll close here with a finding that seems like a fitting summation of the lessons from “The Science of Using Science.” One review judged to be of high trustworthiness compared the effectiveness of different behavior change interventions with one another, and found that the interventions that worked best were those that emphasized action. The emphasis on action seems appropriate across the board when looking at the findings from the literature: over and over again, we are told that it’s not enough just to provide information and hope for the best, or get people talking to each other and hope for the best. Evidence is unlikely to be used, it seems, unless the entire intervention is designed with the act of using evidence as its center of gravity. As the authors write in the concluding sections, “the science of using science might be able to progress further by starting with the user of evidence and studying their needs and behaviours in decision-making,” and only then considering the role that research can play.

The bottom line: it’s not impossible to get people to use evidence. It’s just that most of us are going about it the wrong way. The global knowledge production industry is vast. It includes virtually all think tanks and universities, many government agencies, and an increasing number of foundations and NGOs. And what this research tells us is that many longstanding, firmly established, and seemingly impossible-to-change practices within these institutional ecosystems are premised on naive and incorrect assumptions about how human beings naturally use information in their decision-making process. It’s not hard to argue that billions of dollars a year are wasted as a result. But the good news is that these problems are, at least to some degree, fixable. The same advances in behavioral science, adult pedagogy, and other fields that have been used to solve problems ranging from eating healthier food to wearing condoms can be just as helpful for motivating social sector leaders to use evidence responsibly. Though we don’t yet know all the details of what works in which contexts, we do know enough now to say that trying to find out is a worthwhile endeavor.
