Justification for Induction

post by Krantz · 2023-11-27T02:05:49.217Z · LW · GW · 25 comments

David Hume presented philosophers with a problem. He claimed that there is no justification for the assertion that “instances of which we have had no experience resemble those of which we have had experience”. In this paper, I will show that observations of instances can justify assertions about instances that have not been experienced. In part A, I define what it means to be an observation of an instance. In part B, I define what it means to be an assertion of an instance that has not been experienced. In part C, I define what it means for an assertion to be justified. In part D, I show how experiences (as defined in part A) meet the criteria for justification (as defined in part C) for non-experienced instances (as defined in part B). Finally, in part E, a corollary, I demonstrate how generalized statements about future events can be justified using the method from part D and the conceptual framework of the (epsilon, delta) proofs employed in calculus.

Part A

An observation of an instance is a statement that can be made about the Universe. For example: “there is at least one swan that is white.” (This sort of assertion can be made while looking at a white swan found in nature.)

Part B

An instance that has not been experienced is an observation that is to be made in the future. For example: “the next swan that we see will be white” (said of the next swan that will be observed in nature). An assertion of such an instance is a statement made at a time (t1) that predicates an observation occurring at a time (t2), where (t1) is before (t2).

Part C

An assertion (p) is justified if the negation of (p) implies a contradiction. An assertion is also justified if it is directly observed. For example, if a white swan is observed, we are justified in making the assertion, “a white swan has been observed.”

Part D

Consider a case where an assertion (p1), “the next swan that we see will be white”, is made at a given time (t1). According to the criteria in part C, an observation at time (t2) of an object that holds both properties (the next swan seen) and (white) justifies the assertion (p1). It is important to understand that at time (t1) the assertion (p1) was not justified, but at time (t2) the assertion (p1) was justified.

Hume’s work presents a substantial claim: “Assertions that are made about future instances cannot be justified at the time they are made.” This claim, however, does not entail the stronger statement, “Assertions that are made about future instances can never be justified”, which would in turn entail the more general statement, “Assertions that are made about the future cannot be justified.” On the contrary, it is my intention to illustrate that assertions of instances that have not been experienced (with respect to their assertion at t1) can be justified in the future in which they are observed (with respect to their observation at t2).

Part E

A generalized statement is an assertion that predicates properties of an unlimited number of observational cases. For example: “all objects that will be observed to have the property (swan) will also have the property (white).” It is for the justification of this kind of generalized statement that I intend to establish a basis for approximation. In other words, there are observations which can be made that imply a partial justification of a generalized statement.

A formalized notion of partial justification is critical to the arguments presented here and thus must be rigorously defined. A partial justification for the assertion of a given statement (q) is the affirmation of a premise (p) such that (p) is a member of the set of premises necessary to entail (q). This implies that partial justification for a statement (q), in the form of a true statement (p1), reduces the set of undetermined premises (p2, p3, p4, …) necessary to entail (q). In other words, if the set of premises necessary to entail (q) after the observation of (p1) is a proper subset of the set of premises necessary to entail (q) before the observation of (p1), then it follows that (q) is partially justified by (p1).

Consider the non-generalized statement, “the next n objects that are observed to have the property (swan) will also have the property (white).”

In the case; n = 2, we have;

(q1) The next two objects that are observed to have the property (swan) will also have the property (white). (stated at t1).

The statement (q1) is justified by the truth of both statements;

(p1) The first object to have the property (swan) also has the property (white). (observed at t2)

and

(p2) The second object to have the property (swan) also has the property (white). (observed at t3).

It is important to note that the justification of (q1) at t3 relies equally on both observations (p1 and p2). In other words, if either of the observations were false, (q1) would necessarily be false. At t2, when the observation (p1) occurs, the set of unobserved premises necessary to entail (q1) changes from the set A = {p1, p2} to the set B = {p2}. Since B is a proper subset of A, it follows that (q1) is partially justified by (p1).
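The subset test described above can be sketched in a few lines of Python. This is only an illustration under assumed names: `remaining_premises` and `partially_justifies` are hypothetical helpers, not part of the original paper, and premises are represented simply as string labels.

```python
# Hypothetical illustration: premises are plain labels, not real observations.
premises_at_assertion = frozenset({"p1", "p2"})  # premises needed to entail (q1) at t1


def remaining_premises(required, observed):
    """Return the premises that are still unobserved."""
    return frozenset(required) - frozenset(observed)


def partially_justifies(required, observed_before, new_observation):
    """True if the new observation leaves a proper subset of premises outstanding."""
    before = remaining_premises(required, observed_before)
    after = remaining_premises(required, set(observed_before) | {new_observation})
    return after < before  # `<` on sets is Python's proper-subset test


# At t2, (p1) is observed: the outstanding set shrinks from {p1, p2} to {p2}.
print(remaining_premises(premises_at_assertion, {"p1"}))
print(partially_justifies(premises_at_assertion, set(), "p1"))
```

Observing (p1) a second time would not count as further partial justification under this sketch, since the outstanding set would not shrink again.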

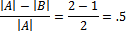

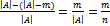

At this point, I will introduce a method for representing the degree of partial justification. To determine the degree of partial justification that a given set of observations (p1, p2, p3, …) makes on a statement (q), the cardinality of a set B = (the set of unobserved premises that are necessary to entail (q)) is subtracted from the cardinality of a set A = (the set of premises that were necessary to entail (q) at the time (q) was asserted) and then divided by the cardinality of A to produce a value between 0 and 1.

For the case of (q1) above (at time t2) we have:

$$\frac{|A| - |B|}{|A|} = \frac{2 - 1}{2} = \frac{1}{2}$$

In the cases; n = 3, n = 4 and n = 5 we have;

- (q2) The next three objects that are observed to have the property (swan) will also have the property (white). (stated at t1)

- (q3) The next four objects that are observed to have the property (swan) will also have the property (white). (stated at t1)

- (q4) The next five objects that are observed to have the property (swan) will also have the property (white). (stated at t1)

Given the following observations;

- The first object observed to have the property (swan) also has the property (white). (observed at t2)

- The second object observed to have the property (swan) also has the property (white). (observed at t3)

- The third object observed to have the property (swan) also has the property (white). (observed at t4)

- The fourth object observed to have the property (swan) also has the property (white). (observed at t5)

- The fifth object observed to have the property (swan) also has the property (white). (observed at t6)

It follows;

- At t1, (q2) (q3) (q4) have a partial justification = 0.

- At t2, (q2) = ((3-2)/3) = 1/3, (q3) = ((4-3)/4)= 1/4, (q4) = ((5-4)/5)= 1/5.

- At t3, (q2) = ((3-1)/3) = 2/3, (q3) = ((4-2)/4) = 1/2, (q4) = ((5-3)/5) = 2/5.

- At t4, (q2) = ((3-0)/3) = 1, (q3) = ((4-1)/4) = 3/4, (q4) = ((5-2)/5) = 3/5.

- At t5, (q3) = ((4-0)/4) = 1, (q4) = ((5-1)/5) = 4/5.

- At t6, (q4) = ((5-0)/5) = 1.
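The values listed above can be reproduced with a short Python sketch. The function name `degree_of_justification` and the dictionary of statements are assumptions made for illustration; the arithmetic is the (|A| − |B|)/|A| rule described earlier, computed with exact fractions.

```python
from fractions import Fraction


def degree_of_justification(n_required, n_observed):
    """(|A| - |B|) / |A|, where |A| = n_required and |B| = premises still unobserved."""
    if n_required <= 0 or not 0 <= n_observed <= n_required:
        raise ValueError("need 0 <= n_observed <= n_required and n_required > 0")
    n_unobserved = n_required - n_observed
    return Fraction(n_required - n_unobserved, n_required)


# Hypothetical labels: each statement asserts that the next n swans are white.
statements = {"q2": 3, "q3": 4, "q4": 5}

# k white swans have been observed by time t(k+1); t1 is the time of assertion.
for k in range(6):
    row = ", ".join(
        f"({name}) = {degree_of_justification(n, min(k, n))}"
        for name, n in statements.items()
    )
    print(f"At t{k + 1}: {row}")
```

Once a statement reaches 1 it simply stays there in this sketch, which is why the list above stops reporting (q2) after t4 and (q3) after t5.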

It can now be shown that, for any value n in the statement (q1) “the next n objects that are observed to have the property (swan) will also have the property (white)”, there is a number of observations m that justifies (q1).

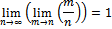

Consider the case; statement (q1) is made at (t1) and (pm) “The (m)th object observed to have the property (swan) also has the property (white).” is made at (t(m+1)).

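Let m = the number of observations.

Since

$$|A| = n$$

and

$$|B| = n - m$$

we have

$$\frac{|A| - |B|}{|A|} = \frac{n - (n - m)}{n} = \frac{m}{n}$$

and we can now show

$$\lim_{m \to n} \frac{m}{n} = 1$$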

Therefore, for all (q1), as n approaches infinity, it is the case that as m approaches n, m/n approaches one (total justification). It is this notion that allows us to approximate the justification of generalized statements.
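As a rough numerical check of the ratio m/n described above, the following Python sketch lists the degree of justification after each of the m = 0, 1, …, n observations for a few finite values of n. The helper `justification_sequence` is a hypothetical name introduced here, and the chosen values of n are arbitrary.

```python
from fractions import Fraction


def justification_sequence(n):
    """Degrees of justification m/n after m = 0, 1, ..., n confirming observations."""
    return [Fraction(m, n) for m in range(n + 1)]


for n in (2, 10, 1000):  # arbitrary finite sizes, chosen only for illustration
    seq = justification_sequence(n)
    # Each confirming observation strictly increases the degree of justification.
    assert all(a < b for a, b in zip(seq, seq[1:]))
    # Total justification (exactly 1) is reached when m equals n.
    assert seq[-1] == 1
    print(f"n = {n}: first step = {seq[1]}, final value = {seq[-1]}")
```

The sketch only covers finite n; it shows that each observation contributes a step of size 1/n and that the sequence ends at exactly 1.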

25 comments

Comments sorted by top scores.

comment by Krantz · 2023-11-27T02:13:34.167Z · LW(p) · GW(p)

Well, that looks absolutely horrible.

I promise, it looked normal until I hit the publish button..

Replies from: Viliam, rotatingpaguro, Cossontvaldes

↑ comment by rotatingpaguro · 2023-11-27T07:43:57.894Z · LW(p) · GW(p)

Lesswrong supports math.

↑ comment by Valdes (Cossontvaldes) · 2023-11-27T11:58:33.967Z · LW(p) · GW(p)

This looks interesting. I will come back to this post later and read it if the math displays properly.

comment by Shiroe · 2023-11-27T08:59:15.580Z · LW(p) · GW(p)

> On the contrary, it is my intention to illustrate that assertions of instances that have not been experienced (with respect to their assertion at t1) can be justified in the future in which they are observed (with respect to their observation at t2).

Sorry, I may not be following this right. I had thought the point of the skeptical argument was that you can't justify a prediction about the future until it happens. Induction is about predicting things that haven't happened yet. You don't seem to be denying the skeptical argument here, if we still need to wait for the prediction to resolve before it can be justified.

Replies from: Krantz

↑ comment by Krantz · 2023-11-29T15:02:40.720Z · LW(p) · GW(p)

This is a good question.

I agree that you can't justify a prediction until it happens, but I'm urging us to consider what it actually means for a prediction to happen. This can become nuanced when you consider predictions that are statements which require multiple observations to be justified.

Suppose I predict that a box (that we all know contains 10 swans) contains 10 white swans (my prediction is 'There are ten white swans in this box.'). When does that prediction actually 'happen'? When does it become 'justified'?

I think we all agree that after we've witnessed the 10th white swan, my assertion is justified. But am I justified at all to believe I am more likely to be correct after I've only witnessed 8 or 9 white swans?

This is controversial.

comment by Valdes (Cossontvaldes) · 2023-12-05T15:58:52.056Z · LW(p) · GW(p)

I think this somehow misses the crux of the problem of induction. By redefining "justification" (and your definition is reasonable), you get to speak of partially justifying a general statement that has not yet been fully observed. Sure.

But this doesn't solve the question. The question, in your example, would be closer to "On what basis can I, having seen a large number of swans being white, consider it now more likely that the next swan I see will be white?"

comment by Krantz · 2023-11-29T14:17:46.507Z · LW(p) · GW(p)

This was a paper I wrote 8 - 10 years ago while taking a philosophy of science course primarily directed at Hume and Popper. Sorry about the math, I'll try to fix it when I have a moment.

The general point is this:

I am trying to highlight a distinction between two cases.

Case A - We say 'All swans are white.' and mean something like, 'There are an infinite number of swans in the Universe and all of them are white.'.

Hume's primary point, as I interpreted him, is that since there are an infinite number of observations that would need to be made to justify this assertion, making a single observation of a white swan doesn't make any sort of dent in the list of observations we would need to make. If you have an infinitely long 'to do list', then checking off items from your list doesn't actually make any progress on completing your list.

Case B - We say 'All swans are white.' and mean something like, 'There are a finite number of swans (n) in the Universe and all of them are white.' (and (n) is going to be really big.).

If we mean this instead, then we can see that no matter how large (n) is, each observation makes comprehensive and calculable progress towards justifying that (n) swans are indeed white. I'm saying that, no matter how long your finite 'to do list' is, checking off an item is calculable progress towards the assertion that (n) swans are white.

In general, I think Hume did a great job of demonstrating why we can't justify assertions like the one in case A. I agree with him on that. What I'm saying is that we shouldn't make statements like the one in case A. They are absurd (in the formal sense).

What I'm saying is that, yes, observations of instances can't provide any justification for general claims about infinite sets, but they can provide justification of general claims about finite sets (as large as you would like to make them) and that is important to consider.

Replies from: TAG

comment by Maximum_Skull · 2023-11-27T09:20:18.975Z · LW(p) · GW(p)

Bayesian probability theory fully answers this question from a philosophical point of view, and answers a lot of it from the practical point of view (doing calculations on probability distributions is computationally intensive and can get intractable pretty quick, so it's not a magic bullet in practice).

It extends logic to be able to uniformly handle both probabilistic statements and statements made with complete certainty. I recommend Jaynes's "Probability Theory: The Logic of Science" as a good guide to the subject in case you are interested.

Replies from: TAG, dr_s

↑ comment by TAG · 2023-11-27T15:02:14.562Z · LW(p) · GW(p)

There's more than one problem of induction. Bayesian theory doesn't tell you anything about the ontological question, what makes the future resemble the past, and it only answers the epistemological question probabilistically.

Replies from: dr_s, Maximum_Skull

↑ comment by dr_s · 2023-11-27T15:09:03.471Z · LW(p) · GW(p)

Yeah, exactly. Bayesian theory is built on top of an assumption of regularity, not the other way around. If some malicious genie purposefully screwed with your observations, Bayesian theory would crash and burn. Heck, the classic "inductivist turkey" would have very high Bayesian belief in his chances of living past Christmas.

↑ comment by Maximum_Skull · 2023-11-27T16:43:17.255Z · LW(p) · GW(p)

I find the questions of "how and when can you apply induction" vastly more interesting than the "why it works" question. I am more of a "this is a weird trick which works sometimes, how and when does it apply?" kind of guy.

Bayesianism is probably the strongest argument for the "it works" part I can provide: here are the rules you can use to predict future events. Easily falsifiable by applying the rules, making a prediction and observing the outcomes. All wrapped up in an elegant axiomatic framework.

[The answer is probabilistic because the nature of the problem is (unless you possess complete information about the universe, which coincidentally makes induction redundant).]

Replies from: TAG

↑ comment by TAG · 2023-11-28T17:58:18.000Z · LW(p) · GW(p)

> I find the questions of “how and when can you apply induction” vastly more interesting than the “why it works” question.

Maybe you are personally not interested in the philosophical aspects, ...but then why say that Bayes fully answers them, when it doesn't and you don't care anyway?

> Bayesian probability theory fully answers this question from a philosophical point of view

> The answer is probabilistic because the nature of the problem is

Says who? For a long time, knowledge was associated with certainty.

Replies from: Maximum_Skull, Krantz

↑ comment by Maximum_Skull · 2023-11-30T10:22:44.108Z · LW(p) · GW(p)

The post I commented on is about a justification of induction (unless I have committed some egregious misreading, which is a surprisingly common error mode of mine, feel free to correct me on this part). It seemed natural for me that I would respond with linking the strongest justification I know -- although again, might have misread myself into understanding this differently from what words were written.

[This is basically the extent to which I mean that the question is resolved; I am conceding on ~everything else.]

↑ comment by Krantz · 2023-11-29T15:17:46.080Z · LW(p) · GW(p)

It sounds like they are simply suggesting I accept the principle of uniformity of nature as an axiom.

Although I agree that this is the crux of the issue, as it has been discussed for decades, it doesn't really address the points I aim to urge the reader to consider.

Replies from: TAG

↑ comment by TAG · 2023-11-29T16:23:14.595Z · LW(p) · GW(p)

If the reader values having solutions to the philosophical issues as well as the practical ones, how are you going to change their mind? It's just a personal preference.

Replies from: Krantz

↑ comment by Krantz · 2023-11-29T18:23:46.928Z · LW(p) · GW(p)

Why would I want to change a person's belief if they already value philosophical solutions? I think people should value philosophical solutions. I value them.

Maybe I'm misunderstanding your question.

It seemed like the poster above stated they do not value philosophical solutions. The paper isn't really aimed at converting a person who doesn't value 'the why' into a person who does. It is aimed at people who already do care about 'the why' and are looking to further reinforce/challenge their beliefs about what induction is capable of doing.

The principle of uniformity of nature is something we need to assume if we are going to declare we have evidence that the tenth swan to come out of the box would be white (in the situation where we have a box of ten swans and have observed 9 of them come out of the box and be white). Hume successfully convinced me that this can't be done without assuming the principle of uniformity in nature.

What I am claiming though, is that although we have no evidence to support the assertion 'The 10th swan will be white.' we do have evidence to support the assertion 'All ten swans in the box will be white.' (an assertion made before we opened the box.). This justification is not dependent upon assuming the principle of uniformity of nature.

In general, it is a clarification specifically about what induction is capable of producing justification for.

Future observation instances? No.

But general statements? I think this is plausible.

It's really just an inquiry into what counts as justification.

Necessary or sufficient evidence.

↑ comment by dr_s · 2023-11-27T09:52:27.654Z · LW(p) · GW(p)

It just pushes the question further. The essential issue with inference is "why should the universe be so nicely well-behaved and have regular properties?". Bayesian probability theory assumes it makes sense to e.g. assign a fixed probability to the belief that swans are white based on a certain amount of swans that we've seen being white, which already bakes in assumptions like e.g. that the swans don't suddenly change colour, or that there is a finite amount of them and you're sampling them in a reasonably random manner. Basically, "the universe does not fuck with us". If the universe did fuck with us, empirical inquiry would be a hopeless endeavour. And you can't really prove for sure that it doesn't.

The strongest argument in favour of the universe really being so nice IMO is an anthropic/evolutionary one. Intelligence is the ability to pattern-match and perform inference. This ability only confers a survival advantage in a world that is reasonably well-behaved (e.g. constant rules in space and time). Hence the existence of intelligent beings at all in a world is in itself an update towards that world having sane rules. If the rules did not exist or were too chaotic to be understood and exploited, intelligence would only be a burden.

Replies from: Maximum_Skull

↑ comment by Maximum_Skull · 2023-11-27T11:34:02.566Z · LW(p) · GW(p)

I might have an unusual preference here, but I find the "why" question uninteresting.

It's fundamentally non-exploitable, in the sense that I do not see any advantage to be gained from knowing the answer (nor a straightforward/empirical way of finding which one of the variants I should pay attention to).

Replies from: dr_s

↑ comment by dr_s · 2023-11-27T12:03:30.304Z · LW(p) · GW(p)

Oh, I mean, I agree. I'm not asking "why" really. I think in the end "I will assume empiricism works because if it doesn't then the fuck am I gonna do about it" is as respectable a reason to just shrug off the induction problem as they come. It is in fact the reason why I get so annoyed when certain philosophers faff about how ignorant scientists are for not asking the questions in the first place. We asked the questions, we found as useful an answer as you can hope for, now we're asking more interesting questions. Thinking harder won't make answers to unsolvable questions pop out of nowhere, and in practice, every human being lives according to an implicit belief in empiricism anyway. You couldn't do anything if you couldn't rely on some basic constant functionality of the universe. So there's only people who accept this condition and move on and people who believe they can somehow think it away and have failed one way or another for the last 2500 years at least. At some point, you gotta admit you likely won't do much better than the previous fellows.

Replies from: Shiroe

↑ comment by Shiroe · 2023-11-27T13:57:52.692Z · LW(p) · GW(p)

Do you have any examples of the "certain philosophers" that you mentioned? I've often heard of such people described that way, but I can't think of anyone who's insulted scientists for assuming e.g. causality is real.

Replies from: dr_s

↑ comment by dr_s · 2023-11-27T14:13:18.456Z · LW(p) · GW(p)

For example there's recently been a controversy adjacent to this topic on Twitter involving one Philip Goff (philosopher) who started feuding over it with Sabine Hossenfelder (physicist, albeit with some controversial opinions). Basically Hossenfelder took up an instrumentalist position of "I don't need to assume that things described in the models we use are real in whatever sense you care to give to the word, I only need to know that those models' predictions fit reality" and Goff took issue with how she was brushing away the ontological aspects. Several days of extremely silly arguments about whether electrons exist followed. To me Hossenfelder's position seemed entirely reasonable, and yes, a philosophical one, but she never claimed otherwise. But Goff and other philosophers' position seemed to be "the scientists are ignorant of philosophy of science, if only they knew more about it, they would be far less certain about their intuitions on this stuff!" and I can't understand how they can be so confident about that or in what way would that precisely impact the scientists' actual work. Whether electrons "exist" in some sense or they are just a convenient mathematical fiction doesn't really matter a lot to a physicist's work (after all, electrons are nothing but quantized fluctuations of a quantum field, just like phonons are quantized fluctuations of an elastic deformation field; yet people probably feel intuitively that electrons "exist" a lot more than phonons, despite them being essentially the same sort of mathematical object. So maybe our intuitions about existence are just crude and don't well describe the stuff that goes on at the very edge of matter).

Replies from: Shiroe

↑ comment by Shiroe · 2023-11-27T20:24:19.159Z · LW(p) · GW(p)

I see. Yes, "philosophy" often refers to particular academic subcultures, with people who do their philosophy for a living as "philosophers" (Plato had a better name for these people). I misread your comment at first and thought it was the "philosopher" who was arguing for the instrumentalist view, since that seems like their more stereotypical way of thinking and deconstructing things (whereas the more grounded physicist would just say "yes, you moron, electrons exist. next question.").

Replies from: dr_s

↑ comment by dr_s · 2023-11-27T22:56:37.282Z · LW(p) · GW(p)

From the discussion it seemed that most physicists do take the realist view on electrons, but in general the agreement was that either view works and there's not a lot to say about it past acknowledging what everyone's favorite interpretation is. A question that can have no definite answer isn't terribly interesting.