Real World Solutions to Prisoners' Dilemmas

post by Scott Alexander (Yvain) · 2012-07-03T03:25:25.052Z · LW · GW · Legacy · 88 comments

Why should there be real world solutions to Prisoners' Dilemmas? Because such dilemmas are a real-world problem.

If I am assigned to work on a school project with a group, I can either cooperate (work hard on the project) or defect (slack off while reaping the rewards of everyone else's hard work). If everyone defects, the project doesn't get done and we all fail - a bad outcome for everyone. If I defect but you cooperate, then I get to spend all day on the beach and still get a good grade - the best outcome for me, the worst for you. And if we all cooperate, then it's long hours in the library but at least we pass the class - a “good enough” outcome, though not quite as good as me defecting against everyone else's cooperation. This exactly mirrors the Prisoner's Dilemma.
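The ordering of outcomes in the group project can be checked mechanically. What follows is a minimal sketch, not part of the original argument: the payoff numbers are illustrative assumptions (only their ordering matters), and the names `PAYOFF`, `is_prisoners_dilemma`, and `best_reply` are hypothetical helpers invented for the example.

```python
# Hypothetical payoffs to "me" for (my_move, your_move).
# The numbers are assumptions; only their ordering matters.
PAYOFF = {
    ("D", "C"): 5,  # I slack off, you work: best outcome for me
    ("C", "C"): 3,  # we both work: the "good enough" outcome
    ("D", "D"): 1,  # we both slack off: bad for everyone
    ("C", "D"): 0,  # I work, you slack off: worst outcome for me
}


def is_prisoners_dilemma(payoff):
    """True if temptation > reward > punishment > sucker's payoff."""
    temptation, reward = payoff[("D", "C")], payoff[("C", "C")]
    punishment, sucker = payoff[("D", "D")], payoff[("C", "D")]
    return temptation > reward > punishment > sucker


def best_reply(payoff, your_move):
    """My payoff-maximizing move against a fixed move by you."""
    return max("CD", key=lambda mine: payoff[(mine, your_move)])


print(is_prisoners_dilemma(PAYOFF))  # ordering check
print(best_reply(PAYOFF, "C"), best_reply(PAYOFF, "D"))
```

Under these assumed numbers, defecting is the best reply whatever the other person does, even though mutual cooperation beats mutual defection. That combination is what makes the situation a dilemma.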

Diplomacy - both the concept and the board game - involves Prisoners' Dilemmas. Suppose Ribbentrop of Germany and Molotov of Russia agree to a peace treaty that demilitarizes their mutual border. If both cooperate, they can move their forces to other theaters, and have moderate success there - a good enough outcome. If Russia cooperates but Germany defects, it can launch a surprise attack on an undefended Russian border and enjoy spectacular success there (for a while, at least!) - the best outcome for Germany and the worst for Russia. But if both defect, then neither has any advantage at the German-Russian border, and they lose the use of those troops in other theaters as well - a bad outcome for both. Again, the Prisoner's Dilemma.

Civilization - again, both the concept and the game - involves Prisoners' Dilemmas. If everyone follows the rules and creates a stable society (cooperates), we all do pretty well. If everyone else works hard and I turn barbarian and pillage you (defect), then I get all of your stuff without having to work for it and you get nothing - the best solution for me, the worst for you. If everyone becomes a barbarian, there's nothing to steal and we all lose out. Prisoner's Dilemma.

If everyone who worries about global warming cooperates in cutting emissions, climate change is averted and everyone is moderately happy. If everyone else cooperates in cutting emissions, but one country defects, climate change is still mostly averted, and the defector is at a significant economic advantage. If everyone defects and keeps polluting, the climate changes and everyone loses out. Again, a Prisoner's Dilemma.

Prisoners' Dilemmas even come up in nature. In baboon tribes, when a female is in “heat”, males often compete for the chance to woo her. The most successful males are those who can get a friend to help fight off the other monkeys, and who then help that friend find his own monkey loving. But these monkeys are tempted to take their friend's female as well. Two males who cooperate each seduce one female. If one cooperates and the other defects, the defector has a good chance at both females. But if the two can't cooperate at all, then they will be beaten off by other monkey alliances and won't get to have sex with anyone. Still a Prisoner's Dilemma!

So one might expect the real world to have produced some practical solutions to Prisoners' Dilemmas.

One of the best known such systems is called “society”. You may have heard of it. It boasts a series of norms, laws, and authority figures who will punish you when those norms and laws are broken.

Imagine that the two criminals in the original example were part of a criminal society - let's say the Mafia. The Godfather makes Alice and Bob an offer they can't refuse: turn against one another, and they will end up “sleeping with the fishes” (this concludes my knowledge of the Mafia). Now the incentives are changed: defecting against a cooperator doesn't mean walking free, it means getting murdered.

Both prisoners cooperate, and amazingly the threat of murder ends up making them both better off (this is also the gist of some of the strongest arguments against libertarianism: in Prisoner's Dilemmas, threatening force against rational agents can increase the utility of all of them!)
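A small sketch of how an enforcer changes the incentives. Everything here is an assumption made for illustration: the payoff numbers, the size of the penalty, the choice to apply the penalty to any defection at all, and the hypothetical helper names `with_enforcer` and `best_reply`.

```python
# Illustrative payoffs to "me" for (my_move, your_move); assumed numbers.
PAYOFF = {("D", "C"): 5, ("C", "C"): 3, ("D", "D"): 1, ("C", "D"): 0}


def with_enforcer(payoff, penalty):
    """Return a new payoff table where defecting costs me `penalty`."""
    return {
        (mine, yours): value - (penalty if mine == "D" else 0)
        for (mine, yours), value in payoff.items()
    }


def best_reply(payoff, your_move):
    """My payoff-maximizing move against a fixed move by you."""
    return max("CD", key=lambda mine: payoff[(mine, your_move)])


# The Godfather's threat, modeled as a large assumed penalty.
MAFIA = with_enforcer(PAYOFF, penalty=100)

print(best_reply(PAYOFF, "C"), best_reply(PAYOFF, "D"))  # without the threat
print(best_reply(MAFIA, "C"), best_reply(MAFIA, "D"))    # with the threat
```

With the threat in place, cooperating becomes the best reply to either move, so both players end up with the mutual-cooperation payoff, which is higher than the mutual-defection payoff they would have reached on their own.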

Even when there is no godfather, society binds people by concern about their “reputation”. If Bob got a reputation as a snitch, he might never be able to work as a criminal again. If a student gets a reputation for slacking off on projects, she might get ostracized on the playground. If a country gets a reputation for backstabbing, others might refuse to make treaties with them. If a person gets a reputation as a bandit, she might incur the hostility of those around her. If a country gets a reputation for not doing enough to fight global warming, it might...well, no one ever said it was a perfect system.

Aside from humans in society, evolution is also strongly motivated to develop a solution to the Prisoner's Dilemma. The Dilemma troubles not only lovestruck baboons, but ants, minnows, bats, and even viruses. Here the payoff is denominated not in years of jail time, nor in dollars, but in reproductive fitness and number of potential offspring - so evolution will certainly take note.

Most people, when they hear the rational arguments in favor of defecting every single time on the iterated 100-crime Prisoner's Dilemma, will feel some kind of emotional resistance. Thoughts like “Well, maybe I'll try cooperating anyway a few times, see if it works”, or “If I promised to cooperate with my opponent, then it would be dishonorable for me to defect on the last turn, even if it helps me out”, or even “Bob is my friend! Think of all the good times we've had together, robbing banks and running straight into waiting police cordons. I could never betray him!”

And if two people with these sorts of emotional hangups play the Prisoner's Dilemma together, they'll end up cooperating on all hundred crimes, getting out of jail in a mere century and leaving rational utility maximizers to sit back and wonder how they did it.

Here's how: imagine you are a supervillain designing a robotic criminal (who's that go-to supervillain Kaj always uses for situations like this? Dr. Zany? Okay, let's say you're him). You expect to build several copies of this robot to work as a team, and expect they might end up playing the Prisoner's Dilemma against each other. You want them out of jail as fast as possible so they can get back to furthering your nefarious plots. So rather than have them bumble through the whole rational utility maximizing thing, you just insert an extra line of code: “in a Prisoner's Dilemma, always cooperate with other robots”. Problem solved.

Evolution followed the same strategy (no it didn't; this is a massive oversimplification). The emotions we feel around friendship, trust, altruism, and betrayal are partly a built-in hack to succeed in cooperating on Prisoner's Dilemmas where a rational utility-maximizer would defect a hundred times and fail miserably. The evolutionarily dominant strategy is commonly called “Tit-for-tat” - basically, cooperate if and only if your opponent did so last time.
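For concreteness, here is a minimal sketch of tit-for-tat in a 100-round game. It follows the usual statement of the strategy: cooperate on the first move, then copy whatever the opponent did last time. The payoffs are assumed point values where higher is better (not years in jail), and `play`, `tit_for_tat`, and `always_defect` are hypothetical names for this example only.

```python
# Illustrative points to "me" for (my_move, your_move); assumed numbers.
PAYOFF = {("D", "C"): 5, ("C", "C"): 3, ("D", "D"): 1, ("C", "D"): 0}


def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    """Defect every round regardless of history."""
    return "D"


def play(strategy_a, strategy_b, rounds=100):
    """Play an iterated game and return (score_a, score_b)."""
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Each strategy sees only the other player's past moves.
        a = strategy_a(moves_b)
        b = strategy_b(moves_a)
        moves_a.append(a)
        moves_b.append(b)
        score_a += PAYOFF[(a, b)]
        score_b += PAYOFF[(b, a)]
    return score_a, score_b


print(play(tit_for_tat, tit_for_tat))      # (300, 300)
print(play(always_defect, always_defect))  # (100, 100)
print(play(tit_for_tat, always_defect))    # (99, 104)
```

Under these assumed numbers, two tit-for-tat players score 300 each while two unconditional defectors score 100 each, and tit-for-tat gives up only a few points when it meets a defector head to head. This sketch compares just two strategies; it says nothing about how tit-for-tat fares against a larger pool of strategies.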

This so-called “superrationality” appears even more clearly in the Ultimatum Game. Two players are given $100 to distribute among themselves in the following way: the first player proposes a distribution (for example, “Fifty for me, fifty for you”) and then the second player either accepts or rejects the distribution. If the second player accepts, the players get the money in that particular ratio. If the second player refuses, no one gets any money at all.

The first player's reasoning goes like this: “If I propose $99 for myself and $1 for my opponent, that means I get a lot of money and my opponent still has to accept. After all, she prefers $1 to $0, which is what she'll get if she refuses.”

In the Prisoner's Dilemma, when players were able to communicate beforehand they could settle upon a winning strategy of precommitting to reciprocate: to take an action beneficial to their opponent if and only if their opponent took an action beneficial to them. Here, the second player should consider the same strategy: precommit to an ultimatum (hence the name) that unless Player 1 distributes the money 50-50, she will reject the offer.

But as in the Prisoner's Dilemma, this fails when you have no reason to expect your opponent to follow through on her precommitment. Imagine you're Player 2, playing a single Ultimatum Game against an opponent you never expect to meet again. You dutifully promise Player 1 that you will reject any offer less than 50-50. Player 1 offers 80-20 anyway. You reason “Well, my ultimatum failed. If I stick to it anyway, I walk away with nothing. I might as well admit it was a good try, give in, and take the $20. After all, rejecting the offer won't magically bring my chance at $50 back, and there aren't any other dealings with this Player 1 guy for it to influence.”

This is seemingly a rational way to think, but if Player 1 knows you're going to think that way, she offers 99-1, same as before, no matter how sincere your ultimatum sounds.
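The 99-1 result is what you get by reasoning backward from the responder's choice. A minimal sketch, assuming whole-dollar offers and a responder who accepts any positive amount and turns down $0 (both assumptions for illustration; the function names are hypothetical):

```python
def responder_accepts(offer):
    """A purely self-interested responder takes any positive amount."""
    return offer > 0


def proposer_best_split(total=100):
    """Return (proposer_share, responder_share) that maximizes the
    proposer's take, given how the responder will react."""
    def proposer_payoff(offer):
        # A rejected offer leaves the proposer with nothing.
        return total - offer if responder_accepts(offer) else 0

    offer = max(range(total + 1), key=proposer_payoff)
    return total - offer, offer


print(proposer_best_split())  # (99, 1)
```

Nothing Player 2 says beforehand appears anywhere in this calculation, which is the point: if her threat is not believed, it has no effect on the offer.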

Notice all the similarities to the Prisoner's Dilemma: playing as a "rational economic agent" gets you a bad result, it looks like you can escape that bad result by making precommitments, but since the other player can't trust your precommitments, you're right back where you started.

If evolutionary solutions to the Prisoners' Dilemma look like trust or friendship or altruism, solutions to the Ultimatum Game involve different emotions entirely. The Sultan presumably does not want you to elope with his daughter. He makes an ultimatum: “Touch my daughter, and I will kill you.” You elope with her anyway, and when his guards drag you back to his palace, you argue: “Killing me isn't going to reverse what happened. Your ultimatum has failed. All you can do now by beheading me is get blood all over your beautiful palace carpet, which hurts you as well as me - the equivalent of pointlessly passing up the last dollar in an Ultimatum Game where you've just been offered a 99-1 split.”

The Sultan might counter with an argument from social institutions: “If I let you go, I will look dishonorable. I will gain a reputation as someone people can mess with without any consequences. My choice isn't between bloody carpet and clean carpet, it's between bloody carpet and people respecting my orders, or clean carpet and people continuing to defy me.”

But he's much more likely to just shout an incoherent stream of dreadful Arabic curse words. Because just as friendship is the evolutionary solution to a Prisoner's Dilemma, so anger is the evolutionary solution to an Ultimatum Game. As various gurus and psychologists have observed, anger makes us irrational. But this is the good kind of irrationality; it's the kind of irrationality that makes us pass up a 99-1 split even though the decision costs us a dollar.

And if we know that humans are the kind of life-form that tends to experience anger, then if we're playing an Ultimatum Game against a human, and that human precommits to rejecting any offer less than 50-50, we're much more likely to believe her than if we were playing against a rational utility-maximizing agent - and so much more likely to give the human a fair offer.
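One way to make "more likely to believe her" concrete is to give Player 1 a probability that the threat will actually be carried out. The sketch below is an illustration under stated assumptions, not a model from the post: offers are in whole dollars, Player 2 demands at least `threshold` dollars, and `p_reject` is Player 1's assumed belief that an offer below the threshold really gets rejected. All names are hypothetical.

```python
def proposer_expected_payoff(keep, threshold, p_reject, total=100):
    """Expected dollars for the proposer who keeps `keep` of `total`."""
    offer = total - keep
    if offer >= threshold:
        return float(keep)          # the demand is met, so it is accepted
    return keep * (1 - p_reject)    # low offers survive only if not rejected


def best_keep(threshold, p_reject, total=100):
    """Amount the proposer should keep, offering at least one dollar."""
    return max(
        range(total),
        key=lambda keep: proposer_expected_payoff(keep, threshold, p_reject, total),
    )


print(best_keep(threshold=50, p_reject=0.0))  # 99: the threat is not believed
print(best_keep(threshold=50, p_reject=0.4))  # 99: still worth the gamble
print(best_keep(threshold=50, p_reject=0.9))  # 50: the threat is believed
```

With these numbers the fair split wins once the believed chance of rejection rises above roughly one half, since a 99-1 offer is then worth less than a certain $50.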

It is distasteful and a little bit contradictory to the spirit of rationality to believe it should lose out so badly to simple emotion, and the problem might be correctable. Here we risk crossing the poorly charted border between game theory and decision theory and reaching ideas like timeless decision theory: that one should act as if one's choices determined the output of the algorithm one instantiates (or more simply, you should assume everyone like you will make the same choice you do, and take that into account when choosing.)

More practically, however, most real-world solutions to Prisoner's Dilemmas and Ultimatum Games still hinge on one of three things: threats of reciprocation when the length of the game is unknown, social institutions and reputation systems that make defection less attractive, and emotions ranging from cooperation to anger that are hard-wired into us by evolution. In the next post, we'll look at how these play out in practice.

88 comments

Comments sorted by top scores.

comment by Kaj_Sotala · 2012-07-03T15:41:07.413Z · LW(p) · GW(p)

Yay Dr. Zany! And a good post in general.

However, Western behavior in the Ultimatum Game seems to be a cultural, not biological, phenomenon.

Replies from: shminux, Vaniver

By the mid‐1990s researchers were arguing that a set of robust experimental findings from behavioral economics were evidence for set of evolved universal motivations (Fehr & Gächter 1998, Hoffman et al. 1998). Foremost among these experiments, the Ultimatum Game, provides a pair of anonymous subjects with a sum of real money for a one‐shot interaction. One of the pair—the proposer—can offer a portion of this sum to a second subject, the responder. Responders must decide whether to accept or reject the offer. If a responder accepts, she gets the amount of the offer and the proposer takes the remainder; if she rejects both players get zero. If subjects are motivated purely by self‐interest, responders should always accept any positive offer; knowing this, a self‐interested proposer should offer the smallest non‐zero amount. Among subjects from industrialized populations—mostly undergraduates from the U.S., Europe, and Asia—proposers typically offer an amount between 40% and 50% of the total, with a modal offer of usually 50% (Camerer 2003). Offers below about 30% are often rejected.

With this seemingly robust empirical finding in their sights, Nowak, Page and Sigmund (2000) constructed an evolutionary analysis of the Ultimatum Game. When they modeled the Ultimatum Game exactly as played, they did not get results matching the undergraduate findings. However, if they added reputational information, such that players could know what their partners did with others on previous rounds of play, the analysis predicted offers and rejections in the range of typical undergraduate responses. They concluded that the Ultimatum Game reveals humans’ species‐specific evolved capacity for fair and punishing behavior in situations with substantial reputation influence. But, since the Ultimatum Game is typically done one‐shot without reputational information, they argued that people make fair offers and reject unfair offers because their motivations evolved in a world where such interactions were not fitness relevant—thus, we are not evolved to fully incorporate the possibility of non‐reputational action in our decision‐making, at least in such artificial experimental contexts.

Recent comparative work has dramatically altered this initial picture. Two unified projects (which we call Phase 1 and Phase 2) have deployed the Ultimatum Game and other related experimental tools across thousands of subjects randomly sampled from 23 small‐scale human societies, including foragers, horticulturalists, pastoralists, and subsistence farmers, drawn from Africa, Amazonia, Oceania, Siberia and New Guinea (Henrich et al. 2005, Henrich et al. 2006). Three different experimental measures show that the people in industrialized societies consistently occupy the extreme end of the human distribution. Notably, small‐scale societies with only face‐to‐face interaction behaved in a manner reminiscent of Nowak et. al.’s analysis before they added the reputational information. That is, these populations made low offers and did not reject. [...]

Analyses of these data show that a population’s degree of market integration and its participation in a world religion both independently predict higher offers, and account for much of the variation between populations. Community size positively predicts greater punishment (Henrich et al. n.d.). The authors suggest that norms and institutions for exchange in ephemeral interactions culturally coevolved with markets and expanding larger‐scale sedentary populations. In some cases, at least in their most efficient forms, neither markets nor large population were feasible before such norms and institutions emerged. That is, it may be that what behavioral economists have been measuring in such games is a specific set of social norms, evolved for dealing with money and strangers, that have emerged since the origins of agriculture and the rise of complex societies.

↑ comment by Shmi (shminux) · 2012-07-04T00:03:36.877Z · LW(p) · GW(p)

I'm guessing that the results would be significantly affected by the perceived relative status, as the offer can be more about signaling than rational choice. If the two players happen to perceive the relative status similarly, or if the second player perceives equal or larger status disparity, s/he will likely accept. Maybe even think of the first player as foolish for offering too much and being a lousy bargainer. A rejection would often be due to the status-related outrage ("Who does s/he think s/he is to offer me only a pittance?")

So, if you think that being in control of how much to offer raises your status, you are likely to offer less, and if you think that not having any say in the amount automatically makes you lower status, you would be likely to accept a low offer.

Thus I would expect that in a society where equality is not considered an unalienable right, but is rather determined by material possessions, the average accepted offer would be lower. Not sure if this matches the experimental results.

comment by Grognor · 2012-07-03T14:14:49.516Z · LW(p) · GW(p)

There are many problems here.

At the end of paragraph 2 and the other examples, you say

This exactly mirrors the Prisoner's Dilemma.

But it doesn't, as you point out later in the post, because the payoff matrix isn't D-C > C-C > D-D, as you explain, but rather C-C > D-C > C-D, because of reputational effects, which is not a prisoner's dilemma. "Prisoner's dilemma" is a very specific term, and you are inflating it.

evolution is also strongly motivated [...] evolution will certainly take note.

I doubt that quite strongly!

The evolutionarily dominant strategy is commonly called “Tit-for-tat” - basically, cooperate if and only if you expect your opponent to do so.

That is not tit-for-tat! Tit-for-tat is: start with cooperate, and then parrot the opponent's previous move. It does not do what it "expects" the opponent to do. Furthermore, if you categorically expect your opponent to cooperate, you should defect (just like you should if you expect him to defect). You only cooperate if you expect your opponent to cooperate if he expects you to cooperate ad nauseam.

This so-called "superrationality” appears even more [...]

That is not superrationality! Superrationality achieves cooperation by reasoning that you and your opponent will get the same result for the same reasons, so you should cooperate in order to logically bind your result to C-C (since C-C and D-D are the only two options). What is with all this misuse of terminology? You write like the agents in the examples of this game are using causal decision theory (which defects all the time no matter what) and then bring up elements that cannot possibly be implemented in causal decision theory, and it grinds my gears!

And if two people with these sorts of emotional hangups play the Prisoner's Dilemma together, they'll end up cooperating on all hundred crimes, getting out of jail in a mere century and leaving rational utility maximizers to sit back and wonder how they did it.

This is in direct violation of one of the themes of Less Wrong. If "rational expected utility maximizers" are doing worse than "irrational emotional hangups", then you're using a wrong definition of "rational". You do this throughout the post, and it's especially jarring because you are or were one of the best writers for this website.

playing as a "rational economic agent" gets you a bad result

9_9

[...] anger makes us irrational. But this is the good kind of irrationality [...]

"The good kind of irrationality" is like "the good kind of bad thing". An oxymoron, by definition.

[...] if we're playing an Ultimatum Game against a human, and that human precommits to rejecting any offer less than 50-50, we're much more likely to believe her than if we were playing against a rational utility-maximizing agent

Bullshit. A rational agent is going to do what works. We know this because we stipulated that it was rational. If you mean to say a "stupid number crunching robot that misses obvious details like how to play ultimatum games" then sure it might do as you describe. But don't call it "rational".

It is distasteful and a little bit contradictory to the spirit of rationality to believe it should lose out so badly to simple emotion, and the problem might be correctable.

You think?

Downvoted.

Replies from: APMason, shokwave, wedrifid, cousin_it, Yvain, MarkusRamikin, sixes_and_sevens, Andreas_Giger

↑ comment by APMason · 2012-07-03T14:48:06.920Z · LW(p) · GW(p)

I agree with pretty much everything you've said here, except:

You only cooperate if you expect your opponent to cooperate if he expects you to cooperate ad nauseam.

You don't actually need to continue this chain - if you're playing against any opponent which cooperates iff you cooperate, then you want to cooperate - even if the opponent would also cooperate against someone who cooperated no matter what, so your statement is also true without the "ad nauseam" (provided the opponent would defect if you defected).

Replies from: Grognor

↑ comment by shokwave · 2012-07-03T17:28:41.199Z · LW(p) · GW(p)

You're reading this uncharitably. There are also parts that are unclear on Yvain's part, sure, but not to the extent that you claim.

The original group project situation Yvain explores does mirror the Prisoner's Dilemma. Then, later, he introduces reputational effects to illustrate one of the Real World Solutions to the Prisoner's Dilemma that we have already developed.

It's not made crystal clear....

So one might expect the real world to have produced some practical solutions to Prisoners' Dilemmas. One of the best known such systems is called “society”. You may have heard of it.

Well, actually it is.

...

Evolution

I understood Yvain to be speaking metaphorically, or perhaps tongue-in-cheek, when talking about what evolution would take note of. I believe this was his intention, and furthermore is a reasonable reading given our knowledge of Yvain.

This is in direct violation of one of the themes of Less Wrong. If "rational expected utility maximizers" are doing worse than "irrational emotional hangups", then you're using a wrong definition of "rational". You do this throughout the post, and it's especially jarring because you are or were one of the best writers for this website.

I expect that Yvain used 'rational' against the theme of LW on purpose, to create a tension - rationality failing to outperform emotional hangups is a contradiction, that would motivate readers to find the false premise or re-analyse the situation.

I do concur with your point about tit-for-tat. Similarly for super-rationality; although it's possible Yvain is not familiar with Hofstadter's definition and was using 'super' as an intensifier, it seems unlikely.

↑ comment by wedrifid · 2012-07-03T14:59:52.781Z · LW(p) · GW(p)

You think?

Downvoted.

I had this downvoted based on form and irritating tone before I looked closely and decided enough of the quotes from Yvain are, indeed, plainly wrong and I encourage hearty dismissal.

You do this throughout the post, and it's especially jarring because you are or were one of the best writers for this website.

Agree. Who is he and what has he done to the real Yvain?

↑ comment by cousin_it · 2012-07-04T16:17:22.060Z · LW(p) · GW(p)

Great critique!

The first time I read the post, I stopped reading when "tit-for-tat" and "superrationality" were misused in two consecutive sentences. Sadly, that part seems to be still inaccurate after Yvain edited it, because TFT is not dominant in the 100-fold repeated PD, if the strategy pool contains strategies that feed on TFT.

Replies from: wedrifid

↑ comment by wedrifid · 2012-07-04T16:39:44.120Z · LW(p) · GW(p)

The first time I read the post, I stopped reading when "tit-for-tat" and "superrationality" were misused in two consecutive sentences. Sadly, that part seems to be still inaccurate after Yvain edited it, because TFT is not dominant in the 100-fold repeated PD, if the strategy pool contains strategies that feed on TFT.

To be fair he doesn't seem to make the claim that TFT is dominant in the fixed length iterated PD. (I noticed how outraged I was that Yvain was making such a basic error so I thought I should double check before agreeing emphatically!) Even so I'm not comfortable with just saying TFT is "evolutionarily dominant" in completely unspecified circumstances.

↑ comment by Scott Alexander (Yvain) · 2012-07-04T15:39:38.404Z · LW(p) · GW(p)

You'll notice I used scare quotes around most of the words you objected to. I'm trying to point out the apparent paradox, using the language that game theorists and other people not already on this website would use, without claiming that the paradox is real or unsolvable.

Replies from: Vladimir_Nesov

↑ comment by Vladimir_Nesov · 2012-07-07T14:38:37.645Z · LW(p) · GW(p)

"This so-called "superrationality” " in the post is still wrong, I think. Would work without "so-called", since the meaning is clear from the context, but it's not conventional usage.

↑ comment by MarkusRamikin · 2012-07-03T17:07:58.136Z · LW(p) · GW(p)

This is disturbing. I had been looking forward to the sequence, but based on this comment and some others I'm starting to wonder how accurate it is overall.

Replies from: shokwave

↑ comment by shokwave · 2012-07-03T20:20:38.856Z · LW(p) · GW(p)

This is disturbing. I had been looking forward to the sequence, but based on this comment and some others I'm starting to wonder how accurate it is overall.

Overall, it is accurate. There are nits to pick, sure, and some loose language, but the actual math and theory is solid so far. The generalisations to real life

If I am assigned to work on a school project with a group ... Diplomacy - both the concept and the board game ... Civilization - again, both the concept and the game ... global warming ... etc

are correct as well.

↑ comment by sixes_and_sevens · 2012-07-03T15:09:36.959Z · LW(p) · GW(p)

I am largely appreciative of your overall comment, but "rational" is a historically legitimate term to describe naive utility-maximisers in this manner. The original post introduced it in inverted commas, suggesting a special usage of the term. While there are less ambiguous ways this could have been expressed, it seems to me the main benefit of doing so would be to pre-empt people complaining about an unfavourable usage of the term "rational". Your response to it seems excessive.

Replies from: VincentYu, Grognor

↑ comment by VincentYu · 2012-07-03T15:37:14.456Z · LW(p) · GW(p)

Agreed.

I want to point out that Eliezer's (and LW's general) use of the word 'rationality' is entirely different from the use of the word in the game theory literature, where it usually means VNM-rationality, or is used to elaborate concepts like sequential rationality in SPNE-type equilibria.

ETA: Reading Grognor's reply to the parent, it seems that much of the negative affect is due to inconsistent use of the word 'rational(ity)' on LW. Maybe it's time to try yet again to taboo LW's 'rationality' to avoid the namespace collision with academic literature.

Replies from: wedrifid

↑ comment by wedrifid · 2012-07-03T16:10:16.966Z · LW(p) · GW(p)

I want to point out that Eliezer's (and LW's general) use of the word 'rationality' is entirely different from the use of the word in the game theory literature

And the common usage of 'rational' on lesswrong should be different to what is used in a significant proportion of game theory literature. Said literature gives advice, reasoning and conclusions that is epistemically, instrumentally and normatively bad. According to the basic principles of the site it is in fact stupid and not-rational to defect against a clone of yourself in a true Prisoner's Dilemma. A kind of stupidity that is not too much different to being 'rational' like Spock.

ETA: Reading Grognor's reply to the parent, it seems that much of the negative affect is due to inconsistent use of the word 'rational(ity)' on LW. Maybe it's time to try yet again to taboo LW's 'rationality' to avoid the namespace collision with academic literature.

No. The themes of epistemic and instrumental rationality are the foundational premise of the site. It is right there in the tagline on the top of the page. I oppose all attempts to replace instrumental rationality with something that involves doing stupid things.

I do endorse avoiding excessive use of the word.

Replies from: VincentYu, drnickbone, PhilosophyTutor

↑ comment by VincentYu · 2012-07-03T16:36:00.308Z · LW(p) · GW(p)

Said literature gives advice, reasoning and conclusions that is epistemically, instrumentally and normatively bad.

This is a recurring issue, so perhaps my instructor and textbooks were atypical: we never discussed or even cared whether someone should defect on PD in my game theory course. The bounds were made clear to us in lecture – game theory studies concepts like Nash equilibria and backward induction (using the term 'rationality' to mean VNM-rationality) and applies them to situations like PD; that is all. The use of any normative language in homework sets or exams was pretty much automatically marked incorrect. What one 'should' or 'ought' to do was instead relegated to other courses in, e.g., economics, philosophy, political science. I'd like to know from others if this is a typical experience from a game theory course (and if anyone happens to be working in the field: if this is representative of the literature).

And the common usage of 'rational' on lesswrong should be different to what is used in a significant proportion of game theory literature.

No. The themes of epistemic and instrumental rationality are the foundational premise of the site. It is right there in the tagline on the top of the page. I oppose all attempts to replace instrumental rationality with something that involves doing stupid things.

Upon reflection, I tend to agree with these statements. In this case, perhaps we should taboo 'rationality' in its game theoretic meaning – use the phrase 'VNM-rationality' whenever that is meant instead of LW's 'rationality'.

Replies from: MarkusRamikin, wedrifid

↑ comment by MarkusRamikin · 2012-07-03T17:02:43.685Z · LW(p) · GW(p)

How about let's not taboo anything, just make it clear up front what is meant when really necessary. I would prefer that because I think such taboos contribute to the entry barrier for every LW newcomer; I don't want newcomers used to the game-theoretic jargon to keep unwittingly running afoul of this and getting downvoted.

Perhaps it would be useful if Yvain inserted a clarification of this early in the Sequence.

↑ comment by wedrifid · 2012-07-03T16:59:31.630Z · LW(p) · GW(p)

The use of any normative language in homework sets or exams was pretty much automatically marked incorrect.

The normative claim is one I am making now about the 'rationality' theories in question. It is the same kind of normative claim I make when I say "empirical tests are better than beliefs from ad baculum".

Upon reflection, I tend to agree with these statements. In this case, perhaps we should taboo 'rationality' in its game theoretic meaning – use the phrase 'VNM-rationality' whenever that is meant instead of LW's 'rationality'.

I could agree to that---conditional on confirmation from one of the Vladimirs that the axioms in question do, in fact, imply the faux-rational (CDT like) conclusions the term would be used to represent. I don't actually see it at a glance and would expect another hidden assumption to be required. I wouldn't be comfortable using the term without confirmation.

Replies from: VincentYu↑ comment by VincentYu · 2012-07-03T17:11:21.015Z · LW(p) · GW(p)

The normative claim is one I am making now about the 'rationality' theories in question.

I quoted badly; I believe there was a misunderstanding. The first quote in the parent to this should be taken in the context of your sentence segment that "Said literature gives advice". In my paragraph, I was objecting to this from my experiences in my course, where I did not receive any advice on what to do in games like PD. Instead, the type of advice that I received was on how to calculate Nash equilibria and find SPNEs.

Otherwise, I am mostly in agreement with the latter part of that sentence. (ETA: That is, I agree that if current game theoretic equilibrium solutions are taken as advice on what one ought to do, then that is often epistemically, instrumentally, and normatively bad.)

More ETA:

conditional on confirmation from one of the Vladimirs that the axioms in question do, in fact, imply the faux-rational (CDT like) conclusions the term would be used to represent. I don't actually see it at a glance and would expect another hidden assumption to be required.

You are correct – VNM-rationality is incredibly weak (though humans don't satisfy it). It is, after all, logically equivalent to the existence of a utility function (the proof of this by von Neumann and Morgenstern led to the eponymous VNM theorem). The faux-rationality on LW and in popular culture requires much stronger assumptions. But again, I don't think these assumptions are made in the game theory literature – I think that faux-rationality is misattributed to game theory. The game theory I was taught used only VNM-rationality, and gave no advice.
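For reference, the VNM theorem mentioned above can be stated as follows. This is the standard textbook form, written with assumed notation ($\succeq$ for the preference relation over lotteries $L$, $M$, and $u$ for the utility function); none of the notation comes from the thread itself.

$$
\succeq \text{ satisfies completeness, transitivity, continuity and independence}
\;\iff\;
\exists\, u \text{ such that } \big( L \succeq M \Leftrightarrow \mathbb{E}_L[u] \ge \mathbb{E}_M[u] \big)
$$

Nothing in this statement says how an agent should model other agents, which fits the point that further assumptions would be needed to reach CDT-like conclusions.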

↑ comment by drnickbone · 2012-07-03T18:51:33.556Z · LW(p) · GW(p)

Said literature gives advice, reasoning and conclusions that is epistemically, instrumentally and normatively bad.

I remember hearing about studies where economics and game theory students ended up less "moral" by many usual measures after completing their courses. Less inclined to co-operate, more likely to lie and cheat, more concerned about money, more likely to excuse overtly selfish behaviour and so on. And then these fine, new, upstanding citizens, went on to become the next generation of bankers, traders, stock-brokers, and advisers to politicians and industry. The rest as they say is history.

↑ comment by PhilosophyTutor · 2012-07-09T06:41:28.206Z · LW(p) · GW(p)

Said literature gives advice, reasoning and conclusions that is epistemically, instrumentally and normatively bad.

Said literature makes statements about what is game-theory-rational. Those statements are only epistemically, instrumentally or normatively bad if you take them to be statements about what is LW-rational or "rational" in the layperson's sense.

Ideally we'd use different terms for game-theory-rational and LW-rational, but in the meantime we just need to keep the distinction clear in our heads so that we don't accidentally equivocate between the two.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-09T06:50:45.250Z · LW(p) · GW(p)

Said literature makes statements about what is game-theory-rational. Those statements are only epistemically, instrumentally or normatively bad if you take them to be statements about what is LW-rational or "rational" in the layperson's sense.

Disagree on instrumentally and normatively. Agree regarding epistemically---at least when the works are careful with what claims are made. Also disagree with the "game-theory-rational", although I understand the principle you are trying to get at. A more limited claim needs to be made or more precise terminology.

Replies from: PhilosophyTutor↑ comment by PhilosophyTutor · 2012-07-09T08:15:26.973Z · LW(p) · GW(p)

I would be interested in reading about the bases for your disagreement. Game theory is essentially the exploration of what happens if you postulate entities who are perfectly informed, personal utility-maximisers who do not care at all either way about other entities. There's no explicit or implicit claim that people ought to behave like those entities, thus no normative content whatsoever. So I can't see how the game theory literature could be said to give normatively bad advice, unless the speaker misunderstood the definition of rationality being used, and thought that some definition of rationality was being used in which rationality is normative.

I'm not sure what negative epistemic or instrumental outcomes you foresee either, but I'm open to the possibility that there are some.

Is there a term you prefer to "game-theory-rational" that captures the same meaning? As stated above, game theory is the exploration of what happens when entities that are "rational" by that specific definition interact with the world or each other, so it seems like the ideal term to me.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-09T10:27:40.171Z · LW(p) · GW(p)

I would be interested in reading about the bases for your disagreement. Game theory is essentially the exploration of what happens if you postulate entities who are perfectly informed, personal utility-maximisers who do not care at all either way about other entities.

Under this definition you can't claim epistemic accuracy either. In particular the 'perfectly informed' assumption when combined with the personal utility maximization leads to different behaviors from those described as 'rational'. (It needs to be weakened to "perfectly informed about everything except those parts of the universe that are the other agent".)

There's no explicit or implicit claim that people ought to behave like those entities, thus no normative content whatsoever.

This isn't about the agents having selfish desires (in fact, they don't even have to "not care at all about other entities"---altruism determines what the utility function is, not how to maximise it.) No, this is about shoddy claims about decision theory that are either connotatively misleading or erroneous depending on how they are framed. All those poor paperclip maximisers who read such sources and take them at face value will end up producing fewer paperclips than they could have if they knew the correct way to interact with the staples maximisers in contrived scenarios.

Replies from: PhilosophyTutor↑ comment by PhilosophyTutor · 2012-07-09T11:47:23.547Z · LW(p) · GW(p)

This isn't about the agents having selfish desires (in fact, they don't even have to "not care at all about other entities"---altruism determines what the utility function is, not how to maximise it.)

This is wrong. The standard assumption is that game-theory-rational entities are neither altruistic nor malevolent. Otherwise the Prisoner's Dilemma wouldn't be a dilemma in game theory. It's only a dilemma as long as both players are solely interested in their own outcomes. As soon as you allow players to have altruistic interests in other players' outcomes it ceases to be a dilemma.

You can do similar mathematical analyses with altruistic agents, but at that point speaking strictly you're doing decision-theoretic calculations or possibly utilitarian calculations not game-theoretic calculations.

Utilitarian ethics, game theory and decision theory are three different things, and it seems to me your criticism assumes that statements about game theory should be taken as statements about utilitarian ethics or statements about decision theory. I think that is an instance of the fallacy of composition and we're better served to stay very aware of the distinctions between those three frameworks.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-09T12:35:34.840Z · LW(p) · GW(p)

This is wrong. The standard assumption is that game-theory-rational entities are neither altruistic nor malevolent.

No, it just isn't. Game theory is completely agnostic about what the preferences of the players are based on. Game theory takes a payoff matrix and calculates things like Nash Equilibrium and Dominant Strategies. The verbal description of why the payoff matrix happens to be as it is is fluff.
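A minimal sketch of what "takes a payoff matrix and calculates things" can look like in practice. The payoff numbers (3, 0, 5, 1) and all function names are illustrative assumptions, not something from the thread; the point is only that the calculation never looks at why the numbers are what they are.

```python
from itertools import product

# Hypothetical payoff numbers for a standard Prisoner's Dilemma.
# Keys are (row_move, col_move); values are (row_payoff, col_payoff).
PD = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}
MOVES = ("C", "D")


def payoff(game, player, own, other):
    """Payoff to `player` (0 = row, 1 = column) for playing `own` against `other`."""
    profile = (own, other) if player == 0 else (other, own)
    return game[profile][player]


def strictly_dominant(game, player):
    """Return the move that beats every alternative against every opposing move, or None."""
    for mine in MOVES:
        if all(
            payoff(game, player, mine, theirs) > payoff(game, player, alt, theirs)
            for alt in MOVES if alt != mine
            for theirs in MOVES
        ):
            return mine
    return None


def pure_nash_equilibria(game):
    """Return the profiles where neither player gains by deviating unilaterally."""
    equilibria = []
    for row, col in product(MOVES, MOVES):
        row_ok = all(payoff(game, 0, row, col) >= payoff(game, 0, alt, col) for alt in MOVES)
        col_ok = all(payoff(game, 1, col, row) >= payoff(game, 1, alt, row) for alt in MOVES)
        if row_ok and col_ok:
            equilibria.append((row, col))
    return equilibria
```

With these assumed numbers, defection is strictly dominant for both players and mutual defection is the only pure-strategy equilibrium, whatever story is told about where the payoffs come from.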

Otherwise the Prisoner's Dilemma wouldn't be a dilemma in game theory. It's only a dilemma as long as both players are solely interested in their own outcomes. As soon as you allow players to have altruistic interests in other players' outcomes it ceases to be a dilemma.

As soon as you allow altruistic interests the game ceases to be a Prisoner's Dilemma. The dilemma (and game theory in general) relies on the players being perfectly selfish in the sense that they ruthlessly maximise their own payoffs as they are defined, not in the sense that those payoffs must never refer to aspects of the universe that happen to include the physical state of the other agents.

Consider the Codependent Prisoner's Dilemma. Romeo and Juliet have been captured and the guards are trying to extort confessions out of them. However Romeo and Juliet are both lovesick and infatuated and care only about what happens to their lover, not what happens to themselves. Naturally the guards offer Romeo the deal "If you confess we'll let Juliet go and you'll get 10 years but if you don't confess you'll both get 1 year" (and vice versa, with a both confess clause in there somewhere). Game theory is perfectly equipped at handling this game. In fact, so much so that it wouldn't even bother calling it a new name. It's just a Prisoner's Dilemma and the fact that the conflict of interests between Romeo and Juliet happens to be based on codependent altruism rather than narcissism is outside the scope of what game theorists care about.
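The Codependent Prisoner's Dilemma can be checked mechanically. In this sketch the 1-year and 10-year sentences come from the description above, while the 5 years for mutual confession is an assumption (the "both confess clause" is left unspecified), and the function names are hypothetical. Each lover's payoff is taken to be minus the other lover's sentence.

```python
# Years in prison as (romeo_years, juliet_years), keyed by (romeo_move, juliet_move).
# The 5 years for mutual confession is an assumed value.
YEARS = {
    ("silent", "silent"): (1, 1),
    ("confess", "silent"): (10, 0),
    ("silent", "confess"): (0, 10),
    ("confess", "confess"): (5, 5),
}


def codependent_payoffs(years):
    """Each lover cares only about the other's sentence: payoff = -(other's years)."""
    return {moves: (-juliet, -romeo) for moves, (romeo, juliet) in years.items()}


def selfish_payoffs(years):
    """Each prisoner cares only about their own sentence: payoff = -(own years)."""
    return {moves: (-romeo, -juliet) for moves, (romeo, juliet) in years.items()}


def is_prisoners_dilemma(payoffs, cooperate, defect):
    """Check the ordering T > R > P > S for both the row and the column player."""
    reward = payoffs[(cooperate, cooperate)]
    punishment = payoffs[(defect, defect)]
    row_defects = payoffs[(defect, cooperate)]
    col_defects = payoffs[(cooperate, defect)]
    row_ok = row_defects[0] > reward[0] > punishment[0] > col_defects[0]
    col_ok = col_defects[1] > reward[1] > punishment[1] > row_defects[1]
    return row_ok and col_ok
```

Under these assumptions the codependent payoffs satisfy the Prisoner's Dilemma ordering, with "confess" as the defecting move, while the same sentences with purely selfish prisoners do not.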

Replies from: PhilosophyTutor↑ comment by PhilosophyTutor · 2012-07-11T04:19:25.211Z · LW(p) · GW(p)

It seems from my perspective that we are talking past each other and that your responses are no longer tracking the original point. I don't personally think that deserves upvotes, but others obviously differ.

Your original claim was that:

Said literature gives advice, reasoning and conclusions that is epistemically, instrumentally and normatively bad.

Now given that game theory is not making any normative claims, it can't be saying things which are normatively bad. Similarly since game theory does not say that you should either go out and act like a game-theory-rational agent or that you should act as if others will do so, it can't be saying anything instrumentally bad either.

I just don't see how it could even be possible for game theory to do what you claim it does. That would be like stating that a document describing the rules of poker was instrumentally and normatively bad because it encouraged wasteful, zero-sum gaming. It would be mistaking description for prescription.

We have already agreed, I think, that there is nothing epistemically bad about game theory taken as it is.

Everything below responds to the off-track discussion above and can be safely ignored by posters not specifically interested in that digression.

In game theory each player's payoff matrix is their own. Notice that Codependent Romeo does not care where Codependent Juliet ends up in her payoff matrix. If Codependent Romeo was altruistic in the sense of wanting to maximise Juliet's satisfaction with her payoff, he'd be keeping silent. Because Codependent Romeo is game-theory-rational, he's indifferent to Codependent Juliet's satisfaction with her outcome and only cares about maximising his personal payoff.

The standard assumption in a game-theoretic analysis is that a poker player wants money, a chess player wants to win chess games and so on, and that they are indifferent to their opponent(s) opinion about the outcome, just as Codependent Romeo is maximising his own payoff matrix and is indifferent to Codependent Juliet's.

That is what we attempt to convey when we tell people that game-theory-rational players are neither benevolent nor malevolent. Even if you incorporate something you want to call "altruism" into their preference order, they still don't care directly about where anyone else ends up in those other peoples' preference orders.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-11T04:34:24.995Z · LW(p) · GW(p)

Now given that game theory is not making any normative claims, it can't be saying things which are normatively bad.

Not true. The word 'connotations' comes to mind. As does "reframing to the extent of outright redefining a critical keyword". That is not a normatively neutral act. It is legitimate for me to judge it and I choose to do so---negatively.

↑ comment by Grognor · 2012-07-03T15:37:39.092Z · LW(p) · GW(p)

I think this was a legitimate use of "by definition", since it's the definition we use on this website. You're right that "rational" has often meant "blindly crunching numbers without looking at all available information &c." but I thought we had a widespread agreement here not to use the word like that.

You're right that my response seems excessive, but I don't know if it actually is excessive rather than merely seeming so.

Replies from: Viliam_Bur, sixes_and_sevens↑ comment by Viliam_Bur · 2012-07-03T17:55:38.265Z · LW(p) · GW(p)

I think this was a legitimate use of "by definition", since it's the definition we use on this website.

The term "bad rationality" is also used on this website. It is a partial rationality, and it may be harmful. On the other hand, partial rationality is all we humans have, isn't it?

But now I am discussing labels on the map, not the territory.

↑ comment by sixes_and_sevens · 2012-07-03T15:54:20.894Z · LW(p) · GW(p)

You're attaching a negative connotation where there doesn't have to be one. In econ and game theory literature, "rational" means something else, not necessarily something bad. It also refers to something specific. If we want to talk about that specific referent, we have limited options.

I would propose suffixing alternative uses of the word "rational" with a disambiguating particle. Thus above, Yvain could have used "econ-rational". If we ever have cause to talk about the Rationalist philosophical tradition, they can be "p-Rationalists". Annoyingly, I don't actually believe we need to do this for disambiguation purposes.

Replies from: Cyan↑ comment by Andreas_Giger · 2012-07-03T14:39:56.002Z · LW(p) · GW(p)

Upvoted. By the way, I think you meant to type "C-C > D-C > D-D" instead of "C-C > D-C > D-C", and you might also want to include C-D. Not sure whether C-D is supposed to be last or second last in Yvain's example, because of the reputational effects.

comment by Paul Crowley (ciphergoth) · 2012-07-03T09:44:10.472Z · LW(p) · GW(p)

This sequence is really great - thank you for writing it!

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2012-07-03T17:48:38.352Z · LW(p) · GW(p)

Seconded - game theory is awfully useful, and this sequence is doing a great job of explaining it in a clear and engaging way.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-03T17:56:36.546Z · LW(p) · GW(p)

Seconded - game theory is awfully useful, and this sequence is doing a great job of explaining it in a clear and engaging way.

Do you say this after reading this particular post? The others were good, this one is embarrassing.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2012-07-03T18:06:34.281Z · LW(p) · GW(p)

I thought this was good, even if he could have been a little more precise with regard to some terms.

comment by shokwave · 2012-07-03T05:04:36.672Z · LW(p) · GW(p)

Game theory is particularly interesting because it adds up to normalcy so fast - very simple math on very simple situations very rapidly describes real life, macro-level behaviour.

Tom Siegfried has a powerful quote: Game theory captures something about how the world works. (note: bizarre HTML book setup, read pg 73-5)

comment by Nisan · 2012-07-03T20:45:21.402Z · LW(p) · GW(p)

Tangentially, I now understand exactly what I don't like about Eric S. Raymond's morality:

I am among those who fear... that the U.S. response to 9/11 was not nearly as violent and brutal as it needed to be. To prevent future acts of this kind, it is probably necessary that those who consider them should shit their pants with fear at the mere thought of the U.S.’s reaction.

... the correct response to a person who says “You do not own yourself, but are owned by society (or the state), and I am society (or the state) speaking.” is to injure him as gravely as you think you can get away with ... In fact, I think if you do not do violence in that situation you are failing in a significant ethical duty.

A precommitment to retribution is effective when dealing with "rational" agents or CDT agents. In fact a self-interested TDT agent in a world of CDT agents would do well by retaliating against all injuries with disproportionate force. (And also issuing extortionate threats; to be fair, ESR doesn't advocate this.) If you buy Gary Drescher's reduction of morality to decision theory, this is where the moral duty of revenge comes from. But a superrational agent in a world of superrational agents will rarely need to exercise retribution at all.

Human beings might be thought of as superrational agents who make mistakes (moral errors). I don't know of a good technical model for this, but I feel like one recommendation that would come out of it is to not punish people disproportionately, because what if others did that to you when you make a mistake?

Replies from: Desrtopa, army1987, army1987, army1987↑ comment by Desrtopa · 2012-07-03T22:13:20.058Z · LW(p) · GW(p)

I think the utility-maximizing reasons to avoid disproportionate punishment in such cases among humans have more to do with likely perpetrators being somewhat blind to such disincentives (remember, these are people who attack others by killing themselves,) and the fact that nations operate in a reputation system where acts of disproportionality which are too large tend to attract negative reputation.

Since humans tend to operate under friendship/anger/fairness formulations rather than utility maximizing ones, a sultan who honors a precommitment to cut off the head of a man who elopes with his daughter is seen as reasonable in circumstances (including a historically common degree of protectiveness of one's offspring) where one who massacred the man's entire village to be even more sure of deterring other attempts to cross him would be viewed as cruel and tyrannical.

↑ comment by A1987dM (army1987) · 2013-10-23T11:52:55.857Z · LW(p) · GW(p)

I'd be curious to see what the results of a tournament of iterated PD with noise (i.e., each move is flipped with probability 5% -- and the opponent will never know the pre-noise move) would be.
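A rough sketch of such a tournament, for anyone who wants to try it. The payoff numbers, the three strategies, and every function name here are assumptions chosen for illustration. Each intended move is flipped with probability `noise`, and strategies only ever see the opponent's executed (post-noise) moves.

```python
import random

# Hypothetical payoffs as (player_a, player_b), keyed by (move_a, move_b).
PAYOFF = {("C", "C"): (3, 3), ("C", "D"): (0, 5), ("D", "C"): (5, 0), ("D", "D"): (1, 1)}


def tit_for_tat(seen):
    """Cooperate first, then copy the opponent's last observed move."""
    return seen[-1] if seen else "C"


def always_defect(seen):
    return "D"


def always_cooperate(seen):
    return "C"


def flip(move):
    return "D" if move == "C" else "C"


def play_match(strat_a, strat_b, rounds=200, noise=0.05, rng=None):
    """Play one noisy iterated match and return (score_a, score_b)."""
    if rng is None:
        rng = random.Random(0)
    seen_by_a, seen_by_b = [], []  # opponent's executed moves, as observed
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strat_a(seen_by_a)
        move_b = strat_b(seen_by_b)
        # Noise: each intended move is flipped independently.
        if rng.random() < noise:
            move_a = flip(move_a)
        if rng.random() < noise:
            move_b = flip(move_b)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        seen_by_a.append(move_b)
        seen_by_b.append(move_a)
    return score_a, score_b


def tournament(strategies, rounds=200, noise=0.05, seed=0):
    """Round-robin over a dict of name -> strategy; returns total score per name."""
    rng = random.Random(seed)
    totals = {name: 0 for name in strategies}
    names = list(strategies)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            score_a, score_b = play_match(strategies[a], strategies[b], rounds, noise, rng)
            totals[a] += score_a
            totals[b] += score_b
    return totals
```

Calling `tournament({"tft": tit_for_tat, "defect": always_defect, "coop": always_cooperate})` gives one sample result. The outcome depends heavily on which strategies are entered and on the seed, so a single run should not be read as a general conclusion.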

Replies from: gwern↑ comment by gwern · 2013-10-24T01:58:23.453Z · LW(p) · GW(p)

http://en.wikipedia.org/wiki/Trembling_hand_perfect_equilibrium may be a useful starting point.

↑ comment by A1987dM (army1987) · 2013-07-12T16:44:50.178Z · LW(p) · GW(p)

The link doesn't go to where I think it was supposed to.

Replies from: Nisan↑ comment by A1987dM (army1987) · 2013-07-12T17:02:56.151Z · LW(p) · GW(p)

Human beings might be thought of as superrational agents who make mistakes (moral errors). I don't know of a good technical model for this, but I feel like one recommendation that would come out of it is to not punish people disproportionately, because what if others did that to you when you make a mistake?

Not exactly about the same thing, but see this.

comment by drnickbone · 2012-07-03T18:03:03.824Z · LW(p) · GW(p)

The evolutionarily dominant strategy is commonly called “Tit-for-tat” - basically, cooperate if and only if you expect your opponent to do so.

No, Tit-for-Tat co-operates if and only if the other player co-operated last time. It works only in an iterated Prisoner's Dilemma, where you have multiple interactions with the same player.

Co-operate if and only if you expect the other player to co-operate (because of reputation, emotional behaviour etc.) is a quite different strategy. Strategies with some reputational or prediction element like this will work in cases where you have a one-shot interaction with each player, but multiple interactions with a community of players.

Strategies where you "commit" yourself to co-operating but only with players who have similarly committed themselves are different yet again, and work on true one-shot dilemmas. These are the "super-rational" strategies. Variants of these (TDT, UDT etc.) avoid the need for explicit commitments, since they always do what they wish they'd committed to doing.

Basically, I think you are mixing up quite different solutions here to the Prisoner's Dilemma.

EDIT: I see that wedrifid made much the same points already. Sorry for the repetition.

comment by wedrifid · 2012-07-03T15:46:38.229Z · LW(p) · GW(p)

The evolutionarily dominant strategy is commonly called “Tit-for-tat” - basically, cooperate if and only if you expect your opponent to do so.

That strategy is neither evolutionarily dominant nor "tit-for-tat". Tit-for-tat is applicable in the Iterated Prisoner's Dilemma with unknown duration and involves cooperating on the first round and thereafter doing whatever the opponent did in the previous round. As the name implies it is somewhat like a specific implementation of "eye for an eye".

As for evolutionary dominance, the strategy "cooperate if and only if you expect your opponent to do so" is strictly worse than "If cooperating gives the expected result of your opponent also cooperating then do so unless they will cooperate anyway". i.e. Your proposed "completely-not-tit-for-tat" strategy cooperates with CooperateBot, which is a terrible move. ("CooperateBot" may represent a much smaller or lower status monkey, for example.)
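The comparison can be made concrete with a toy model. Everything here is an assumption made for illustration: opponents are modelled as functions from my move to their move (i.e. perfect prediction), the payoff numbers are the usual hypothetical 5/3/1/0, and the strategy names are invented.

```python
# My payoff, keyed by (my_move, their_move). Hypothetical numbers.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}

# Each opponent maps my move to their response (assumes they predict me perfectly).
OPPONENTS = {
    "CooperateBot": lambda my_move: "C",
    "DefectBot": lambda my_move: "D",
    "Mirror": lambda my_move: my_move,  # cooperates only with cooperators
}


def expects_cooperation(opponent):
    """Cooperate if and only if the opponent is expected to cooperate."""
    return "C" if opponent("C") == "C" else "D"


def cooperates_only_when_it_matters(opponent):
    """Cooperate only if that buys cooperation the opponent would otherwise withhold."""
    return "C" if opponent("C") == "C" and opponent("D") == "D" else "D"


def score(strategy):
    """Payoff the strategy earns against each opponent type."""
    results = {}
    for name, opponent in OPPONENTS.items():
        mine = strategy(opponent)
        results[name] = PAYOFF[(mine, opponent(mine))]
    return results
```

In this model the two strategies tie against DefectBot and Mirror, and differ only against CooperateBot, where the second one collects the higher temptation payoff.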

comment by beoShaffer · 2012-07-03T04:16:02.704Z · LW(p) · GW(p)

I like it, but suggest that you link back to the previous entry in the sequence and/or the sequence index.

Replies from: Kennycomment by [deleted] · 2012-07-03T19:31:50.989Z · LW(p) · GW(p)

You may want to be more careful about using game theory on real-world problems. Game theory makes a lot of assumptions (some explicit, others implicit) that usually do not hold in real life.

You will even have a hard time finding good examples of real-life prisoners who are in a game-theoretic PD. In reality, most of the time the prisoner's dilemma looks more like this: same payoff matrix as the classical PD, but both prisoners may choose to break their silence at any time. Once a prisoner has confessed, there is no going back to silence. This situation will probably yield cooperation (unless the prisoners cannot get accurate information).

Most real-world situations fit neither one-shot games nor iterated games. Real life rarely has discrete game turns.

Many real-world situations are about behaviours that have some duration and can be stopped/changed.

Many real-life situations permit the player to change his decision from cooperation to defection if he learns about the defection of the other player. In many of these situations the betrayed player is able to change his own action fast enough to deny the defector any advantage from defecting.
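One way to make the "fast enough" claim concrete is a toy model in which payoffs accrue per time step and the betrayed player switches to defection after a fixed reaction delay. The model, the parameter names, and the payoff letters (T for temptation, R for mutual cooperation, P for mutual defection) are all assumptions for illustration.

```python
def defector_total(T, P, horizon, delay):
    """Total payoff for defecting at step 0 when the other player reacts after `delay` steps."""
    exploited = min(delay, horizon)  # steps during which the other player still cooperates
    return T * exploited + P * (horizon - exploited)


def cooperator_total(R, horizon):
    """Total payoff when both players cooperate for the whole horizon."""
    return R * horizon


def defection_pays(T, R, P, horizon, delay):
    """True if defecting first beats sustained mutual cooperation in this model."""
    return defector_total(T, P, horizon, delay) > cooperator_total(R, horizon)
```

With T=5, R=3, P=1 over 100 steps, defection only pays in this model if the other player takes more than 50 steps to react. A delay equal to the whole horizon recovers the classical one-shot case.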

↑ comment by [deleted] · 2012-07-03T19:56:17.810Z · LW(p) · GW(p)

Of course, you can still model this with game theory, but you need to break "turns" into smaller units (Planck seconds, if you want to go all the way). As for iteration versus a one-shot game, you could say either is universally the case, depending on how you define what counts as a repetition of the same game and what is different enough to qualify as a new scenario.

So game theory is not broken for real-world problems, but, as with any theory I have seen, scaling it up from a simple puzzle to interactions with the universe makes the problem more difficult.

comment by Kingoftheinternet · 2012-07-03T12:26:36.801Z · LW(p) · GW(p)

Many uses of the word "rational" here were fine ("rational economic agent" is understandable), but others really bothered me ("It is distasteful and a little bit contradictory to the spirit of rationality to believe it should lose out so badly to simple emotion" -- why perpetuate the Spock myth? I want to show this to my friends!). I have no specific suggestion at hand, but circumlocuting around the word in some of the cases above would bring the article from excellent to perfection.

comment by Maelin · 2012-07-03T07:39:44.103Z · LW(p) · GW(p)

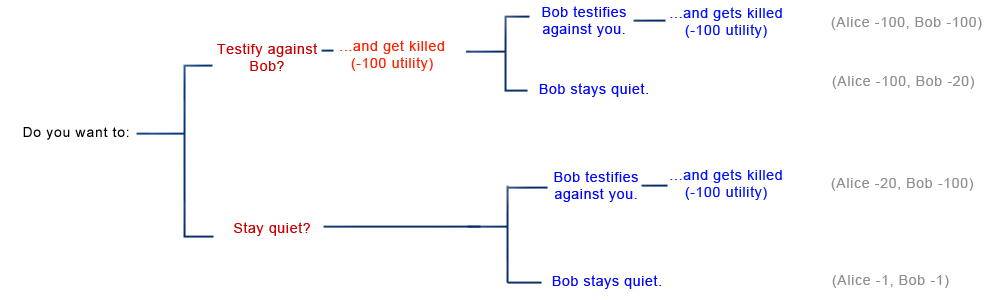

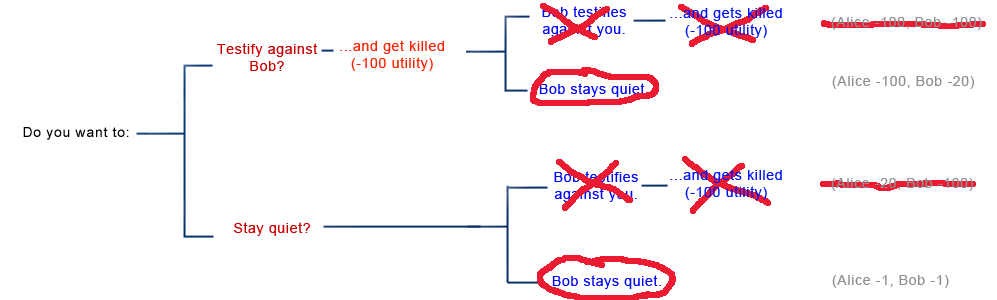

Excellent article, but the image is too wide so it gets cropped by LW's fixed-width content section. Looks like this. Using Firefox 9.0.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2012-07-07T14:20:00.590Z · LW(p) · GW(p)

Reduced the width from 800px to 700px (fixed the issue for me).

comment by Thomas · 2012-07-04T15:14:27.497Z · LW(p) · GW(p)

We all enjoy the defection of a salesman who doesn't cooperate in holding a price high, but defects and lowers it to make a gain.

Defection in the economy plays out through this pricing mechanism.

Defecting is just as crucial!

Replies from: Raoul589↑ comment by Raoul589 · 2013-04-11T17:20:51.214Z · LW(p) · GW(p)

In this way, defection seems to have two social meanings:

Defecting proactively is betrayal. Defecting reactively is punishment.

We seem to have strong negative opinions of the former and somewhat positive opinions of the latter. I think in your salesman example you're talking about punishment being crucial. In fact, the defection of the customer is only necessary as a response to the salesman's original defection.

I am curious as to whether you have a similarly real life example of where proactive defection (i.e. betrayal) is crucial (for some societal or group benefit)?

Replies from: wedrifid↑ comment by wedrifid · 2013-04-11T17:39:21.564Z · LW(p) · GW(p)

Defecting proactively is betrayal. Defecting reactively is punishment.

We seem to have strong negative opinions of the former and somewhat positive opinions of the latter.

And for this reason we tend to be predisposed to interpreting the behavior of enemies as 'proactive/betrayal' and our own as 'reactive/punishment' (where we acknowledge that we have defected at all).

comment by wedrifid · 2012-07-03T15:52:19.708Z · LW(p) · GW(p)

Prisoners' Dilemmas even come up in nature. In baboon tribes, when a female is in “heat”, males often compete for the chance to woo her. The most successful males are those who can get a friend to help fight off the other monkeys, and who then helps that friend find his own monkey loving. But these monkeys are tempted to take their friend's female as well. Two males who cooperate each seduce one female. If one cooperates and the other defects, he has a good chance at both females. But if the two can't cooperate at all, then they will be beaten off by other monkey alliances and won't get to have sex with anyone. Still a Prisoner's Dilemma!

If anything this is closer to Parfit's Hitchhiker. While both are game theory problems and a good decision theory will result in cooperation on both of them (assuming adequate prediction capabilities), the non-sequential nature of the game matters and some decision making strategies will handle them differently.

comment by Viliam_Bur · 2012-07-03T09:49:12.465Z · LW(p) · GW(p)

Reputation is a way to change many one-time Prisoner's Dilemmas into one big Iterated Prisoner's Dilemma, where mutual cooperation is the best strategy for rational players. But how exactly does it work in real life?

I guess it works better when a small group of people interact again and again; and it works worse in a large group of people where many interactions are with strangers. So we should expect more cooperation in a village than in a big city.
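The village-versus-city intuition matches a standard textbook result for repeated games, sketched here under stated assumptions: both players use a "grim trigger" rule (cooperate until the other defects, then defect forever), and `delta` is the probability of meeting the same partner again. Neither the rule nor the numbers come from the comment; the function names are hypothetical.

```python
def value_of_cooperating(R, delta):
    """Expected total payoff from mutual cooperation in every round: R / (1 - delta)."""
    return R / (1 - delta)


def value_of_defecting(T, P, delta):
    """Expected total payoff from defecting once, then facing mutual defection forever."""
    return T + delta * P / (1 - delta)


def cooperation_threshold(T, R, P):
    """Smallest delta at which cooperating is at least as good as defecting.

    Follows from R / (1 - delta) >= T + delta * P / (1 - delta),
    which rearranges to delta >= (T - R) / (T - P).
    """
    return (T - R) / (T - P)
```

With T=5, R=3, P=1 the threshold is 0.5: a "village" with a 90% chance of meeting again sustains cooperation in this model, while a "city" with a 10% chance does not.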

Even in big cities people can create smaller units and interact more frequently within these units. So they would trust more their neighbors, coworkers, etc. But here is an opportunity for exploitation by people who don't mind frequent migration or changing jobs -- they often reset their social karma, so they should be trusted less. We should be also suspicious about other karma resetting moves, such as when a company changes their name.

Even if we don't know someone in person, we can do some probabilistic reasoning by thinking about their reference class: "Do I have an experience of people with traits X, Y, Z cooperating or defecting?" However, this kind of reasoning may be frowned upon socially, sometimes even illegal. It is said that people should not be punished for having a bad reference class because of a trait they didn't choose voluntarily and cannot change. Sometimes it is considered wrong to judge people by changeable traits, for example how they are dressed. On the other hand, reference classes like "people having a university diploma" are socially allowed.

Here is an interesting (potentially mindkilling) prediction: When it is legally forbidden to use reference classes and other forms of evaluating prestige, the rate of defection increases. (An extreme situation would be when you are legally required to cooperate regardless of what your opponent does.)

Replies from: Richard_Kennaway, AspiringRationalist, TimS, wedrifid↑ comment by Richard_Kennaway · 2012-07-03T10:25:38.704Z · LW(p) · GW(p)

Even in big cities people can create smaller units and interact more frequently within these units. So they would trust more their neighbors, coworkers, etc. But here is an opportunity for exploitation by people who don't mind frequent migration or changing jobs -- they often reset their social karma, so they should be trusted less.

And they are -- itinerant people are universally less trusted than the ones with home addresses.

↑ comment by NoSignalNoNoise (AspiringRationalist) · 2012-07-05T04:33:43.595Z · LW(p) · GW(p)

Reputation is a way to change many one-time Prisoner's Dilemmas into one big Iterated Prisoner's Dilemma, where mutual cooperation is the best strategy for rational players. But how exactly does it work in real life?

People are hard-wired to obey social norms and cooperate with members of their communities even in the absence of personal consequences like reputation. Most people would not steal from a stranger even if they knew they would not be caught.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-05T04:40:07.761Z · LW(p) · GW(p)

People are hard-wired to obey social norms and cooperate with members of their communities even in the absence of personal consequences like reputation.

People are hard-wired to manage reputation through some forms of cooperation without explicitly thinking about the consequences to reputation in each instance.

↑ comment by TimS · 2012-07-03T16:25:43.662Z · LW(p) · GW(p)

Sometimes it is considered wrong to judge people by changeable traits, for example how they are dressed.

Can you give any example of people saying the two types of judgment are comparable? As you say, there's a sense in modern society that unchosen traits should not be treated with moral disdain. But the analysis is totally different for chosen traits.

Replies from: Viliam_Bur↑ comment by Viliam_Bur · 2012-07-03T18:08:07.213Z · LW(p) · GW(p)

Two real-world examples, but both can also be interpreted differently:

In the first case, people are protesting against the claim that "dressing like a slut increases the probability that the woman will be raped". Of course the discussion is not strictly Bayesian, but mostly about connotations.

In the second case, people are protesting the fact that looking like a criminal, while not being a criminal, increases their probability of being killed in a supposed self-defense.

(The second example seems like an ad-absurdum version of anti-discrimination, but apparently those people mean it.)

Replies from: TimS↑ comment by TimS · 2012-07-03T20:47:25.261Z · LW(p) · GW(p)

Behaviors can change in frequency. Debates about whether to punish behaviors are debates about whether a decrease in frequency of the behavior (dressing sexually provocatively or conforming to the norms of a lower-status subgroup) is desired.

By contrast, non-behavior characteristics don't change in frequency. Productive social reactions are about whether the characteristic should be accommodated (redheads - yes, ax-crazy murderers - no).

The difference in the topics of the two debates makes me think that attempting to draw them in parallel is misleading.

Replies from: AspiringRationalist↑ comment by NoSignalNoNoise (AspiringRationalist) · 2012-07-05T04:29:45.498Z · LW(p) · GW(p)

Debates about whether to punish behaviors are debates about whether a decrease in frequency of the behavior is desired.

Whether a decrease in the frequency of the behavior is desired is only one piece of the debate. Other important pieces (from a consequentialist perspective) include how effective the punishment will be, how costly it will be to implement the punishment and what the side effects will be. Even if, for example, society collectively decides that if fewer women dressed like sluts there would be fewer rapes, it does not immediately follow that dressing that way should be a punishable offense.

↑ comment by wedrifid · 2012-07-03T17:05:22.170Z · LW(p) · GW(p)

Here is an interesting (potentially mindkilling) prediction: When it is legally forbidden to use reference classes and other forms of evaluating prestige, the rate of defection increases. (An extreme situation would be when you are legally required to cooperate regardless of what your opponent does.)

Interesting. Source?

Replies from: Viliam_Bur↑ comment by Viliam_Bur · 2012-07-03T18:20:03.487Z · LW(p) · GW(p)

Just my prediction. The example I had in mind was an interaction between a state (represented by some person) and an individual: e.g. if you are entitled to receive unemployment support, you will get it even if common sense makes it obvious that you are just abusing the rules, as long as you pretend to follow them.

This is open to interpretation, but my understanding is: "help unemployed people" = cooperate, "let them die" = defect; "inform truthfully about your employment" = cooperate, "falsely pretend to be unemployed (while making money illegally)" = defect.

I suppose there are more examples like that, which could be generalized as: a state (or other big organization) becomes a CooperateBot when trying to achieve a win/win situation, and is abused later.

comment by Vaniver · 2012-07-03T06:02:32.271Z · LW(p) · GW(p)

Another, mostly unrelated comment: the ultimatum game can actually tell you two different things. First, what divisions do people propose, and second, what divisions do people accept?

Presumably, everyone accepts fair divisions. Different groups of people have different percentages that reject unfair divisions, and different percentages that offer unfair divisions (a simplification, since the degree of fairness can also be varied). There are four potential clusters: groups that propose fair and accept unfair, groups that propose fair and reject unfair, groups that propose unfair and accept unfair, and groups that propose unfair and reject unfair. (Empirically, I believe only three of these clusters show up, but it'd take a lit search to verify that. The first two groups can be differentiated by having confederates propose unfair divisions)

These differences between groups of people have real-world implications.

[edit] Note that Kaj_Sotala has found at least one paper on the subject.

comment by Vaniver · 2012-07-03T05:52:13.390Z · LW(p) · GW(p)

If I defect but you cooperate, then I get to spend all day on the beach and still get a good grade - the best outcome for me, the worst for you.

No, it's not. The "you" in this example prefers getting a good grade and privately fuming about having to do the work themselves to failing and not having to do the work. (And actually, for many of the academic group projects I've been involved in, it's less and happier work for the responsible member to do everything themselves, because there's too little work for too many people otherwise.)

The basic solution to the prisoner's dilemma: the only winning move is not to play. When you find a payoff matrix that looks like the prisoner's dilemma when denominated in years, add other considerations to the game until it is no longer the prisoner's dilemma when denominated in utility.

What's neat about that statement of the solution is that it naturally extends in every direction. Precommitments are an attempt to add another consideration- and their credibility is how much utility it looks like you can bestow to them. "My utility for punishing my daughter's defiler is higher than my utility for having clean carpets" is easy to believe, "my utility for keeping my commitments is higher than my utility for being $20,000 richer" is difficult to believe. (Scale should impact willingness to accept on an ultimatum game.)

Replies from: mwengler↑ comment by mwengler · 2012-07-04T22:05:02.332Z · LW(p) · GW(p)

The basic solution to the prisoner's dilemma: the only winning move is not to play. When you find a payoff matrix that looks like the prisoner's dilemma when denominated in years, add other considerations to the game until it is no longer the prisoner's dilemma when denominated in utility.

I don't understand this, please explain. Suggesting to "add other considerations to the game until it is no longer the prisoner's dilemma when denominated in utility" seems about equivalent to lying to yourself about what you really want (what your utility is) in order to justify doing something.

So if you have a chance to explain what you mean I would appreciate it.

Replies from: Vaniver↑ comment by Vaniver · 2012-07-05T02:24:41.566Z · LW(p) · GW(p)

I don't understand this, please explain. Suggesting to "add other considerations to the game until it is no longer the prisoner's dilemma when denominated in utility" seems about equivalent to lying to yourself about what you really want (what your utility is) in order to justify doing something.

"Features" might be a clearer word than "considerations." [edit] For example, consider the group project example. If you just look at the amount of work done, it's a prisoner's dilemma, with the result that no one does any work. When you look at the whole situation, you notice that, oh, grades are involved- and so it's not a prisoner's dilemma at all, because everyone (presumably) prefers passing the project and working to failing the project and not working. That's a 'consideration' or 'feature' that gets added to the game to make it not a PD.

"Utility" is the numerical score you assign to the desirability of a particular future, and it has some neat properties when it comes to probabilistic reasoning. For example, if going to the beach has a utility of 5 and hitting yourself in the head with a hammer has a utility of -20, then an 80% chance of going to the beach and a 20% chance of hitting yourself in the head with a hammer has a utility of 0, and you should be indifferent between that gamble and some other action with a utility of 0. If you aren't indifferent, then you mismeasured your utilities!

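The beach-or-hammer arithmetic can be checked in a few lines. This is only a sketch: the function name `expected_utility` and the representation of a gamble as (probability, utility) pairs are illustrative choices, not anything from the original discussion.

```python
def expected_utility(lottery):
    """Expected utility of a gamble given as (probability, utility) pairs."""
    total_probability = sum(p for p, _ in lottery)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in lottery)


# 80% chance of the beach (utility 5), 20% chance of the hammer (utility -20).
beach_or_hammer = [(0.8, 5), (0.2, -20)]
gamble_value = expected_utility(beach_or_hammer)

# 0.8 * 5 + 0.2 * (-20) = 4 - 4, so the gamble is worth the same as a sure 0.
print(abs(gamble_value) < 1e-9)
```

The comparison uses a small tolerance rather than `== 0` only because the probabilities are floating-point numbers.
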
Game theory is the correct way to go from payoff matrices to courses of action, but the issue is that the payoff matrices are subjective. Consider one gamble, in which you and your partner both go free with 95% probability and both go to prison for 20 years with 5% probability. Would you be indifferent between that and it being certain that you would go to prison for 1 year and your partner would go to prison for 20 years? The Alice and Bob Yvain described would be. It's what they really want. Is it any surprise that the right play for monsters like that is to defect?

For most humans, that's not what they really want. They do actually care about the well-being of others; they care about having a good reputation and high standing in their community; they care about being proud of themselves and their actions. Their utility score is more than just 0 minus the number of years they spend in prison.

And so if Alice and Bob are in a situation where Alice prefers CC to DC, and Bob prefers CC to CD, then CC is now a Nash equilibrium- and both of them can securely take it. When people look at the Prisoner's Dilemma and say "Defecting can't be the right strategy," they're often doing that because they disbelieve the payoff matrix.
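
A rough way to see this is to enumerate the pure-strategy Nash equilibria of the 2x2 game before and after changing the utilities. Everything below is an assumption made for illustration: the prison terms in `years_only`, the helper names, and the crude `guilt` penalty that stands in for "caring about others, reputation, or pride". The first letter of each outcome is Alice's move, the second is Bob's.

```python
from itertools import product

C, D = "C", "D"


def pure_nash_equilibria(payoffs):
    """Return the outcomes where neither player gains by deviating alone.

    payoffs[(a, b)] = (Alice's utility, Bob's utility) when Alice plays a
    and Bob plays b.
    """
    equilibria = []
    for a, b in product((C, D), repeat=2):
        alice_ok = all(payoffs[(a, b)][0] >= payoffs[(alt, b)][0] for alt in (C, D))
        bob_ok = all(payoffs[(a, b)][1] >= payoffs[(a, alt)][1] for alt in (C, D))
        if alice_ok and bob_ok:
            equilibria.append((a, b))
    return equilibria


# Assumed figures: utility is simply minus the years spent in prison.
years_only = {
    (C, C): (-1, -1),
    (C, D): (-20, 0),
    (D, C): (0, -20),
    (D, D): (-15, -15),
}


def add_guilt(payoffs, guilt):
    """Subtract `guilt` from whoever defects against a cooperator."""
    adjusted = dict(payoffs)
    alice, bob = payoffs[(D, C)]
    adjusted[(D, C)] = (alice - guilt, bob)
    alice, bob = payoffs[(C, D)]
    adjusted[(C, D)] = (alice, bob - guilt)
    return adjusted


print(pure_nash_equilibria(years_only))                # only mutual defection
print(pure_nash_equilibria(add_guilt(years_only, 5)))  # mutual cooperation appears
```

In this particular sketch mutual defection stays an equilibrium alongside mutual cooperation, so the altered game is a coordination problem rather than a guarantee; a penalty that also applied to mutual defection would change that.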

Because when you accept the payoff matrix, the answer is already determined and it's just a bit of calculation to find it. Is 0 better than -1? Yes. Is -15 better than -20? Yes. If you agree with both of those statements, and expect your opponent to agree with both statements, then the story's over, and both players defect.

comment by Andreas_Giger · 2012-07-03T12:10:36.549Z · LW(p) · GW(p)

Most people, when they hear the rational arguments in favor of defecting every single time on the iterated 100-crime Prisoner's Dilemma, will feel some kind of emotional resistance. Thoughts like “Well, maybe I'll try cooperating anyway a few times, see if it works”, or “If I promised to cooperate with my opponent, then it would be dishonorable for me to defect on the last turn, even if it helps me out”, or even “Bob is my friend! Think of all the good times we've had together, robbing banks and running straight into waiting police cordons. I could never betray him!”

I don't want to start a discussion here, I just want to clarify your position on this: Are you implying that it is perfectly rational to always assume that your opponent is a perfect rationalist?

Replies from: Yvain↑ comment by Scott Alexander (Yvain) · 2012-07-04T15:41:11.115Z · LW(p) · GW(p)

No.

comment by BlackNoise · 2012-07-03T03:45:15.651Z · LW(p) · GW(p)

though not quite as good as me cooperating against everyone else's defection.

Shouldn't it be the other way around? (you defecting while everyone else cooperates)

ETA: liking this sequence so far, feels like I'm getting the concepts better now.

comment by Douglas_Knight · 2012-07-04T17:09:12.767Z · LW(p) · GW(p)

What is your source for your baboon anecdote? It is contrary to what I have read, e.g., in Baboon Metaphysics. Or here:

Lower-ranking males form alliances and can harass newly immigrated but dominant males and protect adult females with which they have bonds. Overall, though, dominance rank of a male indicates reproductive success so that high-ranking males have more mating opportunities, more offspring, and increased fitness compared to lower-ranking males

comment by [deleted] · 2012-07-03T13:22:19.667Z · LW(p) · GW(p)

So far as I can analyse, isn't a hostage negotiation a Prisoner's Dilemma too? (Terrorists can spare (C) or kill (D), Government can pay (C) or raid (D))

Replies from: shokwave↑ comment by shokwave · 2012-07-03T13:26:46.935Z · LW(p) · GW(p)

No, it's a game of Chicken.

It's not a Prisoner's Dilemma because when the government pays, the terrorists gain no extra value from killing a hostage.

Replies from: Kaj_Sotala, Andreas_Giger, wedrifid↑ comment by Kaj_Sotala · 2012-07-03T18:33:44.784Z · LW(p) · GW(p)

Also, if the government cooperates, that will encourage other terrorists to take more hostages later on, making the CC payoff unsymmetrical.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-03T19:23:09.268Z · LW(p) · GW(p)

Also, if the government cooperates, that will encourage other terrorists to take more hostages later on, making the CC payoff unsymmetrical.

I'm actually curious as to whether this has been studied in practice. This is the kind of thing I expect people with big egos to do regardless of whether the actual expected value is positive.

↑ comment by Andreas_Giger · 2012-07-03T13:35:50.936Z · LW(p) · GW(p)

It's not Chicken either, because of the reason you just gave.

Edit: Seeing how this post got downvoted with no reply being posted, I have to assume it was someone who doesn't know much about game theory, so let me explain:

If the terrorists gain no extra value from killing a hostage if the government pays, then DC > CC is false for the terrorist side; however both PD and Chicken are symmetrical problems that require DC > CC to be true for all sides. Therefore, this problem is neither PD nor Chicken.
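
The orderings involved can be written down directly. This is a sketch using the usual textbook conditions for symmetric 2x2 games, from one player's point of view; the function name and all of the example numbers are made up for illustration.

```python
def classify_symmetric_game(cc, cd, dc, dd):
    """Classify a symmetric 2x2 game from one player's payoffs.

    cc: both cooperate      cd: I cooperate, the other defects
    dc: I defect, the other cooperates      dd: both defect
    """
    if dc > cc > dd > cd:
        return "Prisoner's Dilemma"  # being exploited is the worst outcome
    if dc > cc > cd > dd:
        return "Chicken"             # mutual defection is the worst outcome
    return "neither"


# Hypothetical payoffs, chosen only to satisfy each ordering.
print(classify_symmetric_game(cc=-1, cd=-20, dc=0, dd=-15))
print(classify_symmetric_game(cc=0, cd=-1, dc=1, dd=-10))

# If killing a hostage after being paid adds nothing, dc equals cc for the
# terrorists, so the strict inequality dc > cc fails for both game types.
print(classify_symmetric_game(cc=10, cd=-5, dc=10, dd=-10))
```

Both named games share the `dc > cc` requirement, which is the condition the paragraph above says fails on the terrorist side.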

↑ comment by wedrifid · 2012-07-03T13:32:17.781Z · LW(p) · GW(p)

It's not a Prisoner's Dilemma because when the government pays, the terrorists gain no extra value from killing a hostage.

But the hostages are (presumably) infidels or something enemy-like.

Replies from: Andreas_Giger↑ comment by Andreas_Giger · 2012-07-03T13:52:12.710Z · LW(p) · GW(p)

Yes, in that case it would actually be PD.

comment by [deleted] · 2012-07-04T16:34:44.414Z · LW(p) · GW(p)

Most people, when they hear the rational arguments in favor of defecting every single time on the iterated 100-crime Prisoner's Dilemma, will feel some kind of emotional resistance.

The rationalist strategy is not to defect from the beginning, but to cooperate till somewhere into the 90s.

Replies from: wedrifid↑ comment by wedrifid · 2012-07-04T17:00:16.190Z · LW(p) · GW(p)

The rationalist strategy is not to defect from the beginning, but to cooperate till somewhere into the 90s

That certainly isn't implied by the problem and the agent. It depends entirely on what the opponent is expected to do. I would use that strategy when playing against an average lesswrong user. I wouldn't use it when playing against some overconfident kid who has done some first year economics classes. I wouldn't use it when playing against a CDT bot or a TDT agent.
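
A small simulation makes the dependence on the opponent concrete. It is a sketch under loud assumptions: the per-round payoffs are illustrative prison terms, and the two opponents (`tit_for_tat` and `always_defect`) are stand-ins I picked, not models of the players named above.

```python
# Assumed per-round payoffs: (my utility, their utility) in minus years.
PAYOFF = {
    ("C", "C"): (-1, -1),
    ("C", "D"): (-20, 0),
    ("D", "C"): (0, -20),
    ("D", "D"): (-15, -15),
}


def cooperate_until(k):
    """Cooperate for the first k rounds, then defect for the rest."""
    def strategy(round_no, their_history):
        return "C" if round_no <= k else "D"
    return strategy


def always_defect(round_no, their_history):
    return "D"


def tit_for_tat(round_no, their_history):
    """Cooperate first, then copy the other player's previous move."""
    return their_history[-1] if their_history else "C"


def play(me, opponent, rounds=100):
    """Return my total utility over the match."""
    my_history, their_history, my_total = [], [], 0
    for round_no in range(1, rounds + 1):
        mine = me(round_no, their_history)
        theirs = opponent(round_no, my_history)
        my_total += PAYOFF[(mine, theirs)][0]
        my_history.append(mine)
        their_history.append(theirs)
    return my_total


for name, opponent in (("tit_for_tat", tit_for_tat), ("always_defect", always_defect)):
    late = play(cooperate_until(90), opponent)
    never = play(cooperate_until(0), opponent)
    print(name, "cooperate until 90:", late, "defect from the start:", never)
```

Against the reciprocating opponent, cooperating into the 90s does far better than defecting from the start; against the opponent who always defects, it does worse.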